Introduction to the Model Context Protocol (MCP): A Developer’s Guide to Smarter AI Assistants

As artificial intelligence continues to evolve, the need for more capable, context-aware AI assistants becomes increasingly critical. Today’s large language models (LLMs) demonstrate impressive natural language reasoning and generation. However, they often fall short in one key area: access to dynamic, real-time, and task-specific context.

Enter the Model Context Protocol (MCP), an open standard introduced by Anthropic that standardizes how AI assistants connect to external data and tools. Often described as the “USB-C for AI integration,” MCP provides a single, extensible interface through which AI models can retrieve and act on relevant external information, making them more useful across a wide range of applications.

In this guide, we’ll explore what MCP is, why it matters, how it works, and how it compares to existing approaches such as Retrieval-Augmented Generation (RAG).

What Is the Model Context Protocol (MCP)?

At its core, MCP is an open protocol, with open-source SDKs, that lets AI assistants built on language models fetch, integrate, and act on external data sources and tools at run time. Like a USB-C port, it offers one universal interface for connecting models to services, APIs, files, databases, and more.

Traditionally, LLMs are trained on static datasets and cannot access updated or private information unless it is explicitly provided in the prompt. This static prompting approach has clear limitations: context length constraints, outdated knowledge, and no ability to take actions or fetch fresh data. MCP addresses these limitations with dynamic, on-demand context retrieval from trusted sources.

Why MCP Matters in Modern AI Workflows

Here’s why MCP is gaining traction among developers and enterprises:

- Real-time contextualization: MCP allows LLMs to access up-to-date knowledge by retrieving information from context providers like document databases, APIs, or internal knowledge bases.

- Extensibility: Developers can integrate tools once using the MCP standard and reuse them across multiple AI systems.

- Security and modularity: AI assistants don’t directly interact with external systems. Instead, requests go through well-defined MCP servers, enabling fine-grained access control.

- Reduced redundancy: MCP removes the need to build a separate, model-specific integration for every tool by offering a “write once, integrate anywhere” framework.

MCP Architecture: A Layered Overview

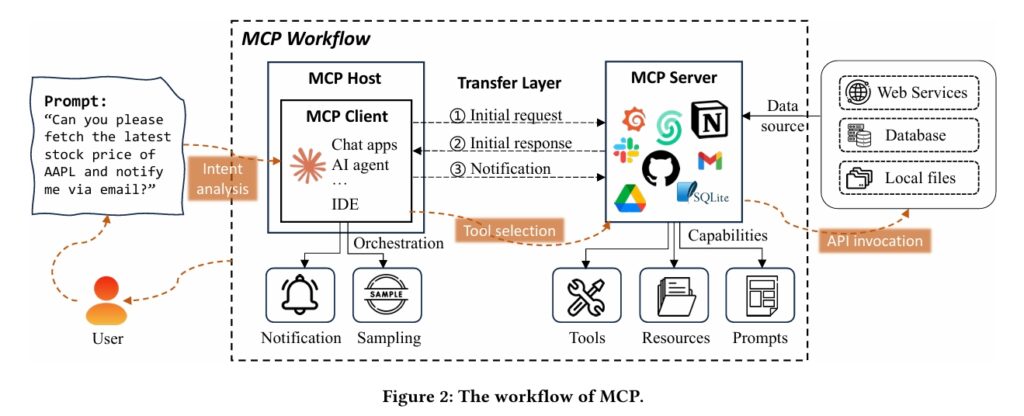

The MCP architecture follows a client–server design and is composed of three key components:

1. MCP Host (AI Assistant Environment)

The environment where the AI assistant runs—such as a chatbot, code editor, or productivity suite. This is where the user interacts with the LLM.

2. MCP Client

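A component within the host environment that maintains a connection to one MCP server (a host typically runs one client per server). It is responsible for:

- Negotiating protocol version and capabilities with the server when the connection opens

- Sending requests and receiving responses in MCP’s JSON-RPC message format

- Discovering the tools, resources, and prompts the server exposes

- Relaying tool calls chosen by the model and delivering the results back as context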
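Routing tool calls to the right server can be sketched in plain Python. This is a minimal sketch, not the official MCP SDK: `StubServerConnection` and `ToolRouter` are hypothetical names, and the stub connections call local functions where a real client would exchange JSON-RPC messages with a server process over stdio or HTTP. It shows how tools advertised by several servers can be indexed by name so that each call lands on the server that exposes it.

```python
from typing import Any, Callable, Dict, List


class StubServerConnection:
    """Hypothetical stand-in for a client's connection to one MCP server.

    A real client would send JSON-RPC messages over stdio or HTTP; here the
    "server" is just a dict of local functions.
    """

    def __init__(self, name: str, tools: Dict[str, Callable[..., str]]):
        self.name = name
        self._tools = tools

    def list_tools(self) -> List[str]:
        return sorted(self._tools)

    def call_tool(self, tool: str, arguments: Dict[str, Any]) -> str:
        return self._tools[tool](**arguments)


class ToolRouter:
    """Maps each advertised tool name to the connection that exposes it."""

    def __init__(self, connections: List[StubServerConnection]):
        self._owner: Dict[str, StubServerConnection] = {}
        for conn in connections:
            for tool in conn.list_tools():
                if tool in self._owner:
                    raise ValueError(f"tool name collision: {tool}")
                self._owner[tool] = conn

    def available_tools(self) -> List[str]:
        return sorted(self._owner)

    def call(self, tool: str, arguments: Dict[str, Any]) -> str:
        if tool not in self._owner:
            raise KeyError(f"no connected server exposes {tool!r}")
        return self._owner[tool].call_tool(tool, arguments)


# Two hypothetical servers, each exposing one tool.
files = StubServerConnection("files", {"read_file": lambda path: f"<contents of {path}>"})
search = StubServerConnection("search", {"web_search": lambda query: f"results for {query}"})
router = ToolRouter([files, search])

print(router.available_tools())                      # ['read_file', 'web_search']
print(router.call("web_search", {"query": "MCP"}))   # results for MCP
```

Rejecting duplicate tool names is one possible design choice; a real host might instead namespace tools by server.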

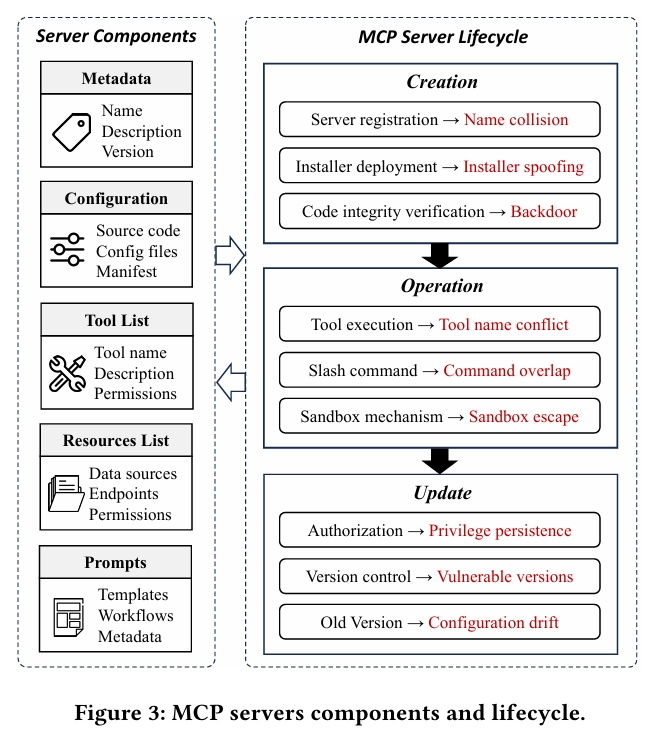

3. MCP Server (Context Provider)

A standalone service that exposes access to a data source or performs specific actions. Examples include:

- Reading files from local directories

- Querying a database or API

- Retrieving documents from knowledge bases

- Executing web searches

Each server follows the MCP specification, whose request and response messages are built on JSON-RPC 2.0. This shared message format is what makes servers and clients interoperable.
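To make the message format concrete, here is a minimal sketch of building and parsing JSON-RPC 2.0 messages with only the standard library. The envelope fields (`jsonrpc`, `id`, `method`, `params`, `result`, `error`) come from JSON-RPC 2.0, and the `tools/call` method with `name`/`arguments` and a `content` list in the result follows the MCP specification. The helper functions `make_request` and `parse_response` and the `web_search` tool are hypothetical, for illustration only.

```python
import itertools
import json
from typing import Any, Dict, Optional

_ids = itertools.count(1)  # request ids must be unique per connection


def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request (hypothetical helper)."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


def parse_response(raw: str, expected_id: int) -> Dict[str, Any]:
    """Return the `result` of a JSON-RPC 2.0 response, raising on protocol errors."""
    msg = json.loads(raw)
    if msg.get("jsonrpc") != "2.0" or msg.get("id") != expected_id:
        raise ValueError("not a response to this request")
    if "error" in msg:
        err = msg["error"]
        raise RuntimeError(f"server error {err['code']}: {err['message']}")
    return msg["result"]


# Ask a server to run a (hypothetical) web_search tool.
req = make_request("tools/call", {"name": "web_search", "arguments": {"query": "MCP"}})
req_id = json.loads(req)["id"]
print(req)

# A reply the server might send back: tool output as a list of typed content items.
reply = json.dumps({
    "jsonrpc": "2.0",
    "id": req_id,
    "result": {"content": [{"type": "text", "text": "3 results found"}], "isError": False},
})
result = parse_response(reply, req_id)
print(result["content"][0]["text"])  # 3 results found
```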

How Context Providers Work

An MCP server acts as a context provider. These servers can connect to a wide range of external systems, from CRM platforms to documentation databases. Depending on the data source, a server may be responsible for:

- Indexing data (for fast retrieval)

- Enforcing permissions

- Returning relevant chunks of information when queried

Context providers serve two core roles, which map roughly onto what MCP calls resources and tools:

- Information retrieval: Returning relevant snippets, documents, or structured results.

- Action execution: Performing tasks (e.g., sending emails, scheduling events) and returning confirmation.

The AI assistant doesn’t need to know how the context provider works—it only communicates through standardized MCP interfaces.
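The two roles can be illustrated with a toy request handler. This is a minimal sketch under stated assumptions: the `resources/read` and `tools/call` method names and the result shapes (`contents` for resources; `content` plus `isError` for tools) follow the MCP specification, while `DOCS`, `OUTBOX`, `send_email`, and `handle` are hypothetical names, and the "email" is only recorded in a list rather than sent.

```python
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical in-memory data; a real server would read files, databases, or APIs.
DOCS: Dict[str, str] = {"file:///policies/remote-work.md": "Example policy text."}
OUTBOX: List[Tuple[str, str]] = []


def send_email(to: str, subject: str) -> str:
    """Hypothetical action: records the email instead of actually sending it."""
    OUTBOX.append((to, subject))
    return f"Email to {to} queued."


TOOLS: Dict[str, Callable[..., str]] = {"send_email": send_email}


def handle(method: str, params: Dict[str, Any]) -> Dict[str, Any]:
    """Dispatch one request to the matching role: retrieval or action."""
    if method == "resources/read":  # information retrieval
        uri = params["uri"]
        if uri not in DOCS:
            raise KeyError(f"unknown resource: {uri}")
        return {"contents": [{"uri": uri, "mimeType": "text/plain", "text": DOCS[uri]}]}
    if method == "tools/call":  # action execution
        tool = TOOLS.get(params["name"])
        if tool is None:
            raise KeyError(f"unknown tool: {params['name']}")
        try:
            text = tool(**params.get("arguments", {}))
        except Exception as exc:  # report tool failures to the model as a result
            return {"content": [{"type": "text", "text": str(exc)}], "isError": True}
        return {"content": [{"type": "text", "text": text}], "isError": False}
    raise ValueError(f"unsupported method: {method}")


doc = handle("resources/read", {"uri": "file:///policies/remote-work.md"})
print(doc["contents"][0]["text"])  # Example policy text.

ack = handle("tools/call", {"name": "send_email",
                            "arguments": {"to": "team@example.com", "subject": "Standup"}})
print(ack["content"][0]["text"])  # Email to team@example.com queued.
```

Returning tool failures with `isError: True`, rather than as protocol errors, lets the model see what went wrong and try again; unknown methods or resources are treated here as caller mistakes and raise instead.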

Behind the Scenes: Document Indexing & Retrieval

To serve relevant information efficiently, MCP servers that expose document collections often preprocess and index those documents. This involves:

- Splitting large documents into manageable text chunks

- Converting them into vector representations using embeddings

- Storing these embeddings in a vector database or search index

When a query is made, the server performs a semantic similarity search and returns the most relevant results to the AI model.
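The chunk, embed, store, and search pipeline can be sketched end to end with the standard library. To keep it self-contained, this sketch swaps in a toy "embedding" (lowercase word counts) for a real embedding model and a Python list for a vector database, so the similarity is lexical rather than semantic. `chunk`, `embed`, `cosine`, and `ToyIndex` are hypothetical names; the cosine-similarity ranking is the same idea a vector search applies to dense embeddings.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk(text: str, max_words: int = 40) -> List[str]:
    """Split a document into chunks of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyIndex:
    """In-memory list standing in for a vector database."""

    def __init__(self) -> None:
        self._entries: List[Tuple[str, Counter]] = []

    def add_document(self, text: str, max_words: int = 40) -> None:
        for piece in chunk(text, max_words):
            self._entries.append((piece, embed(piece)))

    def search(self, query: str, k: int = 2) -> List[str]:
        q = embed(query)
        ranked = sorted(self._entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


index = ToyIndex()
index.add_document("Refunds are processed within five business days.", max_words=8)
index.add_document("The office is closed on public holidays.", max_words=8)
print(index.search("how long do refunds take", k=1))
```

Only the top `k` chunks are returned, which is how retrieval keeps the prompt small.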

This retrieval-augmented generation (RAG) mechanism helps ensure that the model receives only relevant context, which keeps prompts compact and tends to make responses both faster and more accurate.

Query Resolution Workflow in MCP

When a user submits a prompt, a typical MCP interaction proceeds in several steps:

- Intent analysis: The model, running inside the host, decides whether answering the request requires external data or an action.

- Tool selection: The model picks from the tools that connected MCP servers have advertised (e.g., file retrieval, search, calendar scheduling), and the host routes the call to the client connected to the server that exposes that tool.

- Request dispatch: The client sends the request to the selected server as a JSON-RPC 2.0 message.

- Data retrieval or action execution: The server searches its source or executes a command.

- Context delivery: The result is returned to the client and added to the model’s context.

- Response generation: The LLM generates a final answer using the injected context.
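The loop behind these steps can be simulated with a stubbed model. This is a hedged sketch: `stub_model` is a hypothetical stand-in for an LLM that requests a tool when the prompt mentions sales, `run_turn` and `get_sales` are hypothetical names, and the tool returns placeholder text rather than real data. What it shows is the control flow: the model decides, the host dispatches, and the result is appended to the conversation before the model answers.

```python
from typing import Any, Callable, Dict, List

Message = Dict[str, str]


def stub_model(messages: List[Message]) -> Dict[str, Any]:
    """Hypothetical stand-in for an LLM: requests a tool once, then answers."""
    last = messages[-1]
    if last["role"] == "user" and "sales" in last["content"].lower():
        return {"tool": "get_sales", "arguments": {"quarter": "Q3"}}  # intent + tool selection
    if last["role"] == "tool":
        return {"answer": f"According to the sales system: {last['content']}"}
    return {"answer": "I can answer that without external data."}


def run_turn(user_prompt: str,
             model: Callable[[List[Message]], Dict[str, Any]],
             tools: Dict[str, Callable[..., str]]) -> str:
    messages: List[Message] = [{"role": "user", "content": user_prompt}]
    for _ in range(5):  # cap the number of tool round-trips
        decision = model(messages)
        if "answer" in decision:  # response generation
            return decision["answer"]
        # Request dispatch + execution: call the tool the model named.
        result = tools[decision["tool"]](**decision["arguments"])
        # Context delivery: feed the result back to the model as a new message.
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("too many tool calls")


tools = {"get_sales": lambda quarter: f"{quarter} revenue: 100 units (placeholder)"}
print(run_turn("What were our sales last quarter?", stub_model, tools))
```

The round-trip cap is a simple safeguard against a model that keeps requesting tools without ever answering.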

This approach gives AI assistants access to current, organization-specific knowledge, such as answering company policy questions from internal wikis or pulling in the latest sales data.

Context Delivery to the Model

Context returned from an MCP server can be:

- Unstructured text: Such as a paragraph from a document.

- Structured: Like JSON responses from a database query.

- Action results: Confirmation of completed tasks (e.g., “Invoice generated successfully.”)

The host application, through its MCP client, formats the result before adding it to the model’s context. This could involve:

- Prepending a label such as “Reference Document:” to the retrieved text.

- Including context as part of a system message.

- Structuring the input using predefined templates.

This gives the LLM the background it needs to produce accurate, relevant outputs while staying within its context limits.
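One way to format the three kinds of results is sketched below. It is a minimal sketch under assumptions: the `kind`/`source` item fields, the label wording, and the functions `format_context` and `build_messages` are hypothetical, and a character budget stands in for a real token budget. It labels each item, places the combined block in a system message, and truncates the block if it would exceed the budget.

```python
import json
from typing import Any, Dict, List


def format_context(items: List[Dict[str, Any]], max_chars: int = 2000) -> str:
    """Render retrieved items as labeled text blocks for the model's context."""
    parts: List[str] = []
    for item in items:
        kind = item["kind"]
        if kind == "text":  # e.g., a paragraph from a document
            parts.append(f"Reference Document ({item['source']}):\n{item['text']}")
        elif kind == "structured":  # e.g., a database row
            parts.append(f"Query Result ({item['source']}):\n{json.dumps(item['data'], sort_keys=True)}")
        elif kind == "action":  # confirmation of a completed task
            parts.append(f"Action Result: {item['status']}")
        else:
            raise ValueError(f"unknown context kind: {kind}")
    block = "\n\n".join(parts)
    # Character budget as a crude stand-in for a token budget.
    if len(block) <= max_chars:
        return block
    return block[: max_chars - 3] + "..."


def build_messages(user_prompt: str, items: List[Dict[str, Any]],
                   max_chars: int = 2000) -> List[Dict[str, str]]:
    """Put the formatted context in a system message ahead of the user's prompt."""
    system = "Use the context below when it is relevant.\n\n" + format_context(items, max_chars)
    return [{"role": "system", "content": system}, {"role": "user", "content": user_prompt}]


items = [
    {"kind": "text", "source": "handbook.md", "text": "Invoices are issued on the 1st of each month."},
    {"kind": "structured", "source": "billing-db", "data": {"customer": "ACME", "open_invoices": 2}},
    {"kind": "action", "status": "Invoice generated successfully."},
]
messages = build_messages("When will ACME get its next invoice?", items)
print(messages[0]["content"])
```

Blunt truncation is the simplest budget policy; a production host would more likely drop whole low-ranked items so that no single item is cut off mid-sentence.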

Use Cases of MCP

The power of MCP lies in its flexibility. It can be applied across numerous domains, such as:

- Customer Support: Enabling LLMs to pull data from internal help docs, past ticket logs, or CRM records.

- Enterprise Search: Allowing users to query knowledge bases without needing to navigate UI-heavy systems.

- Coding Assistance: Letting AI coding agents access version history, API docs, and local project files.

- Productivity Tools: Enabling assistants to access emails, schedule meetings, or manipulate spreadsheets.

- Legal & Compliance: Fetching policy clauses, contract excerpts, or regulations dynamically from corpora.

MCP vs Traditional Plugin Systems

| Feature | Traditional Plugins | MCP |
|---|---|---|
| Protocol Standardization | No | Yes |
| Tool Reusability | Limited | High |
| Cross-AI Compatibility | Low | High |
| Security & Access Control | Varies | Strong (via server isolation) |
| Real-Time Context Retrieval | Partial | Yes |
| Indexing/Document Search | Rare | Common via vector databases |

MCP vs RAG: What’s the Difference?

| Aspect | MCP | RAG |
|---|---|---|
| Integration Scope | Retrieval + actions (read/write) | Read-only retrieval |
| Standardization | Fully standardized protocol | Varies by implementation |
| Context Access | Dynamic, via client-server routing | Typically similarity search over a prebuilt index |
| Tool Support | External APIs, databases, cloud services | Mostly document collections |
| Security | Controlled access via MCP servers | Less granular by default |

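In essence, MCP generalizes and extends RAG: an MCP server can use RAG internally for retrieval, while the protocol adds standardized actions and access control around it, enabling a more robust, secure, and action-capable assistant experience.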

Security and Governance in MCP

Security is foundational to MCP’s design. Because AI assistants often operate on sensitive user data, MCP:

- Allows fine-grained permissioning (per tool, per request)

- Keeps the model isolated from raw system access

- Lets the server control what data is exposed and in what format

This structured interface helps reduce the risk of data leakage and unintended model behavior, supporting compliance and control across deployments.
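Per-tool permissioning can be sketched as a check that runs before any tool code executes. This is a minimal sketch, not part of the MCP specification: `Policy`, `GuardedServer`, the caller names, and the ticket tools are hypothetical, and where such checks live (in the host, the server, or both) is a deployment choice. Here an allowlist controls which caller may use which tool, and tools with side effects also require explicit approval on every call. Denials are returned as tool results with `isError` set, following MCP’s result shape.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Set


@dataclass
class Policy:
    # Which tools each caller (e.g., each host or user) may invoke.
    allowed: Dict[str, Set[str]] = field(default_factory=dict)
    # Tools with side effects that need explicit user approval on every call.
    needs_approval: Set[str] = field(default_factory=set)


def _text_result(text: str, is_error: bool) -> Dict[str, Any]:
    return {"content": [{"type": "text", "text": text}], "isError": is_error}


class GuardedServer:
    """Checks the policy before any tool code runs (hypothetical design)."""

    def __init__(self, tools: Dict[str, Callable[..., str]], policy: Policy):
        self._tools = tools
        self._policy = policy

    def call_tool(self, caller: str, name: str, arguments: Dict[str, Any],
                  approved: bool = False) -> Dict[str, Any]:
        if name not in self._policy.allowed.get(caller, set()):
            return _text_result(f"{caller} is not permitted to call {name}", True)
        if name in self._policy.needs_approval and not approved:
            return _text_result(f"{name} requires user approval", True)
        return _text_result(self._tools[name](**arguments), False)


tools = {
    "read_ticket": lambda ticket_id: f"Ticket {ticket_id}: status open",
    "close_ticket": lambda ticket_id: f"Ticket {ticket_id} closed",
}
policy = Policy(allowed={"support-bot": {"read_ticket", "close_ticket"}},
                needs_approval={"close_ticket"})
server = GuardedServer(tools, policy)

print(server.call_tool("support-bot", "read_ticket", {"ticket_id": 7}))
print(server.call_tool("support-bot", "close_ticket", {"ticket_id": 7}))  # blocked: no approval
print(server.call_tool("guest", "read_ticket", {"ticket_id": 7}))         # blocked: not allowlisted
```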

Getting Started with MCP

To start using MCP, developers can:

- Choose or build an MCP-compliant client (official SDKs are available for several languages, including Python and TypeScript).

- Deploy context providers (MCP servers) based on their data or service needs.

- Use an AI assistant that supports MCP (e.g., Claude Desktop or open-source MCP-compatible hosts).

The official MCP GitHub repository and tutorials provide sample code for building custom context providers and integrating with host applications.

Conclusion

The Model Context Protocol (MCP) is a significant advancement in how language models interface with the real world. By separating the model from the data and using a modular, standardized approach, MCP enables:

- Smarter, real-time responses

- Secure integration with private tools

- Lower development overhead for tool integrations

- Greater scalability and maintainability in enterprise-grade AI applications

As AI adoption grows across industries, protocols like MCP will be essential in building assistants that are not just powerful but also context-aware, extensible, and trustworthy.

Check out the Paper & Anthropic Docs. All credit for this research goes to the researchers of this project.
