Microsoft Launches Code Researcher: An AI Agent for Autonomous Debugging of Large-Scale System Code

Introduction: A New Era in Autonomous System-Level Debugging

As software systems grow larger and more complex, debugging them becomes harder. Traditional tools and developer workflows often fall short, particularly with legacy systems, kernel-level components, or codebases that span decades. To address this gap, Microsoft Research has introduced Code Researcher, a deep research agent built to autonomously analyze and debug large systems code by reasoning over both code semantics and commit history.

This article covers the architecture, methodology, benchmark performance, and real-world implications of Code Researcher, offering a technical overview for researchers, developers, and enterprises interested in intelligent software maintenance.

The Problem: Debugging in Large-Scale Systems is Inherently Complex

Operating systems, hypervisors, and multimedia infrastructure often consist of thousands of interdependent files maintained by a multitude of contributors over many years. Even small changes in such systems can have cascading effects. Debugging crashes in these environments is particularly difficult because:

- Bug reports often include minimal context (e.g., raw stack traces)

- A single symptom may stem from multiple modules or deeply nested dependencies

- Historical changes and commit reasoning are rarely considered in existing agent pipelines

As such, repairing faults in these settings requires more than pattern matching or simple code edits—it demands deep contextual understanding, multi-step reasoning, and an appreciation of software evolution over time.

Limitations of Existing LLM-Based Coding Agents

Existing agents like SWE-agent, OpenHands, and AutoCodeRover rely heavily on structured issue descriptions and often restrict themselves to high-level application codebases. These systems:

- Struggle with unstructured crash reports (e.g., kernel faults)

- Operate under the assumption that buggy files are known

- Rarely utilize historical commit data, which can reveal causal relationships

- Are not designed to explore wide swaths of code autonomously

Consequently, these agents perform poorly when tasked with diagnosing bugs from raw crash logs in large, low-level systems.

Introducing Code Researcher: Microsoft’s Deep Research Agent

Microsoft Research’s Code Researcher addresses these gaps by treating debugging as a research process rather than a scripted fix. Designed specifically for large-scale system software, Code Researcher is presented as the first AI agent to incorporate causal reasoning over commit history, which lets it trace the root causes of bugs even in legacy or multi-module environments.

What Makes Code Researcher Unique?

- Autonomous Discovery: It doesn’t require prior knowledge of the buggy file

- Causal Analysis: Uses historical commits to uncover patterns behind regressions

- Structured Memory: Retains contextual data gathered during exploration

- Multi-Trajectory Reasoning: Explores multiple hypotheses in parallel

- Real-World Validation: All generated patches are tested on reproducible crash scenarios
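
To make the causal-analysis idea concrete, here is a minimal sketch of searching commit history for changes related to a symbol from a crash. The `Commit` record, the sample history, and the `find_related_commits` helper are all hypothetical; the article does not describe Code Researcher's actual search interface. On a real repository, `git log --grep=<pattern>` and `git log -S<string>` offer comparable searches over commit messages and diffs.

```python
import re
from dataclasses import dataclass


@dataclass
class Commit:
    """Hypothetical, simplified commit record (not Code Researcher's schema)."""
    sha: str
    message: str
    diff: str


def find_related_commits(history, pattern):
    """Return commits whose message or diff matches the regex `pattern`."""
    rx = re.compile(pattern)
    return [c for c in history if rx.search(c.message) or rx.search(c.diff)]


# Invented sample history, for illustration only.
HISTORY = [
    Commit("a1b2c3", "net: drop NULL check in foo_recv()", "-    if (!skb)\n-        return;"),
    Commit("d4e5f6", "docs: fix typo in README", "-teh\n+the"),
    Commit("0789ab", "net: refactor foo_send()", "+    foo_recv(skb);"),
]

related = find_related_commits(HISTORY, r"foo_recv")
print([c.sha for c in related])
```

In this toy history, the first commit is the interesting one: it removed a guard from the function named in the crash, which is the kind of lead that a commit-aware agent could follow up on.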

Architecture: Three-Phase Reasoning Framework

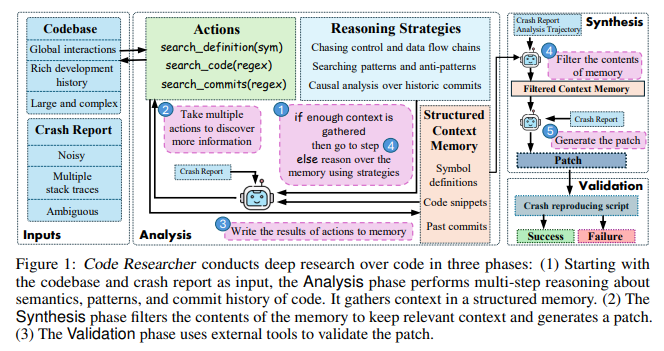

Code Researcher operates in a structured loop comprising three sequential phases:

1. Analysis Phase

- Begins with a raw crash report or stack trace

- Executes exploratory steps such as:

  - Symbol resolution

  - Code pattern searches using regex

  - Diff analysis from historical commit logs

- Builds a structured memory bank of relevant information (e.g., function flow, historical changes, keyword traces)
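
The analysis steps above can be sketched roughly as follows. This is an illustration under stated assumptions, not the agent's implementation: the crash report, the source snippets, the `Memory` class, and both helper functions are invented for the example, and a real run would search an actual source tree rather than an in-memory dictionary.

```python
import re
from dataclasses import dataclass, field

# Invented crash report in the style of a kernel call trace.
CRASH_REPORT = """\
BUG: kernel NULL pointer dereference
Call Trace:
 foo_recv+0x1c/0x90
 foo_poll+0x44/0x120
"""

# Invented source snippets standing in for a real code tree.
SOURCES = {
    "net/foo/recv.c": "int foo_recv(struct sk_buff *skb)\n{\n    return skb->len;\n}\n",
    "net/foo/poll.c": "int foo_poll(void)\n{\n    return foo_recv(NULL);\n}\n",
}


@dataclass
class Memory:
    """Hypothetical structured memory: a list of (kind, detail) facts."""
    entries: list = field(default_factory=list)

    def add(self, kind, detail):
        self.entries.append((kind, detail))


def symbols_from_trace(report):
    """Extract function names from `name+0xOFF/0xSIZE` call-trace frames."""
    frame = r"^[ \t]*([A-Za-z_]\w*)\+0x[0-9a-f]+/0x[0-9a-f]+"
    return re.findall(frame, report, re.MULTILINE)


def search_code(sources, pattern):
    """Return (path, line_number, line) for every line matching `pattern`."""
    rx = re.compile(pattern)
    return [
        (path, n, line.strip())
        for path, text in sorted(sources.items())
        for n, line in enumerate(text.splitlines(), start=1)
        if rx.search(line)
    ]


memory = Memory()
for symbol in symbols_from_trace(CRASH_REPORT):
    memory.add("symbol", symbol)
    # Look for definitions and call sites of the symbol.
    for hit in search_code(SOURCES, rf"\b{re.escape(symbol)}\s*\("):
        memory.add("code", hit)

for entry in memory.entries:
    print(entry)
```

Keeping the findings as typed entries, rather than as free text, is one simple way to let a later step filter them by kind or by symbol.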

2. Synthesis Phase

- Filters noise from collected data

- Identifies one or more candidate code snippets

- Generates minimal, targeted patches

- Leverages advanced models (e.g., GPT-4o, o1) to reason about semantic intent and control flow
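
As an illustration of what a minimal, targeted patch might look like, the sketch below renders a hypothetical two-line NULL guard as a unified diff using Python's standard `difflib` module. The buggy function, the fix, and the file path are invented; in the described system the patch content would come from the model, not from a hard-coded string.

```python
import difflib

# Invented buggy function and a hypothetical guard as the fix.
ORIGINAL = """\
int foo_recv(struct sk_buff *skb)
{
    return skb->len;
}
"""

PATCHED = """\
int foo_recv(struct sk_buff *skb)
{
    if (!skb)
        return -EINVAL;
    return skb->len;
}
"""


def make_patch(before, after, path):
    """Render the change between two file versions as a unified diff."""
    diff = difflib.unified_diff(
        before.splitlines(keepends=True),
        after.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    )
    return "".join(diff)


patch = make_patch(ORIGINAL, PATCHED, "net/foo/recv.c")

# Count changed lines, skipping the "+++" and "---" file headers.
added = [l for l in patch.splitlines() if l.startswith("+") and not l.startswith("+++")]
removed = [l for l in patch.splitlines() if l.startswith("-") and not l.startswith("---")]
print(patch)
```

Counting added and removed lines gives a crude measure of how small a candidate patch is, which could be one signal when choosing among several candidates.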

3. Validation Phase

- Automatically compiles and tests the modified code

- Executes predefined crash reproduction scenarios

- Validates the effectiveness of patches before surfacing them
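
The validation step can be pictured as a loop over candidate patches that surfaces only one that no longer reproduces the crash. The sketch below is a simplified stand-in: `reproduces_crash` is a hypothetical string check, whereas the process described above compiles the modified code and reruns a crash reproduction scenario. The candidate names and sources are invented.

```python
def reproduces_crash(source):
    """Stand-in reproducer: 'crashes' if skb is dereferenced with no NULL guard.

    A real pipeline would rebuild the target and rerun the crash reproducer;
    this string check only keeps the sketch self-contained.
    """
    return "skb->len" in source and "if (!skb)" not in source


def first_valid_patch(candidates):
    """Return the name of the first candidate that no longer reproduces the crash."""
    for name, patched_source in candidates:
        if not reproduces_crash(patched_source):
            return name
    return None  # nothing validated, so nothing is surfaced


# Invented candidates, e.g. one per independent reasoning trajectory.
CANDIDATES = [
    ("rename-variable", "int n = skb->len;\nreturn n;"),
    ("add-null-guard", "if (!skb)\n    return -EINVAL;\nreturn skb->len;"),
]

print(first_valid_patch(CANDIDATES))
```

Returning `None` when no candidate passes mirrors the idea that unvalidated patches are never shown to the developer.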

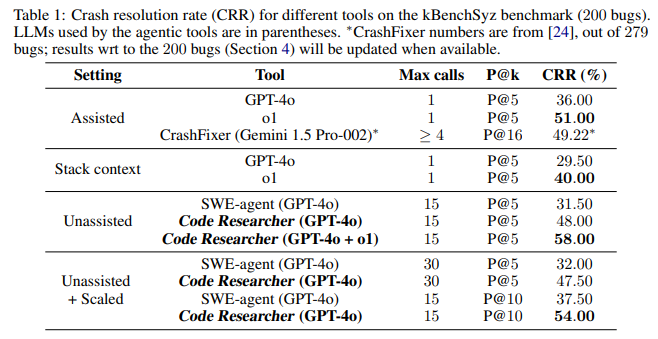

Benchmark Results: Setting a New Standard in System Debugging

Linux Kernel: kBenchSyz

- Benchmark: 279 Syzkaller-generated crashes

- Code Researcher (GPT-4o): Resolved 58% of cases

- SWE-agent (baseline): Resolved only 37.5%

- Average files explored per bug:

  - Code Researcher: 10

  - SWE-agent: 1.33

- In targeted tests (buggy files known):

  - Code Researcher success rate: 61.1%

  - SWE-agent: 37.8%
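
To put the headline kBenchSyz figures side by side, the short calculation below derives the gap between the two agents. It uses only the numbers quoted in this article; the dictionary keys are arbitrary names chosen for the example.

```python
# Figures as reported in this article (percent of crashes resolved, files explored).
code_researcher = {"resolved_pct": 58.0, "files_explored": 10.0}
swe_agent = {"resolved_pct": 37.5, "files_explored": 1.33}

gap_points = code_researcher["resolved_pct"] - swe_agent["resolved_pct"]
relative_gain = code_researcher["resolved_pct"] / swe_agent["resolved_pct"]
exploration_ratio = code_researcher["files_explored"] / swe_agent["files_explored"]

print(f"resolution gap: {gap_points:.1f} percentage points")
print(f"relative resolution rate: {relative_gain:.2f}x")
print(f"files explored ratio: {exploration_ratio:.1f}x")
```

On these figures, Code Researcher resolves about 1.5 times as many crashes while reading roughly 7.5 times as many files per bug, which is consistent with the article's emphasis on broad exploration.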

FFmpeg Evaluation

- Applied to 10 real-world FFmpeg crashes

- Successfully prevented crashes in 7 out of 10 cases

- Demonstrated applicability beyond system kernels into multimedia and cross-platform libraries

Core Innovations of Code Researcher

| Feature | Description |
|---|---|
| Causal Commit Analysis | Traces historical regressions using commit diffs and logs |
| Exploratory Symbol Resolution | Matches crash patterns with potential upstream causes |
| Multi-Hop Reasoning | Connects seemingly unrelated modules or files through functional linkage |
| High-Recall Patch Generation | Prioritizes minimal, stable patches that preserve existing functionality |
| Generalization Across Codebases | Performs well on unrelated systems without fine-tuning |

Broader Implications: Autonomous Maintenance at Scale

The breakthrough demonstrated by Code Researcher opens new doors for scalable, intelligent software maintenance. Its ability to autonomously explore, hypothesize, and repair offers significant advantages in:

- Legacy system upkeep (e.g., embedded OS)

- Open-source triaging (e.g., Linux, FFmpeg, QEMU)

- Enterprise system diagnostics

- Continuous integration resilience (auto-fix regressions in CI pipelines)

By reducing the need for human triage and increasing accuracy through context-aware patching, this agent could substantially improve productivity and reduce mean time to repair (MTTR).

Final Thoughts

Microsoft’s Code Researcher is a compelling demonstration of how AI can move beyond assistance to autonomous investigation and repair in complex environments. It bridges the gap between static code understanding and historical evolution analysis—an area long neglected in traditional tooling.

As enterprises increasingly rely on large, interconnected systems, tools like Code Researcher point toward a future where intelligent agents can reason about causality, context, and correctness at a level once thought to be exclusive to experienced human engineers.

All credit for this research goes to the researchers of this project.