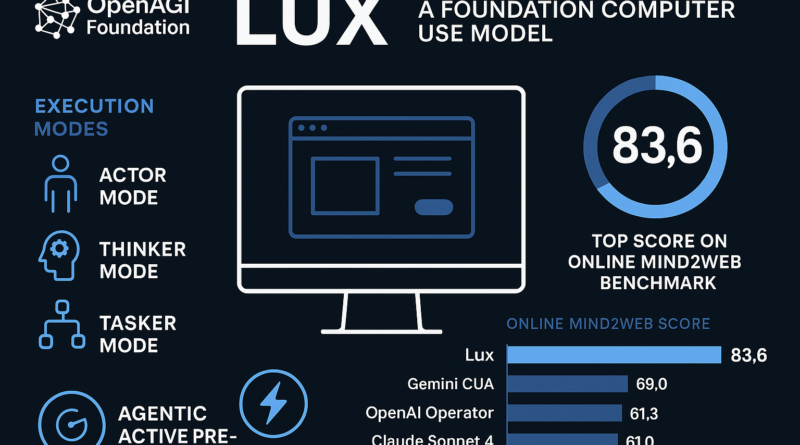

OpenAGI Foundation Launches Lux: A Game-Changer in Computer-Use Foundation Models

The artificial intelligence ecosystem is undergoing a quiet revolution, one where agents are not just chatting, summarizing, or coding, but directly operating computers. In this emerging category of computer-use foundation models, the OpenAGI Foundation has introduced a transformative entrant: Lux. Unlike traditional language models, Lux doesn’t just understand instructions. It acts on them, navigating interfaces, executing tasks across desktops and browsers, and adapting to nuanced workflows.

In this article, we explore what makes Lux distinct, how it was built, the infrastructure enabling it, and how it compares to other notable models like OpenAI Operator, Anthropic’s Claude Sonnet 4, and Google Gemini CUA. We also examine how Lux leverages OSGym and Agentic Active Pre-training to push the boundaries of digital autonomy.

From Language Models to Computer-Use Agents

Most AI models, including ChatGPT and Claude, excel at natural language understanding and generation. Some are enhanced with tool use, such as browsing or file reading, via APIs or plugins. However, they are still fundamentally “language-first”: they operate within the bounds of text or structured environments.

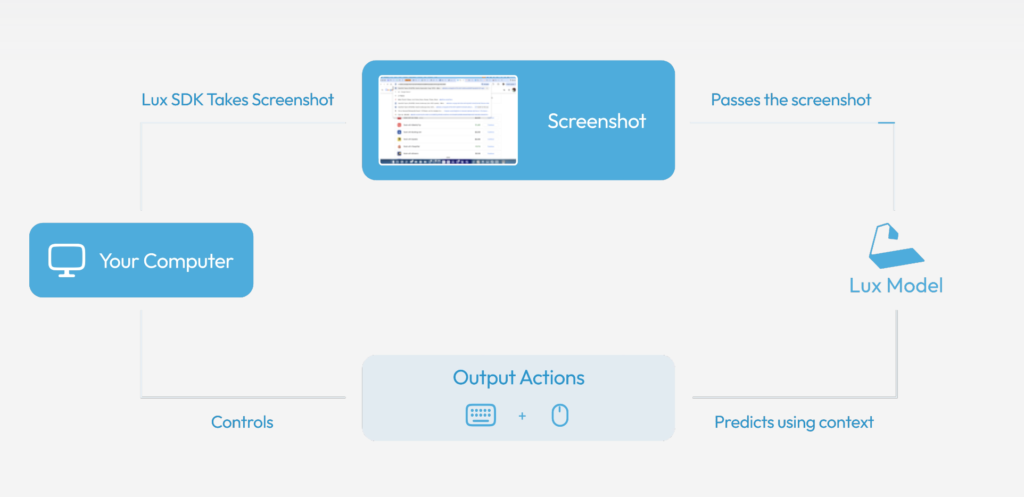

Lux belongs to a new breed. It is not a chatbot with plugins; it is a screen-and-action-first model, a foundation computer-use agent trained to operate real desktops and applications. It can handle raw pixels and manipulate interfaces the same way a human would: by clicking, typing, scrolling, and selecting.

The significance lies in the operational paradigm. Lux doesn’t merely output text or command snippets. It perceives the rendered user interface and acts accordingly, bridging the gap between high-level intent and low-level GUI control.

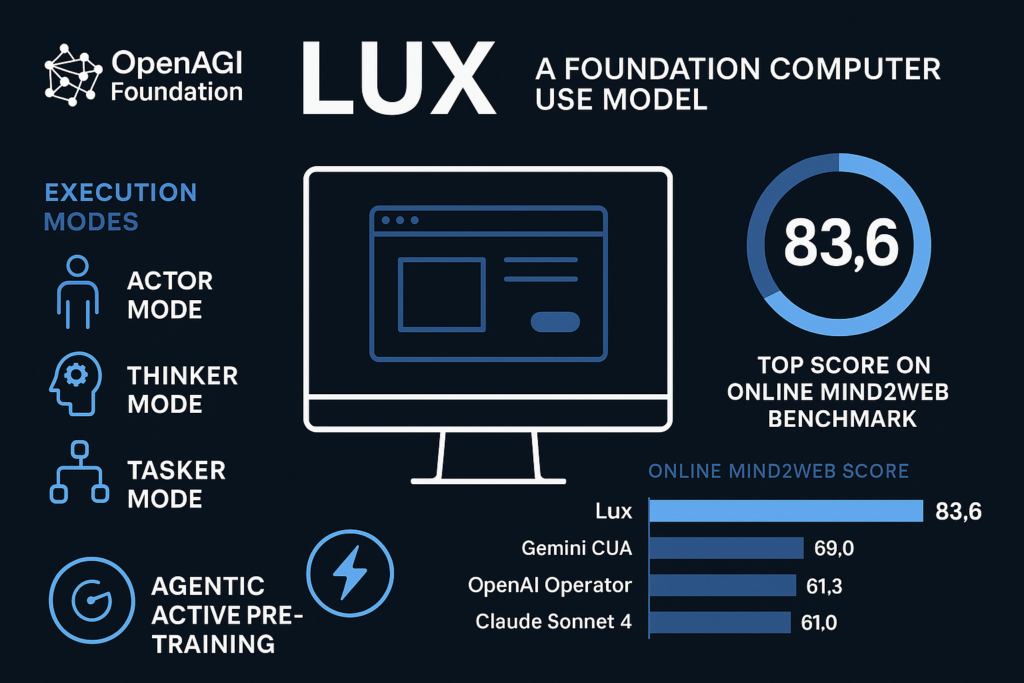

Benchmark Leadership: Topping Online Mind2Web

A core highlight of Lux’s debut is its performance on the Online Mind2Web benchmark, a large-scale suite of over 300 real-world computer-use tasks. These tasks span platforms like LinkedIn, Amazon, Wikipedia, and Notion, testing how well an agent can interact with modern web apps under real constraints.

Lux achieves a state-of-the-art score of 83.6, ahead of the other agents compared here:

- Google Gemini CUA: 69.0

- OpenAI Operator: 61.3

- Anthropic Claude Sonnet 4: 61.0

These are not minor differences: Lux leads the next-best entry, Gemini CUA, by 14.6 points and OpenAI Operator by 22.3. The margin suggests a stronger ability to understand intent and carry out tasks over long horizons, whether that means updating a product listing on Shopify, triaging a Gmail inbox, or extracting metrics from an analytics dashboard.

What Lux Actually Does: Screen-to-Action Execution

Lux accepts natural-language goals and translates them into low-level UI operations. It takes the rendered UI (for example, a screenshot or live screen feed) as input and emits actions such as:

- Mouse clicks

- Keyboard input

- Scrolls

- Selections

- Window switching

This allows it to interact with:

- Web browsers (Chrome, Firefox)

- Office software (Word, Excel)

- Development tools (VS Code, Terminal)

- Email clients, dashboards, and CRMs

Unlike plugin-based systems that rely on structured APIs, Lux operates API-agnostically, enabling it to work with closed-source apps or legacy tools, much like a human virtual assistant.
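To make the screen-to-action idea concrete, here is one way a client could type the actions listed above and parse a model's output into them. This is a minimal sketch under our own assumptions: the announcement does not publish Lux's action format, so the class names, fields, and the `parse_action` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import Literal, Union

# Hypothetical action types mirroring the list above; NOT Lux's real schema.
@dataclass(frozen=True)
class Click:
    x: int
    y: int
    button: Literal["left", "right"] = "left"

@dataclass(frozen=True)
class TypeText:
    text: str

@dataclass(frozen=True)
class Scroll:
    dy: int  # positive scrolls down, negative scrolls up

@dataclass(frozen=True)
class Select:
    x0: int
    y0: int
    x1: int
    y1: int

@dataclass(frozen=True)
class SwitchWindow:
    title: str

Action = Union[Click, TypeText, Scroll, Select, SwitchWindow]

def parse_action(raw: dict) -> Action:
    """Turn a model's JSON-like output into a typed action (hypothetical format)."""
    kind = raw["type"]
    if kind == "click":
        return Click(raw["x"], raw["y"], raw.get("button", "left"))
    if kind == "type":
        return TypeText(raw["text"])
    if kind == "scroll":
        return Scroll(raw["dy"])
    if kind == "select":
        return Select(raw["x0"], raw["y0"], raw["x1"], raw["y1"])
    if kind == "switch_window":
        return SwitchWindow(raw["title"])
    raise ValueError(f"unknown action type: {kind!r}")

# Example: a model step that clicks a button at pixel (640, 410).
print(parse_action({"type": "click", "x": 640, "y": 410}))
# Click(x=640, y=410, button='left')
```

Typing actions this way lets an executor reject malformed output (unknown action types raise `ValueError`) before anything touches the screen.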

Three Execution Modes: Actor, Thinker, and Tasker

To accommodate different levels of task complexity and developer control, Lux introduces three modes of operation:

1. Actor Mode (Fastest Execution)

- ~1 second per action

- Designed for routine, well-defined tasks like:

  - Filling online forms

  - Downloading reports

  - Copying data between spreadsheets

- Ideal for macro-like workflows where latency and throughput matter.

2. Thinker Mode (Autonomous Reasoning)

- Handles vague or high-level instructions

- Breaks down goals into subtasks before acting

- Suitable for:

  - Multi-page research

  - Email triaging

  - UI navigation where the path is not fixed

- Balances autonomy and goal alignment.

3. Tasker Mode (Maximum Determinism)

- Executes a scripted list of UI actions

- Includes retry loops and error handling

- Perfect for teams that want to retain control but offload execution

- Supports rigorous workflows such as automated QA and testing.

Together, these modes offer flexibility, from fast robotic automation to intelligent multi-step planning to highly deterministic batch execution.
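The Tasker description (a scripted list of UI actions with retry loops and error handling) maps onto a familiar automation pattern. The sketch below is a generic illustration under our own assumptions, not the Lux SDK: `run_script`, `max_retries`, `backoff_s`, and the `perform` callback are hypothetical names.

```python
import time
from typing import Callable, Dict, List

def run_script(
    steps: List[str],
    perform: Callable[[str], bool],
    max_retries: int = 3,
    backoff_s: float = 0.0,
) -> List[str]:
    """Run scripted UI steps in order, retrying each failed step.

    `perform` is a hypothetical callback that executes one step and returns
    True on success. Returns a log of outcomes; raises if a step never succeeds.
    """
    log: List[str] = []
    for step in steps:
        for attempt in range(1, max_retries + 1):
            if perform(step):
                log.append(f"ok:{step}:attempt{attempt}")
                break
            log.append(f"fail:{step}:attempt{attempt}")
            time.sleep(backoff_s * attempt)  # linear backoff between attempts
        else:
            # for-else: runs only if no attempt hit `break`
            raise RuntimeError(f"step failed after {max_retries} attempts: {step}")
    return log

# Usage: simulate a UI whose "Export" button is not ready on the first try.
attempts: Dict[str, int] = {}

def flaky_perform(step: str) -> bool:
    attempts[step] = attempts.get(step, 0) + 1
    return not (step == "click Export" and attempts[step] == 1)

print(run_script(["open Reports", "click Export"], flaky_perform))
# ['ok:open Reports:attempt1', 'fail:click Export:attempt1', 'ok:click Export:attempt2']
```

Keeping the script and the retry policy separate is what makes this style deterministic: the same list of steps always runs in the same order, and only transient failures are retried.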

Agentic Active Pre-training: A New Learning Paradigm

OpenAGI attributes Lux’s performance not just to scale or architecture, but to a new training strategy called Agentic Active Pre-training (AAP).

Unlike traditional pre-training, where models passively learn from static text datasets, AAP involves active interaction with operating systems and apps. Lux learns by:

- Taking actions

- Observing outcomes

- Refining its behavior through feedback from the environment

This mirrors how humans learn: not by reading manuals, but by doing.

According to OpenAGI, AAP avoids the reward-engineering pitfalls of traditional reinforcement learning. Instead, it focuses on exploratory behavior and skill acquisition, encouraging the model to generalize to unseen tasks.
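The announcement describes AAP only at the level above (act, observe, refine, exploring rather than chasing engineered rewards) and does not publish its objective. As a loose illustration of reward-free exploration, the sketch below swaps in a much simpler classic technique, count-based novelty seeking, on a hypothetical three-screen toy UI: on each screen the agent tries the action it has tried least, then records what happened. This is not OpenAGI's method; the `UI` table, `step`, and `explore` are invented for illustration.

```python
from collections import Counter
from typing import Dict, Tuple

# Hypothetical toy UI: (screen, action) -> next screen.
# Pairs not listed leave the screen unchanged. Not part of Lux or OSGym.
UI: Dict[Tuple[str, str], str] = {
    ("home", "open_menu"): "menu",
    ("menu", "open_settings"): "settings",
    ("menu", "back"): "home",
    ("settings", "back"): "menu",
}
ACTIONS = ["back", "open_menu", "open_settings"]

def step(screen: str, action: str) -> str:
    return UI.get((screen, action), screen)

def explore(episodes: int, horizon: int):
    """Count-based exploration: no task reward, only a preference for novelty."""
    visits: Counter = Counter()              # times each (screen, action) was tried
    model: Dict[Tuple[str, str], str] = {}   # learned outcome of each tried action
    for _ in range(episodes):
        screen = "home"
        for _ in range(horizon):
            # Prefer the least-tried action on this screen (ties broken alphabetically).
            action = min(ACTIONS, key=lambda a: (visits[(screen, a)], a))
            nxt = step(screen, action)       # act
            visits[(screen, action)] += 1
            model[(screen, action)] = nxt    # observe and record the outcome
            screen = nxt
    return model, visits

model, visits = explore(episodes=50, horizon=10)
print(model[("menu", "open_settings")])  # settings
```

The resulting `model` is a learned map of how the interface responds to each action, acquired purely by interacting with it, which is the intuition the AAP description appeals to.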

This behavioral grounding sets Lux apart from even the most capable large language models.

OSGym: The Simulation Engine Behind Lux

Training a model to act in digital environments at scale requires a powerful data pipeline. Enter OSGym, OpenAGI’s open-source simulation platform.

Key Features of OSGym:

- Runs full OS replicas, not just web environments

- Can simulate:

  - Browsers

  - Spreadsheets

  - IDEs

  - File systems and multi-app workflows

- Achieves ~1,400 multi-turn interaction trajectories per minute

- Scales up to 1,000+ parallel environments

- Released under the MIT License, permitting both research and commercial use

This infrastructure unlocks rapid prototyping and evaluation of agents, making it possible to run months’ worth of human trials in days.
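A quick back-of-envelope calculation shows what the reported throughput implies. The sketch takes the ~1,400 trajectories per minute figure at face value (presumably aggregated across parallel environments) and compares it with a hypothetical human tester who completes one multi-turn trajectory every 5 minutes over an 8-hour day; that human pace is our assumption, not a figure from the announcement.

```python
# Back-of-envelope throughput for the OSGym figure quoted above.
TRAJ_PER_MIN = 1_400                 # reported OSGym throughput
per_hour = TRAJ_PER_MIN * 60         # trajectories per hour
per_day = per_hour * 24              # trajectories per day

# Assumption for illustration only: a human tester finishes one
# multi-turn trajectory every 5 minutes during an 8-hour workday.
HUMAN_MIN_PER_TRAJ = 5
human_per_day = 8 * 60 // HUMAN_MIN_PER_TRAJ

human_days = per_day / human_per_day  # person-days of equivalent manual work
print(f"{per_day:,} trajectories/day ≈ {human_days:,.0f} person-days of manual testing")
# 2,016,000 trajectories/day ≈ 21,000 person-days of manual testing
```

Under these assumptions, a single day of simulation corresponds to tens of thousands of person-days of manual trials, which is the scale behind the "months in days" claim.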

Latency, Cost, and Practical Deployment

In production environments, cost and latency dictate feasibility.

Lux offers an impressive profile:

- ~1 second per step, compared to ~3 seconds for OpenAI Operator

- ~10× cheaper per token, attributed to optimized inference and a tuned model size

These gains matter when workflows involve hundreds of steps. A customer support automation or compliance audit may require thousands of actions across emails, forms, and dashboards. Lux makes such workloads economically viable.
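The latency and cost figures can be turned into a rough estimate for a long workflow. The sketch assumes a hypothetical 1,000-step workflow with purely sequential steps and no retries or page-load waits. Because only a relative ~10× per-token ratio is reported (the article names no baseline or absolute price), cost is expressed relative to a baseline run normalised to 1.0, which also assumes comparable token usage per step.

```python
def wall_clock_minutes(steps: int, sec_per_step: float) -> float:
    """Sequential wall-clock time in minutes, ignoring retries and page loads."""
    return steps * sec_per_step / 60

STEPS = 1_000  # hypothetical workflow length

lux_min = wall_clock_minutes(STEPS, 1.0)       # ~1 s/step, as reported for Lux
operator_min = wall_clock_minutes(STEPS, 3.0)  # ~3 s/step, as reported for Operator
print(f"Lux: {lux_min:.1f} min, Operator: {operator_min:.1f} min")
# Lux: 16.7 min, Operator: 50.0 min

# Only a relative ~10x per-token ratio is reported, so normalise the
# baseline run to 1.0 (assumes similar token usage per step).
baseline_cost = 1.0
lux_cost = baseline_cost / 10
print(f"Relative cost per run: {lux_cost:.1f} vs {baseline_cost:.1f}")
# Relative cost per run: 0.1 vs 1.0
```

At these rates a 1,000-step run finishes about three times faster, and the savings compound when many runs are queued back to back.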

The OpenAGI SDK provides integration hooks for:

- Web backends

- RPA pipelines

- Workflow orchestration tools

- Human-in-the-loop control panels
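Of the hooks listed above, the human-in-the-loop one is the easiest to picture as a pattern: risky actions pause for a reviewer, and everything is logged. The sketch below is a generic illustration, not the OpenAGI SDK; `ApprovalGate`, `is_risky`, `ask_human`, and the plain-string actions are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical pattern, NOT the OpenAGI SDK: actions flagged as risky must be
# approved by a human reviewer callback before they are executed.
@dataclass
class ApprovalGate:
    is_risky: Callable[[str], bool]
    ask_human: Callable[[str], bool]
    audit: List[str] = field(default_factory=list)

    def run(self, action: str, execute: Callable[[str], None]) -> bool:
        """Execute `action` unless it is risky and the reviewer rejects it."""
        if self.is_risky(action) and not self.ask_human(action):
            self.audit.append(f"blocked: {action}")
            return False
        execute(action)
        self.audit.append(f"ran: {action}")
        return True

# Usage: a stand-in reviewer that rejects every risky action.
done: List[str] = []
gate = ApprovalGate(
    is_risky=lambda a: a.startswith(("send", "delete")),
    ask_human=lambda a: False,
)
gate.run("open inbox", done.append)
gate.run("send reply", done.append)
print(gate.audit)  # ['ran: open inbox', 'blocked: send reply']
```

In a real control panel, `ask_human` would surface the pending action in a UI and wait for a decision; keeping it as a callback lets the same gate run in tests with a scripted reviewer.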

How Lux Stands Against the Competition

| Feature | Lux (OpenAGI) | OpenAI Operator | Claude Sonnet 4 | Gemini CUA |
|---|---|---|---|---|
| Online Mind2Web score | 83.6 | 61.3 | 61.0 | 69.0 |
| Screen input | Yes | Limited | Partial | Yes |
| Execution modes | Actor, Thinker, Tasker | Not exposed | Not exposed | Not exposed |
| Latency per step | ~1 s | ~3 s | ~3 s | ~2–2.5 s |
| Cost | ~10× cheaper | Expensive | Expensive | Mid-tier |
| Training method | Agentic Active Pre-training | Passive LLM pre-training | RLHF | Passive + RL |
| Infrastructure | OSGym | Custom | Unknown | Unknown |

Real-World Applications and Use Cases

Lux opens up a spectrum of automation and augmentation possibilities:

- Enterprise Automation: Pulling metrics from dashboards, formatting reports, filing claims

- Customer Support: Resolving tickets across tools like Zendesk, Jira, Outlook

- Data Entry: Large-scale, structured input into ERPs or CRMs

- Research Workflows: Conducting searches, summarizing results, organizing documents

- QA and Testing: Running click-by-click tests in production-like environments

Its ability to operate across systems, interpret UIs, and persist through long tasks makes it a strong candidate for a drop-in upgrade to many business processes.

Final Thoughts: Lux and the Future of Agentic Computing

With Lux, OpenAGI has moved the goalposts for what foundation models can achieve in the realm of computer use. It combines a high-performing agent with flexible execution, fast performance, and a novel training strategy, all supported by scalable open-source infrastructure.

In an era where LLMs saturate benchmarks and drift into abstraction, Lux reminds us that intelligence is not only about understanding, but about doing. By anchoring learning in interaction, Lux sets the stage for a new category of agents that don’t just predict, they perform.

As OpenAGI continues to refine Lux and the broader ecosystem around OSGym, it paves the way for developers, researchers, and businesses to build their own task-solving digital workers. From button clicks to strategic workflows, Lux offers a compelling glimpse of the future, one where AI doesn’t just assist us but acts for us.

Check out the official announcement from the OpenAGI Foundation. All credit for this news goes to the researchers of this project.