Trainium3, Nova 2, and AI Agents: Breaking Down AWS’s Biggest re:Invent 2025 Announcements

Amazon Web Services (AWS) took center stage at re:Invent 2025 with a flood of announcements that solidify its leadership in AI infrastructure, custom silicon, and autonomous agent technologies. Among the highlights were Frontier Agents (a new class of long-running AI agents), the next-generation Trainium3 UltraServers, and an expanded Amazon Nova family of models. Together, these offerings chart a bold course for how developers, enterprises, and governments will build and scale intelligent applications in the years ahead.

Here’s an in-depth look at the three standout innovations from re:Invent 2025 and how they compare to competing technologies in the fast-evolving AI landscape.

1. Frontier Agents: A New Era of Autonomous, Specialized AI

What Are Frontier Agents?

Frontier Agents are AWS’s answer to the growing demand for autonomous, intelligent agents capable of operating over extended timeframes. These agents aren’t just chatbots or prompt-based tools; they are persistent, modular systems that function as extensions of enterprise software teams.

AWS unveiled three initial Frontier Agents:

- Kiro Autonomous Agent: Functions as a full-fledged virtual developer capable of UI automation, code generation, and deployment.

- AWS Security Agent: Monitors, identifies, and mitigates threats across cloud environments in real time.

- AWS DevOps Agent: Acts as a 24/7 operations assistant for deployment pipelines, incident resolution, and observability.

What makes Frontier Agents different is their long-running autonomy, allowing them to function for hours or days without user prompts, while integrating with existing workflows via Kiro Powers, memory modules, and role-based access through AgentCore.
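To make "long-running autonomy" concrete, here is a minimal, hypothetical sketch of an agent loop that saves its progress after every step, so a restarted agent resumes where it stopped instead of starting over. It does not use any AWS or AgentCore API: the `AgentState` class, the JSON state file, and the task names are assumptions made purely for illustration.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass, field


@dataclass
class AgentState:
    """Hypothetical persistent state for a long-running agent."""
    completed: list = field(default_factory=list)  # IDs of tasks already finished
    notes: dict = field(default_factory=dict)      # per-task results (the agent's "memory")


def load_state(path: str) -> AgentState:
    # Resume from disk if an earlier run left state behind; otherwise start fresh.
    if os.path.exists(path):
        with open(path) as f:
            return AgentState(**json.load(f))
    return AgentState()


def save_state(state: AgentState, path: str) -> None:
    # Write to a temporary file, then atomically replace the old state file,
    # so a crash mid-write never leaves a half-written file behind.
    fd, tmp = tempfile.mkstemp(dir=os.path.dirname(os.path.abspath(path)))
    with os.fdopen(fd, "w") as f:
        json.dump(asdict(state), f)
    os.replace(tmp, path)


def run_agent(tasks, handle, path, max_steps=None) -> AgentState:
    """Work through `tasks`, persisting progress after every step.

    `handle` stands in for the real per-task work (model calls, tool use).
    `max_steps` simulates an interruption by stopping early.
    """
    state = load_state(path)
    steps = 0
    for task in tasks:
        if task in state.completed:
            continue  # finished in an earlier run, so skip it
        if max_steps is not None and steps >= max_steps:
            break
        state.notes[task] = handle(task)
        state.completed.append(task)
        save_state(state, path)
        steps += 1
    return state


if __name__ == "__main__":
    state_file = os.path.join(tempfile.mkdtemp(), "agent_state.json")
    tasks = ["triage-alert", "open-ticket", "draft-fix"]
    run_agent(tasks, lambda t: f"done: {t}", state_file, max_steps=2)  # "crashes" after two tasks
    final = run_agent(tasks, lambda t: f"done: {t}", state_file)        # resumes with the third
    print(final.completed)
```

The atomic write-then-replace step is the design choice that matters here: an agent that may run for hours or days has to assume it will be interrupted, so its memory must never be left in a corrupt state.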

Comparison with Other Agent Frameworks

While platforms like OpenAI’s Assistants API and Google’s ADK focus on task-specific, prompt-driven interactions, AWS Frontier Agents deliver persistent task execution, state memory, and integration with enterprise observability tools. AWS argues that this modularity, the ability to operate in high-security contexts (via AWS identity services), and direct deployment through AgentCore make them more production-ready.

Additionally, the ecosystem of Kiro Powers (ready-to-deploy integrations for tools like Postman, Netlify, and Figma) lets teams quickly equip agents for specific workflows. Because a power’s tools and context are loaded only when a task calls for them, this can cut agent development time and improve token efficiency.
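One way an integration catalog like this can save tokens is by injecting a tool bundle’s definitions into the agent’s context only when the request actually needs it. The sketch below illustrates that idea under loud assumptions: `Power`, the trigger keywords, and the tool signatures are all invented, the word count is a crude stand-in for a real tokenizer, and none of this reflects the actual Kiro Powers format.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Power:
    """Hypothetical tool bundle: trigger keywords plus the tool text it adds to context."""
    name: str
    keywords: frozenset
    tool_spec: str


def estimate_tokens(text: str) -> int:
    # Crude stand-in for a tokenizer: one token per whitespace-separated chunk.
    return len(text.split())


def select_powers(request: str, powers) -> list:
    # Activate a power only when one of its keywords appears in the request.
    words = set(re.findall(r"[a-z0-9]+", request.lower()))
    return [p for p in powers if p.keywords & words]


def context_cost(powers) -> int:
    # Approximate tokens spent just describing the tools to the model.
    return sum(estimate_tokens(p.tool_spec) for p in powers)


# Illustrative bundles; the tool signatures are invented for this sketch.
POWERS = [
    Power("postman", frozenset({"api", "postman", "endpoint"}),
          "send_request(method, url, headers, body) -> response; "
          "run_collection(collection_id) -> report"),
    Power("netlify", frozenset({"deploy", "netlify", "site"}),
          "create_site(name) -> site_id; deploy(site_id, build_dir) -> url; "
          "get_deploy_status(deploy_id) -> status"),
    Power("figma", frozenset({"figma", "design", "mockup"}),
          "get_file(file_key) -> document; "
          "export_frame(file_key, node_id, format) -> image_url"),
]

if __name__ == "__main__":
    request = "Deploy the site to Netlify."
    active = select_powers(request, POWERS)
    print([p.name for p in active])
    print(f"{context_cost(active)} tokens loaded vs {context_cost(POWERS)} for all tools")
```

With three bundles the savings are small, but the gap grows with every integration an agent has installed yet does not need for the current task.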

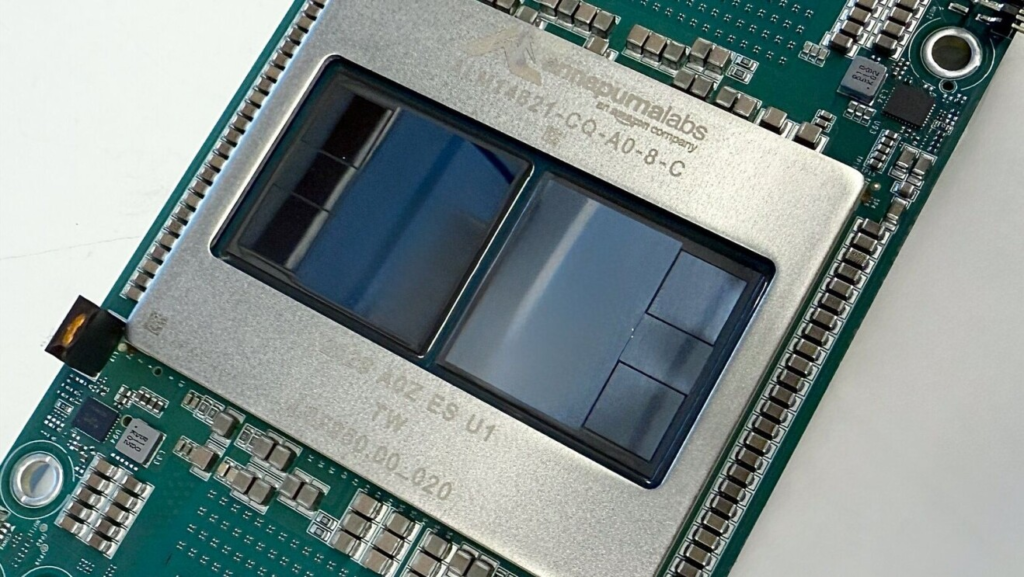

2. Trainium3 UltraServers: Custom Silicon to Power Next-Gen AI

Overview of Trainium3

With the launch of Trainium3, AWS pushed the envelope of AI training performance. The Trainium3 chip is built on a 3nm process, and a single EC2 Trn3 UltraServer packs up to 144 of them, targeting high throughput and efficiency for training trillion-parameter models. Key benefits AWS cites include:

- Up to 4.4x more compute performance than Trainium2

- 4x greater energy efficiency

- 3x higher throughput per chip

- Up to 50% lower training and inference costs, according to early adopters including Anthropic, Karakuri, and Amazon Bedrock

Technical Innovations

Each Trainium3 UltraServer pairs its chips with high-bandwidth memory and a dedicated low-latency, high-bandwidth chip-to-chip interconnect. AWS says the result can cut model training times from months to weeks.

The infrastructure also supports checkpointless training via SageMaker HyperPod: instead of restarting from the last saved checkpoint, training jobs can automatically recover from hardware faults in minutes, which keeps cluster utilization high and can save substantial compute costs on large runs.
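Why recovery time matters is easiest to see with a back-of-the-envelope model. The sketch below compares conventional checkpoint-restart, where a fault discards the work done since the last checkpoint plus a restart delay, with in-place recovery that pays only a short recovery delay. It is a simplification under stated assumptions: faults are treated as landing halfway between checkpoints on average, every number is invented for illustration rather than measured by AWS, and it does not model how HyperPod actually restores training state.

```python
from dataclasses import dataclass


@dataclass
class RecoveryModel:
    """Toy model of wall-clock time lost to hardware faults (all values in hours)."""
    checkpoint_interval: float  # time between saved checkpoints
    restart_overhead: float     # time to detect the fault, reschedule, and restore state
    redoes_lost_work: bool      # True if progress since the last checkpoint is discarded

    def lost_hours_per_fault(self) -> float:
        lost = self.restart_overhead
        if self.redoes_lost_work:
            # On average a fault lands halfway between two checkpoints,
            # so about half an interval of training must be recomputed.
            lost += self.checkpoint_interval / 2
        return lost

    def goodput(self, faults_per_day: float) -> float:
        # Fraction of each day spent making forward progress (never below zero).
        lost_per_day = faults_per_day * self.lost_hours_per_fault()
        return max(0.0, 1.0 - lost_per_day / 24)


# Hypothetical settings, not AWS measurements.
checkpoint_restart = RecoveryModel(checkpoint_interval=2.0, restart_overhead=0.5, redoes_lost_work=True)
in_place_recovery = RecoveryModel(checkpoint_interval=2.0, restart_overhead=5 / 60, redoes_lost_work=False)

if __name__ == "__main__":
    for faults in (1, 4, 8):
        print(f"{faults} faults/day: "
              f"checkpoint-restart {checkpoint_restart.goodput(faults):.1%}, "
              f"in-place recovery {in_place_recovery.goodput(faults):.1%}")
```

Because larger clusters see faults more often, the per-fault loss is multiplied by a growing fault rate, which is why shaving recovery from hours to minutes pays off most on the biggest training runs.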

Comparison with Competing Hardware

Compared with NVIDIA’s GB300 NVL72 (used in AWS P6e-GB300 UltraServers), AWS positions Trainium3 as offering similar or better efficiency for specific workloads at a lower cost, especially for customers already invested in the AWS ecosystem.

OpenAI and Microsoft Azure rely heavily on NVIDIA GPUs, while Google Cloud, with its in-house TPUs, is the closest parallel to AWS’s own silicon strategy. AWS offers a vertical stack (Trainium chips, EC2 instances, Bedrock inference endpoints, and S3 Vectors) optimized to work together, which AWS says reduces latency and improves total cost of ownership.

3. Amazon Nova 2: Next-Gen Multimodal, Reasoning-Ready AI Models

Meet the Nova Family

AWS has doubled down on AI model development with the Nova 2 generation of models and a set of companion services, including:

- Nova Act: A service for building agents that automate UI workflows, such as multi-step browser tasks

- Nova Sonic: Delivers advanced multilingual speech recognition and TTS

- Nova Forge: Brings “open training” to enterprise fine-tuning

AWS says the Nova models deliver leading performance across tasks such as coding, multimodal processing, and natural language understanding. Nova Forge, in particular, lets organizations blend their proprietary data with Amazon-curated datasets during training, with full visibility into the training workflow.
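One common way to blend two data sources is to sample from each at a fixed ratio. The sketch below is a generic data-mixing example, not the Nova Forge interface: `blended_stream` and its `proprietary_weight` parameter are hypothetical, and real training pipelines also handle deduplication, tokenization, and more elaborate weighting schemes that are omitted here.

```python
import random
from itertools import islice
from typing import Iterator, Sequence


def blended_stream(proprietary: Sequence[str], curated: Sequence[str],
                   proprietary_weight: float, seed: int = 0) -> Iterator[str]:
    """Endless stream of training examples drawn from two sources.

    Each example comes from `proprietary` with probability `proprietary_weight`
    and from `curated` otherwise.
    """
    # Validate eagerly so a bad weight fails at call time, not on first use.
    if not 0.0 <= proprietary_weight <= 1.0:
        raise ValueError("proprietary_weight must be between 0 and 1")
    rng = random.Random(seed)  # seeded so the mix is reproducible

    def generate() -> Iterator[str]:
        while True:
            source = proprietary if rng.random() < proprietary_weight else curated
            yield rng.choice(source)

    return generate()


if __name__ == "__main__":
    company_docs = ["internal ticket 1", "internal ticket 2"]
    curated_docs = ["curated article A", "curated article B", "curated article C"]
    batch = list(islice(blended_stream(company_docs, curated_docs, 0.3, seed=42), 1000))
    share = sum(doc in company_docs for doc in batch) / len(batch)
    print(f"proprietary share: {share:.2f}")
```

The mixing weight is the key knob: too much proprietary data risks the model forgetting general skills, too little and it never absorbs the domain, so in practice the ratio is tuned rather than fixed in advance.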

Use Cases and Early Results

- Reddit replaced multiple specialized LLMs with a unified Nova Forge model.

- Hertz accelerated feature development by 5x using Nova Act.

- Amazon Connect adopted Nova Sonic for multilingual voice agents, driving natural voice interactions across 30+ languages.

Comparison with Whisper, Gemini, and Claude

Nova Sonic is AWS’s challenger to OpenAI Whisper, Deepgram, and Google’s Gemini audio models for speech-based applications. Whisper is open source, widely adopted, and focused on speech-to-text, while AWS positions Nova Sonic around multilingual support, real-time integration, and enterprise reliability. Nova models also support real-time retrieval-augmented generation (RAG) via Amazon Bedrock and S3 Vectors.
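Retrieval-augmented generation works by fetching the stored passages most similar to a query and handing them to the model as context. The sketch below shows only that retrieval-and-prompt step, under explicit assumptions: a bag-of-words count vector stands in for a real embedding model, an in-memory list stands in for a vector store such as S3 Vectors, and no Bedrock or S3 API is called.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for a real embedding model: a bag-of-words count vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list, k: int = 2) -> list:
    # Rank every document by similarity to the query and keep the top k.
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list, k: int = 2) -> str:
    # Retrieved passages are prepended so the model can ground its answer in them.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "Refunds are issued within 14 days of a return.",
        "Our support line is open 24/7 in 30 languages.",
        "Shipping to Canada takes 5 business days.",
    ]
    print(build_prompt("How many days until my refund is issued?", docs, k=1))
```

In a production system the only structural change is swapping `embed` for a learned embedding model and the sorted list for an approximate nearest-neighbor index, which is the role a managed vector store plays.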

Compared with Anthropic’s Claude and Google’s Gemini 1.5, Nova Forge offers “open checkpoint” access that supports enterprise compliance and auditing, an important differentiator for regulated industries.

Bonus Highlights: Supporting Innovations Fueling AWS’s AI Vision

Bedrock AgentCore and Agent Memory

AgentCore has emerged as the backbone for agentic AI at AWS. With features like natural language policy enforcement, automated evaluations, and episodic memory modules, it allows teams to deploy agents that are compliant, safe, and continuously improvable.
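As a rough illustration of how policy enforcement and episodic memory can fit together around an agent’s tool calls, here is a hypothetical default-deny guard. It is not the AgentCore API: the `Rule` and `GuardedAgent` names are invented, and a plain Python predicate stands in for whatever a natural-language policy such as “refunds over $100 are not allowed” would be compiled into.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Rule:
    """Hypothetical policy rule: a tool the agent may call, plus an optional argument check."""
    tool: str
    allow_if: Callable[[dict], bool] = lambda args: True


@dataclass
class GuardedAgent:
    rules: list
    episodes: list = field(default_factory=list)  # episodic memory: (tool, args, outcome)

    def call_tool(self, tool: str, args: dict, impl: Callable[[dict], object]):
        # Default-deny: a call goes through only if some rule explicitly allows it.
        allowed = any(r.tool == tool and r.allow_if(args) for r in self.rules)
        outcome = impl(args) if allowed else "DENIED"
        # Record every attempt, allowed or not, so later runs and evaluations
        # can look back at what happened.
        self.episodes.append((tool, dict(args), outcome))
        return outcome

    def recall(self, tool: str) -> list:
        # Retrieve past episodes that involved a given tool.
        return [e for e in self.episodes if e[0] == tool]


if __name__ == "__main__":
    agent = GuardedAgent(rules=[
        Rule("lookup_order"),
        Rule("issue_refund", lambda a: a.get("amount", 0) <= 100),  # "refunds over $100 are not allowed"
    ])
    print(agent.call_tool("issue_refund", {"amount": 50}, lambda a: "refunded"))
    print(agent.call_tool("issue_refund", {"amount": 500}, lambda a: "refunded"))
    print(agent.recall("issue_refund"))
```

Checking the policy outside the model, at the point where the tool is invoked, means a misbehaving prompt cannot talk the agent past the rule, and logging denied attempts gives evaluators a record of where the agent pushed against its limits.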

Customers like MongoDB and Swisscom have already implemented AgentCore to scale multi-agent systems across operations, customer service, and DevOps.

AI Factories: Bring AWS to Your Data Center

AWS AI Factories now offer prepackaged high-performance infrastructure, including Trainium3 chips and NVIDIA GPUs, deployed in customers’ own data centers. This meets data sovereignty and compliance needs without sacrificing model performance. Saudi Arabia’s HUMAIN initiative is building a data center with up to 150,000 chips using this approach.

Final Thoughts: What It All Means for Developers and Enterprises

AWS re:Invent 2025 marks a pivotal moment in the evolution of cloud AI infrastructure and agentic computing. The three announcements (Frontier Agents, Trainium3, and Nova 2) collectively enable:

- Autonomous, reliable AI systems that can run for days without supervision

- Faster, cheaper model training and inference at enterprise scale

- Transparent, multimodal models with open training capabilities

Compared with peers such as OpenAI, Google Cloud, and Microsoft Azure, AWS offers a tightly integrated, vertically optimized ecosystem for building AI systems, from silicon to service.

Enterprises looking to deploy AI agents, automate workflows, or accelerate model training at scale now have more reasons than ever to choose AWS.

As the generative AI race accelerates, AWS isn’t just keeping pace; it’s setting the rules of the game.
