What Is the S1 AI Model? The Open-Source, Cost-Efficient Rival to OpenAI’s O1 Trained for Less Than $50

In the rapidly evolving AI landscape, the cost of training large-scale models has traditionally been a limiting factor. State-of-the-art models like OpenAI’s O1 reasoning model require vast computational resources, making them accessible only to well-funded research labs and tech giants. However, a team of AI researchers from Stanford and the University of Washington has introduced S1, an open-source reasoning model trained for under $50 in cloud compute credits—significantly challenging the industry’s assumptions about AI training costs.

What is the S1 AI Model?

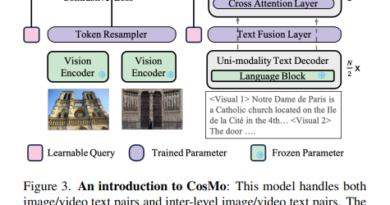

The S1 model is a 32B-parameter open-source large language model (LLM) focused on advanced reasoning tasks. Rather than relying on another round of large-scale training, S1 starts from a pretrained base model and adds test-time scaling, a technique that lets the model spend extra computation during inference, for example by reasoning for longer before it answers, only when a problem calls for it.
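The S1 paper calls its form of test-time scaling "budget forcing": if the model tries to stop reasoning too early, the end-of-thinking marker is suppressed and the word "Wait" is appended, and if it reasons for too long, the marker is forced. The sketch below illustrates only that control loop. The `toy_model` function, the `</think>` delimiter, and the token budgets are assumptions made for illustration and are not taken from the S1 code.

```python
from typing import Callable, List

END_OF_THINKING = "</think>"  # hypothetical delimiter chosen for this sketch
WAIT = "Wait"


def budget_forced_thinking(
    next_token: Callable[[List[str]], str],
    prompt: List[str],
    min_tokens: int,
    max_tokens: int,
) -> List[str]:
    """Generate a reasoning trace, extending it up to min_tokens and capping it at max_tokens."""
    thinking: List[str] = []
    while len(thinking) < max_tokens:
        token = next_token(prompt + thinking)
        if token == END_OF_THINKING:
            if len(thinking) >= min_tokens:
                break  # the model stopped and the minimum budget is spent
            # The model tried to stop too early: drop the delimiter and
            # append "Wait" so that it keeps reasoning.
            thinking.append(WAIT)
            continue
        thinking.append(token)
    # Either the model stopped on its own or the cap was reached: close the trace.
    return thinking + [END_OF_THINKING]


def toy_model(context: List[str]) -> str:
    """Stand-in for an LLM: it wants to stop after every two reasoning steps."""
    steps_since_restart = 0
    for tok in reversed(context):
        if tok in (WAIT, "Q"):
            break
        steps_since_restart += 1
    return END_OF_THINKING if steps_since_restart >= 2 else "step"


print(budget_forced_thinking(toy_model, ["Q"], min_tokens=5, max_tokens=8))
```

Raising `min_tokens` makes the toy model think for longer, and lowering `max_tokens` cuts it off sooner, which is the knob that trades inference compute for answer quality.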

This approach makes S1 a direct competitor to OpenAI’s O1 reasoning model: it breaks complex queries into smaller sub-questions before generating a response. Working through a problem step by step tends to improve logical consistency and accuracy on multi-step reasoning tasks.

For instance, if you ask S1 a real-world question like:

“What is the economic impact of replacing iPhones with Android tablets worldwide?”

Instead of responding immediately, S1 would break the problem into structured steps, such as:

- Estimating the number of active iPhone users

- Calculating the cost of producing Android tablets at scale

- Analyzing the effect on global supply chains

This method allows the model to verify its own responses and arrive at more accurate, well-reasoned conclusions—a capability that distinguishes it from conventional AI models.

How Was S1 Trained for Under $50?

One of the most striking aspects of the S1 model is its low training cost. Training an LLM from scratch can require millions of dollars’ worth of computing power. The S1 team avoided that expense by fine-tuning an existing model on a very small dataset, so the sub-$50 figure covers the fine-tuning run, not the pretraining of the base model.

1. Dataset: The S1K Training Set

The S1 model was fine-tuned on a curated dataset of just 1,000 reasoning questions, referred to as S1K. The questions were selected for quality, difficulty, and diversity, and they cover areas such as mathematics, logical reasoning, and scientific problem-solving.
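One way to picture this kind of curation is to filter a larger pool for well-formed, hard questions and then spread the picks across subject areas. The following is a minimal sketch under that assumption. The field names (`well_formed`, `difficulty`, `domain`), the 0.5 threshold, and the round-robin strategy are hypothetical and are not the researchers' actual pipeline.

```python
import random
from collections import defaultdict
from typing import Dict, List


def select_subset(pool: List[Dict], k: int, seed: int = 0) -> List[Dict]:
    """Pick k questions: drop easy or malformed ones, then spread picks across domains."""
    rng = random.Random(seed)
    # 1. Quality and difficulty filter (both fields are hypothetical).
    kept = [q for q in pool if q["well_formed"] and q["difficulty"] >= 0.5]
    # 2. Group the surviving questions by domain and shuffle within each group.
    by_domain: Dict[str, List[Dict]] = defaultdict(list)
    for q in kept:
        by_domain[q["domain"]].append(q)
    for questions in by_domain.values():
        rng.shuffle(questions)
    # 3. Round-robin over domains so that no single topic dominates.
    domains = sorted(by_domain)
    selected: List[Dict] = []
    while len(selected) < k and any(by_domain[d] for d in domains):
        for d in domains:
            if by_domain[d] and len(selected) < k:
                selected.append(by_domain[d].pop())
    return selected


# Toy pool of 300 synthetic questions across three domains.
pool = [
    {
        "id": i,
        "domain": ["math", "logic", "science"][i % 3],
        "difficulty": (i % 10) / 10,
        "well_formed": i % 7 != 0,
    }
    for i in range(300)
]
picked = select_subset(pool, k=30)
print(len(picked), sorted({q["domain"] for q in picked}))
```

Filtering before balancing keeps the difficulty bar the same for every domain, at the cost of returning fewer than `k` items when the filtered pool is too small.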

2. Transfer Learning from Pretrained Models

Instead of training from scratch, S1 leveraged a pre-existing language model—Qwen2.5-32B-Instruct. This allowed the researchers to build upon existing knowledge rather than starting from zero, significantly reducing compute costs while maintaining high accuracy.

3. Efficient Fine-Tuning Using Supervised Fine-Tuning (SFT)

The Supervised Fine-Tuning (SFT) process further refined S1’s reasoning capabilities. This step involved just 26 minutes of training on 16 NVIDIA H100 GPUs, an impressive feat given the complexity of LLM training. Unlike models requiring weeks or months of training, S1 achieved its final form in under 30 minutes—a clear testament to its efficiency.
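The numbers above allow a quick sanity check on the cost claim. The short calculation below uses only the figures stated in this article (16 GPUs, 26 minutes, a $50 ceiling) and derives the rental price per GPU-hour at which the run would cost exactly $50. It does not assume any particular cloud provider's pricing.

```python
GPUS = 16           # NVIDIA H100 GPUs, as stated in the article
MINUTES = 26        # wall-clock fine-tuning time, as stated in the article
BUDGET_USD = 50.0   # cost ceiling claimed in the article

# Total compute consumed, in GPU-hours.
gpu_hours = GPUS * MINUTES / 60

# Highest hourly price per GPU that still keeps the run within the budget.
max_rate = BUDGET_USD / gpu_hours

print(f"GPU-hours used: {gpu_hours:.2f}")
print(f"Break-even rental price: ${max_rate:.2f} per GPU-hour")
```

In other words, the run consumed just under 7 GPU-hours, so the "under $50" figure holds as long as an H100 rents for less than roughly $7.21 per hour.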

4. Learning from Google’s Gemini Flash Thinking

S1 also learned from Google’s Gemini 2.0 Flash Thinking Experimental model, which is designed to expose the step-by-step reasoning behind its responses. The researchers used reasoning traces and answers generated by Gemini for the S1K questions as fine-tuning targets, so S1 picked up a similar structured thinking process without extensive additional training.
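To make the idea concrete, here is a minimal sketch of how a question, a teacher model's reasoning trace, and its final answer could be joined into a single supervised fine-tuning target. The `DistilledExample` class, the `<think>` delimiters, and the `Question:`/`Answer:` layout are assumptions made for illustration. The actual S1 training template may differ.

```python
from dataclasses import dataclass

# Hypothetical delimiters for this sketch; the real template may differ.
THINK_OPEN = "<think>"
THINK_CLOSE = "</think>"


@dataclass
class DistilledExample:
    question: str
    reasoning: str  # step-by-step trace produced by the teacher model
    answer: str     # the teacher model's final answer


def to_training_text(ex: DistilledExample) -> str:
    """Join question, reasoning trace, and answer into one SFT target string."""
    return (
        f"Question: {ex.question.strip()}\n"
        f"{THINK_OPEN}\n{ex.reasoning.strip()}\n{THINK_CLOSE}\n"
        f"Answer: {ex.answer.strip()}"
    )


example = DistilledExample(
    question="What is 2 + 3?",
    reasoning="Adding 2 and 3 gives 5.",
    answer="5",
)
print(to_training_text(example))
```

Because the trace sits between explicit delimiters, the fine-tuned model learns to emit its reasoning before the answer, which is also what makes it possible to lengthen or shorten that reasoning at inference time.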

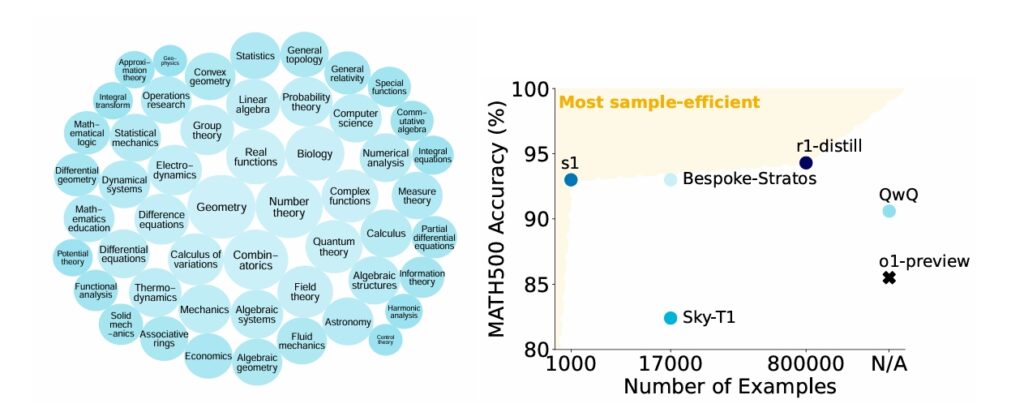

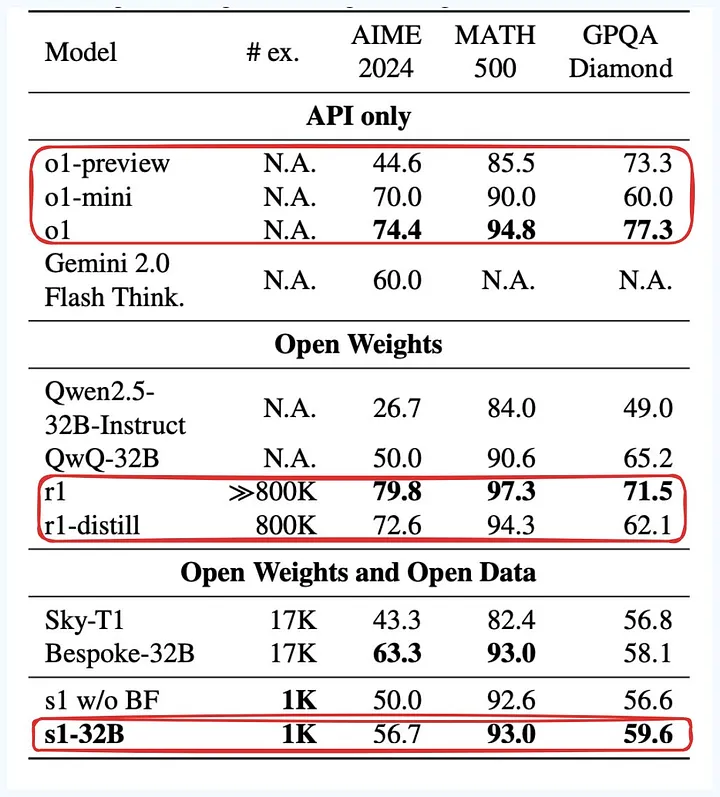

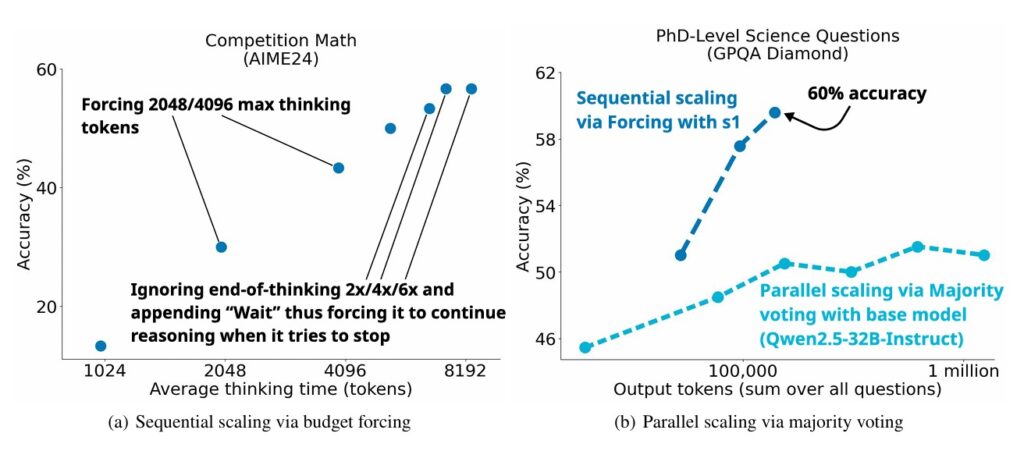

Performance Benchmarks: How Does S1 Compare to OpenAI’s O1?

Despite its minimal training cost, S1 outperforms several closed-source models on leading reasoning benchmarks, including:

- AIME24 (problems from the 2024 American Invitational Mathematics Examination)

- MATH500 (500 competition-level math problems)

- GPQA Diamond (graduate-level, “Google-proof” science questions)

Key Findings:

- S1 exceeded OpenAI’s O1 Preview model by up to 27% on competition math questions (MATH and AIME24).

- Unlike reinforcement learning-heavy models, S1 demonstrated robust performance with minimal training data.

These results suggest that S1’s test-time scaling approach is a viable alternative to methods that depend on far more training data and compute.

Why S1 Matters for AI Development

1. The Rise of Cost-Effective AI Models

S1 challenges the long-standing belief that building a powerful AI reasoning model requires an enormous compute budget. By demonstrating that an effective reasoning model can be fine-tuned from an open base model for less than $50, S1 paves the way for more accessible AI development worldwide.

2. Open-Source and Transparent Development

Unlike proprietary models like OpenAI’s O1, S1 is open-source, making its training process and dataset available to the AI community. This transparency encourages collaboration and further innovation, allowing researchers to improve and build upon S1’s capabilities.

3. The Future of Test-Time Scaling

The test-time scaling approach used in S1 could change how AI models are trained and deployed. Instead of relying solely on massive pretraining, future AI systems may allocate extra compute at inference time only for the problems that need it, improving cost efficiency and scalability.

Final Thoughts

The S1 AI model is a groundbreaking step toward making advanced AI reasoning more affordable and accessible. By leveraging a small but high-quality dataset, test-time scaling, and efficient fine-tuning, researchers have demonstrated that AI training doesn’t need to be expensive to be effective.

As AI development shifts towards efficiency and transparency, S1’s open-source methodology may set a new standard for the industry, challenging the dominance of proprietary models like OpenAI’s O1. With further refinements, S1 could inspire the next wave of reasoning models—offering powerful AI solutions without the excessive costs.

What are your thoughts on S1’s test-time scaling approach? Could this be the future of low-cost AI training?
