Mistral AI Launches Devstral 2507: Advancing Code-Centric Language Modeling for Developers

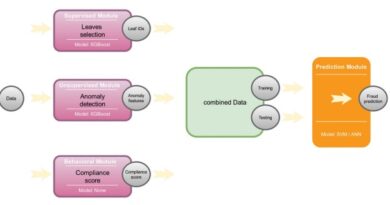

Mistral AI has once again positioned itself at the forefront of developer-focused language modeling with the release of Devstral 2507, a suite of large language models purpose-built for code-centric applications. Released in collaboration with All Hands AI, this latest update includes two distinct offerings: Devstral Small 1.1, an open-source model designed for local and embedded environments, and Devstral Medium 2507, an enterprise-grade model optimized for high-accuracy code generation and reasoning.

With the increasing adoption of AI agents and autonomous coding assistants, Mistral’s latest release aims to meet the growing demand for models that can handle complex codebases, long-context reasoning, and seamless integration with developer tools.

Devstral Small 1.1: Open Source and Locally Deployable

Devstral Small 1.1 is a versatile, developer-friendly LLM that offers local inference capabilities and open-source accessibility. Built on the Mistral-Small-3.1 architecture with approximately 24 billion parameters, it is fine-tuned to handle structured outputs such as XML, JSON, and function-calling formats. It supports a 128k token context window, making it suitable for reasoning over large codebases or multiple files at once.
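To get a feel for what a 128k-token window means in practice, the sketch below estimates whether a set of source files would fit in a single prompt. It is only a rough illustration: the four-characters-per-token ratio is a common heuristic rather than Devstral's actual tokenizer, the exact token limit behind "128k" is assumed to be 128,000, and the helper names and the output reserve are hypothetical.

```python
import os

CONTEXT_WINDOW_TOKENS = 128_000  # "128k" from the article; exact limit is an assumption
CHARS_PER_TOKEN = 4              # rough heuristic, not Devstral's real tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate a token count from the character count."""
    return len(text) // CHARS_PER_TOKEN + 1


def estimate_repo_tokens(root: str, extensions=(".py", ".js", ".ts")) -> int:
    """Sum approximate token counts for matching source files under `root`."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.endswith(extensions):
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as handle:
                    total += estimate_tokens(handle.read())
    return total


def fits_in_context(token_count: int, reserve_for_output: int = 8_000) -> bool:
    """Check whether a prompt of this size leaves room for the model's reply."""
    return token_count + reserve_for_output <= CONTEXT_WINDOW_TOKENS
```

Calling `fits_in_context(estimate_repo_tokens("path/to/repo"))` gives a quick yes/no before sending a whole project to the model. For an accurate count, the model's own tokenizer should replace the heuristic.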

Key Features:

- Open-source under Apache 2.0 license for commercial and research use

- Native support for structured outputs

- Seamless integration with agentic frameworks like OpenHands

- Optimal for tasks such as bug fixing, refactoring, multi-file code navigation, and testing

What sets this model apart is its balance between performance and usability. Developers can run it locally, for example as quantized GGUF weights in llama.cpp or LM Studio, or serve it with vLLM. This offers significant flexibility for those prioritizing privacy, cost-efficiency, or on-device inference.
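Local servers such as llama.cpp's `llama-server`, vLLM, and LM Studio can expose an OpenAI-compatible HTTP API, so a locally hosted model can be queried with nothing but the standard library. The sketch below assumes such a server is already running; the URL, port, and model name are placeholders that depend on how the server was started, not values from Mistral's documentation.

```python
import json
import urllib.request

# Assumptions: a local OpenAI-compatible server at this URL and a model
# registered under this name. Both are placeholders to adjust.
BASE_URL = "http://localhost:8080/v1"
MODEL_NAME = "devstral-small"


def build_chat_request(prompt: str, base_url: str = BASE_URL,
                       model: str = MODEL_NAME) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.0,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str) -> str:
    """Send the prompt and return the first reply (requires a running server)."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a server running, `ask_local_model("Explain what this function does: ...")` returns the model's reply as a string. Keeping request construction separate from sending makes the payload easy to inspect or log.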

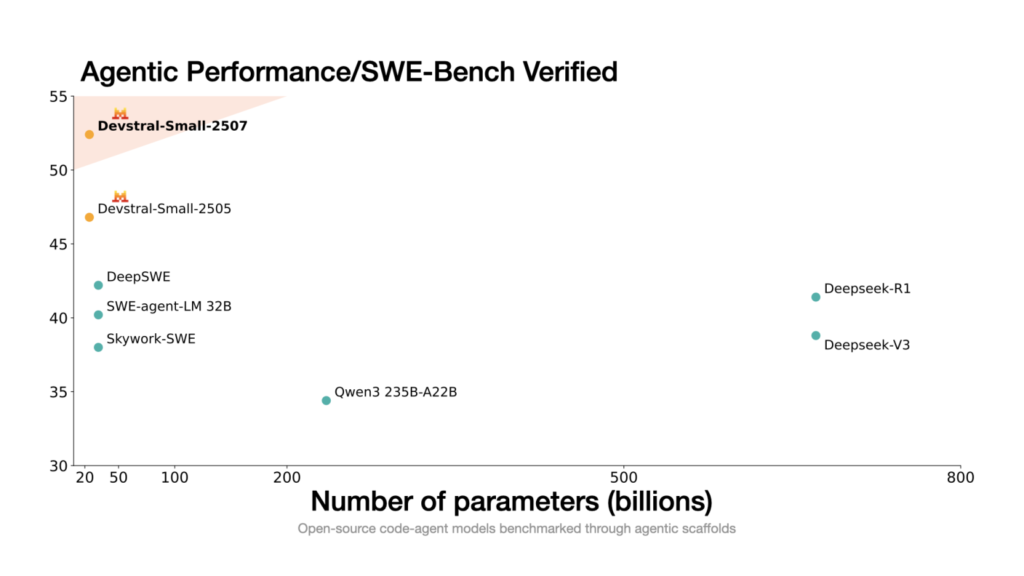

Performance Benchmarks

On SWE-Bench Verified, a widely used benchmark built from real GitHub issues, Devstral Small 1.1 scores 53.6%, a notable improvement over version 1.0. The evaluation was run with the OpenHands scaffold, an agent framework that is widely used for testing coding agents.
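As a rough illustration of what that percentage means, SWE-Bench Verified contains 500 tasks, so the score can be turned into an approximate count of resolved issues. The helper name below is hypothetical.

```python
SWE_BENCH_VERIFIED_TASKS = 500  # size of the Verified subset


def resolved_tasks(score_percent: float, total: int = SWE_BENCH_VERIFIED_TASKS) -> int:
    """Convert a percentage score into a number of resolved tasks."""
    return round(score_percent / 100 * total)


print(resolved_tasks(53.6))  # prints 268
```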

While not matching the scale of the largest proprietary models, its efficient inference and strong contextual understanding make it a powerful option for early prototyping, experimentation, or integration into IDE plugins and lightweight developer tools.

Deployment and Pricing

Devstral Small 1.1 can be run:

- Locally on GPUs like NVIDIA RTX 4090 or on Apple Silicon with at least 32GB RAM

- Via API, with pricing set at $0.10 per million input tokens and $0.30 per million output tokens

This dual deployment model offers teams flexibility in choosing the most appropriate inference strategy depending on budget, security, and integration requirements.

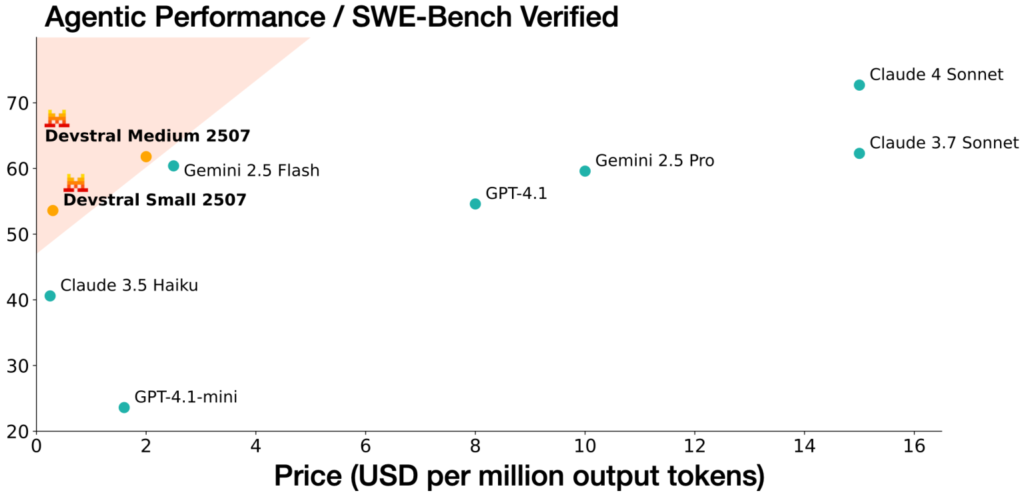

Devstral Medium 2507: High-Performance API Model

Designed for production environments where precision and large-scale reasoning are critical, Devstral Medium 2507 pushes the boundaries of code-centric LLM performance. Unlike its smaller sibling, this model is not open-source and is accessible only via API or enterprise deployment.

It scores 61.6% on SWE-Bench Verified, ahead of models such as Gemini 2.5 Pro and GPT-4.1 on that benchmark.

Ideal Use Cases:

- Refactoring across large monorepos

- Pull request triage

- Test generation and validation

- Enterprise-level coding copilots

Pricing:

- $0.40 per million input tokens

- $2.00 per million output tokens

- Enterprise fine-tuning available

Comparative Summary: Devstral Small vs. Devstral Medium

| Feature | Devstral Small 1.1 | Devstral Medium 2507 |
|---|---|---|
| SWE-Bench Verified Score | 53.6% | 61.6% |
| Open Source | Yes | No |
| Context Length | 128k tokens | 128k tokens |
| Input Token Cost | $0.10 / million tokens | $0.40 / million tokens |
| Output Token Cost | $0.30 / million tokens | $2.00 / million tokens |
| Best Use Cases | Local prototyping, agents | Production pipelines |

This table highlights the intended use case split between lightweight, developer-side integrations (Small) and high-accuracy backend services (Medium).
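To make the price difference concrete, the sketch below applies the per-million-token rates from the table to a workload. The workload itself (2,000 agent runs with 50,000 input and 4,000 output tokens each) is made up for illustration, and the class and variable names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_million: float   # USD per million input tokens
    output_per_million: float  # USD per million output tokens

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        """Return the API cost in USD for the given token counts."""
        total = (input_tokens * self.input_per_million
                 + output_tokens * self.output_per_million)
        return total / 1_000_000


# Rates taken from the comparison table.
DEVSTRAL_SMALL = Pricing(input_per_million=0.10, output_per_million=0.30)
DEVSTRAL_MEDIUM = Pricing(input_per_million=0.40, output_per_million=2.00)

# Hypothetical workload: 2,000 agent runs, 50k input + 4k output tokens each.
runs = 2_000
input_tokens = runs * 50_000   # 100 million
output_tokens = runs * 4_000   # 8 million

small_cost = DEVSTRAL_SMALL.cost(input_tokens, output_tokens)
medium_cost = DEVSTRAL_MEDIUM.cost(input_tokens, output_tokens)
print(f"Small:  ${small_cost:.2f}")   # Small:  $12.40
print(f"Medium: ${medium_cost:.2f}")  # Medium: $56.00
```

For this input-heavy workload Medium costs roughly 4.5 times as much as Small. The gap widens as the share of output tokens grows, since the output rate differs by a larger factor than the input rate.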

Ecosystem Compatibility: Designed for Developers

One of the standout strengths of the Devstral 2507 series is its compatibility with agentic workflows. Both models natively support XML output, structured reasoning, and function call generation. This allows them to plug directly into modern agent frameworks and developer tools, including:

- IDE extensions (VSCode, JetBrains)

- GitHub and GitLab bots for PR reviews

- Test generation and refactoring pipelines

- Documentation and summarization agents
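Function calling is what lets an agent framework turn model output into actions. The sketch below shows the general pattern: a tool is described with a JSON schema, the model replies with a JSON tool call, and the host program dispatches it to a local function. The schema follows the common OpenAI-style convention; the exact format Devstral expects or emits is an assumption here, and `run_tests`, `TOOL_REGISTRY`, and `dispatch_tool_call` are hypothetical names.

```python
import json

# OpenAI-style tool description; the exact schema Devstral expects is an assumption.
RUN_TESTS_TOOL = {
    "type": "function",
    "function": {
        "name": "run_tests",
        "description": "Run the test suite for a given path.",
        "parameters": {
            "type": "object",
            "properties": {"path": {"type": "string"}},
            "required": ["path"],
        },
    },
}


def run_tests(path: str) -> str:
    """Stand-in for a real test runner."""
    return f"ran tests in {path}"


# Maps tool names the model may call to local Python functions.
TOOL_REGISTRY = {"run_tests": run_tests}


def dispatch_tool_call(raw_call: str) -> str:
    """Parse a JSON tool call and execute the matching local function."""
    call = json.loads(raw_call)
    name = call["name"]
    if name not in TOOL_REGISTRY:
        raise ValueError(f"unknown tool: {name}")
    arguments = call.get("arguments", {})
    if isinstance(arguments, str):  # some APIs encode arguments as a JSON string
        arguments = json.loads(arguments)
    return TOOL_REGISTRY[name](**arguments)


# Example tool call as a model might emit it (hand-written here).
example = '{"name": "run_tests", "arguments": {"path": "tests/unit"}}'
print(dispatch_tool_call(example))  # prints "ran tests in tests/unit"
```

Rejecting unknown tool names before execution keeps the model from triggering anything outside the registry, which matters once the tools have side effects such as editing files or opening pull requests.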

Mistral’s investment in developer-centric tooling is evident in its support for modular integration, model quantization, and scalable APIs.

Conclusion

The release of Devstral 2507 signals Mistral AI’s strategic focus on delivering practical, cost-effective solutions for developers. With Devstral Small offering open-source flexibility and Devstral Medium delivering enterprise-grade reasoning performance, the suite is well-positioned to support a wide range of use cases—from local agent development to full-scale production code generation.

In an increasingly competitive AI landscape, the Devstral series stands out not through sheer parameter count, but through thoughtful optimization for real-world developer workflows.

Whether you’re building an AI-enhanced IDE plugin, scaling automated code review systems, or embedding intelligent agents in internal tools, Devstral 2507 provides a compelling foundation for the next wave of developer productivity tools.

Devstral Small model weights are available on Hugging Face. Devstral Medium will also be available on Mistral Code for enterprise customers and through the fine-tuning API. All credit for this research goes to the researchers of this project.