Enhancing Graph Neural Networks for Heterophilic Graphs: Introducing Directional Graph Attention Networks (DGAT)

Graph Neural Networks (GNNs) have become a standard tool for graph representation learning, letting researchers analyze and learn from complex networked data. They have proven effective in domains including social networks, molecular structures, and communication networks. However, traditional GNNs struggle on heterophilic graphs, where edges tend to connect dissimilar nodes. To address this limitation, researchers from McGill University and the Mila-Quebec Artificial Intelligence Institute have introduced Directional Graph Attention Networks (DGAT), a framework designed to improve GNN performance on heterophilic graphs.

Understanding the Limitations of Traditional GNNs

GNNs capture relationships within graphs by learning directly from non-Euclidean, graph-structured data. Graph Attention Networks (GATs) have become popular because of their attention mechanism: for each node, a GAT learns a separate weight for every neighbor, so the model can focus on the most relevant neighbors when it aggregates information.
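As a rough illustration of the attention mechanism just described, the sketch below computes single-head attention coefficients in the style of the original GAT formulation: each node's features are projected by a weight matrix `W`, an attention vector `a` scores each (node, neighbor) pair, and a softmax over the neighborhood (plus a self-loop) turns the scores into weights. It is plain Python for readability; the function names and toy inputs are hypothetical, and practical implementations vectorize this and use several heads.

```python
import math
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, h: Vector) -> Vector:
    """Linear projection W @ h of one node's feature vector."""
    return [sum(w * x for w, x in zip(row, h)) for row in W]


def leaky_relu(x: float, slope: float = 0.2) -> float:
    """LeakyReLU with the 0.2 negative slope used in the original GAT paper."""
    return x if x > 0 else slope * x


def gat_attention(
    features: Dict[int, Vector],
    neighbors: Dict[int, List[int]],
    W: Matrix,
    a: Vector,
) -> Dict[int, Dict[int, float]]:
    """Return alpha[i][j], the attention node i pays to each j in N(i) plus i itself.

    `a` must have length 2 * len(W): it scores the concatenation [W h_i || W h_j].
    """
    z = {i: matvec(W, h) for i, h in features.items()}
    alpha: Dict[int, Dict[int, float]] = {}
    for i, nbrs in neighbors.items():
        scope = [i] + [j for j in nbrs if j != i]  # add a self-loop, as GAT does
        # Unnormalized score e_ij = LeakyReLU(a^T [z_i || z_j]).
        scores = {
            j: leaky_relu(sum(ak * v for ak, v in zip(a, z[i] + z[j])))
            for j in scope
        }
        top = max(scores.values())  # subtract the max for a numerically stable softmax
        exps = {j: math.exp(s - top) for j, s in scores.items()}
        total = sum(exps.values())
        alpha[i] = {j: e / total for j, e in exps.items()}
    return alpha


if __name__ == "__main__":
    feats = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
    nbrs = {0: [1, 2], 1: [0], 2: [0]}
    W = [[1.0, 0.0], [0.0, 1.0]]
    a = [0.5, -0.5, 0.5, -0.5]
    for node, weights in gat_attention(feats, nbrs, W, a).items():
        print(node, {j: round(w, 3) for j, w in weights.items()})
```

Each node's coefficients sum to 1, and neighbors with higher scores receive more weight in the aggregation step that follows.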


However, traditional GATs are designed around homophily: they perform well when edges tend to connect similar nodes. This assumption limits them in settings where understanding connections between dissimilar nodes is crucial. In heterophilic graphs, traditional GATs struggle to capture long-range dependencies and the global structure of the graph, so their performance on tasks that depend on such information drops significantly.
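One common way to place a labeled graph on the homophily/heterophily spectrum is the edge homophily ratio: the share of edges whose endpoints have the same class label. The article does not define a measure itself, so treat the function below as an illustrative sketch; `edge_homophily` is a hypothetical helper name.

```python
from typing import Dict, List, Tuple


def edge_homophily(edges: List[Tuple[int, int]], labels: Dict[int, int]) -> float:
    """Fraction of edges whose two endpoints share a class label.

    Values near 1 indicate a homophilic graph; values near 0 a heterophilic one.
    """
    if not edges:
        raise ValueError("edge homophily is undefined for a graph with no edges")
    same = sum(1 for u, v in edges if labels[u] == labels[v])
    return same / len(edges)


if __name__ == "__main__":
    labels = {0: 0, 1: 1, 2: 0, 3: 1}
    cycle = [(0, 1), (1, 2), (2, 3), (3, 0)]  # every edge joins different labels
    pairs = [(0, 2), (1, 3)]  # every edge joins equal labels
    print(edge_homophily(cycle, labels), edge_homophily(pairs, labels))  # prints 0.0 1.0
```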

Introducing Directional Graph Attention Networks (DGAT)

To address the limitations of traditional GATs in heterophilic graphs, researchers from McGill University and the Mila-Quebec Artificial Intelligence Institute have developed DGAT. The framework extends GATs by incorporating global directional insights and feature-based attention mechanisms.

DGAT introduces a new class of Laplacian matrices, which enables a more controlled diffusion process. This control allows the model to effectively prune noisy connections and add beneficial ones, enhancing the network’s ability to learn from long-range neighborhood information.
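The article does not spell out DGAT's new family of Laplacian matrices, so the sketch below uses the standard symmetric normalized Laplacian as a stand-in to show what "diffusion" on a graph means. Each explicit Euler step of the heat equation dx/dt = -Lx mixes a node's value only with its direct neighbors', so the step size and number of steps control how far a signal spreads. `normalized_laplacian` and `diffuse` are hypothetical helpers, not DGAT's code.

```python
import math
from typing import List

Matrix = List[List[float]]


def normalized_laplacian(adj: Matrix) -> Matrix:
    """Symmetric normalized Laplacian L = I - D^{-1/2} A D^{-1/2}."""
    n = len(adj)
    deg = [sum(row) for row in adj]
    if any(d == 0 for d in deg):
        raise ValueError("this sketch assumes every node has at least one edge")
    inv_sqrt = [1.0 / math.sqrt(d) for d in deg]
    return [
        [(1.0 if i == j else 0.0) - inv_sqrt[i] * adj[i][j] * inv_sqrt[j] for j in range(n)]
        for i in range(n)
    ]


def diffuse(L: Matrix, x: List[float], step: float, n_steps: int) -> List[float]:
    """Run explicit Euler steps of heat diffusion dx/dt = -L x.

    L is nonzero only on the diagonal and on edges, so n_steps bounds how many hops
    a signal can travel. step <= 1 keeps the update stable, because the eigenvalues
    of the normalized Laplacian lie in [0, 2].
    """
    n = len(x)
    for _ in range(n_steps):
        lx = [sum(L[i][j] * x[j] for j in range(n)) for i in range(n)]
        x = [xi - step * li for xi, li in zip(x, lx)]
    return x


if __name__ == "__main__":
    path = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]  # path graph 0 - 1 - 2
    L = normalized_laplacian(path)
    spike = [1.0, 0.0, 0.0]
    print(diffuse(L, spike, step=0.5, n_steps=1))  # node 2 is untouched after one step
    print(diffuse(L, spike, step=0.5, n_steps=2))  # the signal now reaches node 2
```

Changing the operator itself, rather than only the step count, is the kind of lever a new Laplacian family would provide; this sketch only shows the baseline behavior.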

DGAT's topology-guided neighbor pruning and edge addition strategies are particularly noteworthy. DGAT uses the spectral properties of the proposed Laplacian matrices to selectively refine the graph's structure, enabling more efficient message passing. It also introduces a global directional attention mechanism that uses topological information to help the model focus on the most relevant parts of the graph. Together, these changes to how the graph's structure and attention are handled form the core of DGAT's contribution to graph neural networks.
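The article does not specify how DGAT decides which edges to prune or add, so the sketch below is only a hypothetical illustration of topology-guided rewiring in general. It swaps in a classic spectral tool, the Fiedler vector of the standard normalized Laplacian (not DGAT's proposed Laplacian family), as a one-dimensional coordinate for each node. It then drops edges whose endpoints lie far apart in that coordinate and adds edges between non-adjacent nodes that lie close together. `fiedler_vector`, `refine_edges`, and the thresholds `prune_above` and `add_below` are illustrative names, not the paper's API.

```python
import math
from typing import List, Set, Tuple

Edge = Tuple[int, int]


def fiedler_vector(n: int, edges: List[Edge], iters: int = 500) -> List[float]:
    """Approximate Fiedler vector f = D^{-1/2} v of the normalized Laplacian.

    v is the eigenvector for the second-smallest eigenvalue of
    L_sym = I - D^{-1/2} A D^{-1/2}. Assumes a connected graph with n >= 2 nodes.
    The result is scaled so that max |f_i| = 1; its sign is arbitrary.
    """
    nbrs: List[List[int]] = [[] for _ in range(n)]
    for u, v in edges:
        nbrs[u].append(v)
        nbrs[v].append(u)
    deg = [len(adj) for adj in nbrs]
    if min(deg) == 0:
        raise ValueError("this sketch assumes every node has at least one edge")

    def normalize(x: List[float]) -> List[float]:
        s = math.sqrt(sum(t * t for t in x))
        return [t / s for t in x]

    # The eigenvector of L_sym for eigenvalue 0 is proportional to D^{1/2} 1.
    trivial = normalize([math.sqrt(d) for d in deg])

    def project_out(x: List[float]) -> List[float]:
        dot = sum(p * q for p, q in zip(x, trivial))
        return [p - dot * q for p, q in zip(x, trivial)]

    # Power iteration on M = 2I - L_sym = I + D^{-1/2} A D^{-1/2}. Its eigenvalues are
    # 2 - lambda_k >= 0, so its top eigenvector orthogonal to `trivial` is the Fiedler
    # vector. The deterministic start is for reproducibility; a random start is safer.
    x = normalize(project_out([float(i) for i in range(n)]))
    for _ in range(iters):
        y = [x[i] + sum(x[j] / math.sqrt(deg[i] * deg[j]) for j in nbrs[i]) for i in range(n)]
        x = normalize(project_out(y))
    f = [x[i] / math.sqrt(deg[i]) for i in range(n)]
    scale = max(abs(t) for t in f)
    return [t / scale for t in f]


def refine_edges(
    n: int, edges: List[Edge], f: List[float], prune_above: float, add_below: float
) -> Set[Edge]:
    """Hypothetical topology-guided rewiring driven by a spectral coordinate f.

    Drops existing edges whose endpoints differ in f by more than prune_above, and
    adds missing edges between nodes whose f values differ by less than add_below.
    """
    original = {(min(u, v), max(u, v)) for u, v in edges}
    kept = {(u, v) for u, v in original if abs(f[u] - f[v]) <= prune_above}
    added = {
        (u, v)
        for u in range(n)
        for v in range(u + 1, n)
        if (u, v) not in original and abs(f[u] - f[v]) < add_below
    }
    return kept | added


if __name__ == "__main__":
    # Two triangles {0, 1, 2} and {3, 4, 5} joined by the bridge edge (2, 3).
    edges = [(0, 1), (1, 2), (0, 2), (2, 3), (3, 4), (4, 5), (3, 5)]
    f = fiedler_vector(6, edges)
    print([round(t, 3) for t in f])
    print(sorted(refine_edges(6, edges, f, prune_above=1.0, add_below=0.1)))
```

On the two-triangle example the Fiedler vector places the triangles on opposite sides of zero, so the bridge `(2, 3)` is the edge that gets pruned. Power iteration keeps the sketch dependency-free; for real graphs a sparse eigensolver would be the usual choice.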

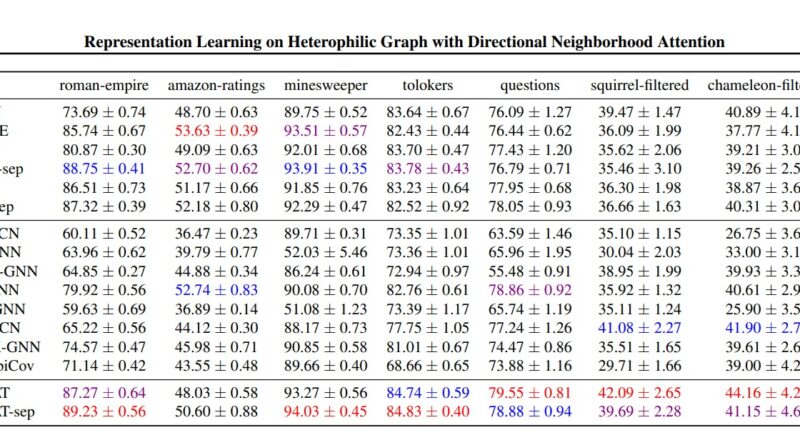

Empirical Evaluations and Performance

In empirical evaluations, DGAT performed strongly across a range of benchmarks, particularly on heterophilic graphs. The research team reports that DGAT outperforms traditional GAT models and other state-of-the-art methods on several node classification tasks, with clear improvements on six of seven real-world benchmark datasets. These results highlight its practical effectiveness for graph representation learning in heterophilic settings.

These results support DGAT's effectiveness at capturing long-range dependencies and global structure in heterophilic graphs. By combining global directional insights with feature-based attention, DGAT lets GNNs exploit connections between dissimilar nodes and perform better on tasks where that information is vital for accurate analysis and learning.

Conclusion

DGAT is a promising tool for graph representation learning, narrowing the gap between the theoretical potential of GNNs and their practical use on heterophilic graphs. By addressing the limitations of traditional GNNs in this setting, DGAT helps researchers and practitioners extract valuable insights from complex networked data.

The Directional Graph Attention Networks (DGAT) framework, introduced by researchers from McGill University and the Mila-Quebec Artificial Intelligence Institute, enhances the capabilities of Graph Attention Networks (GATs) by incorporating global directional insights and feature-based attention mechanisms. Through a new class of Laplacian matrices and topology-guided neighbor pruning and edge addition, DGAT significantly improves the ability of GNNs to capture long-range dependencies and global structures in heterophilic graphs.

The empirical evaluations of DGAT demonstrate its superior performance across various benchmarks, outperforming traditional GAT models and other state-of-the-art methods in node classification tasks. DGAT’s practical effectiveness in enhancing graph representation learning in heterophilic contexts makes it a promising tool for researchers and practitioners in diverse domains.

As an addition to the graph neural network toolkit, DGAT could improve the analysis of complex networked data in fields ranging from social networks to molecular structures. By accounting for the directionality and diversity of connections within heterophilic graphs, DGAT paves the way for more accurate and comprehensive analysis of real-world networks.

Check out the Paper. All credit for this research goes to the researchers of this project.
