NVIDIA AI Introduces ‘garak’: The LLM Vulnerability Scanner for Enhanced Security in AI Applications

As the adoption of Large Language Models (LLMs) continues to expand across industries, so too does the need for robust security frameworks to safeguard these powerful AI systems. LLMs have proven their worth in generating contextually accurate and human-like text, making them indispensable in applications such as virtual assistants, content generation, and customer support. However, they are not without vulnerabilities. Issues such as hallucinations, data leakage, prompt injections, misinformation, toxicity, and jailbreak scenarios pose significant risks to their reliability and security.

In response to these challenges, NVIDIA has launched ‘garak’, a pioneering vulnerability scanner specifically designed to perform AI red-teaming and assess LLM vulnerabilities. This tool provides an automated, comprehensive approach to identifying, evaluating, and mitigating the risks associated with deploying LLMs in real-world applications.

The Growing Need for LLM Security

Understanding LLM Vulnerabilities

Large Language Models, while impressive in their capabilities, are prone to certain weaknesses:

- Hallucinations: Instances where an LLM generates false or misleading information.

- Prompt Injection Attacks: Exploits that manipulate the input prompt to cause unintended behavior.

- Data Leakage: Unintentional exposure of sensitive information present in the model’s training data.

- Misinformation: Generating inaccurate or harmful content that can damage trust.

- Jailbreaks: Methods used to bypass restrictions, enabling outputs that violate ethical or operational guidelines.

- Toxicity: Responses containing offensive, biased, or harmful language.

These vulnerabilities can lead to reputational damage, financial losses, and potential harm to users, emphasizing the critical need for proactive security measures.

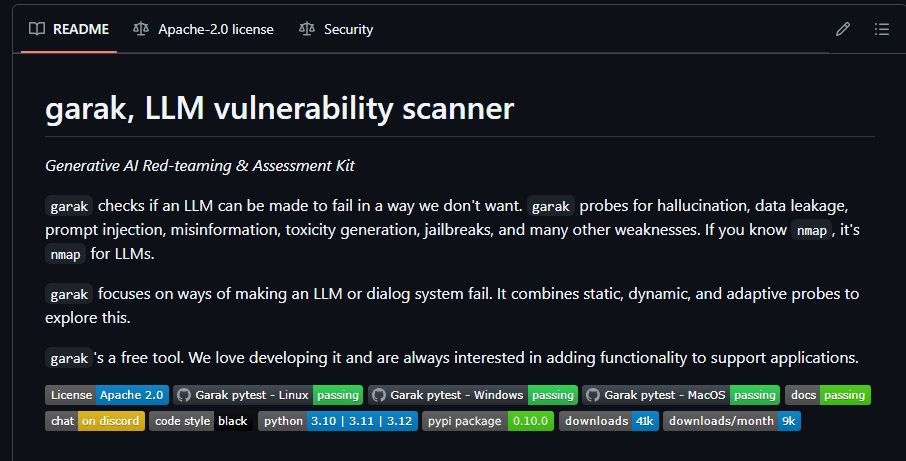

What Is Garak?

Garak, short for Generative AI Red-teaming and Assessment Kit, is NVIDIA’s response to the pressing need for a robust LLM vulnerability scanning tool. Unlike traditional manual testing approaches, Garak automates the vulnerability assessment process and provides actionable insights to mitigate risks. This framework is built to probe LLMs for vulnerabilities, classify issues based on severity, and recommend strategies to enhance the resilience of the models.

Key Features of Garak

1. Comprehensive Vulnerability Detection

Garak scans LLMs for a wide range of vulnerabilities, including:

- Hallucinations

- Data leakage

- Prompt injection attacks

- Toxicity and offensive content

- Misinformation

- Jailbreak scenarios

This breadth of coverage ensures that even subtle or hidden issues are detected and addressed.

2. Multi-Layered Assessment Framework

The tool employs a three-pronged methodology:

- Static Analysis: Examines the underlying architecture and training data of the model.

- Dynamic Analysis: Simulates real-world interactions using diverse prompts to uncover behavioral weaknesses.

- Adaptive Testing: Iteratively refines testing based on findings to uncover less obvious vulnerabilities.

3. Severity-Based Classification

Identified vulnerabilities are categorized based on their severity, exploitability, and potential impact. This structured approach helps prioritize mitigation efforts, enabling developers to address the most critical issues first.

4. Automated Recommendations

Garak provides actionable recommendations tailored to the specific vulnerabilities detected. For instance:

- Refining prompts to prevent malicious inputs.

- Retraining models to strengthen resilience.

- Implementing output filters to block inappropriate or harmful responses.

5. User-Friendly Architecture

Garak’s modular architecture consists of:

- Generator: Facilitates interaction with the LLM.

- Prober: Crafts and executes test cases.

- Analyzer: Evaluates the responses and identifies vulnerabilities.

- Reporter: Compiles findings and suggests remediation steps in a detailed report.

How Garak Stands Out

Comparison with Existing Methods

Traditional approaches like manual red-teaming or adversarial testing are often labor-intensive, require domain expertise, and lack scalability. Garak overcomes these limitations with its automated, systematic, and adaptable design.

| Feature | Garak | Traditional Methods |

|---|---|---|

| Automation | Fully automated testing and reporting | Manual and resource-intensive |

| Coverage | Comprehensive vulnerability scanning | Limited to specific attack vectors |

| Scalability | Easily scales across multiple models | Difficult to scale |

| Expertise Required | Minimal expertise required | Requires specialized knowledge |

Deployment Flexibility

Garak is available as an open-source tool, making it accessible to organizations of all sizes. Its flexible deployment options allow it to integrate seamlessly into existing workflows, whether on-premises or in cloud environments.

Real-World Applications

1. Enterprise Security

Organizations leveraging LLMs for customer interactions can use Garak to ensure their systems are robust against potential exploits, thereby maintaining customer trust.

2. Content Moderation

By identifying and mitigating issues like misinformation and toxicity, Garak helps organizations deploy LLMs that align with ethical guidelines and regulatory requirements.

3. Research and Development

Garak supports AI researchers in building more resilient models by providing insights into potential weaknesses and areas for improvement.

4. Education and Training

The tool is a valuable resource for training AI practitioners, providing hands-on experience in LLM vulnerability assessment and mitigation.

Impact of Garak on LLM Security

Garak represents a significant leap forward in the field of AI security. By automating vulnerability assessments and providing actionable insights, it empowers organizations to deploy LLMs with greater confidence. Key benefits include:

- Enhanced reliability and trustworthiness of AI applications.

- Reduced risk of reputational damage and financial losses.

- Faster deployment cycles due to streamlined vulnerability testing.

How to Get Started with Garak

Organizations and developers can begin using Garak by visiting its GitHub repository. The repository includes:

- Comprehensive documentation for installation and configuration.

- Pre-built test cases for common vulnerabilities.

- Guidelines for customizing the tool to specific applications.

Conclusion

NVIDIA’s garak sets a new standard in LLM vulnerability assessment, offering a comprehensive, automated, and user-friendly solution to the challenges faced by organizations deploying large language models. By addressing vulnerabilities such as hallucinations, data leakage, and prompt injections, Garak ensures that LLMs operate securely and effectively.

Whether you’re a developer, researcher, or business leader, Garak provides the tools needed to safeguard AI applications, reduce risks, and enhance user trust. With its robust capabilities and adaptability, Garak is poised to become an essential component of AI security in the era of advanced LLMs.

Do you have an incredible AI tool or app? Let’s make it shine! Contact us now to get featured and reach a wider audience.

Explore 3800+ latest AI tools at AI Toolhouse 🚀. Don’t forget to follow us on LinkedIn. Do join our active AI community on Discord.

Read our other blogs on AI Agents 😁

If you like our work, you will love our Newsletter 📰