Quantum Machine Learning and Variational Quantum Circuits: Enhancing Diffusion-Based Image Generation Models

In recent years, quantum computing and machine learning have each advanced rapidly. Researchers at LMU Munich have gone a step further, exploring how quantum machine learning and variational quantum circuits can improve diffusion-based image generation models [1].

Diffusion-based image generation models have emerged as a powerful tool in computer vision and graphics, supporting tasks such as synthetic data creation and serving as components of multi-modal models. However, these classical models sample slowly and require extensive parameter tuning. Quantum machine learning (QML) is a promising way to address both problems, because it applies the principles of quantum mechanics to make machine learning tasks more efficient.

Quantum Denoising Diffusion Probabilistic Models (QDDPM)

Before turning to the LMU Munich research, it is worth noting that QDDPM, a model by Dohun Kim et al., is so far the only notable quantum diffusion model for image generation [5]. QDDPM uses a single-circuit design with timestep-wise and shared layers, and it is space-efficient, requiring only log2(pixels) qubits. It mitigates the vanishing gradient issue by constraining circuit depth, and it uses special unitary (SU) gates for entanglement. However, although the images it generates are recognizable, they lack the detail of the original images [8].
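To give a feel for the log2(pixels) scaling, here is a minimal sketch that computes how many qubits would be needed to hold one value per basis state. The function name and the example image sizes are our own illustration and are not taken from the QDDPM paper.

```python
def qubits_for_pixels(height: int, width: int, channels: int = 1) -> int:
    """Smallest n such that 2**n >= number of pixel values (hypothetical helper)."""
    n_values = height * width * channels
    if n_values < 1:
        raise ValueError("image must contain at least one value")
    # (n - 1).bit_length() equals ceil(log2(n)) for n >= 1, without float rounding.
    return max(1, (n_values - 1).bit_length())


# Example sizes chosen for illustration only.
small_gray = qubits_for_pixels(8, 8)        # 64 values
mnist_sized = qubits_for_pixels(28, 28)     # 784 values, padded up to 1024
cifar_sized = qubits_for_pixels(32, 32, 3)  # 3072 values, padded up to 4096
```

The point of the sketch is only the logarithmic growth: doubling the number of pixels adds a single qubit.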

Introducing Q-Dense and Quantum U-Net

To address the limitations of existing quantum diffusion models, researchers at LMU Munich have introduced two novel architectures: Q-Dense and Quantum U-Net (QU-Net). Both are designed to improve diffusion-based image generation [1].

The Q-Dense model uses a dense quantum circuit (DQC) with extensive entanglement among qubits. It takes its input through amplitude embedding and its class guidance through angle embedding, giving a trainable parameter count of #layers × 3 × #qubits [1].
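The parameter formula and the idea of amplitude embedding can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the authors' implementation: we assume the factor of 3 corresponds to three trainable rotation angles per qubit per layer, and both helper names are hypothetical.

```python
import numpy as np


def dense_circuit_param_count(n_layers: int, n_qubits: int, angles_per_qubit: int = 3) -> int:
    """#layers x 3 x #qubits, assuming three rotation angles per qubit per layer."""
    return n_layers * angles_per_qubit * n_qubits


def amplitude_embed(pixels, n_qubits: int) -> np.ndarray:
    """Map pixel values to a normalized state vector of length 2**n_qubits."""
    flat = np.asarray(pixels, dtype=float).ravel()
    dim = 2 ** n_qubits
    if flat.size > dim:
        raise ValueError("too many values for the given number of qubits")
    padded = np.zeros(dim)
    padded[: flat.size] = flat          # zero-pad up to the state dimension
    norm = np.linalg.norm(padded)
    if norm == 0:
        raise ValueError("cannot embed an all-zero image")
    return padded / norm                # amplitudes must have unit L2 norm


# The MNIST configuration mentioned in this article: 47 layers on 7 qubits.
n_params = dense_circuit_param_count(47, 7)   # 47 * 3 * 7 = 987

# A made-up 8x8 image, used only to show the normalization.
state = amplitude_embed(np.arange(1, 65).reshape(8, 8), n_qubits=6)
```

With 47 layers and 7 qubits the formula gives 987 parameters, which is consistent with the comparison against classical networks of roughly 1000 parameters in the experiments below.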

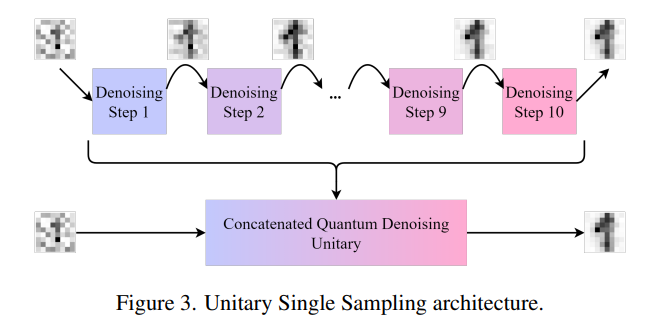

QU-Net, by contrast, adapts the classical U-Net architecture to the quantum setting and uses mask encoding for labels. In addition, the “Unitary Single Sampling” approach creates synthetic images in a single step by combining the iterative diffusion process into one unitary matrix U [1].
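The linear algebra behind collapsing an iterative process into one unitary can be shown with a small NumPy sketch. It assumes each step acts as a unitary matrix on the state; random unitaries stand in for trained circuit steps, and all names are hypothetical. It illustrates only the principle, not the procedure used in the paper.

```python
import numpy as np


def random_unitary(dim: int, rng: np.random.Generator) -> np.ndarray:
    """Random unitary from the QR decomposition of a complex Gaussian matrix."""
    z = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    q, _ = np.linalg.qr(z)
    return q


def combine_steps(step_unitaries) -> np.ndarray:
    """Multiply per-step unitaries into one matrix U = U_T ... U_2 U_1."""
    dim = step_unitaries[0].shape[0]
    combined = np.eye(dim, dtype=complex)
    for u in step_unitaries:
        combined = u @ combined         # later steps multiply on the left
    return combined


rng = np.random.default_rng(0)
dim = 2 ** 3                            # 3 qubits, chosen for illustration
steps = [random_unitary(dim, rng) for _ in range(5)]

state = np.zeros(dim, dtype=complex)
state[0] = 1.0                          # start in |000>

# Iterative application, one step at a time.
iterative = state
for u in steps:
    iterative = u @ iterative

# Single application of the combined matrix.
U = combine_steps(steps)
single = U @ state
```

Because a product of unitary matrices is itself unitary, the combined U is a valid quantum operation and yields the same final state as applying the steps one after another.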

Experimental Evaluations

To assess the proposed quantum models, the researchers ran experiments on MNIST, Fashion MNIST, and CIFAR10, three datasets that are widely used as computer vision benchmarks.

In the MNIST Digits experiments, the Q-Dense model with 47 layers and 7 qubits outperformed classical networks with 1000 parameters, particularly with τ (time constant) values ranging from 3 to 5. It achieved Fréchet Inception Distance (FID) scores around 100, approximately 20 points better than classical models. For inpainting tasks, the DQC produced consistent samples with minor artifacts. However, the Mean Squared Error (MSE) scores of quantum models were only marginally lower than those of classical networks with twice as many parameters [1].

Overall, the experimental results showed that the proposed quantum models achieve effective knowledge transfer and satisfactory inpainting without being trained specifically for these tasks. This highlights the potential of quantum diffusion models to accelerate image generation while bridging the gap between quantum diffusion and classical consistency models [1].

Conclusion

The integration of quantum machine learning and variational quantum circuits is a promising advance for diffusion-based image generation models. The LMU Munich research introduces the Q-Dense and QU-Net architectures and shows that quantum models have the potential to surpass classical approaches on image generation tasks.

By leveraging the principles of quantum mechanics, these models target the limitations of traditional diffusion models, such as slow sampling speed and heavy parameter tuning requirements. The experimental evaluations demonstrate the effectiveness of the proposed quantum models in image generation and their ability to bridge the gap between quantum diffusion and classical consistency models.

As quantum computing continues to evolve, the integration of quantum machine learning and variational quantum circuits holds tremendous potential for enhancing various machine learning tasks and pushing the boundaries of what is possible in image generation and beyond.
