Safe Reinforcement Learning: Ensuring Safety in AI Decision-Making

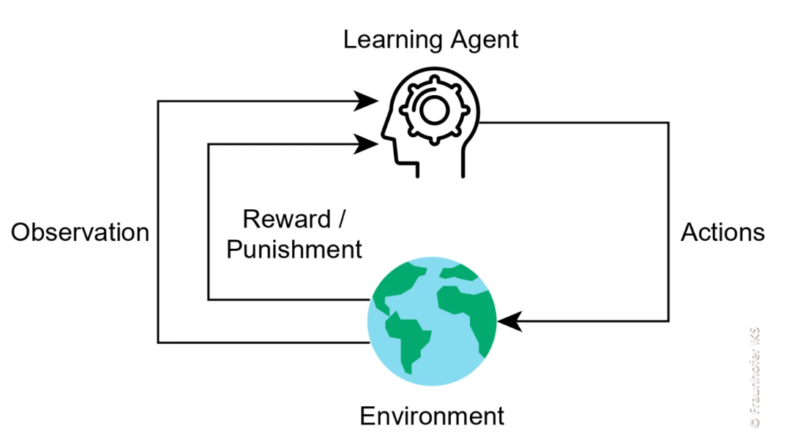

Reinforcement Learning (RL) has revolutionized the field of artificial intelligence by enabling intelligent agents to learn and make decisions without explicit programming. RL algorithms have achieved remarkable success in a wide range of applications, including game playing, robotics, and autonomous systems. However, when it comes to deploying RL in real-world scenarios, safety becomes a critical concern. This has led to the emergence of Safe Reinforcement Learning (Safe RL), which focuses on ensuring that RL algorithms operate within predefined safety constraints while optimizing performance.

The Need for Safe Reinforcement Learning

Traditional RL algorithms prioritize maximizing rewards without considering the potential risks or consequences of their actions. While this approach works well in controlled environments such as games, it can lead to dangerous or catastrophic outcomes when deployed in real-world systems. For example, an RL algorithm controlling an autonomous vehicle may learn to drive aggressively to achieve faster arrival times, disregarding traffic rules and endangering the safety of passengers and pedestrians.

To address these safety concerns, Safe RL aims to develop algorithms and techniques that allow RL agents to learn and make decisions while avoiding actions that could lead to undesirable outcomes. By incorporating safety constraints and considerations into the learning process, Safe RL ensures that intelligent agents operate in a controlled and safe manner.

Key Features of Safe Reinforcement Learning

Safe RL leverages various features and techniques to ensure safety while optimizing performance. Some of the key features of Safe RL include:

1. Safety Constraints

Safe RL introduces safety constraints that define the boundaries within which the RL agent should operate. These constraints can be defined based on domain-specific requirements or safety standards. By incorporating safety constraints into the learning process, RL agents learn to avoid actions that violate these constraints, thereby ensuring safety.
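One common way to fold a constraint into learning, which this article does not name specifically, is a Lagrangian penalty: the agent's return is reduced by a multiplier times the safety cost it incurred, and the multiplier rises whenever the cost exceeds its budget. The sketch below is a minimal illustration under that assumption; `LagrangianPenalty`, `cost_limit`, and `lr` are hypothetical names chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class LagrangianPenalty:
    """Illustrative penalty term for a single cost constraint (assumed setup)."""
    cost_limit: float          # hypothetical budget on the cost of one episode
    lr: float = 0.05           # step size for the multiplier update
    multiplier: float = 0.0    # starts at zero, i.e. no penalty

    def penalized_return(self, episode_return: float, episode_cost: float) -> float:
        # Objective the agent would maximize: reward minus weighted safety cost.
        return episode_return - self.multiplier * episode_cost

    def update(self, episode_cost: float) -> float:
        # Dual ascent: raise the multiplier when the cost is over budget,
        # lower it when under budget, and never let it go below zero.
        step = self.lr * (episode_cost - self.cost_limit)
        self.multiplier = max(0.0, self.multiplier + step)
        return self.multiplier
```

The design choice here is that the penalty adapts on its own: repeated violations make unsafe behavior progressively more expensive, while a policy that stays within budget sees the penalty fade.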

2. Exploration and Exploitation Trade-off

In RL, agents need to balance the exploration of new actions and the exploitation of known actions to maximize rewards. Safe RL algorithms incorporate safety considerations into this trade-off by prioritizing actions that have a lower risk of leading to unsafe states or actions. This ensures that RL agents explore and learn while maintaining safety.

3. Uncertainty and Risk Modeling

Safe RL algorithms often include mechanisms to model and estimate uncertainties and risks associated with different actions or states. By considering these uncertainties, RL agents can make informed decisions while minimizing the risk of unsafe actions. This approach is particularly useful in situations where the RL agent has limited knowledge about the environment or when the environment is dynamic and uncertain.
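To make the idea of risk modeling concrete, here is a small sketch using one possible risk measure, conditional value at risk (CVaR), which averages the worst outcomes instead of all outcomes. The article does not prescribe a specific measure, so treat CVaR and the function name `cvar` as assumptions made for illustration.

```python
import math


def cvar(costs, alpha=0.9):
    """Average of the worst (1 - alpha) fraction of sampled costs."""
    if not costs:
        raise ValueError("need at least one cost sample")
    ordered = sorted(costs, reverse=True)  # largest (worst) costs first
    # Rounding guards against floating-point noise such as 3.0000000000000004.
    k = max(1, math.ceil(round((1 - alpha) * len(ordered), 9)))
    return sum(ordered[:k]) / k


# Two hypothetical actions with the same average cost but different tails.
steady = [1.0] * 10
gamble = [0.0] * 9 + [10.0]
print(cvar(steady), cvar(gamble))
```

Both actions look identical to an agent that only tracks the mean cost, but the tail-focused estimate separates them, which is the kind of signal a risk-aware agent can act on.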

4. Safe Exploration

Exploration is a crucial component of RL, as it allows agents to discover new, potentially better actions. However, traditional exploration methods may lead to unsafe actions in real-world scenarios. Safe RL algorithms develop techniques that ensure exploration is performed in a controlled and safe manner, avoiding actions that could lead to undesirable outcomes.
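A simple way to picture safe exploration is epsilon-greedy action selection restricted to actions that a safety check allows. The sketch below assumes such a check exists; `is_safe` is a hypothetical predicate supplied by the caller, and the function is an illustration, not a specific published algorithm.

```python
import random


def safe_epsilon_greedy(q_values, is_safe, epsilon, rng=random):
    """Pick an action index, exploring only among actions flagged as safe."""
    safe_actions = [a for a in range(len(q_values)) if is_safe(a)]
    if not safe_actions:
        # Assumption: the caller handles this case with a fallback controller.
        raise RuntimeError("no safe action available")
    if rng.random() < epsilon:
        return rng.choice(safe_actions)  # explore, but only inside the safe set
    return max(safe_actions, key=lambda a: q_values[a])  # best safe action
```

Note that the highest-valued action is never chosen if the check rejects it, so the quality of exploration depends entirely on how accurate `is_safe` is.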

Prominent Architectures in Safe Reinforcement Learning

Safe RL leverages various architectures and methods to achieve safety. Some of the prominent architectures include:

1. Model-Based Safe RL

Model-based Safe RL algorithms construct a model of the environment and use it to simulate possible actions and their consequences. This allows agents to explore and learn in a simulated environment before deploying learned policies in the real world. By considering the model’s predictions of potential risks and safety violations, agents can avoid unsafe actions.
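The following sketch shows how a model could be used to vet actions before they are executed: each candidate is simulated forward, and it is accepted only if the predicted trajectory never enters an unsafe state. All names (`model`, `backup_policy`, `is_unsafe`, `fallback`) are hypothetical, and the toy dynamics in the usage lines are assumed purely for illustration.

```python
def rollout_is_safe(state, action, model, backup_policy, is_unsafe, horizon=5):
    """Simulate `action`, then `backup_policy`, for `horizon` steps in the model."""
    s = model(state, action)
    for _ in range(horizon):
        if is_unsafe(s):
            return False
        s = model(s, backup_policy(s))
    return not is_unsafe(s)


def choose_action(state, candidates, model, backup_policy, is_unsafe, fallback, horizon=5):
    """Return the first candidate (ordered by preference) predicted to be safe."""
    for a in candidates:
        if rollout_is_safe(state, a, model, backup_policy, is_unsafe, horizon):
            return a
    return fallback


# Toy setup: position on a line, with a cliff at position 10 and beyond.
model = lambda s, a: s + a           # assumed dynamics: action is a displacement
backup_policy = lambda s: 0          # assumed backup behavior: stand still
is_unsafe = lambda s: s >= 10
print(choose_action(8, [3, 1, 0], model, backup_policy, is_unsafe, fallback=-1))
```

The check is only as good as the model: if the learned dynamics are wrong near the boundary, an action predicted to be safe may still fail in the real environment.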

2. Confidence-Based Filters

Confidence-based safety filters are another approach in Safe RL that aims to ensure safety during the learning and execution phases. These filters use confidence measures to identify and filter out actions that have a high risk of violating safety constraints. By applying these filters, RL agents can learn and operate in a safe and controlled manner.
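As an illustration of what a confidence measure might look like, the sketch below keeps an action only if an upper confidence bound on its violation probability is below a threshold. The bound used is a standard Hoeffding bound computed from observed counts; this choice, along with the names `violation_upper_bound`, `confident_safe_actions`, and the example statistics, is an assumption and not something the article specifies.

```python
import math


def violation_upper_bound(violations, trials, delta=0.05):
    """Hoeffding upper bound on violation probability, valid with prob. 1 - delta."""
    if trials == 0:
        return 1.0  # nothing observed yet: treat the action as maximally risky
    p_hat = violations / trials
    margin = math.sqrt(math.log(1.0 / delta) / (2.0 * trials))
    return min(1.0, p_hat + margin)


def confident_safe_actions(stats, threshold=0.1, delta=0.05):
    """Keep actions whose violation probability is confidently below threshold."""
    return [a for a, (v, n) in stats.items()
            if violation_upper_bound(v, n, delta) <= threshold]


# Hypothetical (violations, trials) counts per action.
stats = {"brake": (0, 1000), "swerve": (0, 5), "accelerate": (300, 1000), "untried": (0, 0)}
print(confident_safe_actions(stats))
```

An action with no recorded violations can still be filtered out when it has been tried only a few times, because the filter demands evidence of safety and not merely the absence of observed failures.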

3. Projection on a Safe Set

Projection on a safe set is a technique that constrains the RL agent’s actions to a predefined safe set. Whenever the policy proposes an action outside that set, the action is mapped to the nearest action inside it, so every executed action stays within the boundaries of the safety constraints. The agent can then explore and learn, with safety holding to the extent that the safe set itself is specified correctly.
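For simple safe sets, the projection has a closed form. The sketch below covers two assumed cases, a box (per-dimension limits) and a ball (a limit on overall magnitude); real safe sets are often more complex and may require solving an optimization problem instead. The function names are hypothetical.

```python
import math


def project_to_box(action, low, high):
    """Clip each component of the action into [low_i, high_i]."""
    return [min(max(a, lo), hi) for a, lo, hi in zip(action, low, high)]


def project_to_ball(action, radius):
    """Scale the action back onto a ball of the given radius if it lies outside."""
    norm = math.sqrt(sum(a * a for a in action))
    if norm <= radius:
        return list(action)  # already inside the safe set: leave unchanged
    scale = radius / norm
    return [a * scale for a in action]


print(project_to_box([2.0, -0.3], [-1.0, -1.0], [1.0, 1.0]))
print(project_to_ball([3.0, 4.0], 1.0))
```

Actions that are already inside the set pass through untouched, so the projection only interferes with the policy when it would otherwise leave the safe region.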

Recent Advancements in Safe Reinforcement Learning

Recent research has made significant strides in Safe RL, addressing various challenges and proposing innovative solutions. Some notable advancements include:

1. Safe Reinforcement Learning via Shielding

Shielding is an approach that adds a safety layer, or shield, between the RL agent’s policy and the environment. The shield checks each proposed action and overrides those that would violate the safety specification, so the actions actually executed remain safe even during the learning and exploration phases. With a shield in place, RL agents can keep learning while staying safe with respect to that specification.
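A shield can be pictured as a thin wrapper that sits between the policy and the environment. The sketch below is a minimal version under assumed interfaces: `is_safe` and `fallback` are hypothetical callables, and in practice the safety check would typically come from a formal specification of the system.

```python
class Shield:
    """Pass safe actions through; replace unsafe ones with a fallback action."""

    def __init__(self, is_safe, fallback):
        self.is_safe = is_safe      # hypothetical predicate: (state, action) -> bool
        self.fallback = fallback    # hypothetical safe-action provider: state -> action
        self.interventions = 0      # how often the shield had to step in

    def __call__(self, state, proposed_action):
        if self.is_safe(state, proposed_action):
            return proposed_action
        self.interventions += 1
        return self.fallback(state)


# Toy corridor with cells 0..4; moving outside the corridor is unsafe.
shield = Shield(is_safe=lambda s, a: 0 <= s + a <= 4, fallback=lambda s: 0)
print(shield(2, 1), shield(4, 1), shield.interventions)
```

Counting interventions is a useful design choice: a count that keeps growing suggests the policy is not learning to avoid the actions the shield blocks.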

2. Safe Reinforcement Learning with Time-Varying Constraints

This approach focuses on addressing the challenges of RL in dynamic environments where safety constraints may vary over time. By adapting the RL agent’s policies to changing constraints, safe RL algorithms enable intelligent systems to operate safely in complex and dynamic scenarios.
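As a toy illustration of a constraint that changes over time, the sketch below uses a speed limit that tightens during part of an episode. The schedule, the numbers, and the function names are all hypothetical; the point is only that the safety check takes the current time step as an input.

```python
def speed_limit(t):
    """Hypothetical schedule: a tighter limit applies during steps 10 to 19."""
    return 5.0 if 10 <= t < 20 else 15.0


def constrain(speed, t, limit_fn=speed_limit):
    """Cap the requested speed at whatever limit is active at step t."""
    return min(speed, limit_fn(t))


def violating_steps(speeds, limit_fn=speed_limit):
    """Return the time steps at which a speed trajectory exceeds the active limit."""
    return [t for t, v in enumerate(speeds) if v > limit_fn(t)]


# A constant speed of 12.0 is fine early and late, but not in the restricted window.
print(violating_steps([12.0] * 25))
```

A policy trained only under the looser limit would violate the constraint for the whole restricted window, which is why the policy itself, and not only the check, has to account for the changing limit.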

3. Safe Reinforcement Learning via Confidence-Based Filters

Confidence-based filters, described above among the prominent architectures, also feature in recent research. They use confidence measures to identify and filter out unsafe actions during the learning and execution phases, which provides a principled way of incorporating safety considerations into RL algorithms while still optimizing performance.

Applications of Safe Reinforcement Learning

Safe RL has significant applications in several critical domains where ensuring safety is paramount. Some of these domains include:

1. Autonomous Vehicles

Safe RL plays a crucial role in the development of autonomous vehicles, where safety is of utmost importance. By ensuring that RL algorithms controlling autonomous vehicles operate within predefined safety constraints, Safe RL enables the safe and reliable deployment of autonomous driving systems.

2. Healthcare Robotics

Robotic systems used in healthcare settings, such as surgical robots or assistive robots, need to operate with a high degree of safety. Safe RL techniques enable these systems to learn and make decisions while avoiding actions that could harm patients or violate safety protocols.

3. Industrial Automation

Safe RL is also applicable in industrial automation, where robots and autonomous systems are used to perform tasks in manufacturing or hazardous environments. By incorporating safety constraints and ensuring that RL algorithms operate within these constraints, Safe RL enables safe and efficient automation processes.

Open Challenges in Safe Reinforcement Learning

Despite the progress made in Safe RL, several open challenges remain. Some of these challenges include:

1. Scalability and Sample Efficiency

Safe RL algorithms often require large amounts of data and computational resources to learn safe policies. Improving their scalability and sample efficiency is an ongoing challenge, and progress here largely determines whether RL agents can be deployed in complex, real-world scenarios.

2. Generalization and Transfer Learning

Safe RL algorithms need to generalize their learned policies to unseen environments or adapt to changing conditions. Developing techniques for generalization and transfer learning in Safe RL remains an active area of research.

3. Quantifying and Certifying Safety

Safe RL algorithms should provide guarantees on safety and quantify the level of safety achieved. Developing methods for certifying safety and providing formal guarantees in Safe RL is a challenging problem that researchers are actively addressing.

Conclusion

Safe Reinforcement Learning is a vital area of research aimed at making RL algorithms viable for real-world applications by ensuring their safety and robustness. By incorporating safety constraints, robust architectures, and innovative methods, Safe RL enables RL agents to learn and make decisions while avoiding actions that could lead to undesirable outcomes. With ongoing advancements and research, Safe RL continues to evolve, addressing new challenges and expanding its applicability across various domains. Safe RL paves the way for the safe and reliable deployment of RL in critical, real-world scenarios.
