NVIDIA Researchers Introduce Nemotron-4 15B: A 15B Parameter Large Multilingual Language Model Trained on 8T Text Tokens

AI researchers are constantly striving to develop models that can effectively understand and interpret both human language and code. Such language models can narrow the gap between humans and machines, enabling more intuitive interactions and helping to overcome linguistic barriers. NVIDIA has recently introduced its answer to this challenge: the Nemotron-4 15B model.

The Need for Multilingual Language Models

One of the major hurdles in developing language models that can seamlessly transition between natural languages and programming languages is the complexity involved in acquiring linguistic nuances, cultural context, and code syntax and semantics. Traditionally, researchers have tackled this issue by training large-scale models on diverse datasets that encompass multiple languages and code snippets. The aim is to strike a balance between training data volume and model capacity, ensuring effective learning without favoring one language domain over another. However, these efforts have often faced limitations in terms of language coverage and consistent performance across different tasks.

NVIDIA’s Innovative Solution: Nemotron-4 15B

NVIDIA’s Nemotron-4 15B model represents a significant step forward in the field of multilingual language models. The model has 15 billion parameters and was trained on 8 trillion text tokens, covering a wide range of natural languages as well as programming languages. The scale and diversity of the training set have propelled Nemotron-4 15B to the forefront of the field, surpassing larger specialized models and outperforming models of similar size in terms of multilingual capabilities.
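To put those two numbers in perspective, a rough back-of-the-envelope calculation helps. The sketch below computes the tokens-per-parameter ratio and estimates training compute using the common "6 × parameters × tokens" rule of thumb for dense Transformers. That approximation is an assumption brought in for illustration and does not come from NVIDIA's announcement; the variable names are hypothetical.

```python
# Figures quoted in the article.
num_parameters = 15e9        # 15 billion parameters
num_training_tokens = 8e12   # 8 trillion text tokens

# How many training tokens the model sees per parameter.
tokens_per_parameter = num_training_tokens / num_parameters

# Assumption: roughly 6 FLOPs per parameter per token (forward + backward
# pass). This is a widely used estimate, not a figure reported by NVIDIA.
approx_training_flops = 6 * num_parameters * num_training_tokens

print(f"Tokens per parameter: {tokens_per_parameter:.0f}")
print(f"Approximate training compute: {approx_training_flops:.1e} FLOPs")
```

Under these assumptions the model sees roughly 533 tokens per parameter, with estimated training compute on the order of 7 × 10^23 FLOPs.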

Meticulous Training Methodology

The success of Nemotron-4 15B can be attributed to its meticulous training methodology. The model employs a standard decoder-only Transformer architecture with Rotary Position Embeddings (RoPE) and a SentencePiece tokenizer. This architectural choice, combined with the strategic selection and processing of the training data, ensures that Nemotron-4 15B not only learns from a vast array of sources but does so efficiently, minimizing redundancy and maximizing coverage of low-resource languages.
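For readers unfamiliar with rotary position embeddings, the following is a minimal pure-Python sketch of the general idea, not NVIDIA's implementation. It assumes the interleaved pairing of dimensions and the base of 10000 from the original RoPE formulation; the function names `rope` and `dot` and the example vectors are hypothetical. Each pair of dimensions is rotated by an angle proportional to the token position, so the attention score between a query and a key depends only on how far apart they are.

```python
import math

def rope(vec, position, base=10000.0):
    """Rotate consecutive pairs of `vec` by position-dependent angles."""
    dim = len(vec)
    if dim % 2 != 0:
        raise ValueError("RoPE needs an even number of dimensions")
    out = []
    for i in range(0, dim, 2):
        # Lower-indexed pairs rotate faster; higher-indexed pairs rotate slower.
        theta = position * base ** (-i / dim)
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * cos_t - y * sin_t, x * sin_t + y * cos_t])
    return out

def dot(a, b):
    """Plain dot product, standing in for an unscaled attention score."""
    return sum(x * y for x, y in zip(a, b))

# Hypothetical toy query and key vectors.
query = [1.0, 0.5, -0.3, 2.0]
key = [0.2, -1.0, 0.7, 0.1]

# Both pairs of positions are 3 tokens apart, at different absolute offsets.
score_near_start = dot(rope(query, 5), rope(key, 2))
score_far_along = dot(rope(query, 103), rope(key, 100))

print(math.isclose(score_near_start, score_far_along, abs_tol=1e-9))
```

The two scores agree up to floating-point error because only the relative offset between the positions survives in the dot product. This property is what lets a decoder-only model encode token order without separate absolute position vectors.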

Unparalleled Performance

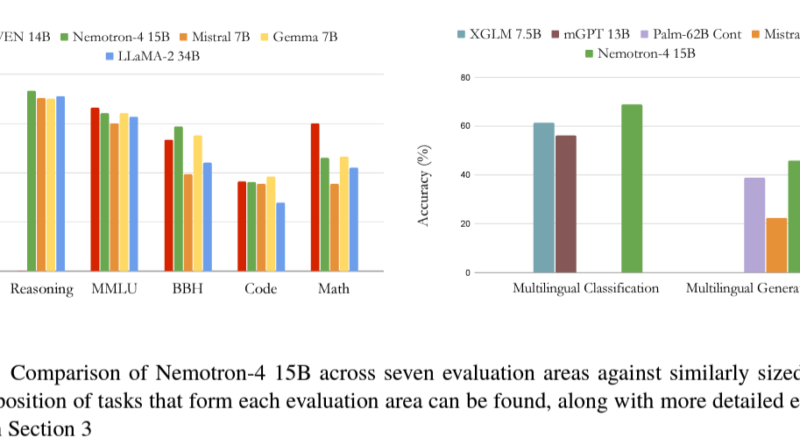

Nemotron-4 15B has demonstrated exceptional proficiency in comprehensive evaluations covering English, coding tasks, and multilingual benchmarks. In coding tasks, it exhibits better average accuracy than models specialized in code, such as StarCoder, and shows superior performance in low-resource programming languages. Furthermore, Nemotron-4 15B has set new records in multilingual evaluations, achieving a significant improvement on the XCOPA benchmark. Its multilingual performance surpasses that of other large language models, including LLaMA-2 34B, which has more than twice as many parameters.

Implications and Future Applications

The exceptional performance of Nemotron-4 15B underscores its advanced understanding and generation capabilities across domains. It solidifies its position as the leading model in its class, excelling in both general-purpose language understanding and specialized tasks. NVIDIA’s Nemotron-4 15B opens up a new era of AI applications, mastering the dual challenges of multilingual text comprehension and programming language interpretation.

The applications of Nemotron-4 15B are far-reaching and have the potential to revolutionize various aspects of technology and human-machine interactions. With its seamless global communication capabilities, this model can facilitate effective cross-cultural communication and understanding. It also paves the way for more accessible coding education, enabling individuals from different linguistic backgrounds to learn and engage with programming languages more effectively.

Furthermore, Nemotron-4 15B enhances machine-human interactions across different languages and cultures, making technology more inclusive and effective on a global scale. It has the potential to improve the accuracy and efficiency of natural language processing tasks in various industries, such as customer support, translation services, and content generation.

Conclusion

NVIDIA’s Nemotron-4 15B represents a significant milestone in the development of multilingual language models. Its 15 billion parameters, combined with 8 trillion training tokens and a meticulous training methodology, have enabled it to outshine other models in terms of multilingual capabilities and performance. The exceptional proficiency of Nemotron-4 15B in understanding and generating text across domains positions it as the leading model in its class.

The introduction of Nemotron-4 15B opens up new opportunities for global communication, coding education, and machine-human interactions. With its groundbreaking capabilities, this model has the potential to reshape the way we interact with technology, making it more inclusive, efficient, and effective on a global scale.

Check out the Paper. All credit for this research goes to the researchers of this project.