Apple AI Research Introduces AIM: A Collection of Vision Models Pre-Trained with an Autoregressive Objective

Apple AI Research has introduced AIM (Autoregressive Image Models), a collection of vision models pre-trained with an autoregressive objective. The approach carries over to images the next-element prediction recipe that underlies large language models, and the researchers report that it scales well with both model size and data.

Understanding Autoregressive Image Models

Autoregressive models have been widely used in natural language processing, where a model generates text by predicting each word from the words that precede it. Apple AI Research has extended this concept to computer vision, using next-element prediction over images as a pre-training objective for learning visual representations.

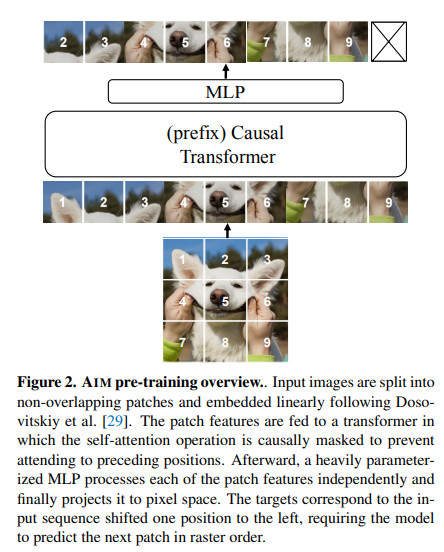

AIM models are trained with an autoregressive objective: an image is treated as a sequence of subregions (patches), and the model predicts each patch from the patches that come before it in the sequence. The aim of this pre-training is to learn strong visual features that transfer to downstream tasks, rather than to produce images for their own sake.
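To make the objective concrete, here is a minimal Python sketch under stated assumptions: the image is split into non-overlapping patches in raster order, each patch becomes a prediction target given the patches before it, and the loss is a plain mean squared error. The function names (`patchify`, `next_patch_pairs`, `regression_loss`), the patch size, and the choice of loss are illustrative assumptions, not details taken from the AIM code.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale image as rows of pixel values
Patch = List[float]        # one patch, flattened row by row


def patchify(image: Image, patch: int) -> List[Patch]:
    """Split an image into non-overlapping patch x patch tiles in raster order."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image sides must be divisible by the patch size")
    patches: List[Patch] = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            patches.append([image[r][c]
                            for r in range(top, top + patch)
                            for c in range(left, left + patch)])
    return patches


def next_patch_pairs(patches: List[Patch]) -> List[Tuple[List[Patch], Patch]]:
    """Pair each context (patches 0..k-1) with its target (patch k)."""
    return [(patches[:k], patches[k]) for k in range(1, len(patches))]


def regression_loss(predictions: List[Patch], targets: List[Patch]) -> float:
    """Mean squared error over every predicted patch value (assumed loss)."""
    total, count = 0.0, 0
    for predicted, target in zip(predictions, targets):
        for p, t in zip(predicted, target):
            total += (p - t) ** 2
            count += 1
    return total / count


if __name__ == "__main__":
    # Toy 4x4 image with pixel values 0..15, cut into four 2x2 patches.
    image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
    pairs = next_patch_pairs(patchify(image, 2))
    # Trivial baseline: predict that the next patch equals the previous one.
    baseline = [context[-1] for context, _ in pairs]
    targets = [target for _, target in pairs]
    print(len(pairs), regression_loss(baseline, targets))
```

In a real model the baseline would be replaced by the output of a Transformer that sees only the context patches; the sketch only shows how contexts and targets line up.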

These pre-trained vision models are based on Transformer architectures, which have shown remarkable performance in natural language processing tasks. Transformers excel at capturing long-range dependencies and have become the backbone of several state-of-the-art AI models.

Key Advancements in AIM

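Apple AI Research has made two key modifications to improve AIM's performance and scalability. First, the researchers adopt prefix attention: during pre-training, an initial prefix of the sequence is attended to bidirectionally while the remaining elements are predicted causally. This makes it possible to switch to fully bidirectional self-attention on downstream tasks, improving the quality of the resulting features without significantly increasing training overhead.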
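Prefix attention is usually expressed as a mask over token pairs. The sketch below is one way to build such a mask in plain Python, assuming the convention that `mask[i][j]` is `True` when token `i` may attend to token `j`; the function name `prefix_causal_mask` and its parameters are hypothetical and not taken from the AIM code.

```python
from typing import List


def prefix_causal_mask(num_tokens: int, prefix_len: int) -> List[List[bool]]:
    """Build an attention mask that is bidirectional inside the prefix
    and causal everywhere else.

    mask[i][j] is True when token i is allowed to attend to token j.
    """
    if not 0 <= prefix_len <= num_tokens:
        raise ValueError("prefix_len must be between 0 and num_tokens")
    return [
        # j <= i       : ordinary causal attention (self and earlier tokens)
        # j < prefix_len: every token may always see the whole prefix
        [j <= i or j < prefix_len for j in range(num_tokens)]
        for i in range(num_tokens)
    ]


if __name__ == "__main__":
    for row in prefix_causal_mask(num_tokens=4, prefix_len=2):
        print("".join("x" if allowed else "." for allowed in row))
```

Setting `prefix_len` to 0 gives a purely causal mask, and setting it to `num_tokens` gives a fully bidirectional one, which is the setting a downstream task would use.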

The second modification is a heavily parameterized token-level prediction head, inspired by the heads used in contrastive learning. This further improves the quality of the learned features with little overhead during training, and it aligns AIM's methodology with recent advances in unsupervised learning.

Additionally, AIM models follow the Vision Transformer (ViT) architecture and scale model capacity primarily by expanding width rather than depth. This allows AIM to scale to models with billions of parameters while remaining applicable to a wide range of computer vision tasks.
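A rough back-of-the-envelope estimate shows how width and depth affect parameter count in a standard Transformer encoder. The sketch below assumes the usual block layout (four width-by-width attention projections plus a two-layer MLP with a 4x expansion) and ignores biases, normalization layers, and embeddings; the widths and depths used are illustrative and are not AIM's published configurations.

```python
def transformer_block_params(width: int, mlp_ratio: int = 4) -> int:
    """Approximate weight count of one Transformer block."""
    attention = 4 * width * width        # query, key, value, output projections
    mlp = 2 * mlp_ratio * width * width  # expand to mlp_ratio * width, then project back
    return attention + mlp


def encoder_params(width: int, depth: int, mlp_ratio: int = 4) -> int:
    """Approximate weight count of `depth` stacked blocks."""
    return depth * transformer_block_params(width, mlp_ratio)


if __name__ == "__main__":
    base = encoder_params(width=1024, depth=24)
    wider = encoder_params(width=2048, depth=24)
    deeper = encoder_params(width=1024, depth=48)
    print(f"base:   {base / 1e9:.2f}B")
    print(f"wider:  {wider / 1e9:.2f}B ({wider // base}x)")
    print(f"deeper: {deeper / 1e9:.2f}B ({deeper // base}x)")
```

Under these assumptions the estimate grows quadratically with width but only linearly with depth, so doubling the width quadruples the count while doubling the depth merely doubles it.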

Scalability and Performance of AIM

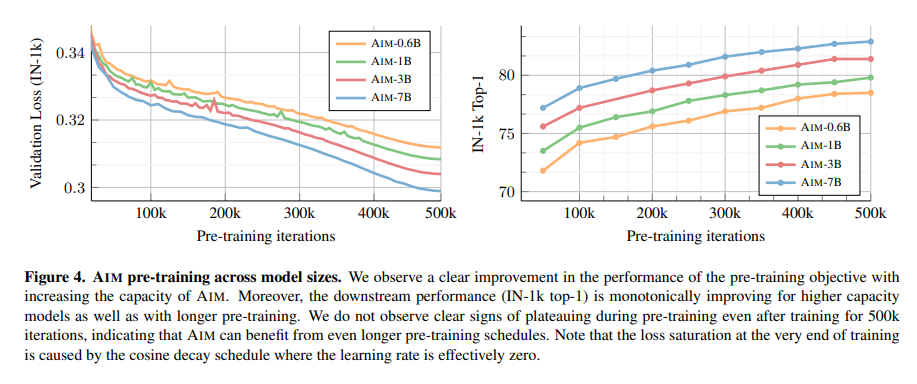

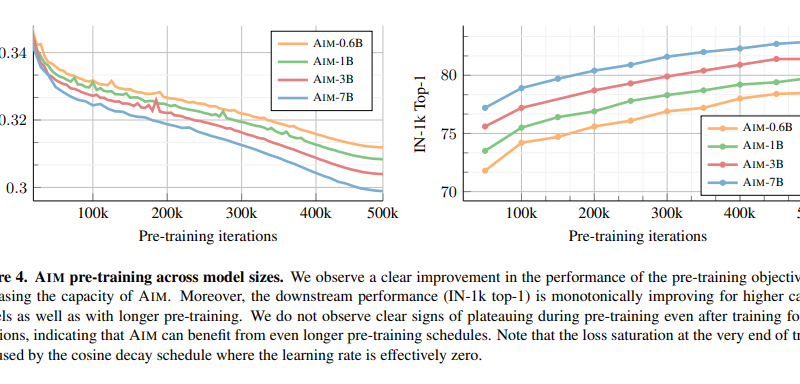

One of the key strengths of AIM is its scalability. The researchers at Apple AI Research have demonstrated that AIM can scale up to 7 billion parameters without the need for complex stability-inducing techniques. This scalability opens up possibilities for training larger models and achieving even better performance in various computer vision benchmarks.

AIM has been extensively evaluated across 15 diverse benchmarks, comparing its performance against state-of-the-art methods. In these evaluations, AIM has consistently outperformed generative counterparts such as BEiT and MAE-H. It has also surpassed MAE-2B, which is pre-trained on a private dataset.

Furthermore, AIM has demonstrated competitive performance when compared to joint embedding methods like DINO, iBOT, and DINOv2. These results highlight the effectiveness of AIM's autoregressive pre-training approach and its ability to achieve high accuracy with a comparatively simple training recipe.

Implications and Future Research

The introduction of AIM by Apple AI Research has significant implications for the field of computer vision. The quality of its learned features and its strong results across the reported benchmarks make it a promising foundation for applications such as object recognition and image understanding.

Moreover, AIM’s scalability paves the way for future research in scalable vision models. It enables researchers to leverage uncurated datasets without any bias towards object-centric images or strong dependence on captions, and to explore the potential of larger models and longer training schedules.

As the field of computer vision continues to evolve, AIM provides a solid foundation for further advancements and innovations. Apple AI Research’s commitment to pushing the boundaries of AI research has contributed to the development of AIM as a powerful tool for vision-based tasks.

In conclusion, AIM shows that an autoregressive objective, combined with prefix attention, a heavily parameterized prediction head, and a width-first scaling strategy, can produce vision models that scale to billions of parameters and perform strongly across diverse benchmarks.

Check out the Paper and Github. All credit for this research goes to the researchers of this project.
