Transformers in Reinforcement Learning: Enhancing Memory and Overcoming Credit Assignment Challenges

Transformer architectures are increasingly being integrated into reinforcement learning (RL). Transformers are known for handling long-range dependencies in data and have been widely successful in domains like natural language processing and computer vision. In RL, they are used to strengthen memory, helping agents make informed decisions in complex and dynamic environments. However, while Transformers improve memory, they do not resolve the problem of long-term credit assignment. In this article, we look at research from Université de Montréal and Princeton University on how Transformers affect memory and credit assignment in RL.

Understanding the Role of Memory and Credit Assignment in Reinforcement Learning

In RL, algorithms learn to make sequential decisions based on past observations and their impact on future outcomes. This process involves two crucial components: memory and credit assignment. Memory refers to the algorithm’s ability to understand and utilize past observations, while credit assignment involves discerning the impact of past actions on future outcomes.

Memory plays a vital role in RL as it allows algorithms to learn from previous experiences and make informed decisions. For example, in a game-playing scenario, the algorithm must remember past moves and understand how these moves influence future game states. On the other hand, credit assignment focuses on attributing the consequences of actions to the correct decisions. It helps algorithms understand which actions led to favorable outcomes and which ones resulted in negative consequences.

Transformers as Enhancers of Memory in Reinforcement Learning

Transformers have gained prominence in RL because of their potential to improve memory. After their success in natural language processing and computer vision, they have been adapted to RL, where their ability to capture long-term dependencies lets algorithms use information from many steps in the past.

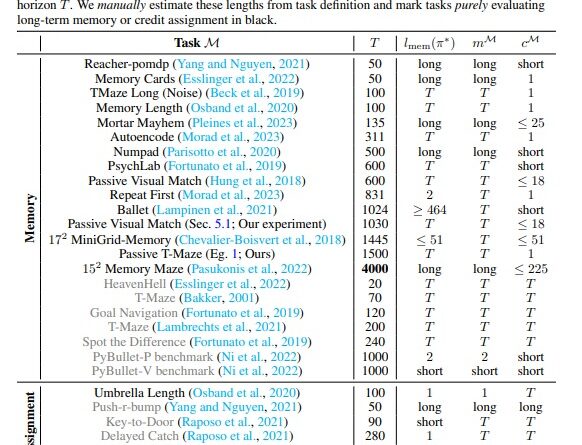

The researchers from Université de Montréal and Princeton University set out to quantify the impact of Transformers on memory in RL. By introducing formal definitions of memory length and credit assignment length, they were able to measure and compare Transformers against other memory-based RL algorithms, such as those using Long Short-Term Memory (LSTM) architectures [1].
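To make the idea of a memory length more concrete, here is a minimal Python sketch. It is not the paper's formal definition, only an informal illustration: it treats the memory length of a policy as the smallest context window `k` for which the policy, given only the last `k` observations, acts exactly as it does with the full history. The names `truncate_history`, `recall_policy`, and `empirical_memory_length` are hypothetical and introduced here for illustration.

```python
from typing import Callable, List, Optional, Sequence

Policy = Callable[[Sequence[int]], int]


def truncate_history(history: Sequence[int], k: int) -> Sequence[int]:
    """Keep only the k most recent observations (empty when k == 0)."""
    return history[-k:] if k > 0 else history[:0]


def recall_policy(history: Sequence[int], lag: int = 3) -> int:
    """Hypothetical policy: output the observation seen at index -lag.

    Falls back to action 0 when the history is shorter than `lag`.
    """
    return history[-lag] if len(history) >= lag else 0


def empirical_memory_length(
    policy: Policy, histories: List[Sequence[int]], max_k: int
) -> Optional[int]:
    """Smallest window k for which truncation never changes the action.

    This is only an empirical estimate over the sampled histories
    (an assumption of this sketch, not the paper's definition).
    """
    for k in range(max_k + 1):
        if all(policy(truncate_history(h, k)) == policy(h) for h in histories):
            return k
    return None


histories = [[1, 2, 3, 4, 5], [7, 8, 9, 1, 2]]
memory_length = empirical_memory_length(recall_policy, histories, max_k=5)
```

In this toy setting the estimate matches the policy's lag: a window shorter than three observations changes the action, while a window of three does not.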

The study found that Transformers significantly enhance long-term memory in RL, scaling to tasks that require recalling observations from up to 1500 steps in the past. This lets RL algorithms draw on a much longer history when making decisions and opens up new possibilities for RL applications in complex and dynamic environments.

The Challenge of Long-Term Credit Assignment

While Transformers excel at enhancing memory in RL, they face challenges in long-term credit assignment. Credit assignment involves understanding the delayed consequences of past actions and attributing them to the correct decisions. In simpler terms, it is the process of connecting past actions with future outcomes.
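One common way to see why delay makes credit assignment hard is the standard discounted return, in which each reward is weighted by a discount factor raised to the number of steps it lies in the future. The sketch below is a generic textbook illustration, not the method or metric used in the study; the function name `discounted_returns` and the example values are assumptions made here.

```python
from typing import List


def discounted_returns(rewards: List[float], gamma: float) -> List[float]:
    """Return G_t = r_t + gamma * G_{t+1} for every step t of one episode."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step reuses the return of the step after it.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


# A single reward that arrives only at the last of ten steps.
delayed = discounted_returns([0.0] * 9 + [1.0], gamma=0.9)
```

Here the first action sees a return of 0.9 to the ninth power, roughly 0.39, even though it may have been the decision that mattered. The longer the delay, the weaker the signal that reaches the responsible action.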

The same study highlighted the limitations of Transformers in long-term credit assignment. Despite their ability to recall distant past events, Transformers do not get better at linking actions to their delayed consequences. This finding emphasizes the importance of a balanced approach in RL algorithms, in which memory and credit assignment are treated as distinct capabilities and given equal consideration.

Bridging the Gap: Research Insights and Assessment

To gain deeper insights into the impact of Transformers on memory and credit assignment in RL, the researchers devised configurable tasks specifically designed to test these aspects separately. By isolating memory and credit assignment capabilities, the study aimed to provide a clearer understanding of how Transformers affect RL algorithms’ performance.

The methodology involved evaluating memory-based RL algorithms, including both those utilizing Transformers and LSTMs, across various tasks with varying memory and credit assignment requirements. The tasks ranged from simple mazes to more complex environments with delayed rewards or actions. This approach allowed for a direct comparison of the two architectures’ abilities in different scenarios.
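The idea of testing the two capabilities separately can be sketched with a toy environment. The class below is hypothetical and is not one of the tasks from the study. In `"memory"` mode a cue is shown once at the start and must be repeated on the final step, so the agent has to remember over `horizon` steps while the reward follows the deciding action immediately. In `"credit"` mode the first action alone determines a reward paid `horizon` steps later, so nothing needs to be remembered but credit must travel back over the whole episode.

```python
import random
from typing import Callable, List, Tuple


class ToyDelayTask:
    """Hypothetical task with a configurable delay (illustration only)."""

    def __init__(self, mode: str, horizon: int, seed: int = 0) -> None:
        if mode not in ("memory", "credit"):
            raise ValueError("mode must be 'memory' or 'credit'")
        if horizon < 1:
            raise ValueError("horizon must be at least 1")
        self.mode = mode
        self.horizon = horizon
        self._rng = random.Random(seed)
        self._t = 0
        self._cue = 0
        self._first_action = 0

    def reset(self) -> int:
        """Start an episode; the cue (1 or 2) is visible only in memory mode."""
        self._t = 0
        self._first_action = 0
        self._cue = self._rng.choice([1, 2])
        return self._cue if self.mode == "memory" else 0

    def step(self, action: int) -> Tuple[int, float, bool]:
        """Apply an action; returns (observation, reward, done)."""
        if self._t == 0:
            self._first_action = action
        self._t += 1
        done = self._t >= self.horizon
        reward = 0.0
        if done and self.mode == "memory":
            # The final action must match the cue seen at the start.
            reward = float(action == self._cue)
        elif done:
            # Only the very first action matters; later actions are ignored.
            reward = float(self._first_action == 1)
        return 0, reward, done


def run_episode(env: ToyDelayTask, policy: Callable[[List[int]], int]) -> float:
    """Roll out one episode; the policy sees all observations so far."""
    observations = [env.reset()]
    total, done = 0.0, False
    while not done:
        obs, reward, done = env.step(policy(observations))
        observations.append(obs)
        total += reward
    return total
```

For example, `run_episode(ToyDelayTask("memory", 50), lambda h: h[0])` succeeds because the policy looks back at the first observation, while a memoryless policy such as `lambda h: h[-1]` fails. Increasing `horizon` in one mode stretches one requirement without changing the other, which is the kind of separation the configurable tasks are meant to provide.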

The findings indicated that while Transformers enhance memory, they do not improve long-term credit assignment. In comparison, LSTMs, the other memory architecture evaluated, demonstrate better performance in credit assignment tasks. This suggests that a combination of memory-based architectures, such as Transformers for memory enhancement and LSTMs for credit assignment, could yield more robust RL algorithms.

Implications for Reinforcement Learning Applications

This research has significant implications for RL applications. While Transformers excel at enhancing memory, their limitations in long-term credit assignment pose challenges in scenarios where delayed consequences play a crucial role.

However, this does not mean that Transformers have no place in RL. Their enhanced memory capabilities make them invaluable in environments where past experiences heavily influence future outcomes. By leveraging Transformers’ memory-enhancing abilities and combining them with architectures like LSTMs that excel in credit assignment, RL algorithms can achieve a more balanced and effective learning process.

Conclusion

Transformers have revolutionized various domains, including natural language processing and computer vision. In reinforcement learning, Transformers offer significant enhancements to memory, enabling algorithms to use information from distant past events. However, their limitations in long-term credit assignment highlight the need for a balanced approach in RL algorithms.

The research conducted by experts from Université de Montréal and Princeton University sheds light on the impact of Transformers on memory and credit assignment in RL. By isolating and quantifying each element’s capabilities, the study provides valuable insights into the strengths and limitations of Transformers in RL applications.

Moving forward, further research and exploration of hybrid architectures that combine memory enhancement from Transformers with better credit assignment capabilities will likely lead to more robust and effective RL algorithms.

All credit for this research goes to the researchers of this project.