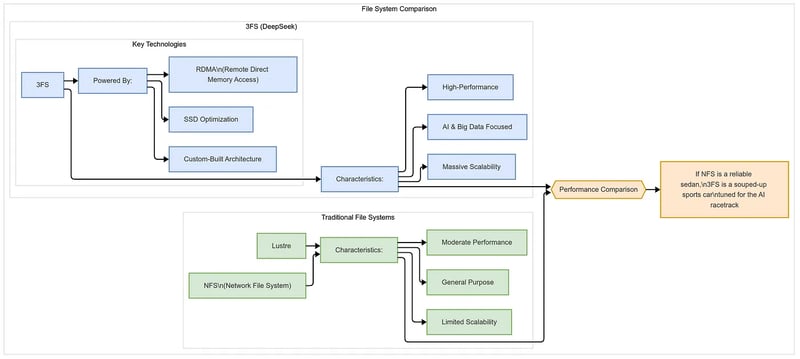

DeepSeek AI Unveils Fire-Flyer File System (3FS): A High-Performance Distributed File System for AI Workloads

Introduction: The Growing Storage Challenge in AI

With the rapid expansion of AI model training and inference workloads, the need for high-throughput, low-latency storage solutions has become more pressing than ever. Traditional file systems struggle to keep pace with the massive datasets and distributed training paradigms required by modern deep learning frameworks. The bottlenecks caused by slow disk access, network congestion, and inefficient metadata management significantly impact AI model performance.

To address these challenges, DeepSeek AI has introduced Fire-Flyer File System (3FS)—a high-performance, distributed file system optimized for AI workflows. Designed with scalability, efficiency, and reliability in mind, 3FS enables high-speed data access while reducing training and inference latency. This article explores how 3FS redefines AI storage architectures, making it a powerful alternative for large-scale AI training and inference.

🚀 Day 5 of #OpenSourceWeek: 3FS, Thruster for All DeepSeek Data Access

— DeepSeek (@deepseek_ai) February 28, 2025

Fire-Flyer File System (3FS) – a parallel file system that utilizes the full bandwidth of modern SSDs and RDMA networks.

⚡ 6.6 TiB/s aggregate read throughput in a 180-node cluster

⚡ 3.66 TiB/min…

Architecture and Key Features

The Fire-Flyer File System (3FS) is designed around a disaggregated storage architecture that separates compute and storage resources. This lets AI training nodes read data from any storage node rather than being tied to data stored locally.

Disaggregated Storage Model

Traditional file systems are often limited by data locality constraints, requiring compute nodes to store and retrieve data from specific locations. 3FS removes this restriction by aggregating the bandwidth of thousands of SSDs across a distributed network, so applications can read data at high throughput regardless of where it is physically stored.
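To illustrate how spreading a file over many devices aggregates their bandwidth, here is a minimal sketch of round-robin chunk striping. The chunk size, the `ssd-N` target names, and the `place_chunks` helper are illustrative assumptions, not 3FS's actual placement logic.

```python
from collections import Counter

def place_chunks(file_size, chunk_size, targets, start=0):
    """Assign each chunk of a file to a storage target in round-robin order."""
    if chunk_size <= 0 or not targets:
        raise ValueError("chunk_size must be positive and targets non-empty")
    num_chunks = -(-file_size // chunk_size)  # ceiling division
    return [targets[(start + i) % len(targets)] for i in range(num_chunks)]

# Hypothetical cluster: 8 storage targets, a 64 MiB file, 1 MiB chunks.
targets = [f"ssd-{i}" for i in range(8)]
placement = place_chunks(file_size=64 * 2**20, chunk_size=2**20, targets=targets)

# Every target holds the same number of chunks, so a full-file read
# can draw on all 8 devices in parallel.
load = Counter(placement)
print(load)
```

Because consecutive chunks land on different targets, a client reading the whole file is limited by the combined bandwidth of the targets rather than by any single device.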

Strong Consistency with Chain Replication with Apportioned Queries (CRAQ)

Many distributed file systems rely on eventual consistency, which can surface stale or conflicting data in AI training and inference workflows. 3FS addresses this with Chain Replication with Apportioned Queries (CRAQ), which provides strong consistency while still allowing reads to be served concurrently by every replica in a chain. Because readers always see the latest committed write, application code does not need to work around stale data.
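The CRAQ idea can be sketched in a few lines: writes travel from the head of a chain to the tail, acknowledgements travel back, and any replica may answer a read, checking with the tail only when it holds an uncommitted ("dirty") version. This is a simplified single-process model of the published CRAQ protocol; the class names and the `chunk-0` key are hypothetical, and it is not 3FS's implementation.

```python
class Node:
    """One replica in the chain; keeps every version it has not yet pruned."""
    def __init__(self, name):
        self.name = name
        self.versions = {}  # key -> {version number: value}
        self.clean = {}     # key -> highest version known to be committed

    def store(self, key, version, value):
        self.versions.setdefault(key, {})[version] = value

    def commit(self, key, version):
        self.clean[key] = version
        # Versions older than the committed one are no longer needed.
        self.versions[key] = {v: val for v, val in self.versions[key].items()
                              if v >= version}

    def is_dirty(self, key):
        return max(self.versions[key]) != self.clean.get(key)


class Chain:
    def __init__(self, names):
        self.nodes = [Node(n) for n in names]
        self.head, self.tail = self.nodes[0], self.nodes[-1]
        self.last_version = {}

    def write(self, key, value):
        version = self.last_version.get(key, 0) + 1
        self.last_version[key] = version
        for node in self.nodes:            # propagate head -> tail
            node.store(key, version, value)
        for node in reversed(self.nodes):  # tail commits, acks flow tail -> head
            node.commit(key, version)
        return version

    def read(self, key, node_index):
        node = self.nodes[node_index]
        if key not in node.versions:
            return None
        if node.is_dirty(key):
            # Apportioned query: ask the tail which version is committed.
            committed = self.tail.clean.get(key)
            return None if committed is None else node.versions[key][committed]
        return node.versions[key][node.clean[key]]


chain = Chain(["head", "mid", "tail"])
chain.write("chunk-0", "v1 data")
# Simulate an in-flight write that has reached only the head so far.
chain.head.store("chunk-0", 2, "v2 data")
during = [chain.read("chunk-0", i) for i in range(3)]
print(during)  # every replica still returns the committed "v1 data"
```

The read path is what makes the scheme attractive for read-heavy workloads: clean replicas answer locally, and only replicas holding an in-flight write pay for a version check with the tail.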

Stateless Metadata Management with Transactional Key-Value Stores

Metadata management is a common bottleneck in distributed file systems. 3FS introduces a stateless metadata service, utilizing a transactional key-value store (such as FoundationDB) to improve metadata retrieval speed and system scalability. By decoupling metadata services from the core storage layer, 3FS ensures that metadata operations do not limit overall system performance.
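A stateless metadata service can be pictured as a set of functions that keep nothing in memory between requests and run each operation as one transaction against the key-value store. The sketch below uses a toy in-memory store with optimistic conflict detection as a stand-in; the `KVStore` and `Transaction` classes, the `dentry/` and `inode/` key layout, and `create_file` are all assumptions for illustration and do not reflect the FoundationDB API or the 3FS schema.

```python
class ConflictError(Exception):
    """Raised when a key read by a transaction changed before commit."""


class KVStore:
    """In-memory stand-in for a transactional key-value store."""
    def __init__(self):
        self.data = {}  # key -> (version, value)

    def begin(self):
        return Transaction(self)


class Transaction:
    def __init__(self, store):
        self.store = store
        self.reads = {}   # key -> version observed at read time
        self.writes = {}  # key -> buffered new value

    def get(self, key):
        if key in self.writes:
            return self.writes[key]
        version, value = self.store.data.get(key, (0, None))
        self.reads[key] = version
        return value

    def set(self, key, value):
        self.writes[key] = value

    def commit(self):
        # Abort if anything this transaction read has since been modified.
        for key, seen in self.reads.items():
            if self.store.data.get(key, (0, None))[0] != seen:
                raise ConflictError(key)
        for key, value in self.writes.items():
            version = self.store.data.get(key, (0, None))[0]
            self.store.data[key] = (version + 1, value)


def create_file(store, parent, name, inode):
    """Stateless metadata operation: all state lives in the store."""
    txn = store.begin()
    entry_key = f"dentry/{parent}/{name}"
    if txn.get(entry_key) is not None:
        raise FileExistsError(entry_key)
    txn.set(entry_key, inode)
    txn.set(f"inode/{inode}", {"size": 0})
    txn.commit()


store = KVStore()
create_file(store, "root", "train.bin", 1)
print(sorted(store.data))
```

Since no server holds state of its own, any number of metadata servers can run `create_file` concurrently; two racing creations of the same name are resolved by the store, where the second commit fails its read check.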

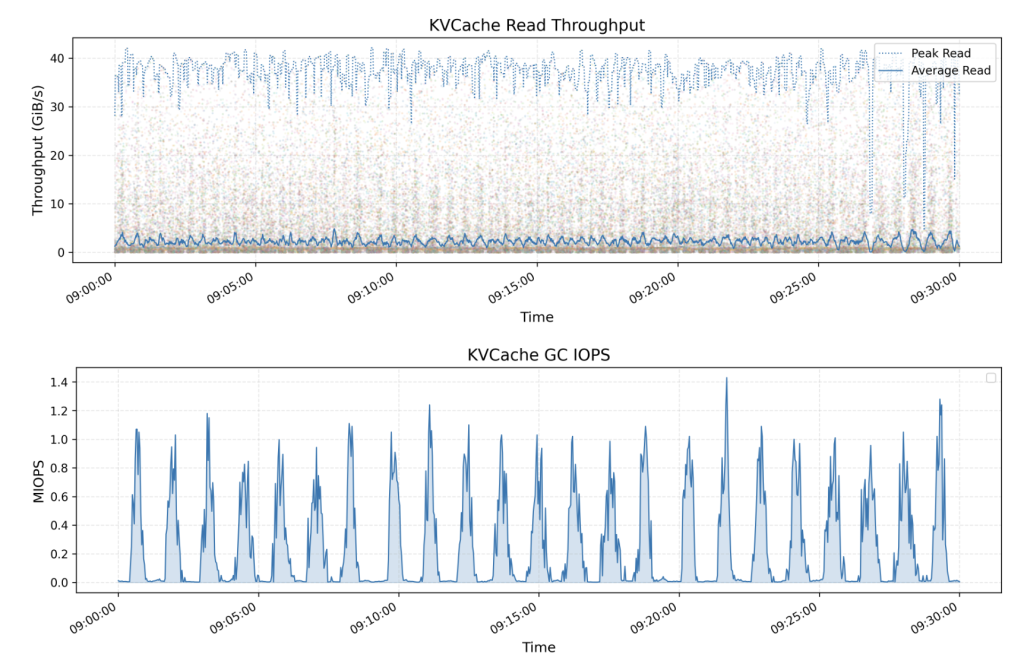

KVCache: Optimized Caching for AI Inference

For AI inference, 3FS supports KVCache, a technique that stores the key and value vectors computed for earlier tokens so that a language model does not have to recompute them. Serving this cache from 3FS offers a cost-effective alternative to DRAM-based caching, with high throughput and much larger capacity. This makes it particularly useful for language models and multimodal AI applications.
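In LLM inference, a KV cache generally holds the key and value vectors already computed for earlier tokens, so a request that shares a prefix with an earlier one can skip that part of the computation. The sketch below shows one possible lookup scheme, keyed by a hash of the token prefix. The `PrefixKVCache` class, the hashing scheme, and the dictionary backend are hypothetical and are not taken from 3FS.

```python
import hashlib

def prefix_key(tokens):
    """Derive a stable cache key from a sequence of token ids."""
    return hashlib.sha256(",".join(map(str, tokens)).encode()).hexdigest()


class PrefixKVCache:
    def __init__(self):
        self.backend = {}  # stand-in for a storage-backed cache

    def put(self, tokens, kv_state):
        self.backend[prefix_key(tokens)] = kv_state

    def longest_prefix(self, tokens):
        """Return (cached token count, cached state) for the longest cached prefix."""
        for end in range(len(tokens), 0, -1):
            state = self.backend.get(prefix_key(tokens[:end]))
            if state is not None:
                return end, state
        return 0, None


cache = PrefixKVCache()
cache.put([101, 7, 42], "kv-state-for-3-tokens")  # placeholder for real tensors

cached_len, state = cache.longest_prefix([101, 7, 42, 9, 13])
print(cached_len, state)  # only the last 2 tokens still need computing
```

With a storage-backed cache the capacity is bounded by SSD space rather than DRAM, which is the trade-off the paragraph above describes.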

Performance and Benchmark Results

DeepSeek has reported 3FS performance figures for three kinds of workload: training reads, large-scale sorting, and inference caching.

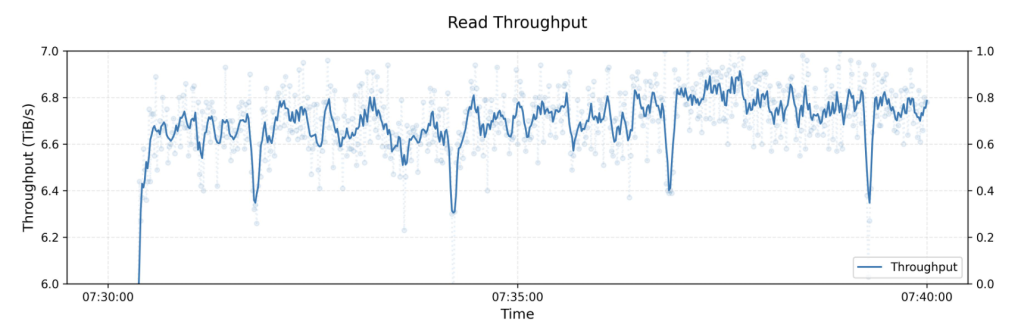

Training Performance on a 180-Node AI Cluster

- Achieved approximately 6.6 TiB/s aggregate read throughput across the 180-node cluster.

- Demonstrated efficient parallel data access, reducing storage-related bottlenecks.
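A quick back-of-the-envelope calculation puts the aggregate figure in per-node terms. It assumes, purely for illustration, that load is spread evenly across all 180 nodes.

```python
def per_node_gib_per_s(aggregate_tib_per_s, nodes):
    """Convert an aggregate TiB/s figure into GiB/s per node (1 TiB = 1024 GiB)."""
    return aggregate_tib_per_s * 1024 / nodes

rate = per_node_gib_per_s(6.6, 180)
print(f"{rate:.1f} GiB/s per node")  # 37.5 GiB/s per node
```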

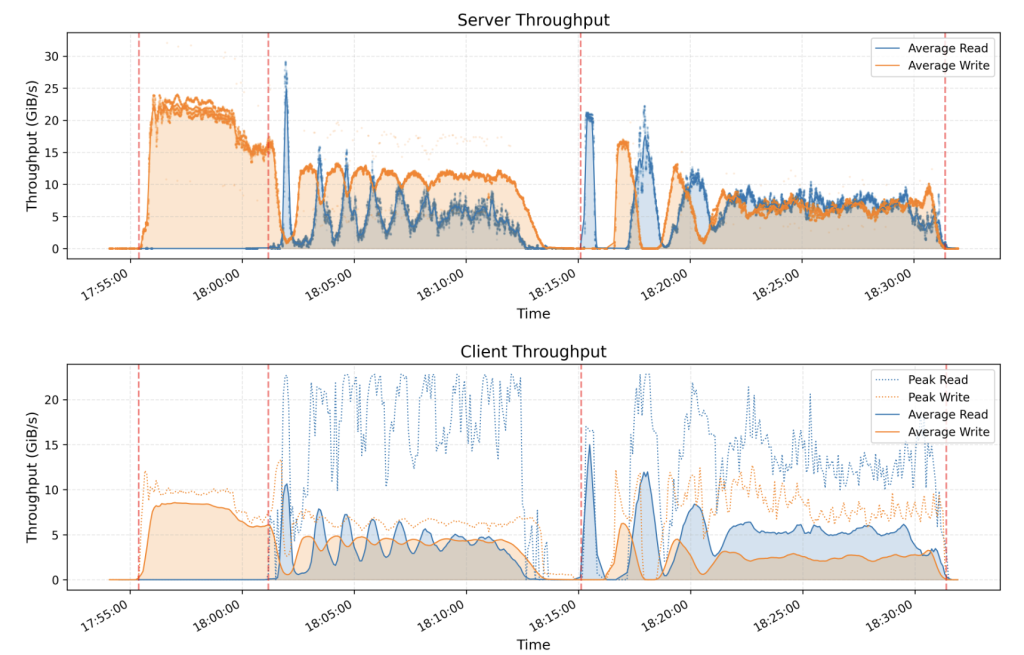

Sorting Performance (GraySort Benchmark)

- Sorted 110.5 TiB of data across 8,192 partitions in about 30 minutes.

- Achieved an average throughput of 3.66 TiB/min.
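The two GraySort figures can be cross-checked against each other, and the partition count gives a rough partition size. The helper names below are illustrative.

```python
def sort_minutes(total_tib, tib_per_min):
    """Duration implied by a data volume and an average throughput."""
    return total_tib / tib_per_min

def gib_per_partition(total_tib, partitions):
    """Average partition size in GiB (1 TiB = 1024 GiB)."""
    return total_tib * 1024 / partitions

minutes = sort_minutes(110.5, 3.66)
size = gib_per_partition(110.5, 8192)
print(f"{minutes:.1f} min")               # 30.2 min
print(f"{size:.1f} GiB per partition")    # 13.8 GiB per partition
```

The implied duration of roughly 30.2 minutes matches the reported run time of about 30 minutes.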

KVCache Performance for AI Inference

- Delivered peak read throughput of up to 40 GiB/s for KVCache lookups during inference.

- Managed dynamic cache memory allocation, optimizing performance in large-scale applications.

Applications and Use Cases

The Fire-Flyer File System (3FS) is designed to support a wide range of AI-driven applications, offering scalability and efficiency for various workloads.

Accelerating AI Model Training

With its high-throughput storage architecture, 3FS minimizes data transfer delays, reducing training times for large-scale AI models.

Enhancing Multi-Node Inference Workflows

By optimizing data retrieval with KVCache, 3FS improves response times in real-time AI applications, making it ideal for natural language processing, computer vision, and multimodal AI systems.

Scalability for AI Data Lakes

Designed for massive AI datasets, 3FS can efficiently scale across thousands of nodes, supporting next-generation AI architectures and large-scale machine learning pipelines.

Conclusion

DeepSeek AI’s Fire-Flyer File System (3FS) is a high-performance, AI-optimized distributed file system that addresses the storage challenges of modern AI applications. By leveraging disaggregated storage, strong consistency models, and high-speed caching mechanisms, 3FS significantly improves AI training and inference efficiency.

As AI models continue to evolve, the ability to efficiently store, retrieve, and process large datasets will be critical to advancing AI research and development. 3FS provides a scalable, high-performance solution, ensuring that AI applications can operate at full capacity without storage bottlenecks.

For organizations and researchers looking to enhance their AI infrastructure, 3FS presents a compelling alternative that balances performance, scalability, and reliability.

Check out the GitHub Repo. All credit for this research goes to the researchers of this project.
