Google Research Introduces TimesFM: A Game-Changing Forecasting Model

Time series forecasting is a core task in machine learning, with applications in finance, manufacturing, healthcare, and the natural sciences. Researchers from Google Research have introduced TimesFM, a forecasting model pre-trained on a large time-series corpus of 100 billion real-world time-points. Pre-training at this scale lets the model learn temporal patterns that carry over to datasets it has never seen, so it can make accurate predictions without additional training.

The Power of Pre-Training

TimesFM builds on recent advances in deep learning, particularly large language models (LLMs) for Natural Language Processing (NLP). LLMs are pre-trained on large text corpora and then applied to new tasks with little or no task-specific training. The researchers explore whether the same recipe, pre-training a single model on a large volume of time-series data, yields temporal patterns that transfer to accurate forecasts.


Traditionally, statistical methods like ARIMA and GARCH have been widely used for time series forecasting. More recently, deep learning models such as DeepAR, Temporal Convolutions, and N-BEATS have shown promising results, often outperforming traditional statistical methods. These are forecasting-specific neural networks rather than large language models, and they are typically trained on the dataset they are meant to forecast.

Architecture and Training

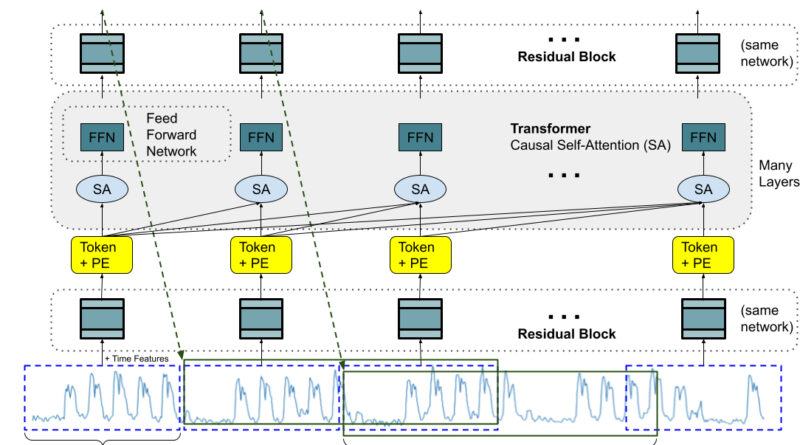

TimesFM’s architecture is a stacked transformer with a patched-decoder style attention mechanism. The design draws on patch-based modeling from long-horizon forecasting: the input series is split into contiguous patches, and each patch plays the role that a token plays in a language model. The model is trained in decoder-only fashion, so it learns to predict the future from different numbers of input patches in parallel. The training data combines real-world and synthetic series. Real-world data is sourced from platforms like Google Trends and Wiki Pageviews, while synthetic data is generated from statistical models such as ARIMA.
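The patching and decoder-only training described above can be sketched in a few lines of plain Python. This is a minimal illustration, not TimesFM's actual implementation: the function names, the patch lengths, and the use of non-overlapping patches are assumptions made for the example.

```python
from typing import List, Tuple


def make_patches(series: List[float], patch_len: int) -> List[List[float]]:
    """Split a series into consecutive non-overlapping patches.

    Any trailing points that do not fill a whole patch are dropped.
    """
    n_patches = len(series) // patch_len
    return [series[i * patch_len:(i + 1) * patch_len] for i in range(n_patches)]


def decoder_only_pairs(
    series: List[float], input_patch_len: int, output_patch_len: int
) -> List[Tuple[List[List[float]], List[float]]]:
    """Pair every prefix of input patches with the values that follow it.

    A decoder-only model emits one prediction per patch position, so all of
    these (prefix, target) pairs can be learned from in a single pass.
    """
    patches = make_patches(series, input_patch_len)
    pairs = []
    for k in range(1, len(patches) + 1):
        end = k * input_patch_len
        target = series[end:end + output_patch_len]
        if len(target) == output_patch_len:  # skip prefixes with no full target
            pairs.append((patches[:k], target))
    return pairs


def causal_mask(n_patches: int) -> List[List[bool]]:
    """mask[i][j] is True when patch i may attend to patch j (j <= i)."""
    return [[j <= i for j in range(n_patches)] for i in range(n_patches)]


# Hypothetical toy input: 12 points, patches of length 4.
toy_series = [float(i) for i in range(12)]
toy_pairs = decoder_only_pairs(toy_series, input_patch_len=4, output_patch_len=4)
```

With this toy input the sketch yields two training pairs: the first patch is paired with points 4 to 7, and the first two patches together are paired with points 8 to 11. The causal mask is what allows a transformer to learn from all such prefixes at once, since each patch position only sees the patches before it.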

Impressive Zero-Shot Performance

In experiments, TimesFM shows strong zero-shot forecasting performance: it forecasts datasets it was never trained on, without any fine-tuning. It does so with a modest footprint, at about 200M parameters and a pretraining corpus far smaller than those used for recent LLMs. In comparison to existing models, TimesFM outperforms specialized baselines and generalizes across multiple benchmark datasets from Darts, Monash, and Informer.
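To make the zero-shot setup concrete, the sketch below scores a forecaster on the held-out tail of a series without fitting anything on that series. The metric (MAE scaled by a last-value baseline) is an assumed choice for illustration, and `drift_forecast` is a hypothetical stand-in for a pre-trained model, not TimesFM itself.

```python
from typing import Callable, List, Sequence

Forecaster = Callable[[Sequence[float], int], List[float]]


def mae(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error between two equal-length sequences."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def last_value_forecast(context: Sequence[float], horizon: int) -> List[float]:
    """Naive baseline: repeat the last observed value."""
    return [context[-1]] * horizon


def drift_forecast(context: Sequence[float], horizon: int) -> List[float]:
    """Hypothetical stand-in for a pre-trained model: extend the average step."""
    step = (context[-1] - context[0]) / (len(context) - 1)
    return [context[-1] + step * (h + 1) for h in range(horizon)]


def zero_shot_scaled_mae(
    series: Sequence[float], horizon: int, forecaster: Forecaster
) -> float:
    """Score a forecaster on the last `horizon` points of a series.

    Nothing is fitted on `series`: the forecaster only receives the context.
    Values below 1.0 mean the forecaster beats the naive baseline. The
    baseline error must be non-zero for the ratio to be defined.
    """
    context, holdout = series[:-horizon], series[-horizon:]
    model_err = mae(holdout, forecaster(context, horizon))
    naive_err = mae(holdout, last_value_forecast(context, horizon))
    return model_err / naive_err


# Hypothetical toy series with a steady upward trend.
trend = [float(i) for i in range(1, 11)]
score = zero_shot_scaled_mae(trend, horizon=2, forecaster=drift_forecast)
```

Swapping `drift_forecast` for a call into a real pre-trained model would leave the evaluation loop unchanged, which is the practical appeal of zero-shot forecasting: the per-dataset training step disappears.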

Advantages of TimesFM

TimesFM marks a notable step for time series forecasting. Its architecture, which combines a patched-decoder attention mechanism with decoder-only training, underpins its zero-shot forecasting performance. By training on a wide corpus of synthetic and real-world data, TimesFM demonstrates the potential of large pre-trained models for accurate and efficient time series forecasting.
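The synthetic side of such a corpus, which the article describes as generated from statistical models such as ARIMA, can be illustrated with a small simulator. This is a sketch under stated assumptions: the function names, coefficients, and Gaussian noise are chosen for the example and do not reflect the actual TimesFM data pipeline.

```python
import random
from typing import List, Sequence


def simulate_arma(
    n: int,
    ar: Sequence[float],
    ma: Sequence[float],
    noise_std: float = 1.0,
    seed: int = 0,
) -> List[float]:
    """Simulate x_t = sum_i ar[i] * x_{t-1-i} + eps_t + sum_j ma[j] * eps_{t-1-j}.

    Terms that would reach before the start of the series are skipped.
    """
    rng = random.Random(seed)  # local generator keeps the output reproducible
    values: List[float] = []
    shocks: List[float] = []
    for t in range(n):
        eps = rng.gauss(0.0, noise_std)
        ar_part = sum(
            ar[i] * values[t - 1 - i] for i in range(len(ar)) if t - 1 - i >= 0
        )
        ma_part = sum(
            ma[j] * shocks[t - 1 - j] for j in range(len(ma)) if t - 1 - j >= 0
        )
        values.append(ar_part + eps + ma_part)
        shocks.append(eps)
    return values


def integrate(series: Sequence[float], start: float = 0.0) -> List[float]:
    """Cumulative sum, i.e. the 'I' step of ARIMA with differencing order 1."""
    out: List[float] = []
    level = start
    for x in series:
        level += x
        out.append(level)
    return out


# Hypothetical ARIMA(1, 1, 1)-style series: an ARMA(1, 1) process, integrated once.
synthetic = integrate(simulate_arma(200, ar=[0.6], ma=[0.3], seed=42))
```

Varying the coefficients, the noise level, and the seed produces many distinct series cheaply, which is one reason synthetic data is a convenient complement to real-world sources in a pre-training corpus.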

The advantages of TimesFM can be summarized as follows:

- Accurate Predictions: TimesFM’s pre-training on a large time-series corpus enables it to capture valuable temporal patterns, leading to accurate predictions on previously unseen datasets.

- Efficiency: The model reaches this accuracy with a comparatively small parameter count and pretraining corpus, which keeps it cheaper to train and run than larger models.

- Generalization: TimesFM outperforms specialized baselines across multiple datasets, showcasing its ability to generalize and adapt to diverse time series scenarios.

- Reduced Data Requirements: Because the model is already pre-trained on massive amounts of data, users need little or no task-specific training data, making TimesFM a cost-effective solution.

Future Directions and Applications

The introduction of TimesFM opens up exciting possibilities for future research and applications in the field of time series forecasting. By leveraging the power of large pre-trained models, researchers and practitioners can explore novel approaches to tackle forecasting challenges in various domains.

Some potential future directions and applications include:

- Anomaly Detection: TimesFM’s forecasts can serve as a baseline for expected behavior, so that observations deviating sharply from the forecast are flagged as abnormal patterns or potential issues.

- Demand Forecasting: Industries such as retail and e-commerce can benefit from TimesFM’s accurate predictions to optimize inventory management and meet customer demands.

- Financial Predictions: Financial institutions can leverage TimesFM to forecast stock prices, exchange rates, and other financial indicators, assisting in informed decision-making and risk management.

- Energy Optimization: TimesFM’s forecasting capabilities can be applied to optimize energy consumption and production, enabling more efficient utilization of renewable energy sources.
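As one concrete reading of the anomaly detection idea in the list above, the sketch below flags observations whose forecast residual is unusually large. The function name, the robust z-score based on the median absolute deviation, and the threshold of 3 are all assumptions for illustration. The forecasts could come from any model and are passed in as plain numbers here.

```python
import statistics
from typing import List, Sequence


def flag_anomalies(
    actual: Sequence[float], forecast: Sequence[float], threshold: float = 3.0
) -> List[int]:
    """Return indices where the forecast residual is an outlier.

    Residuals are scored with a robust z-score: distance from the median
    residual, divided by 1.4826 * MAD (median absolute deviation).
    """
    residuals = [a - f for a, f in zip(actual, forecast)]
    center = statistics.median(residuals)
    mad = statistics.median([abs(r - center) for r in residuals])
    scale = 1.4826 * mad
    if scale == 0:
        # Degenerate case: at least half the residuals are identical,
        # so anything that differs from the median is flagged.
        return [i for i, r in enumerate(residuals) if r != center]
    return [
        i for i, r in enumerate(residuals) if abs(r - center) / scale > threshold
    ]


# Hypothetical observations and forecasts; index 4 contains a spike.
observed = [10, 11, 10, 12, 30, 11, 10]
predicted = [10, 10, 10, 10, 10, 10, 10]
anomalies = flag_anomalies(observed, predicted)  # -> [4]
```

Using the median and MAD rather than the mean and standard deviation keeps a single large spike from inflating the scale and hiding itself.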

In conclusion, Google Research’s introduction of TimesFM represents a groundbreaking advancement in time series forecasting. The model’s ability to learn temporal patterns from a large pre-training corpus of 100 billion real-world time-points sets a new standard for accuracy and efficiency. With its unique architecture and exceptional zero-shot performance, TimesFM paves the way for future innovations in time series forecasting, opening up new opportunities across various industries.
