Harnessing Ray Diffusion for Enhanced 3D Reconstruction: CMU Researchers Unveil Groundbreaking AI Method for Camera Pose Estimation

Introduction

Recovering high-fidelity 3D representations from only a handful of images depends on accurate camera poses, and estimating those poses from sparse views has long been difficult. Classical structure-from-motion (SfM) pipelines match features across many overlapping images and often fail when only a few views are available, which has pushed researchers toward learning-based approaches. One recent approach represents each camera as a bundle of rays and predicts those rays with a diffusion model ("ray diffusion") to improve sparse-view pose estimation and, in turn, 3D reconstruction.

In this article, we look at this method, proposed by researchers at Carnegie Mellon University (CMU), covering its core ideas, why it helps, and where it might be applied.

The Limitations of Traditional Camera Pose Estimation

Most existing pose estimation methods represent each camera globally: an extrinsic matrix made of a rotation and a translation, often paired with an intrinsic matrix for focal length and principal point. This compact parametrization underpins much of 3D reconstruction, but methods built on it often struggle when only sparse views are available, and the resulting pose estimates are frequently inaccurate.
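For reference, the global parametrization these methods use is the standard pinhole model. A homogeneous world point X projects to a homogeneous pixel x through the intrinsic matrix K and the extrinsic rotation R and translation t. K holds the focal lengths f_x, f_y and the principal point (u_0, v_0), and c is the camera center in world coordinates. The symbols are our own notation, not necessarily the paper's.

```latex
\mathbf{x} \simeq K \,[\, R \mid \mathbf{t} \,]\, \mathbf{X},
\qquad
K = \begin{bmatrix} f_x & 0 & u_0 \\ 0 & f_y & v_0 \\ 0 & 0 & 1 \end{bmatrix},
\qquad
\mathbf{c} = -R^{\top}\mathbf{t}
```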

To address these limitations, the CMU researchers propose a different camera parametrization. Instead of a single global pose, each camera is described by a bundle of rays, and a diffusion model learns to predict those rays, with the aim of making pose estimates more precise.

Introducing Ray Diffusion for Camera Pose Estimation

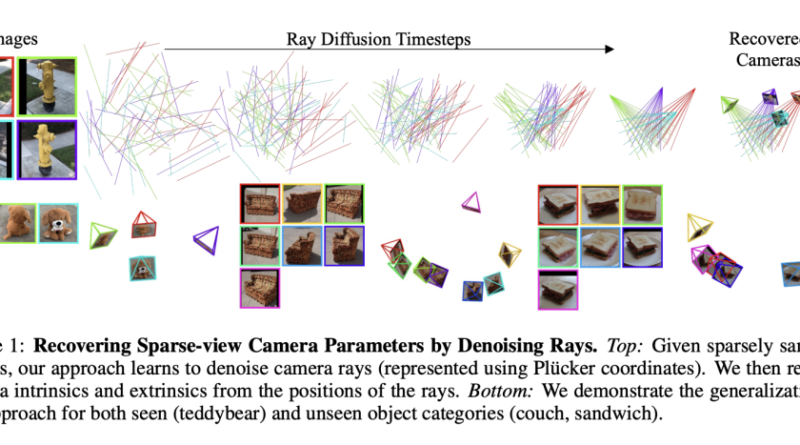

Rather than regressing one global rotation and translation per image, the method predicts one ray for each image patch: the ray that runs from the camera through that patch. The full set of patch rays forms a more granular, distributed representation of the camera pose.
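Here is a minimal NumPy sketch of the patch-wise idea. It turns a known pinhole camera into one ray per patch. The paper describes rays with Plücker coordinates: a unit direction d and a moment m = c × d. Everything else is our own assumption for illustration and not the authors' code: the helper names `patch_centers` and `camera_to_rays`, the 16 × 16 patch grid, and the world-to-camera convention.

```python
import numpy as np

def patch_centers(height, width, grid):
    """Pixel (u, v) centers of a grid x grid layout of image patches."""
    xs = (np.arange(grid) + 0.5) * width / grid
    ys = (np.arange(grid) + 0.5) * height / grid
    u, v = np.meshgrid(xs, ys)
    return np.stack([u.ravel(), v.ravel()], axis=1)  # (grid * grid, 2)

def camera_to_rays(K, R, t, pixels):
    """One Plücker ray [direction, moment] per pixel, shape (N, 6).

    Assumes the world-to-camera convention x_cam = R @ X_world + t.
    """
    center = -R.T @ t  # camera center in world coordinates
    homog = np.concatenate([pixels, np.ones((len(pixels), 1))], axis=1)
    dirs = (R.T @ np.linalg.inv(K) @ homog.T).T  # back-project to world directions
    dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)
    moments = np.cross(center, dirs)  # m = c x d, identical for any point c on the ray
    return np.concatenate([dirs, moments], axis=1)

# Example: a 64 x 64 image split into 16 x 16 patches gives 256 rays.
K = np.array([[100.0, 0.0, 32.0], [0.0, 100.0, 32.0], [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([0.0, 0.0, 2.0])
rays = camera_to_rays(K, R, t, patch_centers(64, 64, 16))
print(rays.shape)  # (256, 6)
```

Rays computed this way from ground-truth cameras could serve as training targets. At test time, a network has to predict them from the images alone.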

This representation fits naturally with transformer-based models, which already operate on sets of features extracted from image patches. Each output token can be decoded into the ray for its own patch, so every prediction stays tied to local image evidence, which the researchers credit for the more accurate pose estimates.
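The sketch below shows why a set-based transformer suits this representation. Patch tokens from several images are processed jointly by self-attention, and a linear head decodes each output token into a 6-D ray. It is a single attention layer with random weights, written in NumPy. The weight names, the ray head, and the sizes are hypothetical, and a real model would add positional and per-image embeddings, multiple heads, and many layers.

```python
import numpy as np

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a set of tokens (N, D)."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # softmax over all tokens
    return weights @ v

rng = np.random.default_rng(0)
num_images, patches_per_image, dim = 3, 16, 32
# Hypothetical patch features from all input images, pooled into one set of tokens.
tokens = rng.normal(size=(num_images * patches_per_image, dim))
w_q, w_k, w_v = (rng.normal(size=(dim, dim)) / np.sqrt(dim) for _ in range(3))
w_ray = rng.normal(size=(dim, 6)) / np.sqrt(dim)  # hypothetical linear ray head

out = self_attention(tokens, w_q, w_k, w_v)
ray_preds = out @ w_ray  # one 6-D ray per patch, across all images

# Reordering the patches only reorders the outputs (permutation equivariance).
perm = rng.permutation(len(tokens))
out_perm = self_attention(tokens[perm], w_q, w_k, w_v)
print(np.allclose(out_perm, out[perm]))  # True
```

Plain attention is permutation-equivariant. A real model therefore needs positional and image-index embeddings so that each token knows which patch and which image it came from.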

Translating Rays into Extrinsic and Intrinsic Parameters

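A key property of the method is that a collection of predicted rays can be converted back into classical camera extrinsic and intrinsic parameters. The camera center is the point where the rays (approximately) intersect. The rotation and intrinsics follow from how the ray directions relate to the pixel locations of their patches. Because a ray bundle is a more general description than a pinhole model, the representation can also describe non-perspective cameras, which widens the range of scenarios it applies to.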
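The conversion from rays back to a pinhole camera can be sketched in NumPy. The camera center is taken as the least-squares point closest to all rays. For rotation and intrinsics, the sketch fits a 3 × 3 matrix P = K R that maps ray directions to patch pixel locations using the direct linear transform (DLT), then splits P with an RQ decomposition. This follows the general idea of recovering a camera from its rays. The exact least-squares objective, the function names, and the conventions (world-to-camera x_cam = R X + t, unit directions) are our assumptions and may differ from the authors' implementation. The sketch only covers perspective cameras.

```python
import numpy as np

def rays_to_center(rays):
    """Least-squares point closest to all rays: the camera center.

    rays: (N, 6) Plücker coordinates [d, m] with unit directions d.
    """
    d, m = rays[:, :3], rays[:, 3:]
    points = np.cross(d, m)  # point on each ray closest to the origin
    A, b = np.zeros((3, 3)), np.zeros(3)
    for di, pi in zip(d, points):
        proj = np.eye(3) - np.outer(di, di)  # projector orthogonal to the ray
        A += proj
        b += proj @ pi
    return np.linalg.solve(A, b)

def rq3(M):
    """RQ decomposition of a 3x3 matrix built from NumPy's QR."""
    flip = np.flipud(np.eye(3))
    q, r = np.linalg.qr((flip @ M).T)
    return flip @ r.T @ flip, flip @ q.T  # (upper-triangular, orthogonal)

def rays_to_rotation_and_intrinsics(rays, pixels):
    """Fit P = K @ R with pixel ~ P @ d (DLT), then split P with RQ."""
    rows = []
    for (x, y), di in zip(pixels, rays[:, :3]):
        zero = np.zeros(3)
        rows.append(np.concatenate([di, zero, -x * di]))
        rows.append(np.concatenate([zero, di, -y * di]))
    _, _, vt = np.linalg.svd(np.asarray(rows))
    P = vt[-1].reshape(3, 3)  # null vector of the DLT system, known up to scale
    if np.linalg.det(P) < 0:  # pick the sign with det(P) > 0 so R is a proper rotation
        P = -P
    K, R = rq3(P)
    signs = np.diag(np.sign(np.diag(K)))  # make K's diagonal positive
    K, R = K @ signs, signs @ R
    return K / K[2, 2], R

# Synthesize rays from a known camera (x_cam = R X + t), then recover it.
K_true = np.array([[120.0, 0.0, 32.0], [0.0, 110.0, 30.0], [0.0, 0.0, 1.0]])
a = 0.3
R_true = np.array([[np.cos(a), 0.0, np.sin(a)], [0.0, 1.0, 0.0], [-np.sin(a), 0.0, np.cos(a)]])
t_true = np.array([0.1, -0.2, 2.0])
u, v = np.meshgrid(np.arange(4.0, 64.0, 8.0), np.arange(4.0, 64.0, 8.0))
pixels = np.stack([u.ravel(), v.ravel()], axis=1)  # patch centers
homog = np.concatenate([pixels, np.ones((len(pixels), 1))], axis=1)
dirs = (R_true.T @ np.linalg.inv(K_true) @ homog.T).T
dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)
center_true = -R_true.T @ t_true
rays = np.concatenate([dirs, np.cross(center_true, dirs)], axis=1)

center = rays_to_center(rays)
K, R = rays_to_rotation_and_intrinsics(rays, pixels)
t = -R @ center  # translation from the rotation and the camera center
```

With noisy network predictions, the same least-squares and DLT steps still return a best-fit camera, which is one reason this kind of over-parametrized output is convenient.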

According to the researchers, even a deterministic version of the model improves substantially over existing state-of-the-art pose prediction methods. That version is a patch-based transformer that directly regresses the rays, which indicates that the distributed ray representation on its own contributes to the higher accuracy.

Addressing Ambiguities with Denoising Diffusion

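Sparse-view pose estimation is inherently ambiguous. For example, a symmetric object seen from only a few views can be consistent with several different camera configurations. To handle this, the researchers train a denoising diffusion model over the rays instead of only regressing them. The model starts from noise and iteratively denoises a ray bundle, which lets it sample one of several plausible modes of the distribution rather than averaging them into a single, possibly implausible, answer. This further improves the accuracy of camera pose estimation.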
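The denoising-diffusion idea can be sketched with a standard DDPM sampler operating on a (patches × 6) ray tensor. The sketch assumes a linear noise schedule with 100 steps and a network that predicts the clean rays x0 from the noisy ones. Both are common DDPM choices, not details confirmed from the paper. The trained transformer is replaced by a hypothetical `toy_denoiser` that simply snaps to the nearer of two equally plausible ray sets. That is enough to show how sampling commits to one mode.

```python
import numpy as np

def make_schedule(num_steps=100, beta_start=1e-4, beta_end=0.02):
    """Linear DDPM noise schedule (an assumed choice)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    return betas, alphas, np.cumprod(alphas)

def add_noise(x0, t, alpha_bars, rng):
    """Forward process: sample x_t ~ q(x_t | x_0) for a clean ray tensor x0."""
    noise = rng.normal(size=x0.shape)
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * noise

def sample(denoise_fn, shape, betas, alphas, alpha_bars, rng):
    """Ancestral DDPM sampling; denoise_fn(x_t, t) returns a prediction of x0."""
    x = rng.normal(size=shape)  # start from pure noise
    for t in reversed(range(len(betas))):
        x0_hat = denoise_fn(x, t)
        ab_prev = alpha_bars[t - 1] if t > 0 else 1.0
        # Mean of the Gaussian posterior q(x_{t-1} | x_t, x0_hat).
        coef_x0 = np.sqrt(ab_prev) * betas[t] / (1.0 - alpha_bars[t])
        coef_xt = np.sqrt(alphas[t]) * (1.0 - ab_prev) / (1.0 - alpha_bars[t])
        x = coef_x0 * x0_hat + coef_xt * x
        if t > 0:  # add posterior noise on every step except the last
            var = betas[t] * (1.0 - ab_prev) / (1.0 - alpha_bars[t])
            x = x + np.sqrt(var) * rng.normal(size=shape)
    return x

betas, alphas, alpha_bars = make_schedule()
rng = np.random.default_rng(0)
mode_a = rng.normal(size=(16, 6))  # two hypothetical, equally plausible ray sets
mode_b = -mode_a

def toy_denoiser(x_t, t):
    """Hypothetical stand-in for the trained network: predict the nearer mode."""
    dist_a = np.sum((x_t - mode_a) ** 2)
    dist_b = np.sum((x_t - mode_b) ** 2)
    return mode_a if dist_a < dist_b else mode_b

noisy = add_noise(mode_a, t=50, alpha_bars=alpha_bars, rng=rng)  # a training-style input
samples = [
    sample(toy_denoiser, mode_a.shape, betas, alphas, alpha_bars, np.random.default_rng(seed))
    for seed in range(8)
]
```

Each sample ends on one of the two modes. A model trained with a plain L2 regression loss on such ambiguous data would instead tend to predict their average. Here that average is an all-zero ray set that matches neither mode.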

By combining the ray-based camera representation with denoising diffusion, the method estimates camera poses accurately even in challenging sparse-view scenarios. Its accuracy and robustness make it a promising direction for 3D reconstruction.

Evaluating Performance on the CO3D Dataset

To validate the method, the researchers evaluated it on the CO3D (Common Objects in 3D) dataset. They measured performance on object categories seen during training and on held-out categories, and also tested how well the method generalizes to datasets not used for training at all.

In these evaluations, ray diffusion estimated camera poses more accurately than the methods it was compared against. The advantage was most pronounced in sparse-view settings, where traditional methods often fall short.
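Sparse-view pose papers commonly report rotation accuracy as the fraction of camera pairs whose relative rotation error falls below a threshold, with 15° a frequent choice. Comparing relative rotations makes the score independent of the arbitrary world frame. The sketch below shows a metric of that kind. The threshold and function names are assumptions, and it is not a reproduction of the paper's exact evaluation protocol or results.

```python
import itertools
import numpy as np

def rotation_angle_deg(R):
    """Geodesic angle (degrees) of a rotation matrix."""
    cos = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    return np.degrees(np.arccos(cos))

def relative_rotation_accuracy(R_pred, R_gt, threshold_deg=15.0):
    """Fraction of camera pairs whose relative rotation error is below the threshold."""
    hits = []
    for i, j in itertools.combinations(range(len(R_gt)), 2):
        rel_pred = R_pred[j] @ R_pred[i].T
        rel_gt = R_gt[j] @ R_gt[i].T
        hits.append(rotation_angle_deg(rel_pred.T @ rel_gt) < threshold_deg)
    return float(np.mean(hits))

def rot_y(deg):
    a = np.radians(deg)
    return np.array([[np.cos(a), 0.0, np.sin(a)], [0.0, 1.0, 0.0], [-np.sin(a), 0.0, np.cos(a)]])

def rot_z(deg):
    a = np.radians(deg)
    return np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])

R_gt = [rot_y(0), rot_y(40), rot_y(80)]
# A global change of world frame leaves every relative rotation unchanged.
R_pred = [R @ rot_z(25) for R in R_gt]
acc_gauge = relative_rotation_accuracy(R_pred, R_gt)
# Perturbing one camera by 30 degrees breaks the two pairs that involve it.
R_pred[0] = rot_z(30) @ R_pred[0]
acc_perturbed = relative_rotation_accuracy(R_pred, R_gt)
```

Here `acc_gauge` is 1.0 and `acc_perturbed` is 1/3, because only the pair of unperturbed cameras stays within the threshold.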

The Implications of Ray Diffusion for 3D Reconstruction

This new camera parametrization opens up new possibilities for 3D representation and pose estimation. It replaces a single global pose with a more detailed, ray-based description that is tightly coupled to image features, which may benefit future work in the field.

Ray diffusion outperforms both traditional and learning-based pose prediction techniques. This suggests that over-parametrized, distributed representations can be easier for neural networks to learn than compact global ones. The result is relevant to applications in computer vision, robotics, augmented reality, and virtual reality.

Conclusion

The method from CMU researchers uses ray diffusion to improve camera pose estimation and, with it, 3D reconstruction. It predicts one ray per image patch and samples those rays with a denoising diffusion model. This approach achieves high accuracy and robustness, even in challenging sparse-view scenarios.

Treating cameras as bundles of rays rather than as single global poses is a notable change in how cameras are parametrized, and it gives models a more detailed description of camera pose to learn. Its implications may extend beyond 3D reconstruction to other problems in computer vision and artificial intelligence.

As research in AI and computer vision advances, ray-based camera representations such as this one are likely to inform further work on pose estimation. The approach points to a promising future for sparse-view 3D reconstruction.
