How Microsoft’s VASA-1 AI App Animates Faces in Photos to Talk and Sing

The field of artificial intelligence (AI) has made significant advancements in recent years, particularly in the realm of generating lifelike talking faces. These AI-generated faces have the potential to revolutionize various industries and domains, including digital communication, education, healthcare, and accessibility. Among the notable advancements in this field is the introduction of VASA-1 by researchers at Microsoft.

Understanding the Importance of Talking Face Generation

Within multimedia and communication contexts, the human face serves as a dynamic medium capable of expressing emotions and fostering connections. AI-generated talking faces represent an advancement with potential implications across various domains. These include enhancing digital communication, improving accessibility for individuals with communicative impairments, revolutionizing education through AI tutoring, and offering therapeutic and social support in healthcare settings. This technology stands to enrich human-AI interactions and reshape diverse fields.

Current Limitations in Talking Face Generation

Numerous approaches have emerged for creating talking faces from audio, yet current techniques fall short of the authenticity of natural speech. While lip synchronization accuracy has improved, expressive facial dynamics and lifelike nuances receive inadequate attention, resulting in rigid and unconvincing generated faces. Some studies address realistic head motion, but a significant gap remains compared to human movement patterns. Generation efficiency also matters for real-time applications, yet the heavy computational demands of existing methods limit their practicality.

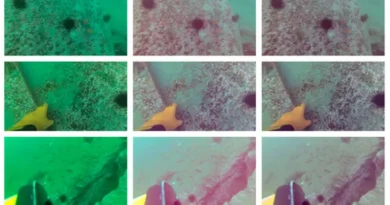

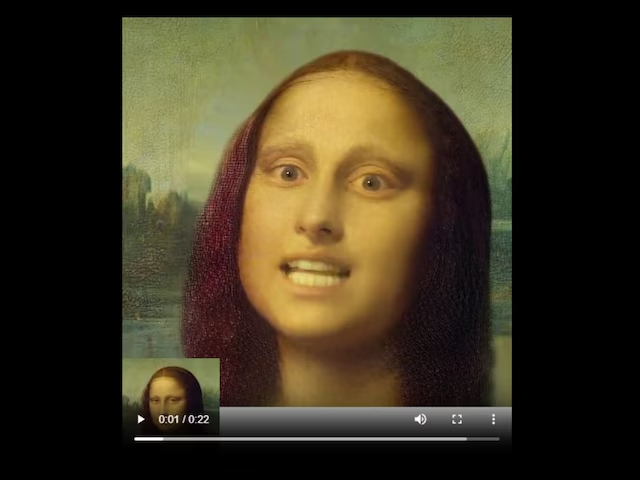

VASA-1: A Breakthrough in Talking Face Generation

Microsoft researchers introduce VASA, a framework for generating lifelike talking faces with appealing visual affective skills (VAS) from a single static image and a speech audio clip. Their premier model, VASA-1, achieves precise lip synchronization and captures a broad range of facial nuances and natural head movements, enhancing authenticity and liveliness. Two key innovations make this possible: a diffusion-based model that generates holistic facial dynamics and head movements within a face latent space, and the construction of that expressive, disentangled face latent space from videos.

How VASA-1 Works

VASA aims to generate a lifelike video of a given face speaking the provided audio. It targets four qualities: clear image frames, precise lip sync, expressive facial dynamics, and natural head poses. The method first constructs a face latent space, then uses a diffusion transformer to generate holistic facial dynamics and head motion in that space, conditioned on audio. Optional conditioning signals such as gaze direction, supplied alongside the audio features, make the output more controllable. At inference, appearance and identity features are extracted from the input image, a motion sequence is generated from the audio, and the two are combined to produce the final video.
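The data flow described above can be illustrated with a toy sketch. This is not Microsoft's implementation: every name here (`FaceLatents`, `MotionLatent`, `encode_face`, `generate_motion`, `render`) is hypothetical, the "denoiser" is a simple interpolation rather than a trained diffusion transformer, and it works frame by frame instead of over a whole sequence. It only shows the three stages: extract static codes from the photo, generate motion latents conditioned on audio (and optionally gaze), then combine them into frames.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class FaceLatents:
    """Static codes extracted once from the source photo (hypothetical layout)."""
    appearance: List[float]
    identity: List[float]


@dataclass
class MotionLatent:
    """Per-frame motion code (hypothetical layout)."""
    facial_dynamics: List[float]
    head_pose: List[float]  # assumed to be 3 numbers, e.g. yaw, pitch, roll


def encode_face(image: Sequence[float]) -> FaceLatents:
    """Stand-in encoder: splits the input values into two static codes."""
    half = len(image) // 2
    return FaceLatents(list(image[:half]), list(image[half:]))


def generate_motion(
    audio_features: Sequence[Sequence[float]],
    gaze: Optional[Sequence[float]] = None,
    steps: int = 8,
    seed: int = 0,
) -> List[MotionLatent]:
    """Toy stand-in for audio-conditioned diffusion in the motion latent space."""
    rng = random.Random(seed)
    gaze_cond = list(gaze) if gaze is not None else [0.0, 0.0]
    motions = []
    for frame_audio in audio_features:
        # Conditioning vector: audio features plus the optional gaze signal.
        cond = list(frame_audio) + gaze_cond
        # Start from Gaussian noise, as a diffusion sampler would.
        x = [rng.gauss(0.0, 1.0) for _ in cond]
        for t in range(steps):
            # Toy denoising step: move part of the way toward the condition.
            # A real model would predict this update with a trained network.
            weight = 1.0 / (steps - t)
            x = [xi + weight * (ci - xi) for xi, ci in zip(x, cond)]
        motions.append(MotionLatent(facial_dynamics=x[:-3], head_pose=x[-3:]))
    return motions


def render(face: FaceLatents, motions: Sequence[MotionLatent]) -> List[List[float]]:
    """Stand-in decoder: one 'frame' per motion latent."""
    return [
        face.appearance + face.identity + m.facial_dynamics + m.head_pose
        for m in motions
    ]


face = encode_face([0.1, 0.2, 0.3, 0.4])
audio = [[0.0] * 4, [1.0] * 4, [0.5] * 4]  # three frames of made-up audio features
frames = render(face, generate_motion(audio, gaze=[0.2, -0.1]))
print(len(frames), "frames generated")
```

The point of the split is that the photo is encoded only once, while the motion generator never sees pixels at all; it works purely in the latent space and is steered by the conditioning signals.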

Advantages of VASA-1: A Comparative Analysis

The researchers compared VASA-1 with existing audio-driven talking face generation techniques such as MakeItTalk, Audio2Head, and SadTalker. The results show VASA-1 outperforming these methods across metrics on the VoxCeleb2 and OneMin-32 benchmarks. It achieved higher audio-lip synchronization scores, better pose alignment, and a lower Fréchet Video Distance (FVD). Because FVD measures how far generated videos are from real ones, a lower value indicates higher quality and realism than the compared methods.
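To give a feel for what a Fréchet-style metric measures, here is a simplified sketch. FVD proper compares the distributions of features that a pretrained video network extracts from real and generated clips, using full covariance matrices. The version below assumes diagonal covariances so it can run on the standard library alone, and the feature values are made up for illustration. The function name `frechet_distance_diag` is hypothetical.

```python
import math
from statistics import fmean, pvariance
from typing import Sequence


def frechet_distance_diag(
    real_feats: Sequence[Sequence[float]],
    gen_feats: Sequence[Sequence[float]],
) -> float:
    """Squared Fréchet distance between two Gaussians fitted per dimension.

    Simplifying assumption: covariances are diagonal, so each dimension
    contributes (mean difference)^2 + (std difference)^2.
    """
    dims = len(real_feats[0])
    total = 0.0
    for d in range(dims):
        real_col = [f[d] for f in real_feats]
        gen_col = [f[d] for f in gen_feats]
        mean_term = (fmean(real_col) - fmean(gen_col)) ** 2
        std_term = (
            math.sqrt(pvariance(real_col)) - math.sqrt(pvariance(gen_col))
        ) ** 2
        total += mean_term + std_term
    return total


# Made-up 2-D "features" for two real and two generated clips.
real = [[0.0, 0.0], [2.0, 2.0]]
generated = [[1.0, 1.0], [3.0, 3.0]]
print(frechet_distance_diag(real, real))       # identical distributions
print(frechet_distance_diag(real, generated))  # same spread, shifted means
```

Identical feature distributions give a distance of zero, and the value grows as the generated features drift away from the real ones in mean or spread, which is why lower is better.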

Impact and Future Applications

The introduction of VASA-1 has significant implications for a wide range of applications. In the field of communication, this technology can revolutionize video calling by enabling lifelike avatars that accurately replicate facial expressions and lip-sync with audio. In education, AI-driven tutoring systems can utilize VASA-1 to create interactive virtual instructors that enhance the learning experience. In healthcare, lifelike talking faces can provide therapeutic and social support for patients, particularly those with communicative impairments. Furthermore, VASA-1 can greatly improve accessibility for individuals with disabilities by enabling better communication and interaction. These advancements point towards a future where AI-generated talking faces seamlessly merge with human-human and human-AI interactions, transforming the way we communicate and engage with technology.

Conclusion

Microsoft researchers have introduced VASA-1, the first model built on the VASA framework for generating lifelike, audio-driven talking faces. It produces precise lip synchronization, expressive facial dynamics, and natural head movements from a single static image and an audio clip. VASA-1 surpasses existing methods in both video quality and performance efficiency, showcasing promising visual affective skills in generated face videos. With its potential implications in communication, education, healthcare, and accessibility, VASA-1 paves the way for enhanced human-AI interactions and advances in various domains.

It is important to note that while the technology behind VASA-1 offers innovative possibilities, it also raises ethical concerns regarding the potential misuse of AI-generated content. Ethical considerations and responsible use of these advancements are crucial to ensure that they bring a positive impact and do not undermine trust and integrity in society. As researchers continue to push the boundaries of AI-generated talking faces, it is essential to strike a balance between innovation and ethical practices for a better future.
