Theory of Mind in AI: Comparing GPT-4 and LLaMA-2 with Human Intelligence

Theory of mind, the ability to attribute mental states to oneself and others, is fundamental to human social interaction. As large language models (LLMs) continue to advance, interest is growing in how their theory of mind abilities compare with those of humans. This article compares the theory of mind capabilities of GPT-4 and LLaMA-2, with GPT-3.5 as an additional reference point, against human performance.

Understanding Theory of Mind

Theory of mind refers to the ability to understand and attribute mental states, such as beliefs, desires, and intentions, to oneself and others. It lets people navigate social interactions, predict behavior, and make sense of what others do. Humans begin developing it early in childhood, learning that other people have thoughts, emotions, and perspectives that may differ from their own.

Evaluating Theory of Mind Abilities in LLMs

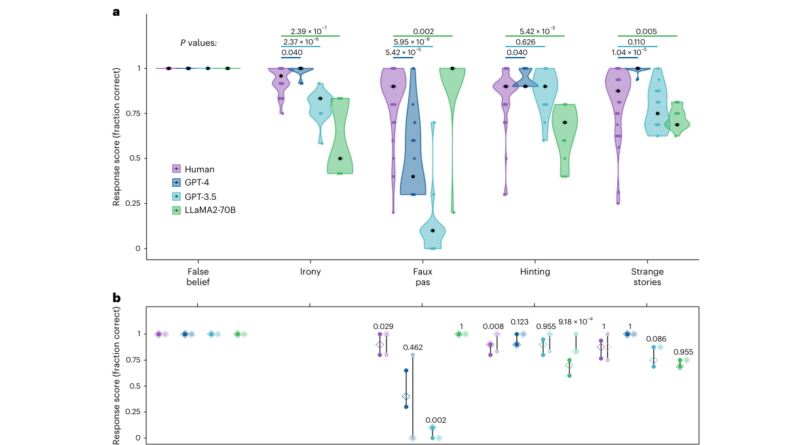

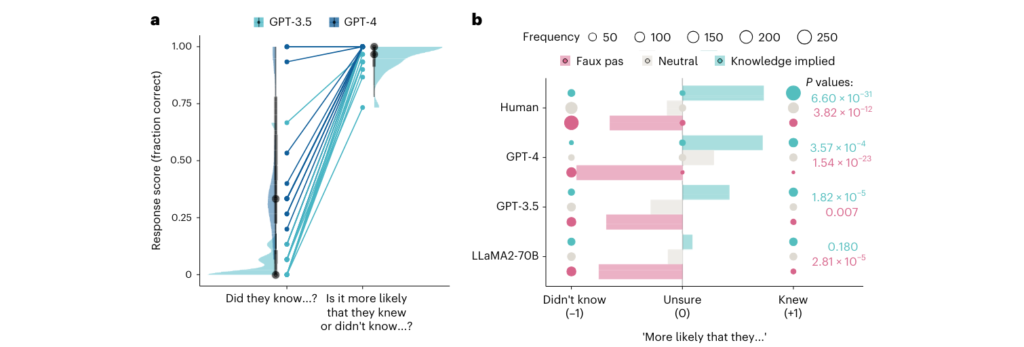

To assess theory of mind in LLMs such as GPT-4 and LLaMA-2, researchers compared the models’ performance with that of human participants on a battery of tests. The tests are adapted from psychological experiments and range from basic false belief understanding to more complex interpretation of social situations.

Hinting Task

The hinting task is a common test of theory of mind. It presents a scenario in which one person drops hints to convey a message to another person without stating it explicitly, and the test-taker must work out what the speaker really means. GPT-4 in particular has interpreted these hints well, often surpassing human performance. This suggests the model can recover an intended meaning that is only implied.

False Belief Task

The false belief task is another crucial test in theory of mind evaluations. It assesses whether an individual understands that someone else can hold a false belief about a situation, even when the individual knows the true state of affairs. GPT-4, GPT-3.5, and LLaMA-2 were each run through this test multiple times, and their performance was compared against that of human participants. The models show some understanding of false beliefs, but they can struggle when a scenario is uncertain and tend to answer cautiously.
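To make the setup concrete, here is a minimal Python sketch of an unexpected-transfer style false belief item and a naive scorer. The vignette, the `FalseBeliefItem` class, and the keyword-matching rule are all illustrative assumptions; they are not the materials or the scoring protocol used in the research discussed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FalseBeliefItem:
    """A hypothetical unexpected-transfer item (illustrative only)."""
    story: str
    question: str
    believed_location: str  # where the character falsely believes the object is
    actual_location: str    # where the object really is


def score_response(item: FalseBeliefItem, response: str) -> int:
    """Return 1 if the response names the believed location and not the actual one.

    This is a crude keyword check; a real protocol would rely on human
    raters or a more careful rubric.
    """
    text = response.lower()
    names_belief = item.believed_location.lower() in text
    names_reality = item.actual_location.lower() in text
    return int(names_belief and not names_reality)


# Hypothetical vignette written for this sketch.
ITEM = FalseBeliefItem(
    story=(
        "Maya puts her keys in the drawer and leaves the room. "
        "While she is away, Tom moves the keys to the shelf."
    ),
    question="Where will Maya look for her keys when she returns?",
    believed_location="drawer",
    actual_location="shelf",
)

if __name__ == "__main__":
    print(score_response(ITEM, "She will look in the drawer."))
    print(score_response(ITEM, "She will look on the shelf."))
```

A response earns credit only when it tracks Maya's outdated belief rather than the true location, which is the distinction the task is designed to probe.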

Recognition of Faux Pas

The recognition of faux pas test evaluates an individual’s ability to recognize social blunders, that is, statements that inadvertently offend others. GPT-3.5 and LLaMA-2 have shown a bias towards affirming inappropriate statements, which indicates that they do not differentiate well between cases on the basis of implied knowledge. This highlights one of the challenges LLMs face in handling social uncertainty compared to humans.

Irony Comprehension

Irony comprehension is an important aspect of theory of mind, as it involves understanding sarcasm and other forms of figurative language in which the intended meaning differs from the literal one. GPT-4 has shown strengths here, performing at or above human levels on this test. This suggests that the model can recognize and interpret the subtle linguistic cues associated with irony.

Differences Between LLMs and Human Intelligence

While GPT-4 and LLaMA-2 perform well on certain theory of mind tasks, their behavior differs from that of humans in notable ways. These differences can be attributed to several factors, including training methodologies and the disembodied nature of decision-making in LLMs.

Training Methodologies

GPT models, including GPT-4 and GPT-3.5, are trained with mitigation measures intended to reduce hallucinations and improve factual accuracy. The resulting conservative epistemic policy can make them reluctant to commit to an answer in uncertain scenarios such as the faux pas test. That caution supports factual accuracy, but it may hinder their ability to navigate social complexity and handle social uncertainty the way humans do.
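One practical consequence is that a scorer should separate a refusal to commit from a wrong answer. The Python sketch below shows one way to do that with a three-way label. The hedging patterns and the `classify_response` helper are hypothetical and chosen only for illustration; a real analysis would need a validated coding scheme.

```python
import re

# Hypothetical hedging phrases; not taken from any published rubric.
HEDGE_PATTERNS = [
    r"\bnot (?:enough|sufficient) information\b",
    r"\b(?:cannot|can't|can not) (?:be sure|tell|know|determine)\b",
    r"\b(?:it is|it's) (?:unclear|impossible to say)\b",
    r"\bnot (?:clear|certain)\b",
]


def classify_response(response: str, correct_answer: str) -> str:
    """Label a response as 'non-committal', 'correct', or 'incorrect'.

    Hedging is checked first, so an answer that refuses to commit is
    never counted as simply wrong.
    """
    text = response.lower()
    if any(re.search(pattern, text) for pattern in HEDGE_PATTERNS):
        return "non-committal"
    # Match the expected answer as a whole word to avoid hits inside
    # longer words (for example "no" inside "know").
    answer = re.escape(correct_answer.lower())
    if re.search(rf"\b{answer}\b", text):
        return "correct"
    return "incorrect"


if __name__ == "__main__":
    for reply in [
        "No, she did not know.",
        "There is not enough information to tell.",
        "Yes, she knew.",
    ]:
        print(reply, "->", classify_response(reply, "no"))
```

Counting the non-committal label separately makes it possible to see whether a low score reflects failed inference or simply a cautious answering policy.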

Disembodied Decision-Making Processes

LLMs lack the embodied decision-making processes that humans possess. Human intelligence does not rest on language processing alone; it also draws on sensorimotor experience and perception of the physical world. This embodied cognition gives humans a deeper understanding of social contexts and lets them adapt their behavior accordingly. LLMs, by contrast, rely solely on language inputs and lack the richness of embodied experience, which can limit their theory of mind abilities.

The Importance of Systematic Testing

Evaluating LLMs’ theory of mind abilities requires systematic testing if comparisons with human cognition are to be meaningful. Researchers have adopted well-established theory of mind tests, each scored with its own protocol. Because each test targets a different aspect of theory of mind, the results together show where LLMs are strong and where they are limited relative to humans.
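As a rough illustration of what systematic comparison involves, the Python sketch below summarizes per-test scores across repeated runs and computes the gap between a model and a human baseline. The function names, the test labels, and every number in the usage example are placeholders invented for this sketch; they are not results from any study.

```python
from statistics import mean, median


def summarize(scores_by_test: dict[str, list[float]]) -> dict[str, dict[str, float]]:
    """Compute the mean and median score for each test, skipping empty tests."""
    return {
        test: {"mean": mean(scores), "median": median(scores)}
        for test, scores in scores_by_test.items()
        if scores
    }


def mean_gap(
    model_scores: dict[str, list[float]],
    human_scores: dict[str, list[float]],
) -> dict[str, float]:
    """Return model mean minus human mean for every test both groups completed."""
    model_summary = summarize(model_scores)
    human_summary = summarize(human_scores)
    return {
        test: model_summary[test]["mean"] - human_summary[test]["mean"]
        for test in model_summary
        if test in human_summary
    }


if __name__ == "__main__":
    # Placeholder scores (proportion correct per run or participant); not real data.
    model = {"hinting": [1.0, 0.5, 1.0], "faux_pas": [0.0, 0.5]}
    human = {"hinting": [0.5, 1.0], "faux_pas": [1.0, 1.0]}
    for test, gap in mean_gap(model, human).items():
        print(f"{test}: {gap:+.2f}")
```

A positive gap means the model scored higher on average than the human baseline for that test. A fuller analysis would also report variability across runs and apply an appropriate statistical test rather than comparing means alone.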

Navigating Social Interactions with Human-Like Proficiency

Understanding the theory of mind abilities of LLMs is crucial for their further development and for the goal of human-like proficiency in social interaction. While LLMs have shown promising results on certain theory of mind tasks, they still fall short when a situation is socially uncertain. Future research and advances in training methodologies may help bridge this gap and enable LLMs to better understand and navigate the complexities of human social interactions.

In conclusion, theory of mind is a fundamental aspect of human intelligence that allows us to understand and attribute mental states to ourselves and others. LLMs like GPT-4 and LLaMA-2 have made significant progress on theory of mind tasks, but they still have limitations compared to humans. Evaluating those abilities requires systematic testing, and understanding where LLMs and humans differ is vital for further development. Continued research in this field will contribute to AI systems that can navigate social interactions with human-like proficiency.

FAQs

What is the theory of mind in artificial intelligence?

In AI, theory of mind refers to a system’s ability to understand and model the thoughts, intentions, and emotions of others, whether humans or other AI systems. This skill helps AI interact more naturally and intuitively with people, making conversations and interactions smoother and more human-like.

How does AI compare to the human brain?

Artificial Intelligence (AI) and the human brain differ significantly in their processing and capabilities. AI excels in tasks requiring speed, accuracy, and handling large datasets without fatigue, leveraging algorithms and computational power. The human brain, on the other hand, is unparalleled in creativity, emotional understanding, and complex decision-making, relying on consciousness, intuition, and experiential learning. AI lacks the innate ability to understand context and emotions fully, making it an advanced tool rather than a replacement for human intelligence.

What does theory of mind mean for generative AI?

Theory of mind could change human-computer interaction by enabling AI systems to understand human intentions, beliefs, and emotions. Such systems would offer more intuitive and responsive interfaces, going beyond simple exchanges to better align with how humans naturally communicate.

Check out the Paper. All credit for this research goes to the researchers of this project.
