Zyphra Open-Sources BlackMamba: A Novel Architecture that Combines the Mamba SSM with MoE to Obtain the Benefits of Both

As Natural Language Processing (NLP) advances, researchers keep looking for architectures that overcome the limitations of existing models. One recent example comes from the team at Zyphra, who have introduced BlackMamba, an architecture that combines the Mamba State Space Model (SSM) with a Mixture-of-Experts (MoE) design. The resulting hybrid aims to draw on the benefits of both approaches, improving both performance and efficiency when processing linguistic data.

The Challenge of Processing Extensive Sequences

Processing long sequences of linguistic data has been a significant challenge in NLP. Traditional transformer models, while highly effective, often struggle with the computational and memory demands of lengthy inputs. The limitation comes from the attention mechanism, whose cost grows quadratically with sequence length: every token is compared with every other token, so doubling the length roughly quadruples the work. Researchers have therefore sought alternatives that avoid this cost and pave the way for more efficient NLP models.
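To make the scaling argument concrete, here is a minimal sketch that counts pairwise attention scores against per-token recurrent updates. The function names and the chosen sequence lengths are illustrative assumptions, not figures from the BlackMamba paper, and the counts ignore constant factors such as hidden size and number of heads.

```python
def attention_score_count(seq_len: int) -> int:
    """Query-key pairs in full self-attention: one score per (i, j) pair."""
    return seq_len * seq_len


def recurrent_step_count(seq_len: int) -> int:
    """State updates in a recurrent scan: one update per token."""
    return seq_len


if __name__ == "__main__":
    # Hypothetical sequence lengths, chosen only to show the trend.
    for n in (1_024, 8_192, 65_536):
        pairs = attention_score_count(n)
        steps = recurrent_step_count(n)
        print(f"len={n:>6}  attention pairs={pairs:>13,}  "
              f"recurrent steps={steps:>6,}  ratio={pairs // steps:,}x")
```

The ratio between the two counts equals the sequence length itself, which is why the gap widens as inputs get longer.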

State Space Models (SSMs) and Mixture-of-Experts (MoE)

To overcome the limitations of traditional transformer models, researchers have explored State Space Models (SSMs) and Mixture-of-Experts (MoE) models. SSMs process a sequence with a recurrence whose cost grows linearly with sequence length, while MoE models reduce the compute needed for training and inference by activating only a subset of their parameters for each token. Each approach comes with its own trade-offs. SSMs give up the direct token-to-token comparisons that attention provides, and MoE models often require more memory, because every expert must be stored even though only a few are used per token.
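The linear-cost recurrence behind SSMs can be sketched in a few lines. This is a deliberately simplified, fixed-parameter diagonal state space model written for illustration; it is not Mamba's implementation, which makes its parameters depend on the input and uses a hardware-aware scan. The names `ssm_scan`, `a`, `b`, and `c` are assumptions of this sketch.

```python
from typing import List


def ssm_scan(xs: List[float], a: List[float], b: List[float],
             c: List[float]) -> List[float]:
    """Run a diagonal linear state space recurrence over a scalar sequence.

    h_t = a * h_{t-1} + b * x_t   (elementwise, one entry per state dimension)
    y_t = sum(c * h_t)
    """
    state = [0.0] * len(a)
    ys = []
    for x in xs:  # one constant-cost update per token, so cost is linear in len(xs)
        state = [a_i * h_i + b_i * x for a_i, h_i, b_i in zip(a, state, b)]
        ys.append(sum(c_i * h_i for c_i, h_i in zip(c, state)))
    return ys


if __name__ == "__main__":
    # An impulse followed by zeros: the output decays as the state forgets it.
    print(ssm_scan([1.0, 0.0, 0.0, 0.0], a=[0.5, 0.9], b=[1.0, 1.0], c=[1.0, 1.0]))
```

The state has a fixed size regardless of how many tokens have been seen, which is what keeps memory use flat during generation.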

Introducing BlackMamba: A Fusion of Mamba SSM and MoE

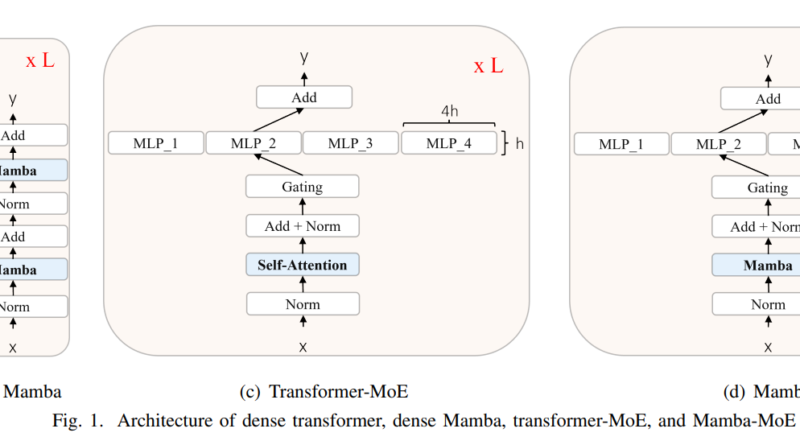

In this context, the introduction of BlackMamba by Zyphra researchers represents a significant advancement in NLP architecture. BlackMamba combines the Mamba SSM and MoE models in a novel way, allowing each approach to complement the other’s strengths. The architecture of BlackMamba stands out for its innovative combination of attention-free Mamba blocks and routed MLPs (Multi-Layer Perceptrons), resulting in a streamlined and efficient model for various language tasks.

Achieving Efficiency and Effectiveness

BlackMamba balances efficiency and effectiveness by alternating between Mamba blocks and MoE blocks. Mamba blocks replace traditional attention with a state space recurrence that mixes information along the sequence, while MoE blocks route each input to a subset of expert MLPs instead of running all of them. This combination allows BlackMamba to process long sequences more efficiently than traditional transformer models without compromising on performance.
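The alternating layout can be illustrated with a toy forward pass. Everything below is a hedged sketch: `mixer_block` is a stand-in running-state mixer rather than a real Mamba block, top-1 routing and the residual additions are assumptions chosen for simplicity, and the function names are hypothetical rather than taken from Zyphra's code.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_top1(token: Vector, router_weights: List[Vector]) -> int:
    """Return the index of the expert with the highest router probability."""
    logits = [sum(w * t for w, t in zip(row, token)) for row in router_weights]
    probs = softmax(logits)
    return max(range(len(probs)), key=probs.__getitem__)


def moe_block(tokens: List[Vector], router_weights: List[Vector],
              experts: List[Expert]) -> List[Vector]:
    """Run each token through only its selected expert, plus a residual."""
    out = []
    for token in tokens:
        expert = experts[route_top1(token, router_weights)]
        out.append([t + e for t, e in zip(token, expert(token))])
    return out


def mixer_block(tokens: List[Vector], decay: float = 0.5) -> List[Vector]:
    """Stand-in for a Mamba block: a causal running state, no attention."""
    state = [0.0] * len(tokens[0])
    out = []
    for token in tokens:
        state = [decay * s + t for s, t in zip(state, token)]
        out.append([t + s for t, s in zip(token, state)])
    return out


def hybrid_forward(tokens: List[Vector], router_weights: List[Vector],
                   experts: List[Expert], depth: int = 2) -> List[Vector]:
    """Alternate sequence mixing and routed MLPs for `depth` layer pairs."""
    for _ in range(depth):
        tokens = mixer_block(tokens)
        tokens = moe_block(tokens, router_weights, experts)
    return tokens


if __name__ == "__main__":
    experts = [lambda v: [2.0 * x for x in v], lambda v: [-x for x in v]]
    router = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical router weights
    print(hybrid_forward([[1.0, 0.0], [0.0, 1.0]], router, experts, depth=1))
```

Only one expert runs per token in `moe_block`, so adding more experts increases stored weights without increasing the work done for any single token.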

Superior Performance Metrics

BlackMamba has been evaluated against current benchmarks. The reported results show that it handles long sequences well and needs fewer training FLOPs than dense transformer models to reach comparable or even superior performance. BlackMamba is also reported to outperform both SSM and MoE models on various NLP tasks, showcasing its potential as a scalable and cost-effective solution for processing and understanding human language.
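The FLOP savings attributed to MoE layers follow from sparse activation: the weights a token actually touches grow with the number of experts selected, while the weights held in memory grow with the total number of experts. The sketch below shows that arithmetic. The layer sizes are hypothetical, router weights and biases are ignored, and none of the numbers come from the BlackMamba paper.

```python
from typing import Dict


def mlp_params(d_model: int, d_hidden: int) -> int:
    """Weights in a two-layer MLP (up and down projections, biases ignored)."""
    return 2 * d_model * d_hidden


def moe_layer_stats(d_model: int, d_hidden: int, num_experts: int,
                    top_k: int = 1) -> Dict[str, int]:
    """Compare parameters stored in memory with parameters used per token."""
    per_expert = mlp_params(d_model, d_hidden)
    return {
        "stored_params": num_experts * per_expert,  # all experts stay in memory
        "active_params": top_k * per_expert,        # only routed experts run
    }


if __name__ == "__main__":
    # Hypothetical sizes for illustration only.
    stats = moe_layer_stats(d_model=1024, d_hidden=4096, num_experts=8, top_k=1)
    print(stats)
    print("stored / active =", stats["stored_params"] // stats["active_params"])
```

This is the trade-off noted earlier in the article: compute per token stays close to that of a single MLP, but memory requirements rise with the expert count.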

Open-Source Release and Collaboration

The release of BlackMamba as an open-source model demonstrates Zyphra’s commitment to transparency and collaboration in scientific research. By making the model and its training details publicly available, Zyphra encourages further exploration, experimentation, and innovation within the AI community. This open-source approach facilitates widespread adoption and adaptation of BlackMamba, setting a precedent for future developments in the field of NLP.

Conclusion

The introduction of BlackMamba by Zyphra researchers marks a significant milestone in the evolution of language models. By combining the Mamba SSM and MoE models, BlackMamba offers improved efficiency and performance in processing extensive sequences of linguistic data. Its innovative architecture and superior performance metrics make it a promising candidate for various NLP tasks. Furthermore, the open-source release of BlackMamba fosters collaboration and innovation, driving advancements in the field of AI and NLP.

BlackMamba is a testament to the ongoing progress in NLP and a stepping stone toward even more powerful and efficient language models. As researchers continue to explore new architectures and techniques, the future of NLP looks increasingly promising.

Check out the Paper. All credit for this research goes to the researchers of this project.
