Language Bias, Be Gone! CroissantLLM’s Balanced Bilingual Approach is Here to Stay

In the realm of Natural Language Processing (NLP), language bias has long hindered the development and deployment of inclusive language models. Many existing models are trained predominantly on English data and, as a result, perform noticeably worse in other languages. CroissantLLM, an English-French bilingual language model trained on a balanced mix of the two languages, aims to narrow this gap and foster a more inclusive NLP landscape.

Recognizing the Limitations

Traditional language models have largely focused on improving English performance, treating the understanding and generation of other languages as secondary. This English-centric bias limits their usefulness in other linguistic contexts, where performance in non-English languages often remains weak.

The Birth of CroissantLLM

CroissantLLM is the result of a collaboration between academic and industry researchers, including teams from Illuin Technology, Unbabel, and INESC-ID Lisboa. Their motivation is to move beyond an English-centric approach and build a truly bilingual model that understands and generates English and French with comparable proficiency.

A Truly Balanced Approach

CroissantLLM’s methodology stands out for its balanced training on English and French data. Unlike previous models that focused primarily on English, CroissantLLM uses a 1:1 English-to-French ratio in its pre-training data. This balance is intended to make the model comparably proficient in both languages by giving French the same weight as English during training.
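To make the 1:1 ratio concrete, here is a minimal Python sketch of one way to interleave two corpora so that their cumulative token counts stay balanced. It is an illustration under stated assumptions, not CroissantLLM’s data pipeline: the function name `balanced_mix`, the greedy "draw from whichever language is behind" rule, cycling through the documents, and the use of whitespace splitting as a stand-in for real tokenization are all hypothetical.

```python
from itertools import cycle


def balanced_mix(english, french, target_fr_share=0.5, total_tokens=10_000):
    """Hypothetical sketch: interleave English and French documents so that
    the cumulative token count of each language tracks a target share
    (target_fr_share=0.5 corresponds to a 1:1 English-to-French ratio).

    Token counts use whitespace splitting as a stand-in for a real tokenizer,
    and documents are cycled (repeated) if a corpus runs out.
    """
    if not english or not french:
        raise ValueError("both corpora must be non-empty")
    streams = {"en": cycle(english), "fr": cycle(french)}
    counts = {"en": 0, "fr": 0}
    mixed = []
    while counts["en"] + counts["fr"] < total_tokens:
        total = counts["en"] + counts["fr"]
        # Draw from French while it is at or below its target share,
        # otherwise draw from English.
        lang = "fr" if counts["fr"] <= target_fr_share * total else "en"
        doc = next(streams[lang])
        # max(1, ...) guarantees progress even for an empty document.
        counts[lang] += max(1, len(doc.split()))
        mixed.append((lang, doc))
    return mixed, counts


# Usage with two tiny hypothetical corpora.
en_docs = ["the cat sat on the mat", "language models need data"]
fr_docs = ["le chat est assis sur le tapis", "les modèles de langue ont besoin de données"]
mixed, counts = balanced_mix(en_docs, fr_docs)
share = counts["fr"] / (counts["en"] + counts["fr"])
print(round(share, 2))  # → 0.5
```

With the default `target_fr_share=0.5`, each draw goes to the language that is currently behind, so the gap between the two token counts never exceeds the length of a single document and the French share converges to one half as the total grows. A real pipeline would count tokens with the model’s actual tokenizer and would typically sample from much larger corpora rather than cycle through a fixed list.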

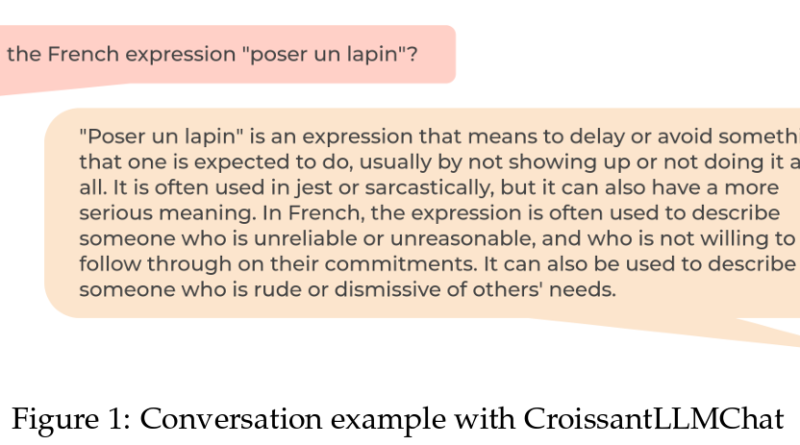

Custom Tokenizer and Bilingual Fine-Tuning

To strengthen its bilingual capabilities further, CroissantLLM uses a custom tokenizer and bilingual fine-tuning datasets. A tokenizer built with both languages in mind avoids breaking French words into many small fragments, as English-centric tokenizers tend to do, and the bilingual fine-tuning data exposes the model to instructions and responses in both English and French.
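One common way to see why the tokenizer matters for French is to measure its fertility, the average number of tokens produced per word. The sketch below is a toy illustration only, assuming a greedy longest-match segmenter and two tiny hand-written vocabularies; `greedy_tokenize`, `fertility`, and both vocabularies are hypothetical and are not CroissantLLM’s tokenizer, which, like any real subword tokenizer, is learned from data.

```python
def greedy_tokenize(word: str, vocab: set[str]) -> list[str]:
    """Split a word by repeatedly taking the longest vocabulary entry that
    matches at the current position; unmatched characters become
    single-character tokens."""
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest candidate first, shrinking until a match is found
        # or only a single character remains.
        for j in range(len(word), i, -1):
            piece = word[i:j]
            if piece in vocab or j == i + 1:
                tokens.append(piece)
                i = j
                break
    return tokens


def fertility(text: str, vocab: set[str]) -> float:
    """Average number of tokens per whitespace-separated word."""
    words = text.split()
    if not words:
        raise ValueError("text must contain at least one word")
    return sum(len(greedy_tokenize(w, vocab)) for w in words) / len(words)


# Hypothetical toy vocabularies: one English-only, one covering both languages.
english_vocab = {"the", "cat", "sat", "on", "mat"}
bilingual_vocab = english_vocab | {"le", "chat", "est", "assis", "sur", "tapis"}

french = "le chat est assis sur le tapis"
print(round(fertility(french, english_vocab), 2))  # 24 tokens / 7 words → 3.43
print(fertility(french, bilingual_vocab))          # → 1.0
```

With the English-only vocabulary, every French word falls apart into single characters, while the bilingual vocabulary keeps each word whole. Lower fertility means more text fits into a fixed context window and fewer tokens are spent per sentence, which is one reason a tokenizer that covers French well matters for a bilingual model.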

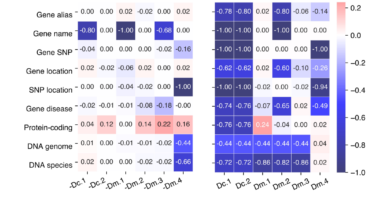

Performance Metrics and Benchmarks

The authors report that CroissantLLM performs strongly for a model of its size in both English and French, comparing favorably with existing models at a similar scale. To evaluate French capabilities specifically, they introduce a new benchmark, FrenchBench, consisting of classification and generation tasks that cover different aspects of model performance in French.

Implications for NLP Applications

CroissantLLM’s development carries significant implications for NLP. By tackling language bias directly, it points toward language models that serve a wider range of languages and cultures, and it adds to our understanding of how balanced multilingual training affects model performance.

Openness and Collaboration

One of CroissantLLM’s key strengths is its transparency. The research team has released codebases and dozens of checkpoints across model sizes, training data distributions, and training steps, enabling further research on large language models. This openness invites the NLP community to build on CroissantLLM’s foundations and advance inclusive language technologies.

A New Era in Bilingual Language Model Training

CroissantLLM points toward a new approach to bilingual language model training, one grounded in diversity and inclusivity. Its balanced English and French training, together with the release of its training dataset and performance benchmarks, shows the potential of bilingual models to bridge linguistic divides. The insights gained from its development and evaluation are likely to inform future work in multilingual NLP and move the field toward more globally accessible and equitable language technologies.

Conclusion

Language bias has long held back the development of inclusive language models. With CroissantLLM and its balanced bilingual approach, the field takes a significant step toward a more inclusive and equitable NLP landscape. By confronting the limitations of English-centric models and emphasizing multilingualism, CroissantLLM sets a strong reference point for bilingual language processing. Its reported performance and its commitment to openness should encourage further research toward globally accessible and equitable language technologies. Language bias, be gone!

Check out the Paper. All credit for this research goes to the researchers of this project.