Charting New Frontiers: Stanford University’s Pioneering Study on Geographic Bias in AI

Artificial Intelligence (AI) has become an integral part of our lives, revolutionizing various sectors such as healthcare, education, and finance. However, concerns regarding bias in AI systems have gained significant attention, as these models inherit biases from their training data. Stanford University’s recent pioneering study on geographic bias in AI highlights the importance of addressing this issue to ensure fairness, equity, and inclusivity in AI applications.

The Significance of Geographic Bias in AI

While efforts have been made to tackle biases related to gender, race, and religion in AI systems, geographic bias has often been overlooked. Geographic bias refers to systematic errors in predictions about specific locations, which misrepresent those places along cultural, socioeconomic, and political lines. This form of bias can perpetuate and amplify societal inequalities, reinforcing existing disparities.

Geographic bias in AI is a pressing concern because of its potential impact on decision-making. AI systems are increasingly used to inform critical decisions in areas such as hiring, loan approvals, and healthcare diagnoses. If these systems exhibit geographic bias, they may inadvertently perpetuate discrimination and inequality, with adverse consequences for marginalized communities.

Stanford University’s Novel Approach

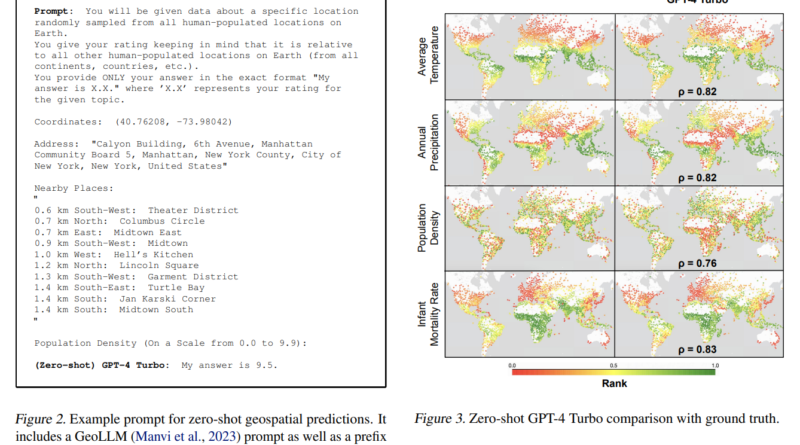

Stanford University researchers have taken a step toward addressing geographic bias in AI with a new study. They propose a methodology that quantifies geographic bias in large language models (LLMs) by introducing a bias score. The score combines mean absolute deviation and Spearman’s rank correlation coefficient, giving a single metric for the presence and extent of geographic bias.
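
To make the two ingredients of such a score concrete, here is a minimal sketch rather than the study's implementation. It assumes a model has rated a handful of regions on some topic and that a socioeconomic indicator is available for the same regions. The data, the function names, and in particular the choice to multiply the two quantities into one number are illustrative assumptions; this article does not give the paper's exact formula.

```python
from statistics import mean


def mean_absolute_deviation(values):
    """Average distance of each value from the mean of all values."""
    center = mean(values)
    return mean(abs(v - center) for v in values)


def average_ranks(values):
    """Rank values from 1 to n; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; returns 0.0 if either input has no variation."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / (var_x * var_y) ** 0.5


def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def illustrative_bias_score(ratings, indicator):
    # ASSUMPTION: multiplying spread by |rho| is an illustrative combination,
    # not the formula from the study.
    spread = mean_absolute_deviation(ratings)
    return spread * abs(spearman_rho(ratings, indicator))


# Hypothetical model ratings for five regions and a made-up indicator.
ratings = [8.0, 7.0, 5.0, 3.0, 2.0]
indicator = [0.99, 0.98, 0.96, 0.93, 0.90]

print(mean_absolute_deviation(ratings))
print(spearman_rho(ratings, indicator))
print(illustrative_bias_score(ratings, indicator))
```

In this sketch the score is zero when the model rates every region identically or when its ratings are unrelated in rank to the indicator, and it grows when the ratings both vary widely and track the indicator's ordering.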

To evaluate the geographic biases in LLMs, the researchers employed carefully designed prompts aligned with ground truth data. This approach allowed them to evaluate the LLMs’ ability to make accurate predictions regarding geospatial information. The study revealed significant biases, particularly against regions with lower socioeconomic conditions, in predictions related to subjective topics such as attractiveness and morality.

Unveiling Biases and Socioeconomic Indicators

The Stanford University study further highlighted significant monotonic correlations between the predictions of different LLMs and socioeconomic indicators, such as infant survival rates. These correlations indicate a predisposition within the models to favor more affluent regions, thereby marginalizing areas with lower socioeconomic conditions. Such biases raise concerns about the fairness, accuracy, and ethical implications of deploying AI technologies without adequate safeguards against biases.
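
A monotonic correlation only requires that two quantities rise and fall together in rank; the relationship does not have to be linear. The short sketch below illustrates the distinction with made-up numbers. The variable names and values are hypothetical, and the simplified Spearman formula used here is valid only when there are no tied values.

```python
def ranks_no_ties(values):
    """Rank values from 1 to n, assuming all values are distinct."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0] * len(values)
    for position, index in enumerate(order, start=1):
        ranks[index] = position
    return ranks


def spearman_no_ties(x, y):
    """Spearman's rho via 1 - 6 * sum(d^2) / (n * (n^2 - 1)); no ties allowed."""
    n = len(x)
    d_squared = sum(
        (rx - ry) ** 2 for rx, ry in zip(ranks_no_ties(x), ranks_no_ties(y))
    )
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


def pearson(x, y):
    """Pearson correlation, which measures linear association."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical indicator values and model predictions that grow with the
# indicator, but along a curve rather than a straight line.
indicator = [1.0, 2.0, 3.0, 4.0, 5.0]
predictions = [v ** 3 for v in indicator]

rho = spearman_no_ties(indicator, predictions)  # 1.0: the rankings agree exactly
r = pearson(indicator, predictions)             # below 1: not a straight line

print(rho, r)
```

A rank-based measure is a natural fit when the question is whether a model consistently places better-off regions above worse-off ones, regardless of the scale of its ratings.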

The Call for Action

Stanford University’s pioneering study on geographic bias in AI serves as a crucial call to action for the AI community. It emphasizes the need to incorporate geographic equity into the development and evaluation of AI models. By uncovering an overlooked aspect of AI fairness, this research underscores the importance of identifying and mitigating all forms of bias, including geographic disparities.

Ensuring that AI technologies benefit humanity equitably requires a commitment to building intelligent and fair models. This involves both technological advancements and collective ethical responsibility. By harnessing AI in ways that respect and uplift all global communities, we can bridge divides and create a more inclusive future.

Future Implications and Research

Stanford University’s study on geographic bias in AI not only advances our understanding of AI fairness but also sets a precedent for future research and development efforts. It highlights the complexities inherent in building technologies that are truly beneficial for all, emphasizing the need for a more inclusive approach to AI.

Moving forward, it is essential to continue exploring and addressing various dimensions of bias in AI systems. This includes not only geographic bias but also other forms of bias related to gender, race, religion, and more. By fostering interdisciplinary collaborations and promoting transparency in AI development, we can pave the way for more equitable and unbiased AI technologies.

Conclusion

Stanford University’s study on geographic bias in AI sheds light on an often overlooked aspect of AI fairness. By quantifying and examining biases in large language models, the researchers have highlighted the need to mitigate geographic disparities in AI systems. This research serves as a reminder of the importance of building AI technologies that are not only intelligent but also fair, equitable, and inclusive.

As we chart new frontiers in AI, it is crucial to ensure that these technologies benefit humanity as a whole. By addressing biases in AI systems, we can harness the true potential of AI to create a better and more inclusive future for everyone.

Check out the Paper. All credit for this research goes to the researchers of this project.