How Google DeepMind’s AI Harnesses the Power of Chain-of-Thought Decoding to Bypass Traditional Limits

Researchers continue to push the reasoning capabilities of large language models (LLMs). Until now, getting an LLM to reason step by step has typically required carefully written prompts, such as few-shot worked examples or explicit instructions to think step by step. Researchers at Google DeepMind have introduced a method called Chain-of-Thought (CoT) decoding, which draws on the inherent reasoning capabilities of LLMs by changing only the decoding procedure, without the need for explicit prompting. This article explores how CoT decoding works and how it sidesteps the limits of traditional prompting.

The Limitations of Traditional Prompting Techniques

Prompting techniques have long been the bedrock of applying LLMs to reasoning tasks. These techniques supply the model with worked examples or specific instructions to follow. While effective, this approach has its limitations. It requires manual intervention and intricate prompt engineering, which can be time-consuming, and because the prompt shapes how the model reasons, it is hard to tell what the model can do on its own. This led the researchers at Google DeepMind to explore alternative methods that tap into the latent reasoning capabilities of LLMs.

Introducing Chain-of-Thought (CoT) Decoding

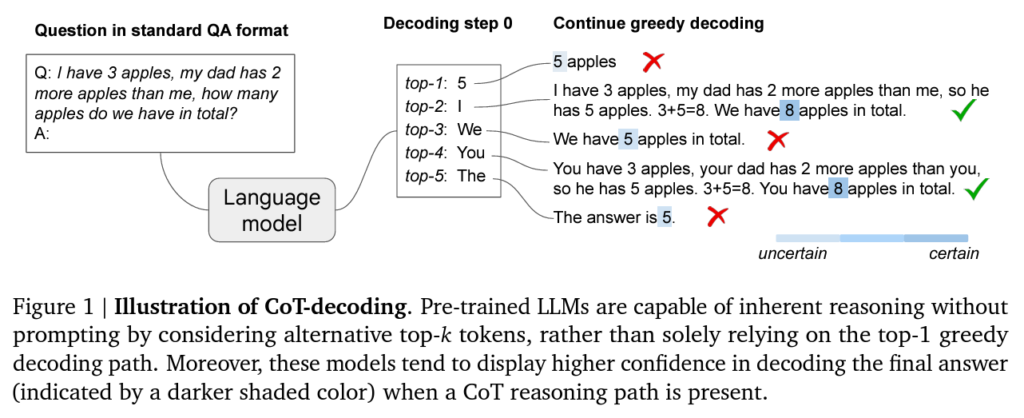

CoT decoding rests on the observation that pre-trained LLMs already contain latent reasoning paths that standard greedy decoding does not surface. Unlike traditional prompting techniques, CoT decoding does not rely on external prompts to elicit reasoning. Instead, it explores alternative paths during the decoding process, and some of those paths turn out to be coherent, logical chains of thought akin to a human’s problem-solving process.

Unveiling Hidden Reasoning Sequences

CoT decoding generates reasoning paths by inspecting the alternative top-k tokens instead of committing to the single most likely token. In the paper, this branching happens at the first decoding step, and each branch is then continued with greedy decoding. Exploring these alternative paths reveals reasoning sequences that the default greedy path hides, which often lead to a correct conclusion where the greedy path answers directly and gets it wrong. Because the change is confined to decoding, the approach reduces the need for manual prompt engineering.
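The branching step can be illustrated with a toy example. The following is a minimal sketch, not the authors' implementation: it assumes the branching happens at the first decoding step and that every branch is continued greedily. The lookup table `TOY_TABLE`, the function names, and all probabilities are hypothetical and exist only to make the example runnable.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in for an LLM: maps a token prefix to next-token probabilities.
NextTokenFn = Callable[[Tuple[str, ...]], Dict[str, float]]

EOS = "<eos>"

# Toy distributions (illustrative values only, not taken from the paper).
TOY_TABLE: Dict[Tuple[str, ...], Dict[str, float]] = {
    (): {"5": 0.6, "3": 0.4},
    ("5",): {EOS: 1.0},
    ("3",): {"+": 0.9, EOS: 0.1},
    ("3", "+"): {"5": 1.0},
    ("3", "+", "5"): {"=": 1.0},
    ("3", "+", "5", "="): {"8": 0.95, "5": 0.05},
    ("3", "+", "5", "=", "8"): {EOS: 1.0},
}


def toy_model(prefix: Tuple[str, ...]) -> Dict[str, float]:
    return TOY_TABLE[prefix]


def top_k_tokens(probs: Dict[str, float], k: int) -> List[str]:
    """Return the k most probable tokens, most probable first."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    return [token for token, _ in ranked[:k]]


def greedy_continue(model: NextTokenFn, prefix: Tuple[str, ...], max_len: int = 16) -> List[str]:
    """Extend a prefix by always taking the single most probable next token."""
    tokens = list(prefix)
    while len(tokens) < max_len:
        next_token = top_k_tokens(model(tuple(tokens)), 1)[0]
        if next_token == EOS:
            break
        tokens.append(next_token)
    return tokens


def branch_on_first_token(model: NextTokenFn, k: int) -> List[List[str]]:
    """Start one path from each of the top-k first tokens, then decode greedily."""
    first_tokens = top_k_tokens(model(()), k)
    return [greedy_continue(model, (token,)) for token in first_tokens]


greedy_path = greedy_continue(toy_model, ())
paths = branch_on_first_token(toy_model, k=2)
print(greedy_path)  # ['5']
print(paths)        # [['5'], ['3', '+', '5', '=', '8']]
```

In this toy setup, greedy decoding answers directly with `5`, while the second-ranked first token opens a path that works through `3 + 5 = 8`. With a real model, `toy_model` would be replaced by a call that returns the next-token distribution for a given prefix.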

CoT Decoding vs. Traditional Decoding Methods

Empirical evidence from the DeepMind study shows that CoT decoding outperforms standard greedy decoding on reasoning tasks. The study also finds that when a decoding path contains a chain of thought, the model tends to be more confident in its final answer. CoT decoding uses this confidence signal to tell reasoning paths apart from direct-answer paths and to choose among the candidates.

For instance, when applied to the PaLM-2 Large model on the Grade-School Math (GSM8K) benchmark, CoT decoding achieved a +26.7% absolute accuracy improvement over greedy decoding. This leap in performance highlights the potential of leveraging the reasoning paths already present within LLMs to solve complex problems more effectively.
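The confidence signal mentioned above can be sketched as follows. This is an illustration under a stated assumption: confidence is scored as the gap between the top two token probabilities, averaged over the tokens of the final answer. The function names, the candidate paths, and the probabilities are hypothetical.

```python
from typing import Dict, List


def top2_margin(probs: Dict[str, float]) -> float:
    """Gap between the most probable and second most probable token."""
    ranked = sorted(probs.values(), reverse=True)
    second = ranked[1] if len(ranked) > 1 else 0.0
    return ranked[0] - second


def answer_confidence(answer_step_probs: List[Dict[str, float]]) -> float:
    """Average top-2 margin over the decoding steps that emit the answer tokens."""
    margins = [top2_margin(probs) for probs in answer_step_probs]
    return sum(margins) / len(margins)


# Each candidate path maps to the next-token distributions at its answer tokens.
# Values are made up for illustration.
candidates: Dict[str, List[Dict[str, float]]] = {
    "5": [{"5": 0.6, "3": 0.4}],            # direct answer, narrow margin
    "3 + 5 = 8": [{"8": 0.95, "5": 0.05}],  # answer reached after reasoning, wide margin
}

scores = {path: answer_confidence(steps) for path, steps in candidates.items()}
best_path = max(scores, key=scores.get)
print(best_path)  # 3 + 5 = 8
```

Here the path that reasons before answering ends with a much wider margin on its answer token, so it is the one selected. Locating the answer tokens within a real model's output is a separate step that this sketch does not cover.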

The Implications of CoT Decoding

The research conducted by Google DeepMind has far-reaching implications for the evolution of artificial intelligence systems. By demonstrating that LLMs possess intrinsic reasoning capabilities that can be elicited without explicit prompting, CoT decoding paves the way for more autonomous and versatile AI systems. These systems can tackle various reasoning tasks, from solving intricate mathematical problems to navigating the subtleties of natural language reasoning, without the need for labor-intensive prompt engineering.

CoT decoding represents a paradigm shift in enhancing the reasoning abilities of large language models. It challenges the conventional reliance on prompting techniques and offers a glimpse into a future where machines can independently reason and solve complex tasks. This research marks a significant milestone in creating more intelligent and autonomous artificial intelligence systems.

Conclusion

Google DeepMind’s exploration of Chain-of-Thought decoding shows that large language models hold untapped reasoning capabilities that a change in decoding alone can bring out. CoT decoding sidesteps the limitations of traditional prompting techniques and lets LLMs reason more effectively across complex tasks without hand-crafted prompts. That result opens up new possibilities for AI research and development.

As we continue to unlock the potential of AI, the power of Chain-of-Thought decoding stands as a testament to the remarkable capabilities of machines in understanding and solving complex problems. With each advancement, we inch closer to a world where AI systems emulate human reasoning and contribute to solving the most pressing challenges of our time.

Check out the Paper. All credit for this research goes to the researchers of this project.
