NuMind’s Advanced NER Models Set New Standards in Few-Shot Learning and Efficiency

Named Entity Recognition (NER) is a core task in natural language processing, with applications ranging from medical coding and financial analysis to legal document parsing. Traditionally, custom NER models have been built by fine-tuning transformer encoders pre-trained on self-supervised tasks such as masked language modeling (MLM). Recent advances in large language models (LLMs), however, have opened up new ways to approach NER.

NuMind, an AI research company, has recently released three NER models, described as state-of-the-art (SOTA), that outperform similar-sized foundation models in the few-shot regime and compete with much larger LLMs. The aim is strong NER accuracy from models far smaller than a general-purpose LLM.

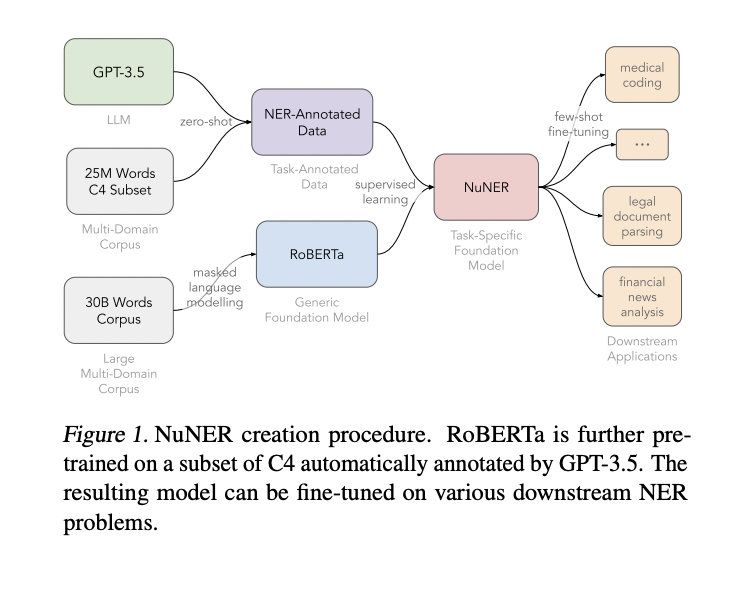

The NuMind Approach

NuMind’s approach to NER uses LLMs to reduce the need for human annotation. Instead of hand-annotating a single-domain dataset for one specific NER task, NuMind uses an LLM to annotate a diverse, multi-domain dataset covering many kinds of NER problems. This annotated dataset is then used to pre-train a smaller foundation model such as BERT. The pre-trained model can then be fine-tuned for any downstream NER task with only a few examples, giving NER models that are both accurate and inexpensive to run.
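To make the annotation step concrete, here is a minimal sketch of how LLM-produced entity mentions could be turned into token-level training labels for a smaller encoder. The annotation format (a list of mention and type pairs), the whitespace tokenization, the BIO tagging scheme, and the function name `to_bio_tags` are all assumptions made for illustration; the article does not describe NuMind’s actual data format or pipeline.

```python
from typing import List, Tuple

# Hypothetical annotation format: (entity mention, entity type) pairs,
# as an LLM might return them for one sentence. Assumed for illustration.
Annotation = Tuple[str, str]


def to_bio_tags(tokens: List[str], annotations: List[Annotation]) -> List[str]:
    """Project entity mentions onto whitespace tokens as BIO tags."""
    tags = ["O"] * len(tokens)
    for mention, label in annotations:
        mention_tokens = mention.split()
        width = len(mention_tokens)
        if width == 0:
            continue
        # Tag every occurrence of the mention that does not overlap an earlier tag.
        for start in range(len(tokens) - width + 1):
            matches = tokens[start:start + width] == mention_tokens
            is_free = all(tag == "O" for tag in tags[start:start + width])
            if matches and is_free:
                tags[start] = f"B-{label}"
                for i in range(start + 1, start + width):
                    tags[i] = f"I-{label}"
    return tags


tokens = "Aspirin was prescribed at Mayo Clinic in Rochester".split()
# Stand-in for the LLM's answer; no model is called in this sketch.
llm_output = [("Aspirin", "drug"), ("Mayo Clinic", "hospital"), ("Rochester", "city")]
tags = to_bio_tags(tokens, llm_output)
for token, tag in zip(tokens, tags):
    print(f"{token}\t{tag}")
```

Because the entity types come from the LLM rather than from a fixed schema, a dataset built this way can mix many domains and label sets, which is the property the pre-training step relies on.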

The Three SOTA NER Models

NuMind’s release includes three SOTA NER models, each aimed at a different use case:

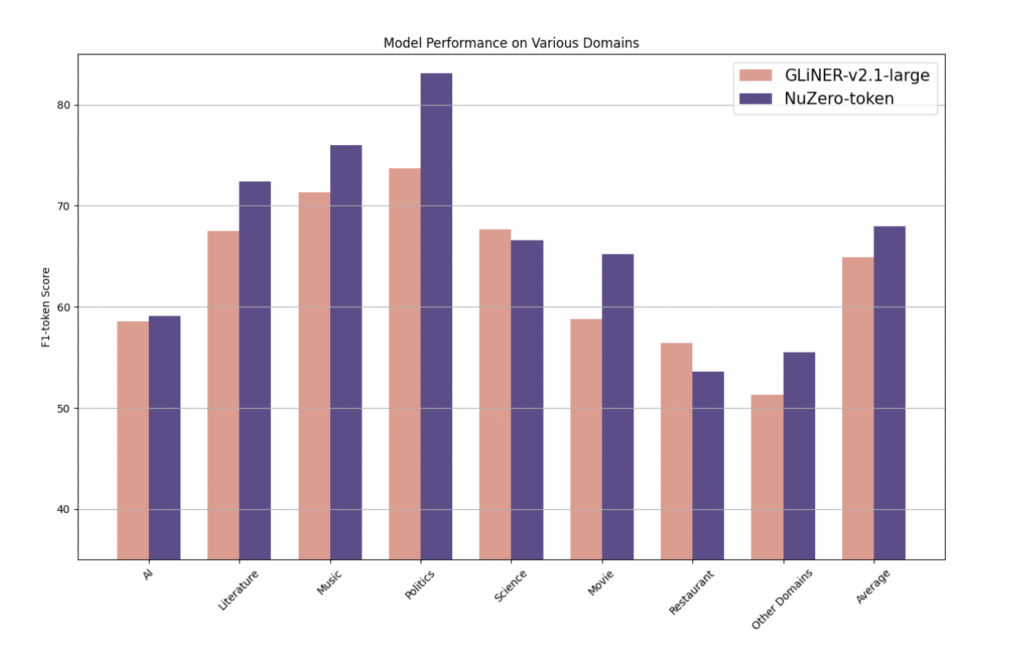

1. NuNER Zero

NuNER Zero is a compact zero-shot NER model, meaning it can be applied to new entity types without task-specific training examples. It shows what pre-training on a diverse, multi-domain dataset annotated by an LLM can achieve. Despite its small size, NuNER Zero outperforms similar-sized foundation models and competes with much larger LLMs on NER accuracy.

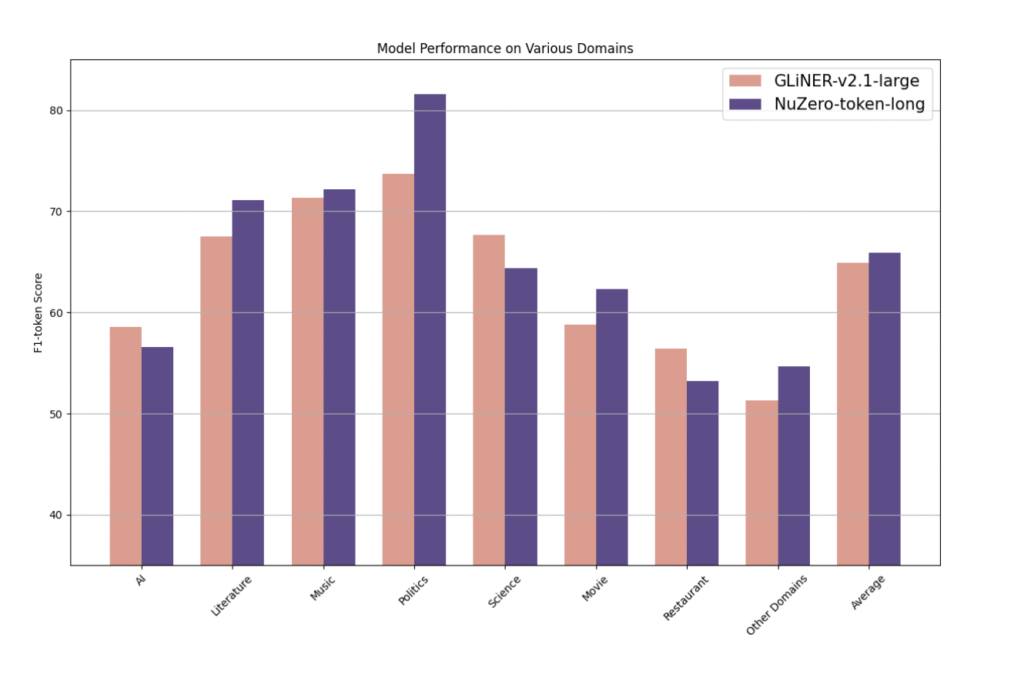

2. NuNER Zero 4k

NuNER Zero 4k is a version of NuNER Zero built for longer contexts. It targets NER tasks where the information needed to identify an entity is spread over a long passage. With a longer context window, the model sees more of the surrounding text at once, which can improve the accuracy of its predictions.
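As a rough illustration of why context length matters, the sketch below shows the workaround a short-context model needs: splitting a long document into overlapping windows, each of which is processed without seeing the rest of the text. The function name `split_into_windows` and the window sizes are hypothetical and chosen only for the example; this is not NuMind’s code.

```python
from typing import List, Tuple


def split_into_windows(
    tokens: List[str], max_len: int, overlap: int
) -> List[Tuple[int, List[str]]]:
    """Split tokens into overlapping windows; returns (start offset, window) pairs."""
    if max_len <= 0 or not 0 <= overlap < max_len:
        raise ValueError("need max_len > 0 and 0 <= overlap < max_len")
    stride = max_len - overlap
    windows = []
    start = 0
    while True:
        windows.append((start, tokens[start:start + max_len]))
        if start + max_len >= len(tokens):
            break  # this window already reaches the end of the document
        start += stride
    return windows


document = [f"t{i}" for i in range(10)]

# A short-context model has to process the document in several pieces.
short_windows = split_into_windows(document, max_len=4, overlap=1)
# A long-context model can take the same document in one pass.
long_windows = split_into_windows(document, max_len=4096, overlap=0)

print(len(short_windows), len(long_windows))
```

Each extra window is a place where an entity can be cut in half or separated from the sentence that disambiguates it, which is the cost a longer context window avoids.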

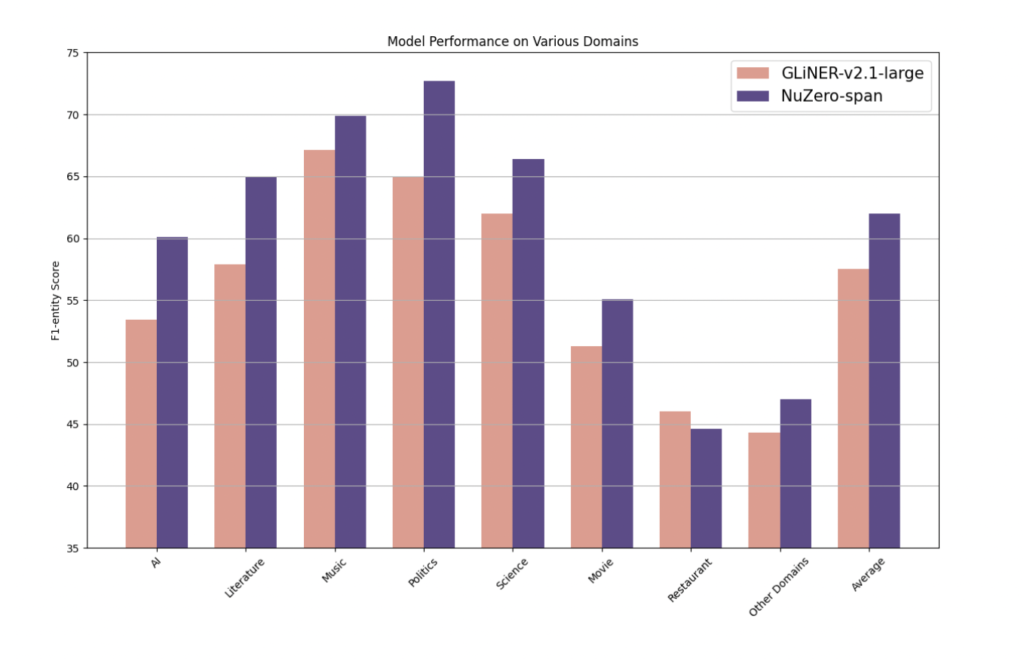

3. NuNER Zero-span

NuNER Zero-span is another variant of NuNER Zero, one that prioritizes span prediction. It is intended for tasks where identifying the exact boundaries of named entities is crucial. NuNER Zero-span achieves slightly better span prediction accuracy, with one trade-off: it is limited to entities under 12 tokens long.
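The length limit is easier to see in code. The sketch below assumes the usual span-based formulation, in which a model scores every candidate span up to a fixed width, and contrasts it with token-level labelling, where an entity is simply a run of labelled tokens. Both helper functions are hypothetical, and whether the 12-token bound is inclusive is an assumption; the article does not describe the models’ internals.

```python
from typing import List, Tuple

# Assumed width cap; the article only says entities must be "under 12 tokens".
MAX_SPAN_WIDTH = 12


def candidate_spans(num_tokens: int, max_width: int = MAX_SPAN_WIDTH) -> List[Tuple[int, int]]:
    """All (start, end) spans, end exclusive, no wider than max_width tokens."""
    return [
        (start, end)
        for start in range(num_tokens)
        for end in range(start + 1, min(start + max_width, num_tokens) + 1)
    ]


def spans_from_token_labels(labels: List[str]) -> List[Tuple[int, int, str]]:
    """Merge runs of identical non-"O" token labels into (start, end, label) spans."""
    spans = []
    start = None
    for i, label in enumerate(labels + ["O"]):  # sentinel closes a trailing run
        if start is not None and label != labels[start]:
            spans.append((start, i, labels[start]))
            start = None
        if start is None and label != "O":
            start = i
    return spans


# A 15-token entity (for example, a long document title) inside a 17-token text.
labels = ["O"] + ["title"] * 15 + ["O"]

token_level = spans_from_token_labels(labels)
span_level = candidate_spans(len(labels))

print(token_level)                # the full 15-token entity is recovered
print((1, 16) in span_level)      # the span-based candidate set cannot contain it
```

The token-level route handles arbitrarily long entities but, in this simple form, cannot separate two adjacent entities of the same type; the span route gives explicit boundaries at the cost of the width cap.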

Advantages of NuMind’s NER Models

The release of NuMind’s three SOTA NER models brings several advantages to the field of NER:

1. Improved Performance

NuMind’s NER models outperform similar-sized foundation models in the few-shot regime. Because an LLM annotates the pre-training data, the models learn from a far broader range of entity types than a single hand-labelled dataset would provide, which translates into higher accuracy on downstream NER tasks across industries.

2. Efficient Few-shot Learning

Traditionally, building a custom NER model has required a significant amount of human annotation. NuMind’s approach shifts most of that work to an LLM, which annotates the diverse pre-training dataset. Only a few labelled examples are then needed for the target task, which reduces the time and cost of manual annotation and makes few-shot learning more practical.

3. Competitive with Larger LLMs

NuMind’s NER models compete with much larger LLMs on NER performance, showing that comparable accuracy can be achieved with smaller, more efficient models. This matters in resource-constrained environments where deploying a massive LLM may not be feasible.

Conclusion

The release of NuMind’s three SOTA NER models marks a significant advancement in the field of named entity recognition. By leveraging the power of LLMs in the annotation process, these models outperform similar-sized foundation models in the few-shot regime and compete with much larger LLMs in terms of accuracy. The innovative approach introduced by NuMind reduces the reliance on human annotations and offers more efficient and accessible few-shot learning.

The three models, NuNER Zero, NuNER Zero 4k, and NuNER Zero-span, bring improved performance and efficiency to NER tasks. They are relevant to any industry that relies on accurate entity recognition, such as healthcare, finance, and legal services.

As NuMind continues this line of research, further progress in NER and in natural language processing more broadly can be expected.
