Breaking Barriers in Language Understanding: How Microsoft AI’s LongRoPE Extends Large Language Models to a 2048k Token Context Window

Language understanding is a fundamental challenge in artificial intelligence (AI). To interact effectively with users, AI systems must be able to comprehend text and generate human-like responses. Large language models (LLMs) have made significant strides in this domain and have transformed the way we interact with AI. However, a key limitation of these models has been the size of their context window, the maximum number of tokens they can take into account at once. Microsoft AI’s LongRoPE addresses this limitation by extending the context window of pre-trained LLMs to 2048k tokens, roughly two million.

The Limitation of Context Window Size in LLMs

LLMs such as OpenAI’s GPT-3 have transformed natural language processing tasks, including language translation, text generation, and sentiment analysis. These models use deep learning to learn patterns and relationships from vast amounts of text. However, each LLM is trained with a fixed context window (2,048 tokens for GPT-3 and 4,096 for LLaMA2), and positions beyond that length are never seen during training, so quality typically degrades sharply on longer inputs. This restricts their ability to understand and respond to long documents or conversations.

Introducing LongRoPE: Extending the Context Window

To overcome this limitation, researchers at Microsoft Research developed LongRoPE, which extends the context window of pre-trained LLMs to 2048k tokens while requiring only up to 1k fine-tuning steps at training lengths within 256k tokens, and while maintaining performance at the original short context window. LongRoPE is built on three key strategies:

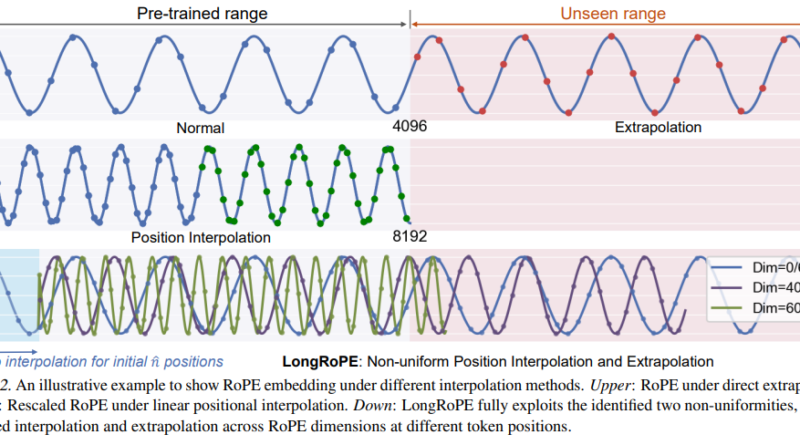

1. Identifying and Leveraging Non-Uniformities in Positional Interpolation

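LongRoPE modifies rotary position embeddings (RoPE) through positional interpolation, which squeezes the positions of a longer sequence into the range the model saw during training. Standard linear interpolation rescales every RoPE dimension by the same factor. LongRoPE instead exploits two non-uniformities: different RoPE dimensions receive different rescale factors, and the first tokens of a sequence keep their original, un-interpolated positions. Searching for these non-uniform settings preserves more positional information than uniform interpolation, which lets the model handle longer texts with less loss in quality.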
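To make the difference between uniform and non-uniform interpolation concrete, here is a minimal NumPy sketch of RoPE rotation angles with optional per-dimension rescale factors and an `n_hat` threshold below which positions are left un-interpolated. It assumes the interleaved pairing of RoPE dimensions from the original RoFormer formulation and the common base of 10000; the function names `rope_angles` and `apply_rope` and the factor values in the usage lines are hypothetical illustrations, not LongRoPE’s released code or searched factors.

```python
import numpy as np

def rope_angles(positions, dim, base=10000.0, rescale=None, n_hat=0):
    """RoPE rotation angles, shape (len(positions), dim // 2).

    rescale: per-frequency rescale factors (length dim // 2); None = no interpolation.
    n_hat:   positions below n_hat keep their original, un-interpolated angles.
    """
    positions = np.asarray(positions, dtype=np.float64)
    # Standard RoPE frequencies: theta_i = base^(-2i / dim)
    inv_freq = base ** (-np.arange(0, dim, 2, dtype=np.float64) / dim)
    original = np.outer(positions, inv_freq)
    if rescale is None:
        return original
    rescale = np.asarray(rescale, dtype=np.float64)
    # Dividing frequency i by its factor slows rotation in that dimension only
    interpolated = np.outer(positions, inv_freq / rescale)
    # Early positions (below n_hat) are not interpolated at all
    keep_original = (positions < n_hat)[:, None]
    return np.where(keep_original, original, interpolated)

def apply_rope(x, angles):
    """Rotate each interleaved pair (x[2i], x[2i+1]) of every row by angles[:, i]."""
    x1, x2 = x[..., 0::2], x[..., 1::2]
    cos, sin = np.cos(angles), np.sin(angles)
    out = np.empty_like(x)
    out[..., 0::2] = x1 * cos - x2 * sin
    out[..., 1::2] = x1 * sin + x2 * cos
    return out

# Usage: an 8-dimensional head (4 frequency pairs) over 10 positions.
positions = np.arange(10)
uniform_pi = rope_angles(positions, 8, rescale=np.full(4, 8.0))   # same factor everywhere
non_uniform = rope_angles(positions, 8, rescale=[1.0, 2.0, 6.0, 8.0], n_hat=4)  # hypothetical factors
q_rotated = apply_rope(np.ones((10, 8)), non_uniform)
```

With uniform interpolation every frequency is slowed by the same ratio; in the non-uniform variant the high-frequency dimension (factor 1.0) keeps its original resolution and positions 0 to 3 are untouched.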

2. Introducing a Progressive Extension Strategy

Fine-tuning directly on 2048k-token texts is impractical, so LongRoPE extends the window in stages. The pre-trained model is first extended to 256k tokens and fine-tuned at that length. A second positional-interpolation search is then run on the fine-tuned model to reach 2048k tokens, without any fine-tuning on texts of that length. Extending incrementally keeps each step within a range the model can absorb while preserving its performance.
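The arithmetic behind the two stages can be sketched as follows. It assumes a LLaMA2-style 4k starting window and reads "k" as 1,024 tokens; the stage list is a simplified, hypothetical summary of the schedule described above rather than the paper’s exact training recipe.

```python
ORIGINAL_CTX = 4 * 1024  # assumed starting window (LLaMA2's 4k)

# Simplified two-stage schedule: (description, target context length in tokens)
STAGES = [
    ("search + fine-tune", 256 * 1024),
    ("second search, no fine-tuning", 2048 * 1024),
]

def stage_ratios(original, stages):
    """Return (name, target, ratio vs. previous stage, ratio vs. original) per stage."""
    rows = []
    prev = original
    for name, target in stages:
        rows.append((name, target, target / prev, target / original))
        prev = target
    return rows

for name, target, step, total in stage_ratios(ORIGINAL_CTX, STAGES):
    print(f"{name:32s} {target:>9,d} tokens  x{step:g} this stage  x{total:g} overall")
```

The first stage covers a 64x jump with fine-tuning; the second stage is only an 8x jump on top of the fine-tuned model, which is within the range the search can handle without fine-tuning, for a 512x extension overall.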

3. Readjusting LongRoPE for Performance Recovery in Shorter Context Windows

Aggressive interpolation for very long windows can hurt quality on short inputs. To recover it, the researchers readjust LongRoPE on 8k length: they search for separate rescale factors suited to short sequences, so the extended model retains its original accuracy within the conventional context window. As a result, a single model can handle both short and very long inputs.
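One plausible way to apply separate short- and long-context factors at inference time is to pick the factor set by input length. The sketch below assumes exactly that switching rule; `FACTOR_TABLE` and `select_rescale_factors` are hypothetical names, and the factor values are placeholders rather than searched results.

```python
import bisect

# Hypothetical table: maximum sequence length -> per-dimension rescale factors.
# Real factors would come from the search; these numbers are placeholders.
FACTOR_TABLE = {
    8 * 1024: [1.0, 1.0, 1.5, 2.0],        # readjusted for short inputs
    2048 * 1024: [1.0, 4.0, 96.0, 512.0],  # long-context factors
}

def select_rescale_factors(seq_len, table=FACTOR_TABLE):
    """Return the factors for the smallest configured length that covers seq_len."""
    lengths = sorted(table)
    idx = bisect.bisect_left(lengths, seq_len)
    if idx == len(lengths):
        raise ValueError(f"sequence length {seq_len} exceeds largest window {lengths[-1]}")
    return table[lengths[idx]]

short_factors = select_rescale_factors(4096)     # short input -> readjusted factors
long_factors = select_rescale_factors(100_000)   # long input -> long-context factors
```

Choosing by length keeps short prompts on factors close to the original RoPE, which is what allows short-context accuracy to be recovered without a second model.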

Optimizing LongRoPE through Evolutionary Search Algorithms

A key challenge in extending the context window of LLMs is cost: long texts are relatively scarce, and fine-tuning on them is resource-intensive. LongRoPE therefore uses an evolutionary search to find effective positional-interpolation settings, namely the per-dimension rescale factors and the number of initial tokens left un-interpolated. These searched settings alone extend the context window by up to eight times without any fine-tuning, and they also provide a better starting point when fine-tuning is used, which reduces the computational burden of training on long texts.
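The search loop can be illustrated with a small, generic evolutionary algorithm over rescale-factor vectors. This is a sketch under stated assumptions: the population sizes, mutation rule, and crossover rule are illustrative choices, factors are kept non-decreasing across dimensions, the `n_hat` threshold is omitted for brevity, and `proxy_fitness` is a hypothetical stand-in for the real objective, which would be the model’s perplexity on long validation texts.

```python
import random

def evolutionary_search(fitness, num_dims, max_factor, population=32, parents=8,
                        generations=40, mutation_scale=0.2, seed=0):
    """Minimise `fitness` over non-decreasing rescale-factor vectors in [1, max_factor]."""
    rng = random.Random(seed)

    def repair(vec):
        # Clip into range, then force factors to be non-decreasing across dimensions
        fixed = [min(max(v, 1.0), max_factor) for v in vec]
        for i in range(1, len(fixed)):
            fixed[i] = max(fixed[i], fixed[i - 1])
        return fixed

    def random_candidate():
        return repair(sorted(rng.uniform(1.0, max_factor) for _ in range(num_dims)))

    def mutate(vec):
        # Multiplicative Gaussian noise on every factor
        return repair([v * (1.0 + rng.gauss(0.0, mutation_scale)) for v in vec])

    def crossover(a, b):
        # Take each dimension's factor from one of the two parents
        return repair([rng.choice(pair) for pair in zip(a, b)])

    # Seed with the uniform (linear interpolation) solution plus random candidates
    pop = [[float(max_factor)] * num_dims] + [random_candidate() for _ in range(population - 1)]
    for _ in range(generations):
        pop.sort(key=fitness)
        elite = pop[:parents]  # keep the best candidates unchanged
        children = []
        while len(children) < population - parents:
            if rng.random() < 0.5:
                children.append(mutate(rng.choice(elite)))
            else:
                a, b = rng.sample(elite, 2)
                children.append(crossover(a, b))
        pop = elite + children
    return min(pop, key=fitness)

# Hypothetical stand-in objective so the example runs without a model.
HIDDEN_TARGET = [1.0, 2.0, 5.0, 8.0]

def proxy_fitness(factors):
    return sum((f - t) ** 2 for f, t in zip(factors, HIDDEN_TARGET))

best = evolutionary_search(proxy_fitness, num_dims=4, max_factor=8.0)
```

Because the elite survives every generation, the best fitness never gets worse than the uniform starting point; with a real model each fitness call is a perplexity evaluation, which is why a search that needs no gradient updates is comparatively cheap.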

Validating LongRoPE’s Performance

LongRoPE has been tested extensively across various LLMs and tasks. Within the conventional short context window it retains the original model’s accuracy, and it keeps perplexity low at extended evaluation lengths of up to 2048k tokens. This opens up new possibilities for LLM applications, such as processing long documents or entire books in a single context.
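Perplexity, the metric referred to above, is the exponential of the average negative log-likelihood the model assigns to each target token; lower is better. A minimal sketch of the standard definition follows, once from per-token log-probabilities and once from raw logits; the function names are illustrative.

```python
import math
import numpy as np

def perplexity(token_log_probs):
    """Perplexity from natural-log probabilities assigned to each target token."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)

def perplexity_from_logits(logits, targets):
    """Perplexity from logits of shape (num_tokens, vocab) and target token ids."""
    logits = np.asarray(logits, dtype=np.float64)
    targets = np.asarray(targets)
    # Numerically stable log-softmax over the vocabulary axis
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    picked = log_probs[np.arange(len(targets)), targets]
    return float(np.exp(-picked.mean()))
```

For example, a model that assigns probability 0.25 to every correct token has perplexity 4, equivalent to guessing uniformly among four options.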

For instance, LongRoPE has been applied to LLaMA2 and Mistral models, which show strong performance on standard benchmarks and on passkey retrieval, a task in which the model must recall a short number hidden inside a long stretch of irrelevant text. These results highlight LongRoPE’s potential for complex text analysis and generation tasks over long inputs.
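A passkey-retrieval test case can be generated and scored along the lines of the sketch below. The prompt wording is modeled loosely on passkey prompts commonly used in long-context evaluation, but the exact template, the function names, and the scoring rule here are assumptions; to reach a target token length you would adjust `num_filler` using the evaluated model’s own tokenizer.

```python
import random
import re

FILLER = ("The grass is green. The sky is blue. The sun is yellow. "
          "Here we go. There and back again. ")

def make_passkey_prompt(num_filler, depth, seed=0):
    """Return (prompt, passkey), with the passkey sentence at fractional depth in [0, 1]."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    rng = random.Random(seed)
    passkey = str(rng.randint(10000, 99999))
    needle = f"The pass key is {passkey}. Remember it. {passkey} is the pass key. "
    chunks = [FILLER] * num_filler
    chunks.insert(round(depth * num_filler), needle)  # hide the passkey in the filler
    prompt = (
        "There is important info hidden inside a lot of irrelevant text. "
        "Find it and memorize it.\n"
        + "".join(chunks)
        + "\nWhat is the pass key? The pass key is"
    )
    return prompt, passkey

def passkey_correct(model_answer, passkey):
    """Count the answer as correct if its first five-digit number equals the passkey."""
    match = re.search(r"\b\d{5}\b", model_answer)
    return match is not None and match.group(0) == passkey

prompt, key = make_passkey_prompt(num_filler=50, depth=0.5, seed=1)
```

Sweeping `depth` from 0 to 1 at each context length checks whether the model can retrieve information from anywhere in the window, not just near the beginning or end.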

The Implications of LongRoPE in Language Understanding

LongRoPE represents a significant step forward for LLMs because it addresses a critical limitation in context window size. By enabling LLMs to process texts of up to 2048k tokens, it paves the way for applications that need to analyze, or generate responses grounded in, much longer documents than before.

Because LongRoPE works on existing pre-trained models, it enhances their capabilities directly and sets a reference point for future work on long-context LLMs. Processing much longer inputs while keeping short-context accuracy could benefit domains such as customer support, content generation, and language translation.

In conclusion, Microsoft AI’s LongRoPE extends the context window of large language models to 2048k tokens through non-uniform positional interpolation, progressive extension, and short-context readjustment. By letting LLMs process and analyze far longer texts, it opens new avenues for AI applications that depend on long-range context.

Check out the Paper. All credit for this research goes to the researchers of this project.
