Introducing Many-Shot ICL

Artificial Intelligence (AI) has transformed numerous industries, from healthcare to finance, by enabling machines to perform complex tasks with high precision and efficiency. One notable recent advance comes from Google DeepMind, whose paper studies many-shot in-context learning (ICL) and shows how it enhances the learning capabilities of large language models, opening up new frontiers for AI applications.

Many-Shot In-Context Learning: Pushing the Boundaries

In-context learning (ICL) in large language models (LLMs) refers to the ability to learn a task from input-output examples supplied in the prompt, without updating the model’s weights. This lets models adapt to new tasks with minimal effort. However, traditional few-shot ICL, which supplies only a handful of examples, struggles with intricate tasks that require a deeper understanding.

DeepMind researchers addressed this limitation by studying many-shot ICL, which builds on few-shot learning but places far more examples in the prompt: hundreds or even thousands instead of a handful. Giving the model many more demonstrations of a task has the potential to unlock new AI applications by improving the models’ learning and decision-making capabilities.
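
Few-shot and many-shot ICL differ mainly in how many examples go into the prompt; the model itself is unchanged. The Python sketch below makes that concrete. It assumes a plain "Input:/Output:" template, and the function name `build_icl_prompt` and the toy addition data are illustrative choices, not taken from the paper.

```python
from typing import Sequence, Tuple

# Hypothetical example type (assumption): an (input_text, output_text) pair.
Example = Tuple[str, str]


def build_icl_prompt(examples: Sequence[Example], query: str, num_shots: int) -> str:
    """Concatenate the first `num_shots` examples, then the unanswered query.

    No weights are updated: the model "learns" only from the examples placed
    in the prompt. Few-shot vs. many-shot is just the size of `num_shots`.
    """
    if num_shots > len(examples):
        raise ValueError("not enough examples for the requested number of shots")
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in examples[:num_shots]]
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


# Toy pool of 1,000 examples (illustrative data only).
pool = [(f"{i} + {i}", str(2 * i)) for i in range(1000)]
few_shot = build_icl_prompt(pool, "21 + 21", num_shots=5)
many_shot = build_icl_prompt(pool, "21 + 21", num_shots=500)
print(few_shot.count("Input:"), many_shot.count("Input:"))  # 6 501
```

Both prompts end with the same unanswered query; the many-shot version simply gives the model 100 times as many demonstrations to infer the task from.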

The Evolution of ICL: From Few-Shot to Many-Shot

Before delving into the specifics of many-shot ICL, it helps to understand how this framework evolved. Previous ICL research focused primarily on few-shot learning, in which models adapt to new tasks from a small set of examples. Small context windows were a key reason: they limited how many examples could fit in a prompt and, in turn, the complexity of the tasks that could be learned this way.

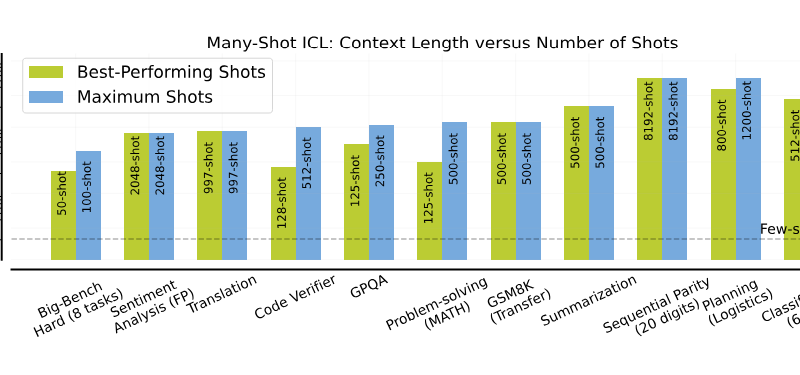

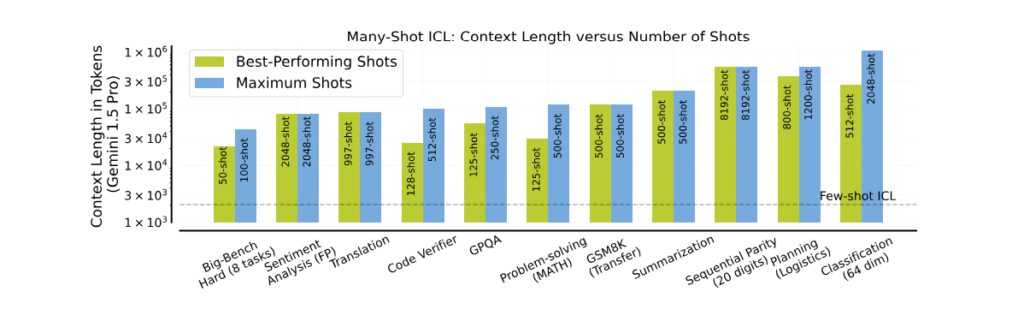

To overcome these limitations, Google DeepMind developed models with much larger context windows, such as Gemini 1.5 Pro, which supports up to 1 million tokens. This expansion made it possible to explore many-shot ICL, since a single prompt can now hold hundreds or thousands of examples.
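
Why a longer context window matters comes down to simple arithmetic: the number of shots that fit is roughly the remaining token budget divided by the tokens per example. The sketch below assumes a crude 4-characters-per-token heuristic and reserves 2,000 tokens for instructions, the query, and the answer; both numbers, and the helper names `approx_tokens` and `max_shots`, are illustrative assumptions rather than figures from the paper.

```python
import math


def approx_tokens(text: str) -> int:
    # Crude heuristic (assumption): roughly 4 characters per token for English.
    # A real system would count tokens with the model's own tokenizer.
    return math.ceil(len(text) / 4)


def max_shots(context_window: int, tokens_per_example: int,
              reserved_tokens: int) -> int:
    """Number of examples that fit after reserving tokens for the
    instruction, the test query, and the model's answer."""
    available = context_window - reserved_tokens
    if available <= 0 or tokens_per_example <= 0:
        return 0
    return available // tokens_per_example


# Illustrative example: one short worked math problem in an Input/Output template.
example = "Input: What is 17 * 3?\nOutput: 17 * 3 = 51, so the answer is 51.\n\n"
per_example = approx_tokens(example)
for window in (4_096, 32_768, 1_000_000):
    print(f"{window:>9} tokens -> about {max_shots(window, per_example, 2_000)} shots")
```

Under these assumptions, moving from a 4K-token window to a 1M-token window raises the budget from roughly a hundred shots to tens of thousands. Examples with long rationales or source passages fit far fewer.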

Bridging the Gap: Reinforced and Unsupervised ICL

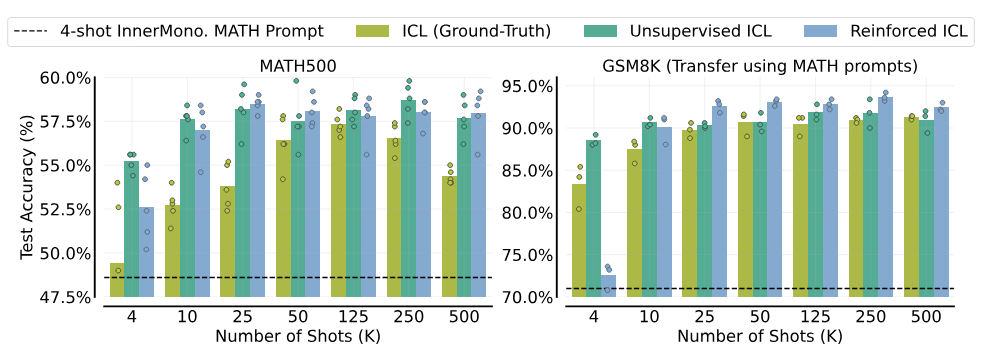

The transition from few-shot to many-shot ICL represents a significant shift in how LLMs are prompted. One practical obstacle is that many-shot prompts need many high-quality examples, and writing rationales for them by hand is costly. The paper addresses this with two techniques, Reinforced ICL and Unsupervised ICL, which reduce reliance on human-written content by using model-generated rationales or domain-specific inputs alone.

Reinforced ICL replaces human-written rationales with model-generated ones: the model writes chain-of-thought rationales for training problems, and only those that reach the correct final answer are kept as in-context examples. Unsupervised ICL goes further and removes rationales and answers entirely, prompting the model with only domain-specific inputs, such as unsolved problems, and relying on its existing knowledge to produce solutions.
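
Both techniques can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not DeepMind’s implementation: the model is a hypothetical `sample` callable that returns a (rationale, final answer) pair, answers are checked by exact string match, at most one correct rationale is kept per problem, and `make_toy_sampler` is a stand-in for a real LLM.

```python
import itertools
from typing import Callable, List, Sequence, Tuple

# Hypothetical model interface (assumption): prompt in, (rationale, final answer) out.
Sampler = Callable[[str], Tuple[str, str]]


def reinforced_icl_examples(problems: Sequence[Tuple[str, str]], sample: Sampler,
                            samples_per_problem: int = 4) -> List[Tuple[str, str, str]]:
    """Reinforced ICL sketch: sample model-written rationales and keep only
    those whose final answer matches the known answer."""
    kept = []
    for problem, gold_answer in problems:
        for _ in range(samples_per_problem):
            rationale, answer = sample(f"Problem: {problem}\nSolution:")
            if answer.strip() == gold_answer.strip():
                kept.append((problem, rationale, answer))
                break  # keep at most one correct rationale per problem
    return kept


def unsupervised_icl_prompt(problems: Sequence[str], query: str) -> str:
    """Unsupervised ICL sketch: list unsolved problems only (no rationales,
    no answers), then ask for a solution to the query."""
    blocks = [f"Problem: {p}" for p in problems]
    blocks.append(f"Problem: {query}\nSolution:")
    return "\n\n".join(blocks)


def make_toy_sampler() -> Sampler:
    # Stand-in for an LLM (assumption): for "a + b" problems it alternates
    # between a wrong and a right answer, so the filter has something to reject.
    counter = itertools.count()

    def sample(prompt: str) -> Tuple[str, str]:
        expr = prompt.split("Problem: ")[1].split("\n")[0]
        a, b = (int(t) for t in expr.split(" + "))
        if next(counter) % 2 == 0:
            return f"I think {expr} = {a + b + 1}.", str(a + b + 1)
        return f"{a} plus {b} is {a + b}.", str(a + b)

    return sample


kept = reinforced_icl_examples([("2 + 3", "5"), ("10 + 4", "14")], make_toy_sampler())
print(len(kept))  # 2: each problem's second sample is correct
print(unsupervised_icl_prompt(["1 + 1", "2 + 2"], "3 + 3"))
```

In a real pipeline, the kept (problem, rationale, answer) triples would be formatted into a many-shot prompt exactly like human-written examples, while the unsupervised prompt never shows the model a single solution.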

Unleashing the Full Potential: Many-Shot ICL in Action

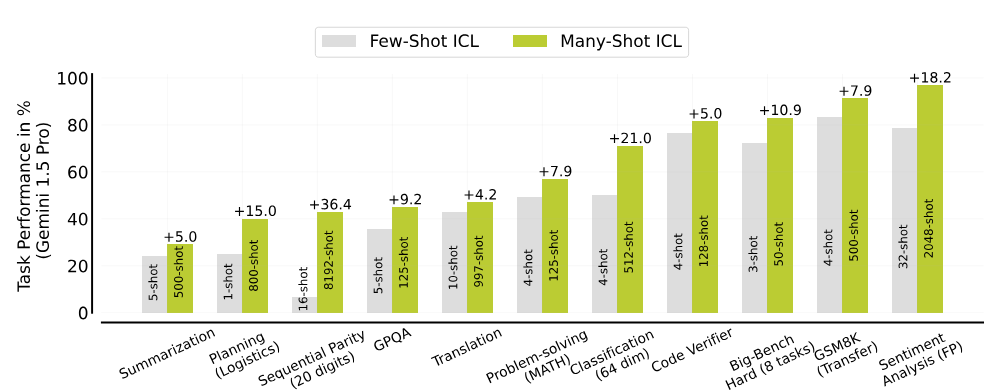

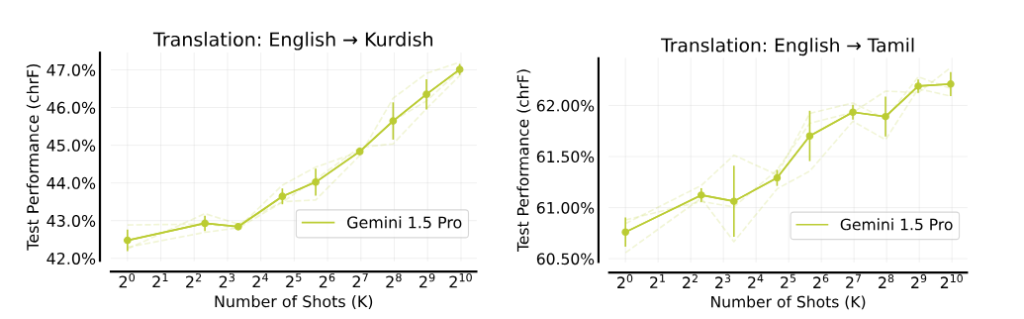

To validate the effectiveness of many-shot ICL, DeepMind researchers ran experiments across diverse domains, including machine translation, summarization, and complex reasoning. They used datasets such as MATH for mathematical problem-solving and FLORES for machine translation. The results showed significant performance gains across these tasks as the number of in-context examples increased.
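
The basic experimental pattern, measuring performance as the number of in-context examples grows, can be sketched as a simple sweep. This is a hypothetical harness, not DeepMind’s evaluation code: `accuracy_vs_shots`, the exact-match metric, and `toy_copy_model` are assumptions for illustration, and translation experiments would use a translation-quality metric rather than exact match.

```python
import random
from typing import Callable, Dict, Sequence, Tuple

Example = Tuple[str, str]
# Hypothetical model interface (assumption): prompt string in, answer string out.
Model = Callable[[str], str]


def format_prompt(shots: Sequence[Example], query: str) -> str:
    # Same Input/Output template for every shot, then the unanswered query.
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in shots]
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


def accuracy_vs_shots(model: Model, train: Sequence[Example],
                      test: Sequence[Example], shot_counts: Sequence[int],
                      seed: int = 0) -> Dict[int, float]:
    """Exact-match accuracy on `test` for each number of in-context shots."""
    rng = random.Random(seed)
    results: Dict[int, float] = {}
    for k in shot_counts:
        # Draw k shots once and reuse them for every test query at this k.
        shots = rng.sample(list(train), k)
        correct = sum(model(format_prompt(shots, x)).strip() == y for x, y in test)
        results[k] = correct / len(test)
    return results


def toy_copy_model(prompt: str) -> str:
    # Stand-in for an LLM (assumption): it can only answer a query whose exact
    # input already appears among the in-context examples.
    *shot_blocks, query_block = prompt.split("\n\n")
    query = query_block.removeprefix("Input: ").removesuffix("\nOutput:")
    for block in shot_blocks:
        x_line, y_line = block.split("\n")
        if x_line.removeprefix("Input: ") == query:
            return y_line.removeprefix("Output: ")
    return "?"


train_set = [(f"{i} * 3", str(3 * i)) for i in range(100)]
test_set = train_set[:20]
print(accuracy_vs_shots(toy_copy_model, train_set, test_set, shot_counts=[0, 10, 50, 100]))
```

The toy model only exercises the mechanics of the sweep (it requires Python 3.9+ for `removeprefix`); a real LLM generalizes from the shots rather than copying them.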

In machine translation, Gemini 1.5 Pro surpassed previous benchmarks, improving translation quality by 4.5% for Kurdish and 1.5% for Tamil compared to earlier models.

On the MATH dataset, many-shot ICL improved solution accuracy by 35%. These quantitative results illustrate the potential of many-shot ICL to enhance the performance of large language models.

Unlocking New Horizons: Applications of Many-Shot ICL

By increasing how much an LLM can learn from its prompt, many-shot ICL helps AI excel in applications that demand extensive data analysis and decision-making. Areas such as advanced reasoning, language translation, and knowledge synthesis stand to benefit greatly from the flexibility and adaptability this approach offers.

Because many-shot ICL lets a model learn from far more examples within a single prompt, without any fine-tuning, it can tackle complex problems that a handful of examples cannot adequately specify. This enhanced learning capability paves the way for more sophisticated AI applications.

Conclusion

Google DeepMind’s paper on many-shot in-context learning represents a significant milestone in AI research. By moving from few-shot to many-shot ICL and leveraging long-context models like Gemini 1.5 Pro, the researchers improved model performance across a range of tasks.

Many-shot ICL’s ability to learn from large sets of in-context examples has wide-ranging implications for fields such as machine translation, summarization, and complex reasoning. As AI continues to evolve, many-shot ICL opens doors to new possibilities and applications that were previously out of reach.

The advancements in many-shot ICL achieved by the Google DeepMind team mark another step forward in unlocking the potential of artificial intelligence.

All credit for this research goes to the researchers of this project.