Researchers at Apple Introduce ‘pfl-research’: A Fast, Easy-to-Use, and Modular Python Framework for Simulating Federated Learning

Federated learning (FL) lets many parties train a shared model collaboratively without pooling their raw data, which makes it attractive wherever data privacy matters. Progress in FL research, however, has been held back by how hard it is to simulate large-scale scenarios efficiently. Enter “pfl-research,” a Python framework developed by researchers at Apple to accelerate research on Private Federated Learning (PFL).

Understanding Federated Learning

Before looking at pfl-research itself, it helps to recall how federated learning works. FL is a decentralized approach to model training: instead of collecting data on a central server, each edge device trains the model locally on its own data. Only the resulting model updates, not the raw data, are sent to a central server, which aggregates them into an improved global model. Because the raw data never leaves the device, user privacy is better protected.
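To make this flow concrete, here is a minimal sketch of one common aggregation scheme, federated averaging (FedAvg), in plain Python. It is an illustration only, not pfl-research's API: the toy one-dimensional linear model, the function names `local_train` and `federated_round`, and the learning-rate and epoch values are all assumptions chosen for brevity.

```python
from typing import List, Sequence, Tuple

Example = Tuple[float, float]  # (x, y) pair held by one client


def local_train(weights: List[float], data: Sequence[Example],
                lr: float = 0.1, epochs: int = 5) -> List[float]:
    """Run a few epochs of SGD on one client's data for the toy model y = w*x + b.

    The raw (x, y) pairs never leave this function; only the updated weights do.
    """
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y  # prediction error on this example
            w -= lr * err * x      # gradient of 0.5 * err**2 with respect to w
            b -= lr * err          # gradient of 0.5 * err**2 with respect to b
    return [w, b]


def federated_round(global_weights: List[float],
                    client_datasets: Sequence[Sequence[Example]]) -> List[float]:
    """One FedAvg round: every client trains locally from the current global
    weights, then the server averages the returned weights, weighting each
    client by its number of examples."""
    total = sum(len(d) for d in client_datasets)
    new_weights = [0.0] * len(global_weights)
    for data in client_datasets:
        local = local_train(list(global_weights), data)
        for i, value in enumerate(local):
            new_weights[i] += value * len(data) / total
    return new_weights


if __name__ == "__main__":
    # Three simulated clients, each holding a different slice of y = 2x + 1.
    xs = [i / 29 for i in range(30)]
    clients = [[(x, 2 * x + 1) for x in xs[k::3]] for k in range(3)]
    weights = [0.0, 0.0]
    for _ in range(30):
        weights = federated_round(weights, clients)
    print(weights)  # should be close to [2.0, 1.0]
```

Because every client's data in this toy setup follows y = 2x + 1, the global weights approach [2, 1] over the rounds. Weighting by dataset size means that clients holding more examples pull the average harder, which matters once client datasets differ in size.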

The Challenge of Simulating Federated Learning

Researchers rely on simulation to evaluate FL algorithms before deploying them on real devices, so accurate simulation is crucial for progress in the field. Existing tools, however, often lack the speed and scalability that modern FL research demands.

Introducing pfl-research

pfl-research is a simulation framework built to accelerate PFL research. Its authors designed it to be fast, modular, and easy to use, so that researchers can explore new ideas without being held back by slow simulations.

Key Features of pfl-research

1. Versatility

pfl-research works with several machine learning frameworks, including TensorFlow and PyTorch, and also supports traditional non-neural-network models. Researchers can therefore plug it into the tools they already use in their existing workflows.

2. Privacy Preservation

Privacy is central to FL, and pfl-research integrates state-of-the-art privacy algorithms, such as differential privacy mechanisms, directly into its simulations. This lets researchers study how privacy protections affect model quality before running experiments on real user data.
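As an illustration of the kind of mechanism involved, the sketch below applies a generic Gaussian mechanism to one cohort of client updates, in the style of DP-FedAvg: each update is clipped to a maximum L2 norm, the clipped updates are summed, and Gaussian noise scaled to the clipping bound is added. This is not pfl-research's implementation; the function names and parameters (`clip_norm`, `noise_multiplier`) are hypothetical, and converting a noise level into a formal (ε, δ) guarantee requires a privacy accountant, which is omitted here.

```python
import math
import random
from typing import List, Sequence


def clip_update(update: Sequence[float], clip_norm: float) -> List[float]:
    """Scale an update down so its L2 norm is at most clip_norm (no-op if already smaller)."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]


def private_mean(updates: Sequence[Sequence[float]], clip_norm: float,
                 noise_multiplier: float, rng: random.Random) -> List[float]:
    """Gaussian mechanism over one cohort (hypothetical helper): clip each client
    update, sum them, add N(0, (noise_multiplier * clip_norm)**2) noise to every
    coordinate, and divide by the cohort size."""
    clipped = [clip_update(u, clip_norm) for u in updates]
    dim = len(clipped[0])
    summed = [sum(u[i] for u in clipped) for i in range(dim)]
    sigma = noise_multiplier * clip_norm  # noise is calibrated to the per-client bound
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(updates) for v in noisy]


if __name__ == "__main__":
    rng = random.Random(0)
    updates = [[3.0, 4.0], [0.3, 0.4], [-1.0, 0.5]]
    print(private_mean(updates, clip_norm=1.0, noise_multiplier=1.0, rng=rng))
```

Clipping bounds how much any single client can change the sum, which is what allows the added noise to mask an individual contribution. A larger `noise_multiplier` gives stronger privacy at the cost of noisier averages, which is exactly the kind of trade-off a simulator lets researchers measure.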

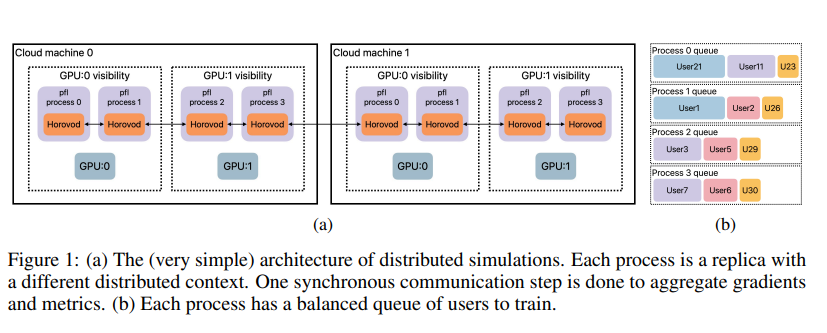

3. Modular Architecture

pfl-research is built from interchangeable components, such as Dataset, Model, Algorithm, and Aggregator. Much like Lego bricks, these pieces can be combined and swapped to tailor a simulation to a specific research question, whether that means testing a novel algorithm or evaluating it on different datasets.
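The component-based idea can be sketched with Python protocols. The classes below only borrow the component names mentioned above; their methods, the toy mean-estimation model, and the `simulate` loop are hypothetical and are not pfl-research's actual interfaces. The point is that replacing one component, here the aggregator, changes the simulation without touching the rest.

```python
import statistics
from typing import Dict, List, Protocol


# Hypothetical component interfaces, named after the components in the text.
class Dataset(Protocol):
    def clients(self) -> List[str]: ...
    def data_for(self, client_id: str) -> List[float]: ...


class Model(Protocol):
    params: List[float]


class Algorithm(Protocol):
    def local_update(self, params: List[float], data: List[float]) -> List[float]: ...


class Aggregator(Protocol):
    def aggregate(self, updates: List[List[float]]) -> List[float]: ...


# Toy implementations of each component.
class DictDataset:
    def __init__(self, data: Dict[str, List[float]]) -> None:
        self._data = data

    def clients(self) -> List[str]:
        return list(self._data)

    def data_for(self, client_id: str) -> List[float]:
        return self._data[client_id]


class MeanEstimator:
    """Toy model with a single parameter: an estimate of the population mean."""
    def __init__(self) -> None:
        self.params = [0.0]


class LocalMeanStep:
    """Toy algorithm: propose the full step from the current parameter to the client's local mean."""
    def local_update(self, params: List[float], data: List[float]) -> List[float]:
        return [statistics.fmean(data) - params[0]]


class MeanAggregator:
    def aggregate(self, updates: List[List[float]]) -> List[float]:
        return [statistics.fmean(u[i] for u in updates) for i in range(len(updates[0]))]


class MedianAggregator:
    """Coordinate-wise median: less sensitive to outlier clients than the mean."""
    def aggregate(self, updates: List[List[float]]) -> List[float]:
        return [statistics.median(u[i] for u in updates) for i in range(len(updates[0]))]


def simulate(dataset: Dataset, model: Model, algorithm: Algorithm,
             aggregator: Aggregator, rounds: int) -> List[float]:
    """Generic simulation loop that only talks to the component interfaces."""
    for _ in range(rounds):
        updates = [algorithm.local_update(model.params, dataset.data_for(c))
                   for c in dataset.clients()]
        delta = aggregator.aggregate(updates)
        model.params = [p + d for p, d in zip(model.params, delta)]
    return model.params


if __name__ == "__main__":
    data = DictDataset({"a": [1.0, 2.0, 3.0], "b": [4.0, 5.0, 6.0], "c": [100.0]})
    print(simulate(data, MeanEstimator(), LocalMeanStep(), MeanAggregator(), rounds=3))
    print(simulate(data, MeanEstimator(), LocalMeanStep(), MedianAggregator(), rounds=3))
```

With client means of 2, 5, and 100, the mean aggregator settles at about 35.67 while the median aggregator settles at 5.0: a single swapped component (here, a more outlier-robust aggregator) changes the outcome while the dataset, model, algorithm, and loop stay the same.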

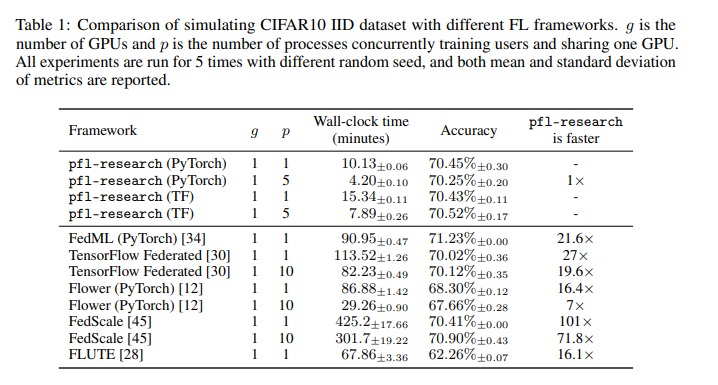

Performance Benchmarking

In comparative tests against other FL simulators, pfl-research came out fastest, running simulations up to 72 times faster. Faster simulations let researchers run larger and more complex FL experiments in the same amount of time.

Future Developments

The pfl-research team plans to keep developing the framework. Planned work includes continued support for new algorithms and datasets, as well as cross-silo simulations, in which a small number of organizations or institutions (rather than many individual devices) train a model together. The team also intends to explore new simulation architectures to further improve scalability and versatility.

Conclusion

pfl-research combines speed, modularity, and built-in privacy mechanisms in a single Python framework for simulating federated learning. By making large-scale simulations faster and easier to run, it could help researchers develop and evaluate new FL and PFL algorithms more quickly.

Check out the Paper. All credit for this research goes to the researchers of this project.