Unveiling a New Approach to Tackling Noise in Federated Hyperparameter Tuning

As machine learning models grow more capable, careful hyperparameter tuning matters more for getting the best performance out of them. Federated Learning presents unique challenges here. The interplay of data heterogeneity, system diversity, and stringent privacy constraints introduces significant noise into the tuning process, which calls the efficacy of traditional methods into question. A recent paper from CMU studies this problem and proposes a new approach that opens up promising possibilities for hyperparameter tuning in Federated Learning.

The Challenges of Hyperparameter Tuning in Federated Learning

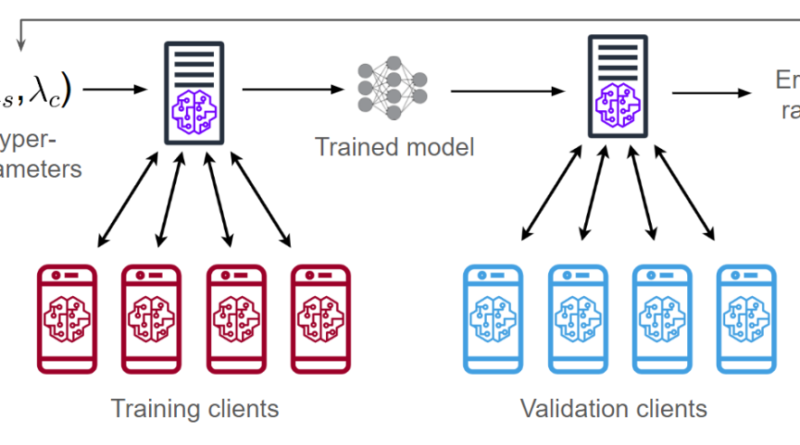

In Federated Learning, the training process is distributed across multiple devices or edge nodes, with each node holding its own dataset. This decentralized setup introduces data heterogeneity, since each node's data may follow a different distribution and have different characteristics. Additionally, privacy concerns require keeping the data on the edge nodes rather than centralizing it for training. These factors complicate hyperparameter tuning: every evaluation of a candidate configuration is computed from scattered, non-identical, privacy-protected data, so it is only a noisy estimate of how good that configuration really is.

Prominent techniques such as Random Search (RS), Hyperband (HB), Tree-structured Parzen Estimator (TPE), and Bayesian Optimization HyperBand (BOHB) are common choices for hyperparameter tuning, including in Federated Learning. However, the CMU researchers have identified vulnerabilities in these methods when evaluations are noisy [1]. Noise can cause a tuner to rank a mediocre configuration above a good one, leading to suboptimal hyperparameter choices and undermining the performance of the trained models.
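To make the effect of noisy evaluations concrete, here is a minimal simulation sketch in Python. It is not the paper's experimental setup: the uniform "true" error rates, the Gaussian noise model, and the function names `noisy_random_search` and `mean_regret` are all assumptions made for illustration. It measures how much worse the configuration picked by random search is, on average, than the truly best one as evaluation noise grows.

```python
import random


def noisy_random_search(true_errors, noise_std, rng):
    """Return the index of the config whose *noisy* evaluation looks best."""
    # Assumption: evaluation noise is additive Gaussian. Real federated noise
    # comes from several sources and need not be Gaussian.
    noisy = [err + rng.gauss(0.0, noise_std) for err in true_errors]
    return min(range(len(noisy)), key=noisy.__getitem__)


def mean_regret(num_configs=16, noise_std=0.0, trials=2000, seed=0):
    """Average gap in true error between the selected and the best config."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        # Hypothetical "true" error rates of randomly sampled configurations.
        true_errors = [rng.uniform(0.1, 0.5) for _ in range(num_configs)]
        chosen = noisy_random_search(true_errors, noise_std, rng)
        total += true_errors[chosen] - min(true_errors)
    return total / trials


if __name__ == "__main__":
    for std in (0.0, 0.05, 0.2):
        print(f"noise_std={std}: mean regret={mean_regret(noise_std=std):.4f}")
```

With zero noise the search always picks the best sampled configuration, so the regret is exactly zero. As the noise level approaches the spread of the true errors, the selection becomes close to random.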

The One-shot Proxy RS Method: A Paradigm Shift in Federated Hyperparameter Tuning

To tackle the challenges of noise in Federated Hyperparameter Tuning, the CMU researchers propose a novel approach called the one-shot proxy RS method. This method introduces a strategic paradigm shift in hyperparameter optimization for Federated Learning, leveraging the potential of proxy data to enhance the effectiveness of tuning [1].

At its core, the one-shot proxy RS method trains and evaluates candidate hyperparameter configurations on proxy data, which acts as a buffer against noisy evaluations. In the paper, proxy data is public data available to the server rather than private data pulled from the edge nodes, so tuning on it avoids touching client data and enables more efficient hyperparameter exploration. Random search is run once on the proxy data, and the configuration it selects is then used to train the model on the clients. By making use of this underutilized resource, the method mitigates the impact of noise and provides a more stable foundation for optimization.
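The idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the search space in `sample_config`, the callables `train_and_eval_on_proxy` and `train_on_clients`, and the toy stand-ins at the bottom are all hypothetical. It also assumes "one-shot" means the search runs entirely on proxy data and only the winning configuration is carried over to federated training.

```python
import math
import random


def sample_config(rng):
    """Draw one configuration from a hypothetical search space."""
    return {
        "lr": 10 ** rng.uniform(-4, -1),        # log-uniform learning rate
        "batch_size": rng.choice([16, 32, 64]),
    }


def one_shot_proxy_rs(train_and_eval_on_proxy, train_on_clients,
                      num_configs, seed=0):
    """Random search on proxy data, then a single federated training run."""
    rng = random.Random(seed)
    candidates = [sample_config(rng) for _ in range(num_configs)]
    # Every candidate is trained and scored on proxy data only, so no noisy
    # client-side evaluation is involved (lower score = better).
    scores = [train_and_eval_on_proxy(cfg) for cfg in candidates]
    best = candidates[min(range(num_configs), key=scores.__getitem__)]
    # Only the selected configuration is used for training on the clients.
    return best, train_on_clients(best)


# Toy stand-ins so the sketch runs end to end (both are assumptions).
def toy_proxy_eval(cfg):
    # Pretend the proxy validation error is lowest near lr = 1e-2.
    return (math.log10(cfg["lr"]) + 2.0) ** 2


def toy_train_on_clients(cfg):
    # Placeholder for a real federated training run.
    return {"trained_with": cfg}


best, model = one_shot_proxy_rs(toy_proxy_eval, toy_train_on_clients,
                                num_configs=16)
```

In practice, how well this works depends on how closely the proxy data resembles the client data, since the ranking of configurations on the proxy data has to transfer to the federated setting.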

The Resilience of the One-shot Proxy RS Method in the Face of Noise

The CMU research team examines the one-shot proxy RS method in detail and highlights its adaptability and robust performance. In scenarios where traditional methods falter because of heightened evaluation noise and privacy constraints, this method offers a practical alternative within Federated Learning.

The research team substantiates their findings with a performance analysis across various Federated Learning datasets. The results show that the one-shot proxy RS method can improve model performance when evaluations are noisy. Because the search itself runs on proxy data, the method stays robust even when client-side evaluations are unreliable.

An Innovation in the Federated Hyperparameter Tuning Landscape

The one-shot proxy RS method presents an innovative approach to hyperparameter tuning in Federated Learning. Its unique utilization of proxy data addresses the challenges posed by data heterogeneity and privacy concerns, two critical aspects of the Federated Learning paradigm.

The research conducted by CMU provides valuable insights into the dynamics of Federated Learning and introduces a tool that holds the potential to overcome hurdles in hyperparameter tuning. By acknowledging and leveraging the opportunities presented by proxy data, the one-shot proxy RS method redefines the trajectory of optimization in Federated Learning.

Conclusion

In the pursuit of refining machine learning models through hyperparameter tuning, Federated Learning introduces unique challenges due to data heterogeneity and privacy constraints. Traditional methods may falter in the face of noise in evaluations, undermining the performance of models.

However, the recent AI paper from CMU unveils the one-shot proxy RS method as a promising solution to these challenges. By leveraging proxy data and recalibrating the approach to hyperparameter optimization, the method provides a stable foundation for robust tuning in Federated Learning settings.

This approach opens up exciting possibilities for researchers and practitioners working on Federated Learning, offering a new perspective on hyperparameter tuning dynamics and the associated noise. The CMU research team’s commitment to exploring and understanding the method’s inner workings contributes significantly to the advancement of the field.

The one-shot proxy RS method is a notable step forward in Federated Hyperparameter Tuning, pointing the way to improved model performance and helping Federated Learning reach its full potential.