Unveiling Fourier Features: A New Mathematical Theory in Neural Networks

Artificial neural networks (ANNs) have demonstrated remarkable capabilities in learning and pattern recognition tasks. Inspired by the human brain, these systems learn complex representations from data without explicit programming. However, the mechanisms that drive the emergence of particular features in neural networks, such as Fourier features, are still not well understood.

Researchers from KTH, the Redwood Center for Theoretical Neuroscience, and UC Santa Barbara have recently introduced a mathematical theory that explains the rise of Fourier features in learning systems such as neural networks. The theory identifies the principles that govern when these features emerge and clarifies why they matter in machine learning.

But what are Fourier features, and why do they play a crucial role in learning systems like neural networks? Let’s dive deeper into the harmonics of learning and understand the mathematical theory behind the rise of Fourier features.

Understanding Fourier Features

Fourier features are localized or non-localized versions of the canonical 2D Fourier basis functions, observed in image models and neural networks. The localized versions resemble Gabor filters or wavelets, and they have been found to emerge in the initial layers of vision models trained on a variety of objectives, such as efficient coding, classification, and temporal coherence.
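To make "a localized version of a 2D Fourier basis function" concrete, here is a minimal sketch of a Gabor filter: a cosine grating multiplied by a Gaussian envelope. The function name `gabor_2d` and its parameters (`size`, `wavelength`, `theta`, `sigma`, `phase`) are illustrative choices, not anything taken from the paper. Making `sigma` very large flattens the envelope and leaves a plain, non-localized grating.

```python
import math

def gabor_2d(size, wavelength, theta, sigma, phase=0.0):
    """Return a size x size Gabor filter as a list of rows.

    A cosine grating (a 2D Fourier basis function) with the given wavelength,
    orientation theta (radians) and phase, multiplied by an isotropic Gaussian
    envelope of width sigma centred on the kernel.
    """
    half = (size - 1) / 2.0
    kernel = []
    for i in range(size):
        row = []
        for j in range(size):
            x, y = j - half, i - half
            # Position along the direction in which the grating oscillates.
            x_rot = x * math.cos(theta) + y * math.sin(theta)
            envelope = math.exp(-(x * x + y * y) / (2.0 * sigma ** 2))
            carrier = math.cos(2.0 * math.pi * x_rot / wavelength + phase)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel

# Small sigma: a localized, Gabor-like feature.
localized = gabor_2d(15, wavelength=6.0, theta=0.0, sigma=2.0)
# Very large sigma: the envelope is ~1 everywhere, leaving a plain grating,
# i.e. a non-localized Fourier basis function.
non_localized = gabor_2d(15, wavelength=6.0, theta=0.0, sigma=1e9)
```

The single `sigma` parameter is what moves the filter between the two kinds of Fourier features described above.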

In addition to localized Fourier features, non-localized Fourier features have been observed in networks trained on tasks involving cyclic wraparound, such as modular arithmetic or invariance to cyclic translations.
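Cyclic wraparound favors non-localized Fourier features because of a standard identity: on the integers mod n, the complex exponential of frequency k turns addition into multiplication, and it repeats with period n. The sketch below shows that identity computing modular addition. It is only an illustration of why such features suit cyclic tasks; the helper names and the choice of a single frequency `k` are assumptions, not the mechanism reported for any specific network in the paper. `pow(k, -1, n)` requires Python 3.8 or later.

```python
import cmath
import math

def fourier_embed(a, n, k):
    """Frequency-k Fourier feature of a on Z/n: exp(2*pi*i*k*a/n).
    It is non-localized (unit modulus for every a) and wraps around with period n."""
    return cmath.exp(2j * math.pi * k * a / n)

def decode(z, n, k):
    """Invert fourier_embed for frequency k; requires gcd(k, n) == 1."""
    angle = cmath.phase(z) % (2 * math.pi)      # angle in [0, 2*pi)
    ka = round(angle * n / (2 * math.pi)) % n    # recovers k * a mod n
    return (ka * pow(k, -1, n)) % n              # multiply by k^{-1} mod n

def modular_add(a, b, n, k):
    """(a + b) mod n computed by multiplying Fourier features:
    exp(i*theta_a) * exp(i*theta_b) = exp(i*(theta_a + theta_b))."""
    return decode(fourier_embed(a, n, k) * fourier_embed(b, n, k), n, k)

print(modular_add(5, 4, 7, 3))  # prints 2, since 9 mod 7 = 2
```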

The Mathematical Theory Behind Fourier Features

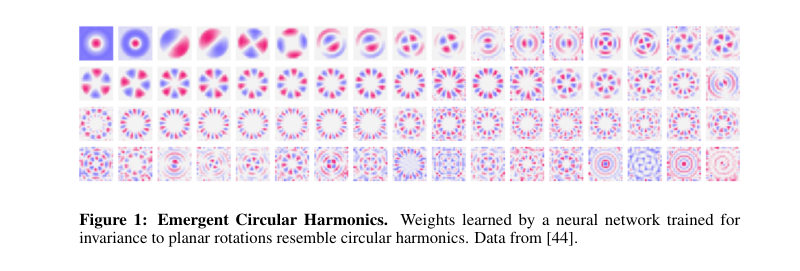

The mathematical theory proposed by the researchers aims to provide a deeper understanding of the emergence of Fourier features in learning systems. The theory revolves around the concept of invariance, which refers to the learner’s insensitivity to certain transformations, such as planar translation or rotation.

The researchers argue that the downstream invariance of a learning system is what gives rise to Fourier features. They derive theoretical guarantees for the emergence of Fourier features in invariant learners, and these guarantees apply across a range of machine learning models. The derivation builds on the observation that natural data exhibit symmetries, and that invariance to those symmetries can be injected into a learning system either implicitly or explicitly.
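One familiar place where invariance and Fourier analysis meet: the magnitude of each discrete Fourier transform (DFT) coefficient does not change when a signal is cyclically translated, because a shift only multiplies each coefficient by a unit-modulus phase. The sketch below checks this numerically. Note that it shows the direction "Fourier features give invariance", whereas the paper argues the converse (invariance gives rise to Fourier features), so treat it as intuition only. The helper names are illustrative.

```python
import cmath
import math

def dft(x):
    """Naive O(n^2) discrete Fourier transform of a sequence of numbers."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def cyclic_shift(x, s):
    """Translate x by s positions with wraparound: (T_s x)[t] = x[t - s]."""
    n = len(x)
    return [x[(t - s) % n] for t in range(n)]

def shift_invariant_features(x):
    """Magnitudes of the DFT coefficients; a cyclic shift only changes their phases."""
    return [abs(c) for c in dft(x)]

x = [3.0, 1.0, 4.0, 1.0, 5.0, 9.0, 2.0, 6.0]
base = shift_invariant_features(x)
shifted = shift_invariant_features(cyclic_shift(x, 3))
# base and shifted agree up to floating-point error, although the signals differ.
```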

The standard discrete Fourier transform, a special case of the more general Fourier transform on groups, plays a central role in the theory. By replacing the basis of harmonics with other unitary group representations, the theory covers a variety of settings and neural network architectures, laying a foundation for a learning theory of representations in both artificial and biological neural systems.
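The sense in which the DFT is "the Fourier transform on a group" can be checked for the cyclic group Z/n. Its harmonics h_k(t) = exp(2πikt/n) are orthogonal, and each one is an eigenvector of every cyclic shift, which is how the DFT splits the shift action into one-dimensional pieces. A minimal sketch follows; the helper names are illustrative. For non-abelian groups the analogous pieces are higher-dimensional unitary irreducible representations, which this sketch does not cover.

```python
import cmath
import math

def harmonic(n, k):
    """k-th harmonic of the cyclic group Z/n: t -> exp(2*pi*i*k*t/n)."""
    return [cmath.exp(2j * math.pi * k * t / n) for t in range(n)]

def cyclic_shift(x, s):
    """Group action of s in Z/n on a signal: (T_s x)[t] = x[t - s]."""
    n = len(x)
    return [x[(t - s) % n] for t in range(n)]

def inner(u, v):
    """Complex inner product sum_t u[t] * conj(v[t])."""
    return sum(a * b.conjugate() for a, b in zip(u, v))

def is_eigenvector(n, k, s, tol=1e-9):
    """Check T_s h_k = exp(-2*pi*i*k*s/n) * h_k, entry by entry."""
    h = harmonic(n, k)
    lam = cmath.exp(-2j * math.pi * k * s / n)
    return all(abs(a - lam * b) < tol for a, b in zip(cyclic_shift(h, s), h))

n = 8
# Every harmonic is an eigenvector of every shift in Z/8.
all_eigen = all(is_eigenvector(n, k, s) for k in range(n) for s in range(n))
```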

The Informal Theorems

The researchers present two informal theorems that support their theory. The first states that if a parametric function of a specific kind is invariant to the action of a finite group G, then each component of its weights coincides with a harmonic of G up to a linear transformation. In other words, the weights of an invariant model recover, up to a linear transformation, the Fourier transform on G.
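A toy case with the same flavor as the first theorem fits in a few lines. Assume (our simplification, not the paper's model class) a single "neuron" f_w(x) = |⟨w, x⟩| acting on length-n signals, and require it to be invariant to every cyclic shift. Since |⟨w, T x⟩| = |⟨T⁻¹w, x⟩|, invariance for all x forces T⁻¹w to be a unit-modulus multiple of w, and because the cyclic shift has n distinct eigenvalues, w must be a scaled Fourier harmonic. The sketch compares a harmonic weight vector with a random one; all names are hypothetical.

```python
import cmath
import math
import random

def inner(w, x):
    """Complex inner product <w, x> = sum_t conj(w[t]) * x[t]."""
    return sum(wt.conjugate() * xt for wt, xt in zip(w, x))

def cyclic_shift(x, s):
    """(T_s x)[t] = x[t - s] with wraparound."""
    n = len(x)
    return [x[(t - s) % n] for t in range(n)]

def neuron(w, x):
    """Toy model: modulus of a single linear response."""
    return abs(inner(w, x))

def max_invariance_gap(w, trials=20, seed=0):
    """Largest |f(T_s x) - f(x)| over random complex inputs x and all shifts s."""
    rng = random.Random(seed)
    n = len(w)
    gap = 0.0
    for _ in range(trials):
        x = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n)]
        base = neuron(w, x)
        for s in range(n):
            gap = max(gap, abs(neuron(w, cyclic_shift(x, s)) - base))
    return gap

n = 8
harmonic_w = [cmath.exp(2j * math.pi * 3 * t / n) for t in range(n)]  # frequency 3
rng = random.Random(1)
random_w = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n)]
# Expect a gap at floating-point level for harmonic_w and a clearly
# nonzero gap for random_w.
```

Scaling a harmonic (a one-dimensional "linear transformation") leaves it invariant, which is the toy analog of the "up to a linear transformation" clause.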

The second theorem states that if a parametric function is almost invariant to G, according to certain functional bounds, and its weights are orthonormal, then the multiplication table of G can be recovered from the weights. This means that the algebraic structure of an unknown group can, in principle, be read off from an invariant model.
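For an abelian group, the reason a multiplication table can be read off from harmonic weights is easy to see: harmonics of abelian groups are characters, so χ_k(g·h) = χ_k(g)·χ_k(h). The sketch below assumes the weights are (nearly) the unitary DFT matrix of Z/n, with one column per group element, and recovers g·h as the column closest to the elementwise product of columns g and h. These assumptions, the noise model, and all helper names are ours for illustration, not the paper's exact conditions or procedure; non-abelian groups would need matrix-valued representations instead.

```python
import cmath
import math
import random

def unitary_dft_weights(n):
    """n x n orthonormal weights: row k is the k-th harmonic of Z/n over sqrt(n).
    Column g collects the responses associated with group element g."""
    return [[cmath.exp(2j * math.pi * k * g / n) / math.sqrt(n) for g in range(n)]
            for k in range(n)]

def perturb(weights, eps, seed=0):
    """Add small complex noise to every weight, mimicking an only-almost-invariant model."""
    rng = random.Random(seed)
    return [[w + complex(rng.uniform(-eps, eps), rng.uniform(-eps, eps)) for w in row]
            for row in weights]

def recover_table(weights):
    """Estimate a multiplication table from square weights.

    Rescaled by sqrt(n), column g approximates the character values chi_k(g).
    For an abelian group chi_k(g * h) = chi_k(g) * chi_k(h), so g * h is taken
    to be the column closest to the elementwise product of columns g and h.
    """
    n = len(weights)
    cols = [[math.sqrt(n) * weights[k][g] for k in range(n)] for g in range(n)]
    table = []
    for g in range(n):
        row = []
        for h in range(n):
            prod = [a * b for a, b in zip(cols[g], cols[h])]
            row.append(min(range(n), key=lambda c: sum(abs(p - q) ** 2
                                                       for p, q in zip(prod, cols[c]))))
        table.append(row)
    return table

table = recover_table(perturb(unitary_dft_weights(6), eps=0.01))
# Entry [g][h] should be (g + h) % 6, the addition table of Z/6.
```

Distinct character columns sit far apart (squared distance 2n), which is why small perturbations do not change the recovered table.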

Practical Implications and Future Directions

The theory for the rise of Fourier features in learning systems like neural networks may have practical implications. Understanding how and why Fourier features emerge could help researchers design more efficient and effective neural network architectures. It also offers insight into the mechanisms by which learning systems build their representations, with potential relevance to tasks such as image recognition, classification, and generative modeling.

As future work, the authors point to developing analogs of the theory over the real numbers, for example for continuous groups rather than the finite groups covered by the current theorems. Extending Fourier features and invariance principles to continuous domains would bring the theory closer to current practice in the field.

Conclusion

The rise of Fourier features in learning systems like neural networks has been a subject of interest in the machine learning community. The theory proposed by the researchers explains why these features emerge and why they matter. Building on invariance and the Fourier transform on groups, it connects a model's invariance, its weights, and the algebraic structure of the underlying group.

This theory opens up new opportunities for designing more powerful and efficient neural network architectures. The insights gained from understanding Fourier features could inform future advances in machine learning, including tasks such as image recognition, classification, and generative modeling.

Check out the Paper. All credit for this research goes to the researchers of this project.