Exploring bGPT: A Deep Learning Model with Next-Byte Prediction

In the fast-moving field of artificial intelligence (AI), researchers are working to build models that can understand and simulate the digital world. One such model that has recently gained attention is bGPT, a deep learning model trained to predict the next byte in a sequence of binary data. Because it learns directly from bytes, bGPT could prove useful in fields ranging from cybersecurity to software diagnostics. In this article, we look at how bGPT works and how well it simulates the digital landscape.

Understanding Byte Models and their Potential

Byte models have emerged as powerful tools for processing binary data, the raw form in which virtually all digital information is stored. While traditional deep learning models focus on human-centric data such as text and images, byte models offer a versatile and privacy-preserving way to handle diverse data types. They have shown promise in tasks such as malware detection, program analysis, and language processing.

However, current research tends to apply byte models to narrow, task-specific problems. This focus leaves largely unexplored their broader potential to predict, simulate, and diagnose the behavior of algorithms and hardware in the digital world.

Introducing bGPT: A Novel Approach

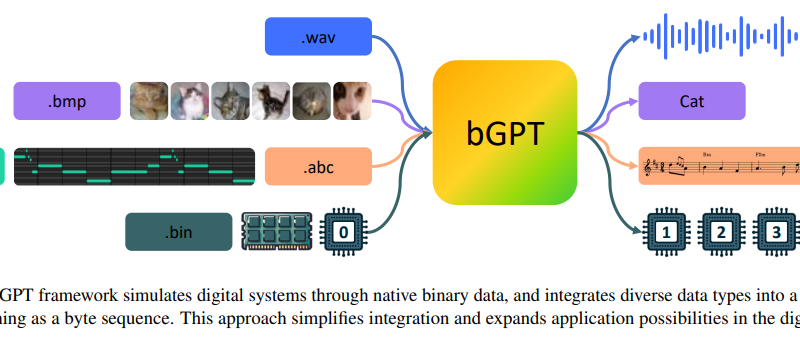

A team of researchers from Microsoft Research, Tsinghua University, and the Central Conservatory of Music, China, has introduced a model named bGPT. Rather than tokenizing text or processing images and audio in their native formats, bGPT operates directly on raw bytes, the basic unit of all digital information, and learns the patterns within them.

The architecture of bGPT is built on a hierarchical transformer framework designed to process long byte sequences efficiently. Byte sequences are segmented into fixed-size patches, and each patch is mapped to a dense vector through a linear projection layer. Because the patch-level model attends over patches rather than individual bytes, the sequence it must process is shorter by a factor equal to the patch size. The model includes a patch-level decoder that predicts the features of the next patch and a byte-level decoder that reconstructs the byte sequence within each patch from those features.
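To make the input stage more concrete, here is a minimal sketch in plain Python of how a byte string might be split into patches and linearly projected into dense vectors. The patch size, embedding width, padding id, and one-hot encoding are assumptions chosen for illustration, all function names are hypothetical, and the random weights stand in for a learned projection. The sketch covers only the patch embedding step, not the patch-level or byte-level decoders.

```python
import random

VOCAB = 257     # 256 byte values plus one padding id (assumption)
PAD = 256       # hypothetical id used to fill out the final patch
PATCH_SIZE = 4  # illustrative only; real models use larger patches
HIDDEN = 8      # illustrative embedding width


def segment_into_patches(data: bytes, patch_size: int = PATCH_SIZE) -> list[list[int]]:
    """Split a byte string into fixed-size patches, padding the last one with PAD."""
    ids = list(data)
    ids += [PAD] * ((-len(ids)) % patch_size)
    return [ids[i:i + patch_size] for i in range(0, len(ids), patch_size)]


def one_hot_patch(patch: list[int]) -> list[float]:
    """Concatenate the one-hot vectors of every id in a patch into one flat vector."""
    vec = [0.0] * (len(patch) * VOCAB)
    for pos, byte_id in enumerate(patch):
        vec[pos * VOCAB + byte_id] = 1.0
    return vec


def make_projection(in_dim: int, out_dim: int, seed: int = 0) -> list[list[float]]:
    """Random weights standing in for a learned linear projection layer."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(in_dim)] for _ in range(out_dim)]


def project(weights: list[list[float]], vec: list[float]) -> list[float]:
    """Matrix-vector product: map one flat patch vector to a dense embedding."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]


patches = segment_into_patches(b"bGPT!")
print(patches)  # [[98, 71, 80, 84], [33, 256, 256, 256]]

weights = make_projection(PATCH_SIZE * VOCAB, HIDDEN)
embeddings = [project(weights, one_hot_patch(p)) for p in patches]
print(len(embeddings), len(embeddings[0]))  # 2 8
```

Here a five-byte input becomes two patch embeddings, so a patch-level transformer would attend over 2 positions instead of 5; with larger patches the reduction grows proportionally.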

bGPT is trained with two kinds of objectives: generative modeling through next-byte prediction, and classification. This training approach enables bGPT to perform well in digital media processing and algorithm simulation. The model has been evaluated on datasets such as Wikipedia, AG News, ImageNet, and CPU States, with computational costs benchmarked on NVIDIA V100 GPUs.
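The next-byte objective itself can be illustrated without a transformer. The sketch below, in plain Python, builds (context, next byte) training pairs and scores a predictor by its average negative log-likelihood in bits per byte. An add-one-smoothed bigram byte model stands in for bGPT here, purely as an assumption to make the loss computable; it is not how bGPT predicts bytes, and the class and function names are hypothetical.

```python
import math
from collections import Counter


def next_byte_pairs(data: bytes) -> list[tuple[bytes, int]]:
    """Every prefix of the sequence paired with the byte that follows it."""
    return [(data[:i], data[i]) for i in range(1, len(data))]


class BigramByteModel:
    """Stand-in predictor (not bGPT): add-one smoothed P(next byte | previous byte)."""

    def __init__(self) -> None:
        self.pair_counts: Counter = Counter()
        self.prev_counts: Counter = Counter()

    def fit(self, data: bytes) -> "BigramByteModel":
        for prev, nxt in zip(data, data[1:]):
            self.pair_counts[(prev, nxt)] += 1
            self.prev_counts[prev] += 1
        return self

    def prob(self, context: bytes, nxt: int) -> float:
        prev = context[-1]  # a bigram model only looks at the last byte
        return (self.pair_counts[(prev, nxt)] + 1) / (self.prev_counts[prev] + 256)


def bits_per_byte(model: BigramByteModel, data: bytes) -> float:
    """Mean of -log2 p(next byte | context) over all prediction steps."""
    pairs = next_byte_pairs(data)
    total_bits = -sum(math.log2(model.prob(ctx, nxt)) for ctx, nxt in pairs)
    return total_bits / len(pairs)


sample = b"abababababababab"
print(bits_per_byte(BigramByteModel(), sample))                    # 8.0 (uniform guess)
print(bits_per_byte(BigramByteModel().fit(sample), sample) < 8.0)  # True
```

An untrained model that guesses uniformly over 256 values scores exactly 8 bits per byte; training a better predictor drives this number toward zero.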

Strong Results in Algorithm and Hardware Simulation

One of bGPT’s most notable results is in simulating algorithms that transform digital media. When converting symbolic music data into binary MIDI format, bGPT achieves an error rate of just 0.0011 bits per byte, the model’s average prediction loss per output byte. A figure this close to zero suggests the model has closely learned the underlying conversion algorithm.
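As a rough worked reading of that figure (our own arithmetic, not a calculation reported in the paper), bits per byte (BPB) is the model’s average negative log-likelihood per byte, measured in bits. Inverting it gives the geometric-mean probability the model assigns to each correct output byte:

```latex
\mathrm{BPB} = -\frac{1}{N}\sum_{i=1}^{N} \log_2 p(x_i \mid x_{<i}),
\qquad
\bar{p} = 2^{-\mathrm{BPB}} = 2^{-0.0011} \approx 0.99924
```

Put another way, for a hypothetical 10,000-byte MIDI file the total loss would be about 10,000 × 0.0011 = 11 bits across the entire file.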

Furthermore, in simulating CPU behavior, bGPT achieves over 99.99% accuracy in predicting the CPU’s state after executing a sequence of machine instructions. This capability could support improvements in cybersecurity and software diagnostics.
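To show what simulating CPU behavior means as a byte-prediction task, here is a minimal sketch of a toy CPU whose execution trace is a single byte stream of interleaved instructions and register states. The three-opcode instruction set, four one-byte registers, trace layout, and function names are all hypothetical and much simpler than the CPU States data described in the paper; a byte model would be trained to predict each state’s bytes from everything before it.

```python
NUM_REGS = 4                      # hypothetical: four one-byte registers
MOV, ADD, SUB = 0x01, 0x02, 0x03  # hypothetical opcodes


def step(regs: bytes, instr: tuple[int, int, int]) -> bytes:
    """Execute one 3-byte instruction (opcode, dst, operand) and return the new state."""
    op, dst, operand = instr
    r = bytearray(regs)
    if op == MOV:    # dst <- immediate value
        r[dst] = operand
    elif op == ADD:  # dst <- dst + src register, wrapping at 256
        r[dst] = (r[dst] + r[operand]) % 256
    elif op == SUB:  # dst <- dst - src register, wrapping at 256
        r[dst] = (r[dst] - r[operand]) % 256
    else:
        raise ValueError(f"unknown opcode {op:#04x}")
    return bytes(r)


def run(program: list[tuple[int, int, int]],
        regs: bytes = bytes(NUM_REGS)) -> tuple[bytes, bytes]:
    """Return the final state and a trace: state0, instr1, state1, instr2, state2, ..."""
    trace = bytearray(regs)
    for instr in program:
        trace += bytes(instr)
        regs = step(regs, instr)
        trace += regs
    return regs, bytes(trace)


program = [(MOV, 0, 5), (MOV, 1, 7), (ADD, 0, 1), (SUB, 1, 0)]
final, trace = run(program)
print(list(final))  # [12, 251, 0, 0]
print(len(trace))   # 32
```

Each state in the trace is fully determined by the previous state and instruction, so a model that has learned the instruction semantics can, in principle, predict every state byte correctly.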

The Implications of bGPT’s Capabilities

The capabilities of bGPT extend beyond academic curiosity. A model that can simulate the inner workings of digital systems could offer useful insights in several fields. Let’s explore some of the key implications of bGPT’s capabilities:

1. Cybersecurity: A model that understands binary data at the byte level could strengthen cybersecurity. It might detect patterns and anomalies in binary files, supporting more effective malware detection and threat analysis.

2. Software Diagnostics: bGPT’s proficiency in simulating CPU behavior and modeling algorithms could improve software diagnostics, for example by helping to identify performance bottlenecks, optimize code, and troubleshoot complex software issues.

3. Algorithm Development and Optimization: A model that can predict and simulate algorithm behavior could aid in developing and optimizing algorithms, for instance by comparing different algorithmic approaches and pointing to where efficiency or accuracy might improve.

4. Hardware Design and Optimization: bGPT’s understanding of binary data could be applied to hardware design. Simulating hardware behavior and predicting system performance might help engineers design more efficient and reliable components.

5. Data Compression and Encoding: Next-byte prediction is closely tied to compression, since a model that predicts bytes well can encode them in fewer bits. By identifying redundancies in binary data, bGPT could contribute to advances in compression and encoding techniques and inform more efficient compression algorithms.

The Future of bGPT and Byte Models

The advent of bGPT marks a significant moment in deep learning and AI. By bridging the gap between human-interpretable data and the vast body of binary information, bGPT points toward a new era of digital simulation. Its accuracy in modeling and predicting the behavior of digital systems underscores the potential of byte models.

As researchers continue to explore the capabilities of bGPT and refine byte models, we can expect further advancements in fields such as cybersecurity, software diagnostics, algorithm development, and hardware optimization. The mysteries of the digital universe are gradually being unraveled, thanks to the remarkable progress in deep learning and models like bGPT.

In conclusion, bGPT represents a significant step forward in our ability to simulate and understand the digital world. Its next-byte prediction capabilities and strong grasp of binary data offer new opportunities for innovation and problem-solving. As we harness the power of bGPT and byte models, we move closer to unlocking the full potential of AI in shaping the future of technology.

Check out the Paper. All credit for this research goes to the researchers of this project.