AI Hallucination Detection with KnowHalu

Artificial Intelligence (AI) has made significant advances in recent years, particularly with the development of Large Language Models (LLMs). These models can generate coherent, contextually appropriate text, which makes them valuable in a wide range of applications. However, there is growing concern about hallucinations in LLM-generated text: instances where a model produces information that sounds plausible but is, in fact, incorrect or irrelevant.

The problem is especially critical in fields that demand high factual accuracy, such as medicine and finance. Imagine relying on AI-generated information to make an important healthcare decision, only to discover that it was misleading or entirely fabricated. To make AI-generated content reliable, these hallucinations must be detected and managed effectively.

Existing Approaches

Several methods have been developed to address hallucinations in LLM-generated text. Early techniques focused on internal consistency checks, in which multiple responses from the model were compared with each other to spot contradictions. This was useful to a point, but because it relied solely on the knowledge stored within the model itself, it could not catch errors the model made consistently.
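A minimal sketch of an internal consistency check follows, assuming we already have several answers sampled from the same model for one question. The function names `normalize`, `consistency_score`, and `flag_inconsistent`, and the 0.6 threshold, are hypothetical; real systems usually compare answers semantically rather than by the exact string match used here.

```python
from collections import Counter


def normalize(answer: str) -> str:
    """Lowercase and drop punctuation so trivially different answers match."""
    kept = "".join(ch for ch in answer.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def consistency_score(sampled_answers: list[str]) -> float:
    """Fraction of samples that agree with the most common normalized answer."""
    if not sampled_answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(normalize(a) for a in sampled_answers)
    _, top_count = counts.most_common(1)[0]
    return top_count / len(sampled_answers)


def flag_inconsistent(sampled_answers: list[str], threshold: float = 0.6) -> bool:
    """Flag a possible hallucination when the samples disagree too much."""
    return consistency_score(sampled_answers) < threshold


samples = ["Paris.", "paris", "Lyon", "Paris"]
print(consistency_score(samples))  # 0.75
print(flag_inconsistent(samples))  # False
```

Because every sample comes from the same model, an answer that is wrong but repeated consistently scores as fully consistent, which is exactly the limitation described above.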

Later approaches used the model's hidden states or output probabilities to identify likely errors, on the idea that signals of low confidence reveal where the model's knowledge diverges from the facts. These methods, however, were still bounded by how comprehensive and up to date the model's stored knowledge was.
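As a rough illustration of the probability-based idea, the sketch below flags an answer when any of its tokens was generated with low probability. It assumes per-token log-probabilities are already available from the model; the function names and the 0.2 threshold are hypothetical choices, not taken from any specific method.

```python
import math


def sequence_confidence(token_logprobs: list[float]) -> dict[str, float]:
    """Summarize the per-token log-probabilities of one generated answer."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return {
        "mean_logprob": sum(token_logprobs) / len(token_logprobs),
        "min_prob": math.exp(min(token_logprobs)),  # probability of the least likely token
    }


def flag_low_confidence(token_logprobs: list[float], min_prob_threshold: float = 0.2) -> bool:
    """Flag the answer if any single token was generated with low probability."""
    return sequence_confidence(token_logprobs)["min_prob"] < min_prob_threshold


# Hypothetical log-probabilities for a three-token answer.
logprobs = [math.log(0.9), math.log(0.8), math.log(0.05)]
print(flag_low_confidence(logprobs))  # True
```

A model can also be confidently wrong about facts it learned incorrectly or never saw, which is why signals like these stay limited by the model's stored knowledge.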

To overcome these limits, some researchers turned to post-hoc fact-checking, which brings in external data sources to verify the generated content. Checking claims against external knowledge bases improved accuracy, but these methods often struggled with complex queries and intricate factual details.
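A minimal sketch of post-hoc fact-checking against an external knowledge base is shown below. It assumes claims have already been extracted from the generated text as (subject, relation, object) triplets; the tiny in-memory `KNOWLEDGE_BASE` and the `check_claim` function are hypothetical stand-ins for a real knowledge source and verifier.

```python
# Hypothetical external knowledge base of (subject, relation, object) triplets.
KNOWLEDGE_BASE = {
    ("eiffel tower", "located_in", "paris"),
    ("eiffel tower", "completed_in", "1889"),
}


def check_claim(subject: str, relation: str, obj: str, kb=KNOWLEDGE_BASE) -> str:
    """Return 'supported', 'contradicted', or 'unverifiable' for one extracted claim."""
    key = (subject.lower(), relation)
    known_objects = {o for (s, r, o) in kb if (s, r) == key}
    if not known_objects:
        return "unverifiable"  # the knowledge base says nothing about this fact
    return "supported" if obj.lower() in known_objects else "contradicted"


print(check_claim("Eiffel Tower", "located_in", "Paris"))    # supported
print(check_claim("Eiffel Tower", "completed_in", "1901"))   # contradicted
print(check_claim("Louvre", "located_in", "Paris"))          # unverifiable
```

The lookup itself is easy; the hard part is turning a complex, multi-part answer into the right claims and queries in the first place, which is where such methods tend to struggle.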

Introducing KnowHalu: A Novel AI Approach

Recognizing the limitations of existing methods, a team of researchers from the University of Illinois Urbana-Champaign, UChicago, and UC Berkeley has developed a new method named KnowHalu. It is designed specifically to detect hallucinations in AI-generated text, and to do so more accurately than previous approaches.

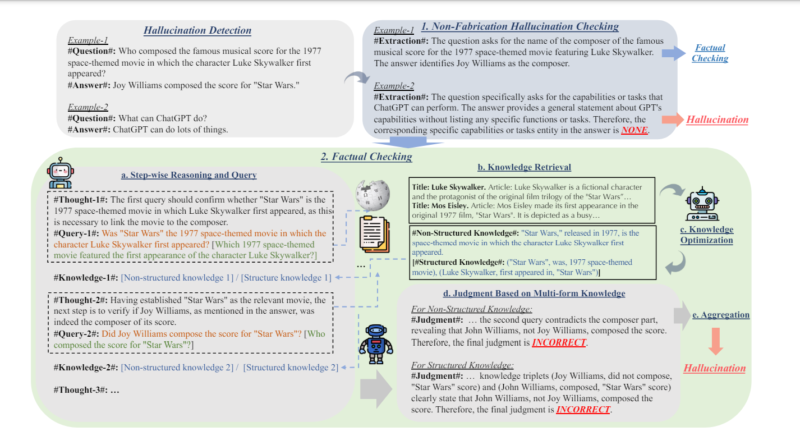

KnowHalu works in two phases that combine internal and external analysis. The first phase checks for non-fabrication hallucinations: responses that are technically accurate but do not adequately address the query, for example because they are too vague to answer it. This initial check screens for responses that are not relevant or specific enough to the question.
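The sketch below illustrates the shape of a first-phase screen. In practice this judgment would come from a language model; here a hypothetical rule-based `toy_specificity_judge` stands in for it so the example runs on its own. The names, the rule, and the returned labels are illustrative assumptions, not KnowHalu's actual prompts or outputs.

```python
import re
from typing import Callable

# A judge maps (question, answer) to True when the answer is relevant and specific.
Judge = Callable[[str, str], bool]


def toy_specificity_judge(question: str, answer: str) -> bool:
    """Hypothetical stand-in for an LLM judge.

    Rule: a "which year"/"what year" question needs an explicit four-digit year;
    any other question only needs a non-empty answer.
    """
    q = question.lower()
    if "which year" in q or "what year" in q:
        return re.search(r"\b\d{4}\b", answer) is not None
    return bool(answer.strip())


def non_fabrication_check(question: str, answer: str,
                          judge: Judge = toy_specificity_judge) -> str:
    """Phase-1 screen: flag answers that may be true but do not answer the question."""
    if judge(question, answer):
        return "pass_to_phase_2"
    return "non_fabrication_hallucination"


question = "In which year was the Eiffel Tower completed?"
print(non_fabrication_check(question, "It was completed in the late 19th century."))
# non_fabrication_hallucination
print(non_fabrication_check(question, "It was completed in 1889."))
# pass_to_phase_2
```

The first answer is not false, but it does not give the year that was asked for, which is the kind of response this phase is meant to catch. Passing the judge in as a parameter makes it easy to swap the toy rule for a model-based judge.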

The second phase of KnowHalu's approach is where it truly shines. It performs a deeper factual analysis using both structured and unstructured external knowledge sources. First, the original query is broken down into simpler sub-queries, which allows targeted retrieval of relevant information from various knowledge bases. Each piece of retrieved information is then optimized into different forms of knowledge, including semantic sentences and knowledge triplets, and evaluated by a judgment mechanism that considers each of these forms.
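The second-phase flow can be sketched roughly as follows, under heavy simplifying assumptions. The sub-queries are written by hand as templates (in a full system a model would generate them), retrieval is a dictionary lookup in a hypothetical `RETRIEVED` store, and the two judges are toy string checks. Each hop's answer fills in the next sub-query, and the final answer is judged separately against sentence-form and triplet-form knowledge. None of these names come from the KnowHalu paper.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Evidence:
    """Knowledge retrieved for one sub-query, kept in two forms."""
    sentences: list[str] = field(default_factory=list)                  # unstructured
    triplets: list[tuple[str, str, str]] = field(default_factory=list)  # structured


# Hypothetical retrieval results, standing in for search over real knowledge bases.
RETRIEVED = {
    "Which city is the Eiffel Tower in?": Evidence(
        sentences=["The Eiffel Tower is a landmark in Paris."],
        triplets=[("Eiffel Tower", "located_in", "Paris")],
    ),
    "Which country is Paris in?": Evidence(
        sentences=["Paris is the capital of France."],
        triplets=[("Paris", "capital_of", "France")],
    ),
}


def hop_answer(evidence: Evidence) -> Optional[str]:
    """Use the object of the first retrieved triplet as this hop's answer."""
    return evidence.triplets[0][2] if evidence.triplets else None


def judge_with_sentences(answer: str, evidence: Evidence) -> bool:
    """Toy judge: supported if some retrieved sentence mentions the answer."""
    return any(answer.lower() in s.lower() for s in evidence.sentences)


def judge_with_triplets(answer: str, evidence: Evidence) -> bool:
    """Toy judge: supported if the answer is the object of some retrieved triplet."""
    return any(answer.lower() == obj.lower() for _, _, obj in evidence.triplets)


def verify(answer: str, sub_query_templates: list[str]) -> dict[str, bool]:
    """Resolve sub-queries hop by hop, then judge the answer with each knowledge form."""
    previous: Optional[str] = None
    evidence = Evidence()
    for template in sub_query_templates:
        query = template.format(previous) if previous is not None else template
        evidence = RETRIEVED.get(query, Evidence())
        previous = hop_answer(evidence)
    return {
        "sentences": judge_with_sentences(answer, evidence),
        "triplets": judge_with_triplets(answer, evidence),
    }


# "In which country is the city where the Eiffel Tower stands?" split into two hops.
TEMPLATES = ["Which city is the Eiffel Tower in?", "Which country is {} in?"]
print(verify("France", TEMPLATES))   # {'sentences': True, 'triplets': True}
print(verify("Belgium", TEMPLATES))  # {'sentences': False, 'triplets': False}
```

The two toy judges can disagree: for the answer "Paris", the substring judge accepts it because the retrieved sentence mentions Paris, while the triplet judge rejects it. Disagreements like this are one reason to judge each form separately before deciding.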

By drawing on knowledge from multiple sources and in multiple forms, KnowHalu performs a thorough factual validation, strengthening the reasoning behind each verdict and making its hallucination detection more accurate. This approach significantly improves the detection of hallucinations in AI-generated text.
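One simple way to combine verdicts from several knowledge forms is to trust the most confident conclusive judgment. The sketch below implements that rule; it is an illustrative assumption, not necessarily how KnowHalu aggregates its judgments, and the `Judgment` fields and verdict labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Judgment:
    source: str        # which knowledge form produced it, e.g. "sentences" or "triplets"
    verdict: str       # "correct", "hallucinated", or "inconclusive"
    confidence: float  # the judge's confidence in [0, 1]


def aggregate(judgments: list[Judgment]) -> str:
    """Return the verdict of the most confident conclusive judgment.

    Inconclusive judgments are ignored; on a confidence tie, the first one listed wins.
    """
    conclusive = [j for j in judgments if j.verdict != "inconclusive"]
    if not conclusive:
        return "inconclusive"
    best = max(conclusive, key=lambda j: j.confidence)
    return best.verdict


votes = [
    Judgment("sentences", "correct", 0.55),
    Judgment("triplets", "hallucinated", 0.90),
]
print(aggregate(votes))  # hallucinated
```

Other reasonable rules exist, such as flagging any answer that either form rejects; the more conservative choice trades more false alarms for fewer missed hallucinations.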

The Effectiveness of KnowHalu

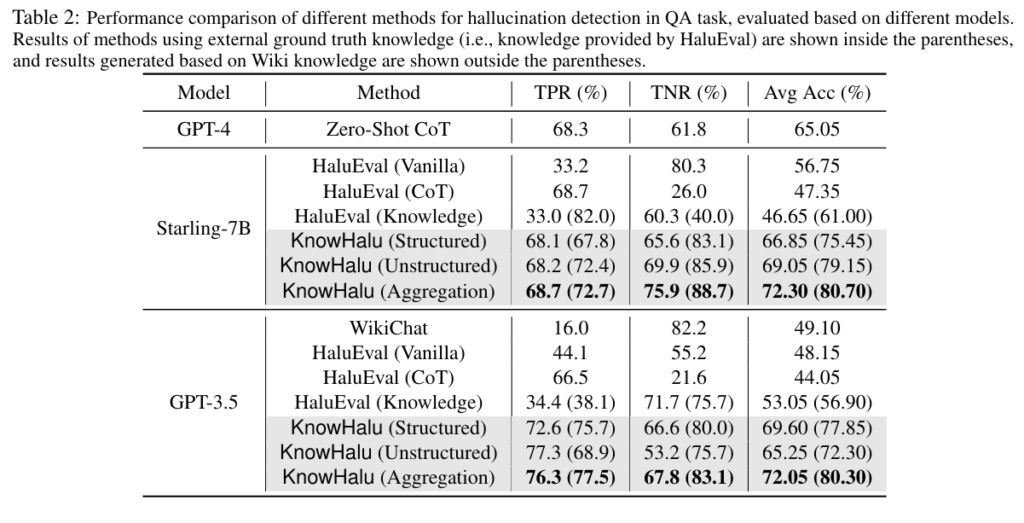

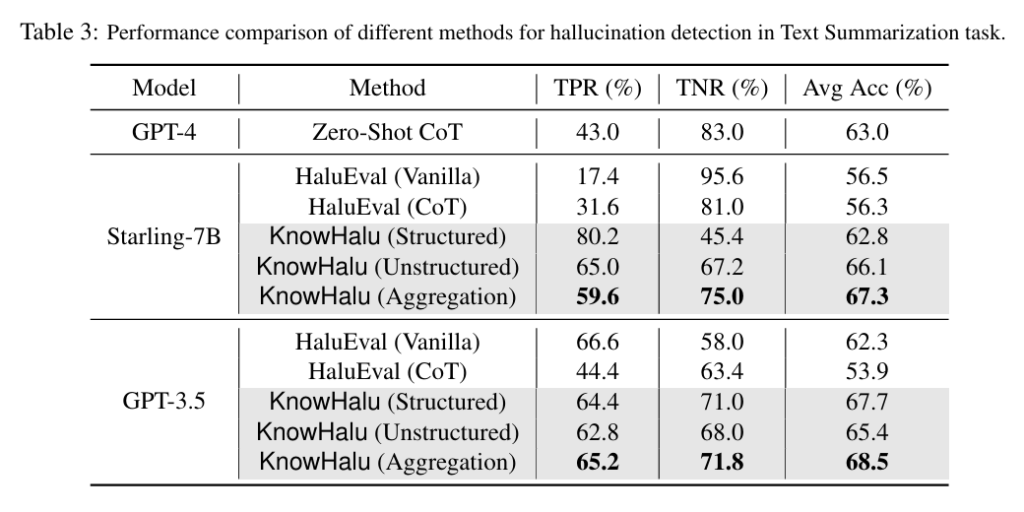

To assess KnowHalu's effectiveness, the researchers tested it on several tasks, including question answering and text summarization, and compared it against existing state-of-the-art methods. KnowHalu showed clear improvements in detecting hallucinations.

In question-answering tasks, KnowHalu achieved a 15.65% improvement in accuracy over previous techniques. This matters because a stronger detector catches more responses that look plausible but are factually wrong, so fewer unreliable answers reach users.

Similarly, in text summarization tasks, KnowHalu outperformed existing methods with a 5.50% increase in accuracy. Better detection here means that more summaries containing unsupported or incorrect content are flagged before anyone relies on them.
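The article does not say whether the 15.65% and 5.50% figures are absolute gains in percentage points or gains relative to the baseline, and the two readings differ. The sketch below shows how a detector's accuracy is computed on labeled examples and how a single gain can be expressed both ways. The function names are hypothetical and the numbers are made up for illustration, not results from the paper.

```python
def detection_accuracy(predictions: list[bool], labels: list[bool]) -> float:
    """Share of examples where the detector's hallucination flag matches the gold label."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and of equal length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def improvement(baseline: float, new: float) -> dict[str, float]:
    """Express an accuracy gain in percentage points and relative to the baseline."""
    return {
        "points": round((new - baseline) * 100, 2),
        "relative_pct": round((new - baseline) / baseline * 100, 2),
    }


# Toy labels: True means "this response contains a hallucination".
print(detection_accuracy([True, False, True, True], [True, False, False, True]))  # 0.75

# Made-up accuracies: the same gain reads as 9 points or as 15% relative.
print(improvement(0.60, 0.69))  # {'points': 9.0, 'relative_pct': 15.0}
```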

These results suggest that KnowHalu raises the bar for verifying and trusting AI-generated content. It broadens the potential use of LLMs in critical, information-sensitive fields where accuracy and reliability matter most.

Conclusion

The development of LLMs has revolutionized the field of artificial intelligence, enabling machines to generate coherent and contextually appropriate text. However, the issue of hallucinations in AI-generated content poses a significant challenge to the reliability and trustworthiness of these models.

Fortunately, researchers have been actively working on this problem. KnowHalu has emerged as a powerful approach for detecting hallucinations in LLM-generated text. Through its two-phase process and its use of external knowledge sources, it substantially improves detection accuracy, helping users identify information that is not factual or reliable.

The introduction of KnowHalu represents a significant advance in artificial intelligence. It paves the way for safer and more dependable AI interactions in domains such as healthcare, finance, and other information-sensitive applications.

As AI continues to evolve, it is crucial to prioritize the development of methods like KnowHalu that enhance the accuracy and reliability of AI-generated content. By doing so, we can fully embrace the potential of AI while also ensuring that the information it produces is trustworthy and beneficial to society.

Check out the Paper. All credit for this research goes to the researchers of this project.
