CinePile: A Novel Dataset and Benchmark for Long-Form Video Understanding

Video understanding is a rapidly evolving field in artificial intelligence (AI) research, focusing on enabling machines to comprehend and analyze visual content. From autonomous driving to surveillance and entertainment industries, advancements in video understanding have crucial applications in various domains. However, the complexity of interpreting dynamic and multi-faceted visual information has posed significant challenges in developing robust video comprehension systems. Traditional models struggle to accurately analyze temporal aspects, object interactions, and plot progression within scenes. To address these limitations, researchers have introduced CinePile, a novel dataset and benchmark specifically designed for authentic long-form video understanding.

The Challenges of Video Understanding

Video understanding involves tasks such as object recognition, human action understanding, and event interpretation within videos. These tasks require AI models to process and comprehend visual information, which is inherently complex and diverse. Traditional approaches often rely on large multi-modal models that integrate visual and textual information. However, generating annotated datasets for training these models is a labor-intensive process that is prone to errors, making it less scalable and unreliable.

Existing benchmarks, such as MovieQA and TVQA, offer valuable insights into video understanding. However, they do not cover the full spectrum of video comprehension, particularly in handling complex interactions and events within scenes. There is a need for comprehensive datasets and benchmarks that can challenge AI models’ understanding and reasoning capabilities.

Introducing CinePile: A Comprehensive Video Understanding Benchmark

To bridge the gap between human performance and current AI models, researchers from the University of Maryland and Weizmann Institute of Science have developed CinePile. This novel dataset and benchmark leverage automated question template generation to create a large-scale, long-video understanding benchmark. By integrating visual and textual data, CinePile aims to provide a comprehensive dataset that pushes the boundaries of AI models’ understanding and reasoning capabilities.

Dataset Curation Process

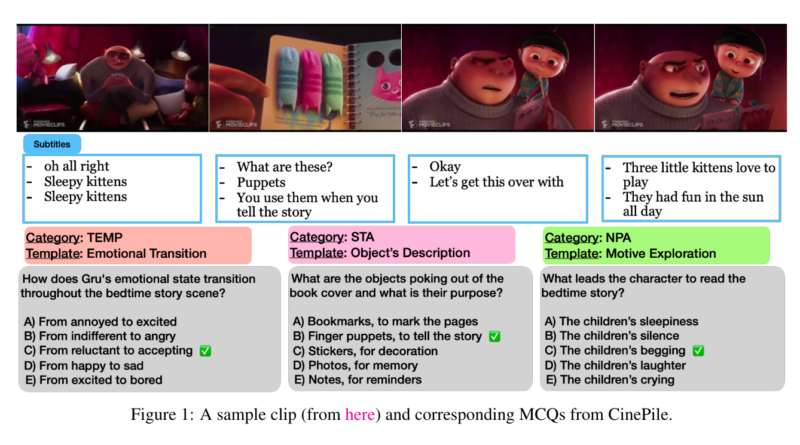

The creation of the CinePile dataset involves a multi-stage process that ensures the dataset’s comprehensiveness and diversity. Initially, raw video clips are collected and annotated with scene descriptions. A binary classification model distinguishes between dialogue and visual descriptions. These annotations are used to generate question templates through a language model, which are then applied to the video scenes to create comprehensive question-answer pairs.

The dataset curation process also involves shot detection algorithms to identify and annotate important frames using the Gemini Vision API. The concatenated text descriptions produce a visual summary of each scene. This summary then generates long-form questions and answers, focusing on various aspects such as character dynamics, plot analysis, thematic exploration, and technical details.

Evaluating AI Models with CinePile

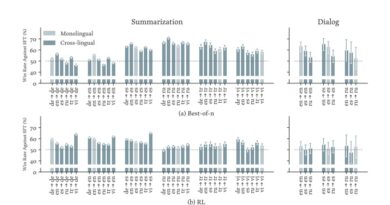

The CinePile benchmark consists of approximately 300,000 questions in the training set and about 5,000 questions in the test split. The evaluation of current video-centric AI models, both open-source and proprietary, has revealed that even state-of-the-art systems need improvement to catch up to human performance. For example, models often produce verbose responses instead of concise answers, indicating the need for better adherence to instructions.

Open-source models like Llava 1.5-13B, OtterHD, mPlug-Owl, and MinGPT-4 have shown high fidelity in image captioning but struggle with hallucinations and unnecessary text snippets. These challenges highlight the complexity and intricacies involved in video understanding tasks, emphasizing the need for more sophisticated models and evaluation methods.

Implications and Future Research

The introduction of CinePile addresses a critical gap in video understanding by providing a comprehensive dataset and benchmark. This innovative approach enhances the ability to generate diverse and contextually rich questions about videos, paving the way for more advanced and scalable video comprehension models. By integrating multi-modal data and automated processes, CinePile sets a new standard for evaluating video-centric AI models.

The availability of the CinePile dataset and benchmark encourages researchers and developers to explore novel approaches to long-form video understanding. Future research may focus on developing more effective models for temporal analysis, object interactions, and plot progression within scenes. Additionally, advancements in natural language processing and computer vision techniques can further enhance AI models’ ability to comprehend and reason about video content.

Conclusion

CinePile, a novel dataset and benchmark specifically designed for authentic long-form video understanding, addresses the challenges faced by traditional models in comprehending dynamic visual information. By leveraging automated question template generation and multi-modal data integration, CinePile provides a comprehensive dataset that challenges AI models’ understanding and reasoning capabilities. The availability of this benchmark sets a new standard for evaluating video-centric AI models and drives future research and development in the field of video understanding.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on LinkedIn. Do join our active AI community on Discord.

Explore 3600+ latest AI tools at AI Toolhouse 🚀.

If you like our work, you will love our Newsletter 📰