Project ALPINE Reveals New Frontiers in AI Planning with Transformers

Large Language Models (LLMs) have gained significant attention for their capabilities in tasks such as language processing, reasoning, coding, and planning. These models are built on the Transformer neural network architecture and are trained autoregressively, meaning they learn to predict the next word in a sequence. Their strong performance has sparked researchers’ interest in understanding the underlying mechanisms that make such capabilities possible.

One aspect of human intelligence that has been the focus of recent research is planning. Planning plays a vital role in tasks like project organization, travel planning, and mathematical theorem proving. By studying how LLMs perform planning tasks, researchers aim to bridge the gap between basic next-word prediction and more sophisticated intelligent behaviors.

Project ALPINE: Autoregressive Learning for Planning In NEtworks

In a recent study, researchers presented the findings of Project ALPINE, which stands for “Autoregressive Learning for Planning In NEtworks.” The project delves into the autoregressive learning mechanisms of Transformer-based language models and their role in developing planning capabilities. The researchers’ objective is to identify potential shortcomings in the planning capabilities of these models and uncover insights that can further enhance their performance.

Understanding Planning in LLMs

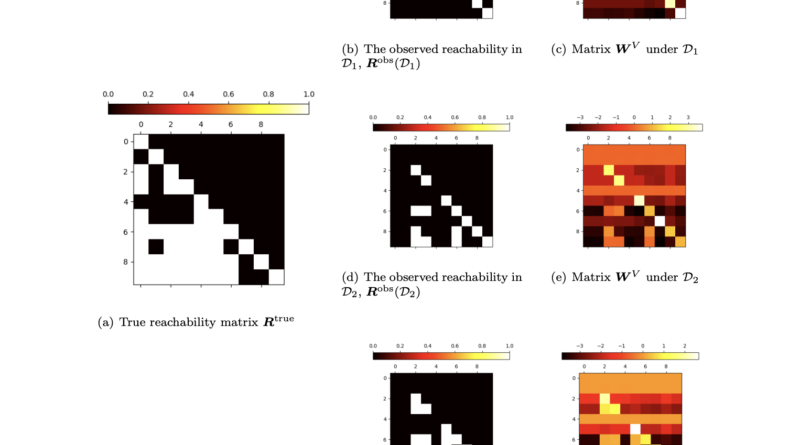

To explore the planning capabilities of LLMs, the research team framed planning as a path-finding task on a network (a graph of nodes and edges). Given a source node and a target node, the goal is to generate a valid path from the source to the target. The researchers found that Transformers can perform this task by encoding the graph's adjacency matrix (which nodes are directly connected) and reachability matrix (which nodes can eventually be reached from which) within their weights.
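To make the path-finding setup concrete, here is a minimal sketch in Python. It is an illustration of the idea described above, not the researchers' implementation: the function names `reachability` and `find_path`, the example graph, and the assumption that the graph is a directed acyclic graph are all hypothetical choices made for this example. At each step, the next node is chosen so that it is adjacent to the current node and either is the target or can reach it.

```python
import numpy as np

def reachability(adj):
    """Transitive closure (Warshall's algorithm).

    reach[i, j] is True if some path of length >= 1 leads from i to j.
    """
    reach = adj.astype(bool).copy()
    n = reach.shape[0]
    for k in range(n):
        # Allow node k as an intermediate stop between i and j.
        reach |= reach[:, k][:, None] & reach[k, :][None, :]
    return reach

def find_path(adj, reach, source, target):
    """Build a path one node at a time, as an autoregressive model would.

    Assumes a directed acyclic graph (an assumption of this sketch).
    Returns None if no valid next node exists.
    """
    n = adj.shape[0]
    path = [source]
    current = source
    while current != target:
        candidates = [
            k for k in range(n)
            if adj[current, k] and (k == target or reach[k, target])
        ]
        if not candidates or len(path) > n:
            return None
        current = candidates[0]
        path.append(current)
    return path

# Hypothetical example graph: 0->1 (dead end), 0->2, 2->3, 3->4.
adj = np.zeros((5, 5), dtype=bool)
for u, v in [(0, 1), (0, 2), (2, 3), (3, 4)]:
    adj[u, v] = True
reach = reachability(adj)

print(find_path(adj, reach, 0, 4))  # [0, 2, 3, 4]
```

Node 1 is adjacent to node 0 but cannot reach node 4, so the reachability check filters it out. This is why both matrices matter: adjacency alone says which steps are legal, while reachability says which legal steps lead toward the target.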

The team conducted theoretical investigations into the gradient-based learning dynamics of Transformers. They discovered that these models can learn both a condensed version of the reachability matrix and the adjacency matrix. Experimental validations of these theoretical findings further supported the team’s conclusions, highlighting the practical applicability of the proposed methodology.

In addition to the theoretical investigations, the researchers applied their methodology to Blocksworld, a real-world planning benchmark. The outcomes of these experiments reinforced their primary conclusions and demonstrated the potential of Transformers for handling more complex planning tasks.

Limitations of Transformers in Path-Finding

While Transformers exhibit promising planning capabilities, the study also identified a drawback. Transformers are unable to recognize reachability links through transitivity: knowing that node A can reach node B and that node B can reach node C does not by itself let the model conclude that A can reach C. As a result, they struggle to create complete paths whose connections span multiple intermediate nodes, and they may fail to produce the correct path when it requires an awareness of connections that extend beyond immediate neighbors.
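The gap between what is seen and what follows by transitivity can be illustrated with a small Python sketch. This is a simplified reading of the limitation, not the study's formal definition: it assumes, for illustration only, that a reachability link (node, target) is recorded only when the node appears on a training path ending at that target. The function names and training paths are hypothetical.

```python
def observed_reachability(paths):
    """Record (node, target) pairs that co-occur in a training path.

    Assumption of this sketch: the last node of each path is its target.
    """
    observed = set()
    for path in paths:
        target = path[-1]
        for node in path[:-1]:
            observed.add((node, target))
    return observed

def transitive_closure(pairs):
    """Add (a, d) whenever (a, b) and (b, d) are both present, until stable."""
    closure = set(pairs)
    changed = True
    while changed:
        changed = False
        for a, b in list(closure):
            for c, d in list(closure):
                if b == c and (a, d) not in closure:
                    closure.add((a, d))
                    changed = True
    return closure

# Two hypothetical training paths that share node 2.
training_paths = [[0, 1, 2], [2, 3, 4]]

observed = observed_reachability(training_paths)
closure = transitive_closure(observed)

# Links that hold by transitivity but were never observed directly.
missing = sorted(closure - observed)
print(missing)  # [(0, 4), (1, 4)]
```

Here the pairs (0, 2) and (2, 4) are both observed, yet (0, 4) is not. Under the assumption above, a model that relies only on observed links would have no evidence that node 0 can reach node 4, even though joining the two training paths yields a valid route.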

Implications and Future Directions

The research on ALPINE and autoregressive learning for planning in networks sheds light on the fundamental workings of LLMs. It deepens our understanding of the planning capacities of Transformer models and opens avenues for the development of more sophisticated AI systems capable of handling complex planning tasks.

The findings of Project ALPINE can have significant implications for network design and planning in various fields. By leveraging the autoregressive learning capabilities of LLMs, researchers and practitioners can enhance network planning processes and tackle complex planning challenges more effectively.

Further research in this area could focus on addressing the limitations identified in the study. Finding ways to enable Transformers to recognize reachability links through transitivity would be a crucial step toward improving their path-finding abilities. Additionally, exploring alternative architectures or incorporating external knowledge sources could contribute to the development of more powerful planning models.

Conclusion

The ALPINE project, which investigates autoregressive learning for planning in networks, provides valuable insights into the planning capabilities of large language models based on the Transformer architecture. By understanding how these models perform planning tasks, researchers can advance the development of more sophisticated AI systems that excel at complex planning tasks.

The research highlights the potential of Transformers in network design and planning, while also identifying limitations that need to be addressed. Overall, ALPINE contributes to our knowledge of autoregressive learning and its role in enabling intelligent planning behaviors in language models.

Check out the Paper. All credit for this research goes to the researchers of this project.