Meet Graph-Mamba: A Novel Graph Model that Leverages State Space Models (SSM) for Efficient Data-Dependent Context Selection

Graph modeling remains a challenging task, especially when it comes to capturing long-range dependencies and scaling to large graphs. Traditional graph neural networks (GNNs) have made significant progress on both fronts. However, the high computational cost of existing approaches has led researchers to look for more efficient graph models.

In this article, we introduce Graph-Mamba, a graph model that leverages state space models (SSMs) to enhance long-range context modeling and to select data-dependent context efficiently. We cover its key features, advantages, and potential applications.

The Challenge of Long-Range Dependency Modeling in Graphs

Graphs are widely used to represent complex relationships and dependencies in various domains such as social networks, molecular structures, and recommendation systems. Modeling long-range dependencies in graphs is crucial for capturing important context information and making accurate predictions.

Traditional GNNs, such as Graph Convolutional Networks (GCNs) and Graph Attention Networks (GATs), have been successful in capturing local dependencies within a fixed neighborhood. However, they struggle to effectively capture long-range dependencies that span across distant nodes in the graph. This limitation can hinder their performance in tasks that require a broader context.

To address this challenge, researchers have turned to state space models (SSMs) like Mamba. SSMs have proven to be effective in modeling long-range dependencies in sequential data, but extending them to non-sequential graph data is not straightforward.
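To make the idea of a state space model concrete, here is a minimal sketch of a discrete linear SSM run over a sequence. It is a generic textbook recurrence, not Mamba's actual implementation, and the matrices `A`, `B`, and `C` hold hypothetical toy values chosen only for illustration.

```python
import numpy as np

def ssm_scan(x, A, B, C):
    """Run the recurrence h_t = A h_{t-1} + B x_t, y_t = C h_t over a 1-D input."""
    h = np.zeros(A.shape[0])
    ys = []
    for x_t in x:               # a single pass, so the cost grows linearly with length
        h = A @ h + B * x_t     # the state summarizes every earlier input
        ys.append(C @ h)
    return np.array(ys)

# Toy parameters (hypothetical values, for illustration only).
A = np.array([[0.9, 0.0], [0.0, 0.5]])
B = np.array([1.0, 1.0])
C = np.array([1.0, 1.0])

x = np.zeros(6)
x[0] = 1.0                      # one impulse at the first position
y = ssm_scan(x, A, B, C)
print(y)                        # the impulse keeps influencing later outputs while decaying
```

Because the hidden state is carried forward step by step, an input at the start of the sequence can still affect outputs far down the line. The difficulty for graphs is that nodes have no natural left-to-right order to scan over.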

Introducing Graph-Mamba: Enhancing Long-Range Context Modeling

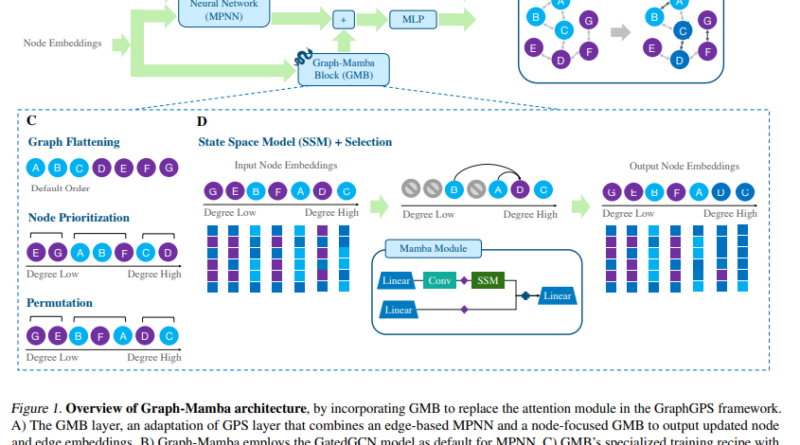

A team of researchers recently introduced Graph-Mamba, a novel graph model that integrates a selective SSM into the GraphGPS framework, offering an efficient solution to input-dependent graph sparsification.

The core of Graph-Mamba is the Graph-Mamba block (GMB), which combines the selection mechanism of the Mamba module with a node prioritization approach. This unique combination enables advanced sparsification and ensures linear-time complexity, making it a powerful alternative to traditional dense graph attention mechanisms.
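As a rough illustration of node prioritization, the sketch below flattens a small graph into a sequence ordered by node degree. Using degree as the priority score, and placing high-priority nodes last, are assumptions made for this example. The function names and the toy edge list are hypothetical and do not come from the Graph-Mamba code.

```python
import numpy as np

def node_degrees(edges, num_nodes):
    """Count the degree of every node in an undirected edge list."""
    degree = np.zeros(num_nodes, dtype=int)
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    return degree

def prioritized_order(degree):
    """Order nodes by ascending degree; the stable sort keeps ties in index order."""
    return np.argsort(degree, kind="stable")

# Hypothetical 5-node graph: node 0 is a hub, node 4 is a leaf.
edges = [(0, 1), (0, 2), (0, 3), (1, 2), (3, 4)]
degree = node_degrees(edges, num_nodes=5)
order = prioritized_order(degree)

# In a left-to-right scan, the node at position t can draw on t + 1 nodes
# (itself plus everything before it), so later positions see more context.
context_size = np.empty(5, dtype=int)
context_size[order] = np.arange(1, 6)

print(order)         # sequence fed to the scan, low-degree nodes first
print(context_size)  # per-node context, indexed by original node id
```

Under this ordering the hub node sits at the end of the sequence and can draw on every other node, while the leaf at the front sees only itself.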

Advantages and Features of Graph-Mamba

Graph-Mamba offers several advantages that set it apart from other graph models:

1. Efficient Context Selection

Graph-Mamba leverages SSMs to adaptively select relevant context information and prioritize crucial nodes. By doing so, it achieves nuanced context-aware sparsification, reducing computational demands while maintaining high performance. This capability is particularly valuable in scenarios where computational resources are limited.
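The following is a simplified sketch of what input-dependent selection can look like in a Mamba-style recurrence. It omits most of the real Mamba block (convolution, gating, hardware-aware scan), and all weights are random placeholders, so treat it as an illustration of the mechanism rather than the model's implementation. The names `selective_scan`, `W_delta`, `W_B`, and `W_C` are assumptions for this example.

```python
import numpy as np

def softplus(z):
    """Smooth positive function used to keep the step size above zero."""
    return np.log1p(np.exp(z))

def selective_scan(x, A, W_delta, W_B, W_C):
    """Toy selective recurrence over a node sequence x of shape (L, d).

    For every step t the parameters are computed from x_t itself:
      delta_t = softplus(x_t @ W_delta)   step size, shape (d,)
      B_t     = x_t @ W_B                 input projection, shape (n,)
      C_t     = x_t @ W_C                 output projection, shape (n,)
      h_t     = exp(delta_t * A) * h_{t-1} + (delta_t * B_t) * x_t
      y_t     = h_t @ C_t
    """
    L, d = x.shape
    h = np.zeros_like(A)                              # state, shape (d, n)
    y = np.empty((L, d))
    for t in range(L):                                # one pass over the sequence
        delta = softplus(x[t] @ W_delta)[:, None]     # (d, 1)
        B_t = (x[t] @ W_B)[None, :]                   # (1, n)
        C_t = x[t] @ W_C                              # (n,)
        h = np.exp(delta * A) * h + (delta * B_t) * x[t][:, None]
        y[t] = h @ C_t
    return y

rng = np.random.default_rng(0)
L, d, n = 6, 4, 3
x = rng.normal(size=(L, d))                   # hypothetical node features, already ordered
A = -np.exp(rng.normal(size=(d, n)))          # negative entries keep the state decaying
W_delta = rng.normal(size=(d, d))
W_B = rng.normal(size=(d, n))
W_C = rng.normal(size=(d, n))
y = selective_scan(x, A, W_delta, W_B, W_C)
```

When `delta_t` is close to zero, the state passes through almost unchanged and the current node is effectively skipped. A large `delta_t` lets the current node overwrite much of the accumulated context. This is the sense in which the context is selected per input rather than fixed in advance.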

2. Improved Scalability

One of the major challenges in graph modeling is scalability. Graph-Mamba addresses this by significantly reducing computational costs compared to traditional dense graph attention models. With its innovative permutation and node prioritization strategies, it outperforms sparse attention methods and rivals dense attention Transformers in terms of both training and inference efficiency.
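One plausible reading of combining permutation with node prioritization is to keep the priority order fixed while randomizing the order of nodes that share the same priority. The sketch below assumes node degree as the priority and random tie-breaking, which are assumptions for illustration. The `degree` values and the function name are hypothetical.

```python
import numpy as np

def permuted_priority_order(degree, rng):
    """Sort nodes by ascending degree, breaking ties in random order."""
    tie_break = rng.random(len(degree))
    # np.lexsort treats the LAST key as the primary one, so degree decides the
    # order and the random key only reorders nodes with equal degree.
    return np.lexsort((tie_break, degree))

degree = np.array([3, 2, 2, 2, 1])        # hypothetical node degrees
rng = np.random.default_rng(0)
orders = [permuted_priority_order(degree, rng) for _ in range(4)]

for order in orders:
    print(order, degree[order])           # degrees stay sorted, tied nodes may swap
```

Shuffling only within ties keeps the prioritization intact while discouraging the model from relying on an arbitrary node index order.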

3. Superior Performance

Graph-Mamba has been evaluated across diverse datasets covering image classification, synthetic graphs, and 3D molecular structures. In these evaluations, it consistently demonstrates strong performance and efficiency across a range of graph sizes and complexities.

4. Reduced Memory Consumption

Efficient memory utilization is crucial in graph modeling, especially for large-scale graphs. Graph-Mamba performs well here, achieving a 74% reduction in GPU memory consumption on the Peptides-func dataset. Lower memory requirements make the model more practical to train and deploy.

Applications of Graph-Mamba

Graph-Mamba’s innovative approach to graph modeling opens up new possibilities and applications across various domains. Some of the potential applications of Graph-Mamba include:

1. Social Network Analysis

Social networks often exhibit complex relationships and dependencies that span across multiple individuals. Graph-Mamba’s ability to capture long-range dependencies efficiently makes it an ideal choice for social network analysis tasks such as community detection, link prediction, and influence analysis.

2. Drug Discovery and Molecular Analysis

In the field of drug discovery and molecular analysis, understanding the interactions between atoms and molecules is crucial. Graph-Mamba’s superior performance in handling 3D molecular structures makes it a valuable tool for tasks like molecular property prediction, drug-target interaction prediction, and molecular synthesis planning.

3. Recommendation Systems

Recommendation systems rely on understanding user preferences and item relationships. Graph-Mamba’s ability to model long-range dependencies in recommendation graphs can greatly improve the accuracy and relevance of recommendations, leading to enhanced user experiences.

Conclusion

Graph-Mamba represents a significant advancement in the field of graph modeling. By leveraging state space models (SSMs) and input-dependent sparsification, Graph-Mamba addresses the challenges of long-range dependency modeling and scalability. Its efficient context selection, improved scalability, and strong performance make it a promising solution for a wide range of graph modeling tasks.

As the field of graph modeling continues to evolve, Graph-Mamba paves the way for more efficient and effective approaches. Its integration of SSMs into the graph modeling framework opens up new avenues for research and application, promising to reshape the future of computational graph analysis.

Check out the Paper. All credit for this research goes to the researchers of this project.
