Falcon 2-11B: Harnessing the Power of 5.5T Tokens in AI Development

The Technology Innovation Institute (TII) in Abu Dhabi has unveiled Falcon 2-11B, the first artificial intelligence (AI) model of the Falcon 2 family. The language model has 11 billion parameters and was trained on 5.5 trillion tokens, a large corpus for a model of this size. The release also includes a vision language model (VLM) variant that accepts images as well as text, extending the family beyond text-only tasks.

Falcon 2-11B: A Language Model Trained at Scale

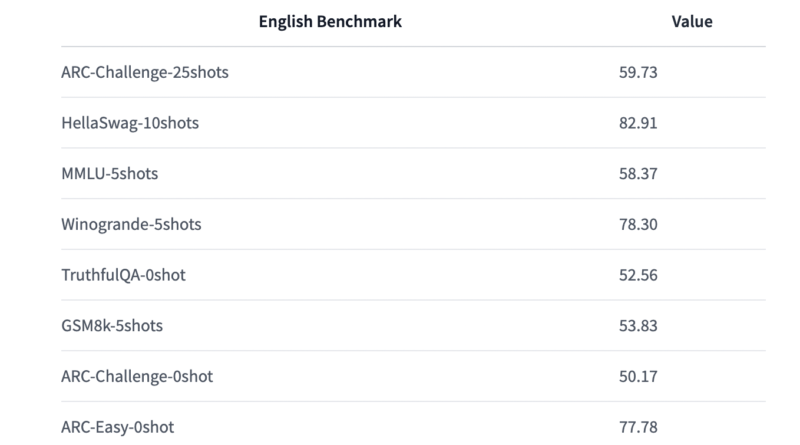

The Falcon 2-11B model, developed by TII, is a causal decoder-only model with 11 billion parameters. It was trained on a corpus of 5.5 trillion tokens that combines RefinedWeb, TII's filtered web dataset, with curated corpora. This breadth of training data underpins its performance across a wide range of natural language processing tasks.
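To put the two headline numbers in perspective, the following is a minimal sketch that computes the tokens-per-parameter ratio and a rough training-compute estimate. The function name `training_scale` is illustrative, and the "6 FLOPs per parameter per token" figure is a widely used rule of thumb assumed here, not a number published by TII.

```python
def training_scale(params: float, tokens: float) -> dict:
    """Return tokens-per-parameter and a rough training-compute estimate."""
    return {
        "tokens_per_param": tokens / params,
        # Assumption: ~6 FLOPs per parameter per training token
        # (a common approximation for dense transformers).
        "approx_train_flops": 6 * params * tokens,
    }


# Figures from the article: 11 billion parameters, 5.5 trillion tokens.
falcon2 = training_scale(params=11e9, tokens=5.5e12)

print(f"{falcon2['tokens_per_param']:.0f} tokens per parameter")
print(f"{falcon2['approx_train_flops']:.2e} FLOPs (rough estimate)")
```

The ratio works out to roughly 500 tokens per parameter. The compute figure is only an order-of-magnitude estimate under the stated assumption.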

Unleashing the Power of Vision Language Models

Alongside the text-only model, the Falcon 2 release includes a vision language model (VLM) variant of Falcon 2-11B. This variant combines natural language processing with image understanding, so it can take an image together with a text prompt and respond in text. Visual information gives the model additional context for interpreting the accompanying text, which opens up new applications across various industries.

The Falcon 2 Family: Advancing Language Models on Multiple Fronts

Falcon 2-11B is the first member of the Falcon 2 family, the successor to TII's first-generation Falcon models, Falcon-40B and Falcon-7B. Falcon-40B has already made its mark as a leading model on the Open LLM Leaderboard. Falcon-7B is a more accessible option that requires only around 15GB of GPU memory, making it suitable for inference and fine-tuning even on consumer hardware.
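The memory figure above can be sanity-checked with simple arithmetic. The sketch below estimates the memory needed just to hold the weights at a given precision. The helper `weight_memory_gb` and the byte sizes table are illustrative, the parameter counts are the nominal ones from the model names, and activations, the key/value cache, and framework overhead are deliberately ignored.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_memory_gb(n_params: float, dtype: str = "bfloat16") -> float:
    """Approximate memory (in GB) to hold the model weights only."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


# Nominal parameter counts taken from the model names (an assumption).
models = {"Falcon-7B": 7e9, "Falcon 2-11B": 11e9, "Falcon-40B": 40e9}

for name, n_params in models.items():
    print(f"{name}: ~{weight_memory_gb(n_params):.0f} GB in bfloat16")
```

For Falcon-7B this gives about 14 GB of weights in 16-bit precision, which is in line with the roughly 15GB quoted once some runtime overhead is added.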

Unveiling the Architectural Innovations

The Falcon models, including Falcon 2-11B, build upon the GPT-3 architecture while changing several of its components. Rotary positional embeddings encode token position by rotating the query and key vectors. Multiquery attention shares key and value projections across query heads, which reduces memory use during inference. FlashAttention-2 is a memory-efficient way of computing attention, and the parallel attention/MLP decoder-blocks compute the attention and feed-forward paths side by side rather than one after the other. These changes distinguish Falcon models from their predecessors and contribute to their performance in language understanding tasks.
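As an illustration of the first of these techniques, here is a minimal pure-Python sketch of rotary positional embeddings. It is not Falcon's implementation: the function names, the toy vectors, and the base of 10000 (a commonly used default) are assumptions, and it uses the interleaved-pair layout, whereas production libraries often arrange the dimensions differently.

```python
import math


def rotate(vec, position, base=10000.0):
    """Apply a rotary positional embedding to one even-length vector.

    Each consecutive pair of dimensions is rotated by an angle that grows
    with the token position and shrinks with the pair index.
    """
    dim = len(vec)
    out = []
    for i in range(0, dim, 2):
        theta = position * base ** (-i / dim)
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * c - y * s, x * s + y * c])
    return out


def dot(a, b):
    """Plain dot product, standing in for one attention score."""
    return sum(x * y for x, y in zip(a, b))


# Toy query and key vectors (hypothetical values).
q = [0.5, -1.0, 2.0, 0.25]
k = [1.5, 0.3, -0.7, 1.0]

# Same relative offset (2) at two different absolute positions.
score_a = dot(rotate(q, 3), rotate(k, 1))
score_b = dot(rotate(q, 10), rotate(k, 8))
print(math.isclose(score_a, score_b, abs_tol=1e-9))
```

The two scores agree because the rotation makes the query-key dot product depend only on the distance between the two positions, not on where they sit in the sequence. That relative-position property is the main reason for choosing this scheme.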

Responsible AI Usage: An Essential Consideration

As AI models continue to grow in scale and complexity, responsible usage becomes an essential consideration. Falcon 2-11B, while trained on an extensive corpus and exhibiting impressive capabilities, may not generalize well beyond the languages it was trained on. Additionally, like any AI model, Falcon 2-11B may have inherited biases from the web data it was trained on. Therefore, it is crucial to exercise caution and conduct thorough risk assessments when utilizing these models.

To address potential biases and maximize performance, it is recommended to fine-tune the Falcon models for specific tasks. Fine-tuning allows practitioners to adapt the models to their particular use cases and can reduce, though not eliminate, biases and limitations. Implementing safeguards and ethical guidelines during the production use of AI models like Falcon 2-11B supports responsible deployment across industries.

The Promising Future of Falcon Models

The release of Falcon 2-11B and the Falcon 2 family marks a notable advancement in openly available language models. The family pairs a large training corpus with a vision language model variant and architectural refinements that improve efficiency. With these capabilities and their potential applications, Falcon models are positioned to be useful across numerous domains.

However, it is important to approach these models with responsibility and vigilance. Thorough risk assessments, fine-tuning for specific tasks, and ongoing monitoring of biases are crucial steps in ensuring their ethical and effective deployment. By embracing these principles, the AI community can harness the transformative power of Falcon models while safeguarding against potential risks.

In conclusion, the release of Falcon 2-11B by the Technology Innovation Institute represents a significant milestone in AI research. The model, trained on 5.5 trillion tokens and accompanied by a vision language model variant, paves the way for new possibilities in natural language processing and image understanding. As the Falcon 2 family continues to evolve, responsible usage and ethical considerations remain vital to unlock the full potential of these language models.
