Innovating Video Generation: The Mora Framework’s Impact on Advanced AI Agents

Generative AI has advanced significantly in recent years, with remarkable progress in image and text synthesis. Video generation, particularly of long-duration videos, remains comparatively unexplored. Existing models such as Pika and Gen-2 have shown promising results, but they are limited in producing extended videos and lack the broad capabilities demonstrated by closed-source models such as OpenAI’s Sora.

To bridge this gap, researchers from Lehigh University and Microsoft have introduced Mora, a multi-agent framework. Mora aims to replicate and extend the capabilities of Sora, offering a structured, collaborative approach to generalist video generation. By combining several advanced visual AI agents, it opens up new possibilities for innovation and application.

The Limitations of Existing Models

Before delving into the details of Mora, it is essential to understand the limitations of existing video generation models. While models like Pika and Gen-2 have shown considerable promise, they struggle to produce long-duration videos exceeding 10 seconds. This limitation hampers their practical utility in various fields, including entertainment, marketing, and media production.

Moreover, closed-source models like Sora present a barrier to innovation and replication within the academic community. Without open access to the model’s architecture and training techniques, researchers cannot readily build upon or improve these models. This slows the progress of video generation technology and hinders the democratization of AI advancements.

Introducing Mora: A Collaborative Framework

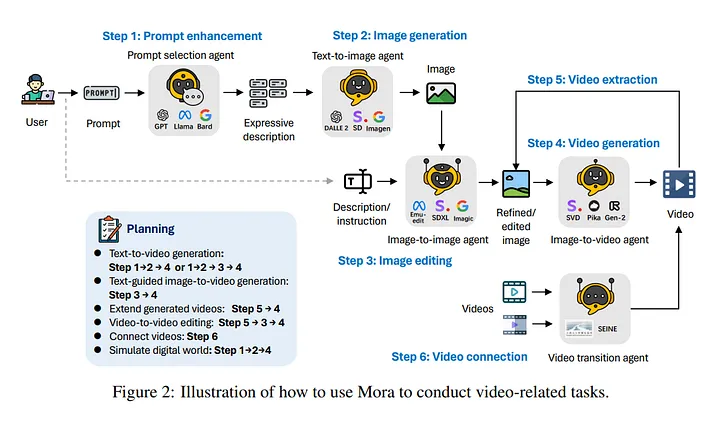

Mora addresses these limitations through collaboration among advanced visual AI agents. The framework decomposes video generation into several subtasks, each assigned to a specialized agent: prompt selection, text-to-image generation, image-to-video generation, and video-to-video editing.

By designing the collaboration of these agents, Mora aims to replicate and extend the video generation capabilities demonstrated by Sora. This multi-agent approach allows Mora to tackle diverse video generation tasks, including text-to-video generation, text-conditional image-to-video generation, extending generated videos, video-to-video editing, connecting videos, and simulating digital worlds.
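The decomposition described above can be sketched as a chain of functions, one per agent. This is only an illustrative sketch, not Mora's actual code: the `Artifact` type, the agent function names, and the `TASKS` recipes are hypothetical, and each agent is a stub that tags a string where a real agent would call a generative model. The order of agents for text-to-video is an assumption based on the subtasks listed above.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Artifact:
    """A tagged placeholder for a piece of media passed between agents."""
    kind: str      # "text", "image", or "video"
    content: str


def _expect(artifact: Artifact, kind: str) -> None:
    # Reject inputs of the wrong modality before an agent runs.
    if artifact.kind != kind:
        raise ValueError(f"expected {kind}, got {artifact.kind}")


# Hypothetical agents: each would wrap a generative model in practice.
def select_prompt(a: Artifact) -> Artifact:
    _expect(a, "text")
    return Artifact("text", f"refined({a.content})")


def text_to_image(a: Artifact) -> Artifact:
    _expect(a, "text")
    return Artifact("image", f"image({a.content})")


def image_to_video(a: Artifact) -> Artifact:
    _expect(a, "image")
    return Artifact("video", f"video({a.content})")


def video_to_video(a: Artifact) -> Artifact:
    _expect(a, "video")
    return Artifact("video", f"edited({a.content})")


Stage = Callable[[Artifact], Artifact]

# Assumed task recipes: which agents run, and in what order.
TASKS: Dict[str, List[Stage]] = {
    "text-to-video": [select_prompt, text_to_image, image_to_video],
    "video-to-video editing": [video_to_video],
}


def run_task(task: str, start: Artifact) -> Artifact:
    """Pass the starting artifact through each agent of the chosen task."""
    current = start
    for stage in TASKS[task]:
        current = stage(current)
    return current


result = run_task("text-to-video", Artifact("text", "a cat surfing"))
print(result.kind, result.content)
```

Expressing each task as a list of agents means a new task is a new recipe rather than a new model, which is the appeal of the multi-agent design.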

Advantages of the Mora Framework

Mora’s multi-agent architecture offers several advantages over existing models. Firstly, it enables a structured yet flexible approach to video generation. Each AI agent is responsible for a specific input-output transformation, ensuring coherent and high-quality video outputs. This collaborative framework not only enhances the overall performance of video generation but also allows for modular improvements and innovations in individual agents.
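To make the idea of modular improvement concrete, here is a minimal sketch in which every agent declares its input and output type, so one agent can be replaced without touching the rest. The `Agent` class, `check_chain`, `replace_agent`, and the agent names are all hypothetical and are not taken from the Mora codebase; the lambdas stand in for real models.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Agent:
    """An agent described by its input-output contract (hypothetical)."""
    name: str
    input_kind: str    # e.g. "text", "image", "video"
    output_kind: str
    run: Callable[[str], str]


def check_chain(agents: List[Agent]) -> None:
    """Raise TypeError if any agent's output does not feed the next agent."""
    for prev, nxt in zip(agents, agents[1:]):
        if prev.output_kind != nxt.input_kind:
            raise TypeError(
                f"{prev.name} outputs {prev.output_kind}, "
                f"but {nxt.name} expects {nxt.input_kind}"
            )


def replace_agent(agents: List[Agent], name: str, new_agent: Agent) -> List[Agent]:
    """Return a new chain with one agent swapped, validated before use."""
    swapped = [new_agent if a.name == name else a for a in agents]
    check_chain(swapped)
    return swapped


pipeline = [
    Agent("text_to_image", "text", "image", lambda s: f"image({s})"),
    Agent("image_to_video", "image", "video", lambda s: f"video({s})"),
]
check_chain(pipeline)

# Swap in an improved image-to-video agent; the rest of the chain is untouched.
better_i2v = Agent("image_to_video_v2", "image", "video", lambda s: f"video_v2({s})")
upgraded = replace_agent(pipeline, "image_to_video", better_i2v)

data = "a cat surfing"
for agent in upgraded:
    data = agent.run(data)
print(data)
```

Because the contract is checked when the chain is assembled, an incompatible replacement fails immediately instead of partway through generation.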

Secondly, Mora distinguishes itself through its open-source nature. Unlike closed-source models like Sora, Mora’s architecture and training techniques are accessible to the academic community and researchers worldwide. This openness fosters collaboration, replication, and improvement, propelling the advancement of video generation technology.

Furthermore, Mora’s open-source nature promotes extensibility. Researchers can build upon the framework, incorporating new techniques and algorithms to further enhance video generation capabilities. This extensibility encourages the development of a vibrant ecosystem of AI agents and paves the way for the democratization of AI advancements in the field of video generation.

Performance and Future Potential

Experimental results indicate that Mora performs competitively on video generation tasks, with metrics suggesting that its outputs closely resemble those produced by Sora. A performance gap may remain between Mora and Sora, particularly in holistic assessments, but Mora’s open-source nature and multi-agent architecture offer significant advantages in accessibility, extensibility, and innovation potential.

However, it is worth noting that Mora is still a relatively new framework, and further refinement and optimization may be required to bridge the performance gap with Sora comprehensively. Ongoing research and the collaborative efforts of the academic and AI communities are expected to push Mora’s capabilities even further, unlocking new frontiers in video generation technology.

Conclusion

The introduction of Mora, a multi-agent framework that incorporates several advanced visual AI agents, marks a notable step forward in open video generation technology. By aiming to replicate and extend the capabilities of leading models like Sora, Mora takes on the challenge of generating long-duration videos and offers a collaborative approach to video synthesis.

Mora’s multi-agent architecture, open-source nature, and focus on extensibility present new opportunities for researchers to innovate and improve video generation technology. With ongoing research and collaboration, Mora is poised to revolutionize various fields, including entertainment, marketing, and media production, by enabling the creation of high-quality videos that were previously challenging or impossible to generate.

As the journey towards more advanced video generation continues, Mora stands at the forefront, driving progress and democratizing the power of AI in visual synthesis.
