Introducing MARKLLM: The Open-Source Toolkit for LLM Watermarking

LLM watermarking has emerged as a key technology for fighting misinformation and protecting intellectual property in the age of artificial intelligence. By embedding subtle, detectable signals in AI-generated text, it makes it possible to identify where a piece of content came from. This addresses concerns such as impersonation, ghostwriting, and the proliferation of fake news. However, the complexity of watermarking algorithms and the lack of standardized evaluation methods have hindered both adoption and understanding of the technology.

To overcome these challenges, researchers from Tsinghua University, Shanghai Jiao Tong University, The University of Sydney, UC Santa Barbara, CUHK, and HKUST have collaborated to create MARKLLM, an open-source toolkit for LLM watermarking. The toolkit offers a unified and extensible framework for implementing watermarking algorithms, along with user-friendly interfaces and evaluation tools.

A Comprehensive Framework for LLM Watermarking

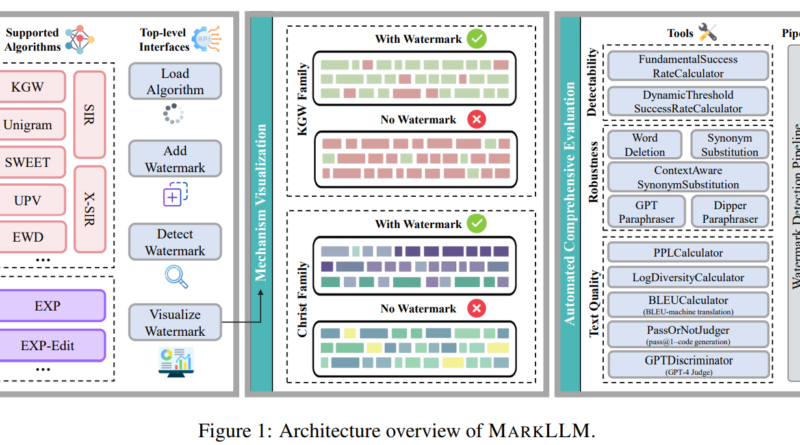

MARKLLM offers a comprehensive set of features for LLM watermarking, making it a useful resource for researchers and developers in the field. The toolkit supports nine watermarking methods from two major algorithm families, the KGW family and the Christ family. KGW-family algorithms modify the LLM's logits so that a pseudo-randomly chosen subset of tokens is favored, while Christ-family algorithms use pseudo-random sequences to guide token sampling. In both cases the watermark is embedded during generation and can later be detected in the text.
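To make the logit-modification idea concrete, here is a minimal sketch of a KGW-style scheme in plain Python. It is not MARKLLM's code: the toy vocabulary, the flat stand-in logits, the hashing choice, and the names `green_list`, `bias_logits`, and `z_score` are all assumptions made for illustration.

```python
import hashlib
import math
import random

VOCAB_SIZE = 50  # toy vocabulary size (assumption)
GAMMA = 0.5      # fraction of the vocabulary placed on the "green" list
DELTA = 2.0      # bias added to the logits of green tokens


def green_list(prev_token: int) -> set:
    """Derive a pseudo-random green list from the previous token id."""
    digest = hashlib.sha256(str(prev_token).encode()).digest()
    rng = random.Random(int.from_bytes(digest[:8], "big"))
    ids = list(range(VOCAB_SIZE))
    rng.shuffle(ids)
    return set(ids[: int(GAMMA * VOCAB_SIZE)])


def bias_logits(logits: list, prev_token: int) -> list:
    """Embedding step: add DELTA to every green token's logit."""
    greens = green_list(prev_token)
    return [x + DELTA if i in greens else x for i, x in enumerate(logits)]


def sample(logits: list, rng: random.Random) -> int:
    """Sample a token id from softmax(logits)."""
    top = max(logits)
    weights = [math.exp(x - top) for x in logits]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


def generate(length: int, watermark: bool, seed: int = 0) -> list:
    """Generate toy token ids; flat logits stand in for a real model."""
    rng = random.Random(seed)
    tokens = [0]  # fixed start token
    for _ in range(length):
        logits = [0.0] * VOCAB_SIZE
        if watermark:
            logits = bias_logits(logits, tokens[-1])
        tokens.append(sample(logits, rng))
    return tokens


def z_score(tokens: list) -> float:
    """Detection step: compare the green-token count with its expectation."""
    n = len(tokens) - 1
    hits = sum(tokens[i] in green_list(tokens[i - 1]) for i in range(1, len(tokens)))
    return (hits - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))
```

Calling `z_score(generate(200, watermark=True))` should give a value far above what unwatermarked tokens produce, because the bias pushes most sampled tokens onto the green list. The detector needs only the hashing rule, not the model.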

One of the primary advantages of MARKLLM is its user-friendly design, which includes intuitive interfaces for algorithm loading, text watermarking, detection, and data visualization. This ease of use allows researchers to experiment with different algorithms and evaluate their performance effectively. The toolkit also includes 12 evaluation tools and two automated pipelines for assessing watermarking algorithms' detectability, robustness, and impact on text quality.

Evaluating Watermarking Algorithms with MARKLLM

Evaluating the effectiveness of watermarking algorithms is crucial for the advancement of LLM watermarking technology. MARKLLM gives researchers a unified framework that addresses the lack of standardization in the field. Algorithms can be invoked and swapped easily, and a well-designed class structure keeps the toolkit scalable and flexible.
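One common way to get this kind of easy switching is a shared base class plus a name-based registry. The sketch below illustrates that pattern only; `Watermarker`, `register`, `load`, and the two toy algorithms are hypothetical names and do not reflect MARKLLM's actual classes or API.

```python
from abc import ABC, abstractmethod

_REGISTRY = {}  # maps algorithm name -> class (hypothetical registry)


def register(name):
    """Class decorator that adds an algorithm to the registry under `name`."""
    def wrap(cls):
        _REGISTRY[name] = cls
        return cls
    return wrap


class Watermarker(ABC):
    """Hypothetical common interface: every algorithm exposes the same calls."""

    @abstractmethod
    def embed(self, text: str) -> str:
        """Return a watermarked version of `text`."""

    @abstractmethod
    def detect(self, text: str) -> bool:
        """Return True if `text` carries this algorithm's watermark."""


@register("suffix")
class SuffixToy(Watermarker):
    """Toy stand-in: appends a visible marker to the text."""
    MARK = " [wm]"

    def embed(self, text):
        return text + self.MARK

    def detect(self, text):
        return text.endswith(self.MARK)


@register("zero-width")
class ZeroWidthToy(Watermarker):
    """Toy stand-in: appends a zero-width space to every word."""
    MARK = "\u200b"

    def embed(self, text):
        return " ".join(word + self.MARK for word in text.split())

    def detect(self, text):
        words = text.split()
        return bool(words) and all(word.endswith(self.MARK) for word in words)


def load(name, **config):
    """Instantiate a registered algorithm by name."""
    if name not in _REGISTRY:
        raise ValueError(f"unknown algorithm: {name!r}")
    return _REGISTRY[name](**config)
```

With this layout, switching algorithms is a one-string change: `load("suffix")` and `load("zero-width")` return objects that are used identically, and adding a new method only requires a new registered subclass.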

MARKLLM includes a visualization module that highlights token preferences and correlations for KGW and Christ family algorithms. This feature enables researchers to gain insights into the inner workings of the watermarking methods and make informed decisions about their implementation. The toolkit also supports flexible configurations, making it possible to conduct thorough and automated evaluations of watermarking algorithms using various metrics and attack scenarios.
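As a rough illustration of what such a view can look like in a terminal, the sketch below colors tokens by a binary flag (as a KGW-style detector might assign) or shades them by a continuous score (as a correlation-based detector might). The function names and the use of ANSI escape codes are assumptions for this example, not MARKLLM's visualization module.

```python
GREEN_BG = "\033[42m"  # ANSI green background
RED_BG = "\033[41m"    # ANSI red background
RESET = "\033[0m"      # ANSI reset


def highlight(tokens, is_green):
    """Color each token green or red according to a per-token boolean flag."""
    if len(tokens) != len(is_green):
        raise ValueError("tokens and flags must have the same length")
    return " ".join(
        f"{GREEN_BG if flag else RED_BG}{tok}{RESET}"
        for tok, flag in zip(tokens, is_green)
    )


def shade(tokens, scores):
    """Shade each token by a score in [0, 1] using the 24-step ANSI grayscale ramp."""
    if len(tokens) != len(scores):
        raise ValueError("tokens and scores must have the same length")
    parts = []
    for tok, score in zip(tokens, scores):
        clamped = min(1.0, max(0.0, score))
        color = 232 + round(clamped * 23)  # 232..255 are grayscale codes
        parts.append(f"\033[48;5;{color}m{tok}{RESET}")
    return " ".join(parts)
```

Printing `highlight(["the", "cat", "sat"], [True, False, True])` in a color-capable terminal shows at a glance how many tokens fall on the favored list.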

Assessing Detectability, Robustness, and Text Quality

The key aspects of evaluating watermarking algorithms include detectability, robustness against tampering, and impact on text quality. MARKLLM provides researchers with a comprehensive set of evaluation tools and customizable pipelines to assess these critical factors. The toolkit supports various metrics, such as perplexity, log diversity, BLEU, pass@1, and GPT-4 Judge, allowing for a thorough analysis of the performance of watermarking algorithms.
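Two of these metrics are simple enough to sketch directly. The functions below use the standard definitions of perplexity and pass@1; the input shapes (a list of per-token natural-log probabilities, and one pass/fail flag per problem) are assumptions for this example rather than MARKLLM's interfaces.

```python
import math


def perplexity(token_logprobs):
    """Perplexity = exp of the negative mean natural-log probability per token."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def pass_at_1(passed):
    """pass@1 = fraction of problems whose single generated sample passed its tests."""
    if not passed:
        raise ValueError("need at least one problem result")
    return sum(passed) / len(passed)
```

To estimate a watermark's impact on text quality, one would compute such a metric on watermarked and unwatermarked outputs for the same prompts and compare the two: higher perplexity or lower pass@1 on the watermarked side suggests a quality cost.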

Using MARKLLM, researchers have evaluated nine watermarking algorithms for detectability, robustness, and impact on text quality. The evaluations were performed on different datasets, including general text generation, machine translation, and code generation. The results showed high detection accuracy, algorithm-specific strengths, and varying performance depending on the specific metrics and attack scenarios employed.
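Detection accuracy of this kind is typically summarized with true-positive rate, false-positive rate, and F1 at a chosen score threshold. The following sketch shows that calculation; the function name, the threshold, and the example scores are made up for illustration and are not results from the paper.

```python
def detection_report(watermarked_scores, plain_scores, threshold):
    """Compute TPR, FPR, and F1 when scores above `threshold` count as detections."""
    tp = sum(s > threshold for s in watermarked_scores)  # watermarked, flagged
    fn = len(watermarked_scores) - tp                    # watermarked, missed
    fp = sum(s > threshold for s in plain_scores)        # plain, wrongly flagged
    tn = len(plain_scores) - fp                          # plain, correctly passed

    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    f1 = 2 * precision * tpr / (precision + tpr) if (precision + tpr) else 0.0
    return {"tpr": tpr, "fpr": fpr, "f1": f1}


# Illustrative detector scores only (not measured data).
report = detection_report(
    watermarked_scores=[5.1, 6.0, 3.2, 7.4],
    plain_scores=[0.1, -0.5, 4.2, 1.0],
    threshold=4.0,
)
```

Running the same report on text that has first been edited or paraphrased gives a simple view of robustness: the smaller the drop in TPR at a fixed FPR, the more robust the watermark.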

The Future of MARKLLM

MARKLLM represents a significant step forward in the field of LLM watermarking, providing researchers and developers with a powerful toolkit to advance the technology. While the current version of MARKLLM supports a subset of watermarking methods, future contributions are expected to expand its capabilities. The modular design of the toolkit ensures scalability and flexibility, allowing for the integration of new algorithms and evaluation methods.

In conclusion, MARKLLM is an open-source toolkit that empowers researchers and developers in the field of LLM watermarking. With its unified framework, user-friendly interfaces, and advanced evaluation tools, MARKLLM facilitates the implementation, evaluation, and understanding of watermarking algorithms. By utilizing this toolkit, the research community can continue to improve the detectability, robustness, and text quality impact of LLM watermarking, ensuring the integrity of AI-generated content in the digital age.

Check out the Paper. All credit for this research goes to the researchers of this project.
