MoD-SLAM: Revolutionizing Monocular Mapping and 3D Reconstruction in Unbounded Scenes

Introduction

Simultaneous Localization and Mapping (SLAM) systems let robots and other machines build a map of their environment while tracking their own position within it, which is the basis for autonomous navigation. One of the key challenges in SLAM is achieving real-time, accurate, and scalable dense mapping, especially in unbounded scenes. Neural SLAM methods typically rely on RGB-D input to reconstruct a scene accurately, while methods that use only RGB images tend to suffer from scale drift or inaccurate scale reconstruction in large-scale scenes.

To overcome these limitations, researchers have introduced a groundbreaking method called MoD-SLAM (Monocular Dense Mapping for Unbounded 3D Reconstruction). MoD-SLAM leverages monocular depth estimation, loop closure detection, and neural radiance fields (NeRF) to achieve detailed and precise 3D reconstruction in real-time, without the need for RGB-D input. In this article, we will explore the key components and advantages of MoD-SLAM in revolutionizing monocular mapping and 3D reconstruction in unbounded scenes.

The Limitations of Existing SLAM Systems

Traditional SLAM systems work well in many scenarios, but they often struggle with real-time dense mapping in unbounded scenes or depend on RGB-D input for accurate reconstruction. These constraints limit their scalability, versatility, and accuracy in large-scale scenes. Neural SLAM methods that use only RGB images, in turn, can suffer from inaccurate scale reconstruction or scale drift as tracking errors accumulate, leading to suboptimal mapping results.

Introducing MoD-SLAM: Monocular Dense Mapping for Unbounded 3D Reconstruction

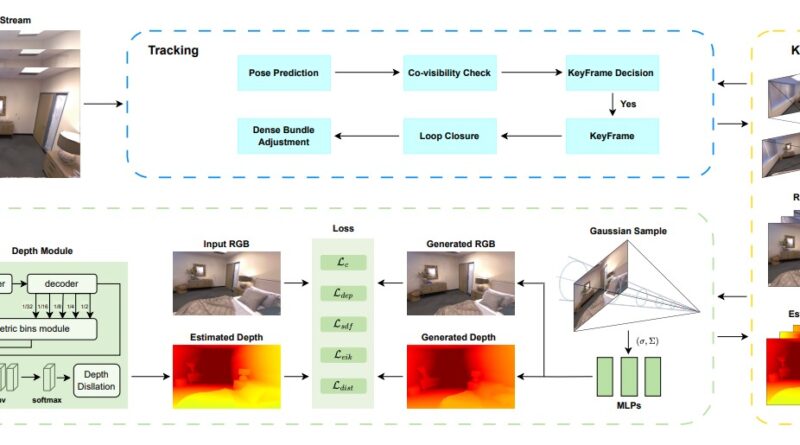

MoD-SLAM addresses the limitations of traditional SLAM systems and presents a novel approach to monocular dense mapping in unbounded scenes using only RGB images. The key components of MoD-SLAM include:

1. Depth Estimation Module

Instead of relying on RGB-D input, MoD-SLAM incorporates a depth estimation module that accurately generates depth maps from RGB images [2]. This module leverages deep learning techniques to estimate depth information, enabling precise reconstruction without the need for additional sensors. The depth estimation module significantly reduces scale drift and enhances the accuracy of the 3D reconstruction process.
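Monocular depth networks often predict depth only up to an unknown scale and offset, so a common step is to align a prediction against a handful of trusted depth values. The sketch below fits that scale and shift by ordinary least squares. It is an illustration under stated assumptions, not MoD-SLAM's actual module: the function name `fit_scale_shift` and the sample values are hypothetical.

```python
def fit_scale_shift(relative, reference):
    """Least-squares fit of s, t so that s * relative[i] + t ~= reference[i].

    Hypothetical helper for illustration; both inputs are flat lists of
    depth values sampled at the same pixels.
    """
    n = len(relative)
    if n != len(reference) or n < 2:
        raise ValueError("need two equally sized lists with at least 2 samples")

    mean_rel = sum(relative) / n
    mean_ref = sum(reference) / n

    # Closed-form 1D linear regression: slope = cov / var.
    var = sum((r - mean_rel) ** 2 for r in relative)
    if var == 0.0:
        raise ValueError("relative depths are constant; scale is undefined")
    cov = sum((r - mean_rel) * (m - mean_ref)
              for r, m in zip(relative, reference))

    scale = cov / var
    shift = mean_ref - scale * mean_rel
    return scale, shift


if __name__ == "__main__":
    # Made-up samples: network output vs. trusted depth at the same pixels.
    predicted = [0.1, 0.2, 0.4]
    trusted = [1.5, 2.0, 3.0]
    s, t = fit_scale_shift(predicted, trusted)
    aligned = [s * d + t for d in predicted]
    print(s, t, aligned)
```

Once the scale and shift are known, the whole predicted depth map can be mapped into the same units as the trusted samples before it is used to supervise mapping.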

2. Depth Distillation

To further refine the depth maps generated by the depth estimation module, MoD-SLAM employs a depth distillation process. This process refines the depth maps by leveraging geometric constraints and local consistency, resulting in more accurate and detailed depth information for the 3D reconstruction process. By distilling the depth information, MoD-SLAM achieves superior reconstruction fidelity in unbounded scenes.

3. Multivariate Gaussian Encoding

Traditional SLAM methods often struggle with scenes that lack defined boundaries. MoD-SLAM addresses this challenge by utilizing multivariate Gaussian encoding. This encoding technique captures detailed spatial information and ensures stability in the mapping process, even in unbounded scenes. The use of multivariate Gaussian encoding enhances the robustness and accuracy of MoD-SLAM, allowing it to handle complex and challenging environments.
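To make the idea more concrete, here is a minimal sketch of two building blocks that are widely used for this kind of representation in the NeRF literature: a contraction that squeezes all of space into a ball of radius 2, and a Gaussian-aware positional encoding that damps high frequencies when a sample is spatially uncertain. This is an assumption about the general technique, not a reproduction of MoD-SLAM's code. The names `contract` and `gaussian_encoding` are hypothetical, and the encoding assumes a diagonal covariance for simplicity.

```python
import math


def contract(point):
    """Map a 3D point into a ball of radius 2.

    Points with norm <= 1 are unchanged; farther points are compressed
    so that infinity lands on the sphere of radius 2.
    """
    norm = math.sqrt(sum(c * c for c in point))
    if norm <= 1.0:
        return tuple(point)
    factor = (2.0 - 1.0 / norm) / norm
    return tuple(c * factor for c in point)


def gaussian_encoding(mean, variance, num_freqs=4):
    """Expected sin/cos features of a Gaussian with diagonal covariance.

    For x ~ N(mu, var): E[sin(f x)] = sin(f mu) * exp(-0.5 f^2 var),
    and likewise for cos. Large variance suppresses high frequencies.
    """
    features = []
    for level in range(num_freqs):
        freq = 2.0 ** level
        for mu, var in zip(mean, variance):
            damping = math.exp(-0.5 * freq * freq * var)
            features.append(math.sin(freq * mu) * damping)
            features.append(math.cos(freq * mu) * damping)
    return features


if __name__ == "__main__":
    far_point = contract((10.0, 0.0, 0.0))
    print(far_point)
    print(gaussian_encoding(far_point, (0.01, 0.01, 0.01), num_freqs=2))
```

A full pipeline would also transform the covariance through the contraction, typically by linearizing it around the mean; that step is omitted here to keep the sketch short.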

4. Loop Closure Detection

Loop closure detection is a critical component in SLAM systems, as it helps eliminate scale drift and improve the accuracy of the mapping process. MoD-SLAM incorporates loop closure detection techniques to identify previously visited locations and update camera poses. By leveraging loop closure detection, MoD-SLAM achieves enhanced tracking accuracy and precise reconstruction, even in large-scale unbounded scenes.
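As a rough illustration of how revisited places can be identified, the sketch below compares a global descriptor of the newest frame against descriptors of older frames and reports those that look similar enough. It is a generic pattern, not the detector MoD-SLAM uses: the descriptor vectors, the `min_gap` and `threshold` parameters, and the function names are all assumptions made for this example.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_loop_candidates(descriptors, min_gap=30, threshold=0.9):
    """Return (frame_index, similarity) pairs that may close a loop.

    The newest frame is descriptors[-1]. Frames closer than `min_gap`
    steps are skipped, since neighbouring frames always look alike.
    """
    if not descriptors:
        return []
    current_index = len(descriptors) - 1
    current = descriptors[current_index]

    candidates = []
    for i in range(0, current_index - min_gap + 1):
        score = cosine_similarity(descriptors[i], current)
        if score >= threshold:
            candidates.append((i, score))
    return candidates


if __name__ == "__main__":
    # Toy 2D descriptors: the last frame resembles frame 0.
    frames = [(1.0, 0.0), (0.0, 1.0), (0.0, 1.0), (0.99, 0.05)]
    print(find_loop_candidates(frames, min_gap=2, threshold=0.9))
```

In a real system each candidate would still be verified geometrically before the camera poses are updated, because appearance alone can produce false matches.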

Advantages of MoD-SLAM

MoD-SLAM offers several advantages over existing SLAM systems for monocular mapping and 3D reconstruction in unbounded scenes:

1. Real-time Dense Mapping

MoD-SLAM achieves real-time dense mapping, even in unbounded scenes, which is a significant advancement compared to traditional SLAM methods. By leveraging monocular depth estimation and the depth distillation process, MoD-SLAM generates accurate and detailed 3D reconstructions in real-time, enabling faster decision-making and navigation for robotic systems.

2. Scalability and Versatility

Unlike neural SLAM methods that depend on RGB-D input, MoD-SLAM uses only RGB images, which makes it more scalable and versatile. No depth sensor is required, which reduces cost and simplifies the deployment of SLAM systems across applications.

3. Enhanced Reconstruction Fidelity

MoD-SLAM achieves superior reconstruction fidelity, especially in large-scale unbounded scenes. The depth estimation module, depth distillation process, multivariate Gaussian encoding, and loop closure detection work in synergy to deliver detailed and precise 3D reconstructions. This enhanced fidelity opens up new possibilities for applications such as augmented reality, virtual reality, and autonomous navigation.

4. State-of-the-Art Performance

Experiments conducted on both synthetic and real-world datasets have demonstrated the superior performance of MoD-SLAM compared to existing neural SLAM systems. MoD-SLAM consistently outperforms state-of-the-art methods like NICE-SLAM and GO-SLAM in terms of tracking accuracy and reconstruction fidelity, further validating its effectiveness and potential.
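Tracking accuracy in SLAM evaluations is commonly summarized as the root-mean-square absolute trajectory error (ATE RMSE) between estimated and ground-truth camera positions. The article does not state which metric was reported, so the sketch below is only an example of how such a number can be computed. It assumes the two trajectories are already time-synchronized and expressed in the same coordinate frame; the function name `ate_rmse` and the sample values are hypothetical.

```python
import math


def ate_rmse(estimated, ground_truth):
    """RMSE of per-frame position error between two aligned trajectories.

    Each trajectory is a list of (x, y, z) camera positions, one per frame.
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and equally long")

    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(est_pos, gt_pos))
        for est_pos, gt_pos in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


if __name__ == "__main__":
    # Made-up positions: the second estimated pose is 2 units off in z.
    estimated = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    ground_truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 2.0)]
    print(ate_rmse(estimated, ground_truth))
```

For monocular systems the estimated trajectory is usually aligned to the ground truth first, including a scale factor, since absolute scale is not observable from RGB images alone.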

Conclusion

MoD-SLAM represents a significant leap in the field of monocular mapping and 3D reconstruction in unbounded scenes. By introducing novel techniques for depth estimation, depth distillation, multivariate Gaussian encoding, and loop closure detection, MoD-SLAM overcomes the limitations of traditional SLAM systems and existing neural SLAM methods. With real-time dense mapping, enhanced scalability, and superior reconstruction fidelity, MoD-SLAM opens up new possibilities for applications in robotics, computer vision, and beyond. As research in this field continues to evolve, we can expect further advancements and refinements to MoD-SLAM, shaping the future of SLAM systems and autonomous navigation.

Check out the Paper. All credit for this research goes to the researchers of this project.
