Researchers from the University of York and Université Paris-Saclay Introduce DeepKnowledge for Generalisation-Driven Deep Learning Testing

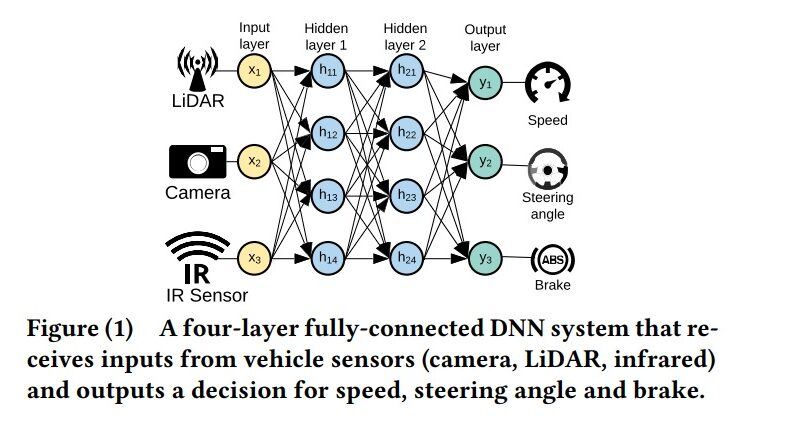

Deep Neural Networks (DNNs) have made significant strides in a range of complex tasks, in some cases matching or surpassing human performance. As a result, they are widely used in critical domains such as autonomous driving, healthcare, and flight control systems. However, the reliability and consistency of DNN models remain a concern, especially when the input data changes in unexpected ways. To address this issue, researchers from the University of York and Université Paris-Saclay have introduced DeepKnowledge, a generalization-driven approach to testing DNNs.


The Challenge of DNN Generalization

Despite their remarkable capabilities, DNN models can be unstable: their performance degrades when they encounter even slight variations in input data. This lack of consistency raises doubts about the reliability of DNNs for safety-critical applications. Industrial studies have shown that real-world operational data often deviates significantly from the data distribution assumed during training, leading to a decline in DNN performance. Ensuring that DNN models remain resilient under domain shifts and adversarial attacks is therefore crucial.

Traditional testing methodologies for DNNs, which rely on black-box approaches, are insufficient to guarantee the trustworthiness of these models. To overcome this challenge, the researchers from the University of York and Université Paris-Saclay propose DeepKnowledge, a knowledge-driven test adequacy criterion for DNN systems. The approach builds on the principle of out-of-distribution generalization to gain insight into how models make decisions and how well they generalize.

Analyzing Generalization Behavior at the Neuron Level

DeepKnowledge focuses on understanding the generalization behavior of DNN models at the neuron level. By analyzing how well individual neurons generalize, the researchers aim to identify transfer knowledge (TK) neurons that contribute to the overall performance of the model. These TK neurons are able to generalize information learned from training inputs to new domains.

To assess the model’s capacity for generalization across different domain distributions, the researchers employ zero-shot learning. This technique asks the DNN model to generate predictions for classes not present in the training dataset. By evaluating how effectively the transfer knowledge neurons learn, DeepKnowledge establishes a causal relationship between these neurons and the DNN model’s decision-making process.
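As a rough illustration of the idea above, the following sketch ranks neurons by how little their activation distribution changes between training inputs and zero-shot inputs. Everything here is an assumption made for illustration: the function names, the use of histograms with the Hellinger distance as the shift measure, and the choice to keep the most stable fraction of neurons are not taken from the article and may differ from what the DeepKnowledge tool actually does.

```python
import numpy as np

def hellinger(p, q):
    """Hellinger distance between two discrete distributions (0 = identical, 1 = disjoint)."""
    return float(np.sqrt(0.5 * np.sum((np.sqrt(p) - np.sqrt(q)) ** 2)))

def select_tk_neurons(train_acts, zero_shot_acts, top_fraction=0.2, bins=10):
    """Hypothetical helper: return indices of the neurons whose activation
    distribution shifts least between the two input sets, plus all distances.

    train_acts, zero_shot_acts: arrays of shape (num_inputs, num_neurons).
    """
    num_neurons = train_acts.shape[1]
    distances = np.empty(num_neurons)
    for j in range(num_neurons):
        # Use a shared value range so both histograms have the same bins.
        lo = min(train_acts[:, j].min(), zero_shot_acts[:, j].min())
        hi = max(train_acts[:, j].max(), zero_shot_acts[:, j].max())
        if hi == lo:
            hi = lo + 1.0  # constant neuron: avoid a zero-width range
        p, _ = np.histogram(train_acts[:, j], bins=bins, range=(lo, hi))
        q, _ = np.histogram(zero_shot_acts[:, j], bins=bins, range=(lo, hi))
        distances[j] = hellinger(p / p.sum(), q / q.sum())
    k = max(1, int(round(top_fraction * num_neurons)))
    # Smallest distance first: the most stable neurons are the candidates.
    return np.argsort(distances)[:k], distances
```

In practice the activation matrices would come from a chosen layer of the trained model, recorded once on training data and once on inputs from classes the model has never seen.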

DeepKnowledge’s Test Adequacy Criterion

DeepKnowledge introduces a test adequacy criterion based on the ratio of combinations of transfer knowledge neuron clusters covered by a given input set. This criterion measures how well an input set exercises the DNN’s generalization behavior. By allocating a larger portion of the testing budget to the transfer knowledge neurons, DeepKnowledge concentrates testing effort on the neurons that matter most for proper DNN behavior.
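The ratio described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the tool's implementation: each TK neuron's activation range is split into clusters by fixed bin edges (a stand-in for whatever clustering the authors use), a "combination" is taken to be the tuple of cluster indices across all TK neurons for one input, and the helper names `cluster_ids` and `tk_coverage` are hypothetical.

```python
import numpy as np

def cluster_ids(acts, edges):
    """Map each activation to a cluster index, one column per TK neuron.

    acts:  array of shape (num_inputs, num_tk_neurons).
    edges: list with one increasing 1-D array of interior bin edges per neuron.
    """
    cols = [np.digitize(acts[:, j], edges[j]) for j in range(acts.shape[1])]
    return np.stack(cols, axis=1)

def tk_coverage(acts, edges):
    """Fraction of all possible cluster combinations hit by the input set."""
    ids = cluster_ids(acts, edges)
    covered = {tuple(row) for row in ids.tolist()}
    total = 1
    for e in edges:
        total *= len(e) + 1  # n interior edges define n + 1 clusters
    return len(covered) / total

# Two TK neurons, each split into two clusters at 0.5: four combinations.
edges = [np.array([0.5]), np.array([0.5])]
original = np.array([[0.1, 0.1], [0.9, 0.1], [0.2, 0.2]])
extended = np.vstack([original, [[0.9, 0.9]]])
print(tk_coverage(original, edges))  # 0.5
print(tk_coverage(extended, edges))  # 0.75
```

The second call shows why a more diverse input set scores higher: the extra input reaches a cluster combination that the original three inputs never triggered. Note that the number of combinations grows multiplicatively with the number of neurons, so a real implementation would need to bound which combinations are counted.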

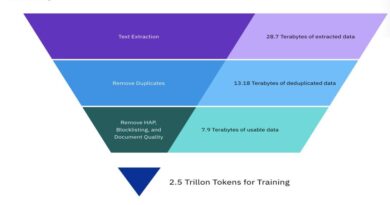

The researchers conducted a large-scale evaluation using publicly available datasets (SVHN, GTSRB, CIFAR-10, CIFAR-100, and MNIST) and a range of DNN models for image recognition tasks. They compared the coverage achieved by the original test set with the coverage achieved when adversarial inputs are included. The results show that DeepKnowledge’s test adequacy criterion correlates strongly with the diversity of the test suite and with its ability to uncover problems in the DNN.

Open-Source DeepKnowledge Tool and Future Development

To facilitate further research in this area, the researchers have made their project webpage accessible to the public. It provides a repository of case studies and an open-source prototype of the DeepKnowledge tool. This resource aims to encourage researchers to delve deeper into the field of DNN testing and contribute to its advancement.

Looking ahead, the team outlined a comprehensive roadmap for the future development of DeepKnowledge. Their plans include incorporating support for object detection models and the TKC test adequacy criterion, automating data augmentation to reduce data creation and labeling costs, and enhancing DeepKnowledge to enable model pruning. These future developments reflect the team’s commitment to improving the reliability, accuracy, and trustworthiness of DNN systems through advanced testing methodologies.

Conclusion

DeepKnowledge, introduced by researchers from the University of York and Université Paris-Saclay, offers a novel approach to the challenges of DNN generalization and testing. By analyzing generalization behavior at the neuron level and identifying transfer knowledge neurons, DeepKnowledge improves the understanding of how DNN models make decisions and how well they generalize to new domains. Its test adequacy criterion measures how suitable an input set is for testing that behavior. With an open-source tool and a roadmap for future development, the research team aims to drive further advances in DNN testing and improve the reliability of these models in critical applications.

Check out the Paper. All credit for this research goes to the researchers of this project.
