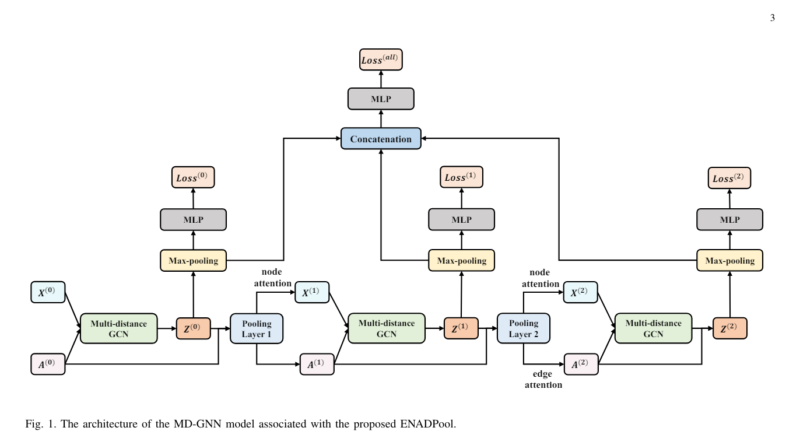

Revolutionizing Graph Neural Networks with ENADPool and MD-GNNs

Graph Neural Networks (GNNs) have emerged as powerful tools for graph classification, enabling the analysis of complex data such as social networks, molecular structures, and knowledge graphs. GNNs use neighborhood aggregation to iteratively update node representations, capturing local structure and, over several layers, more global structure; the same machinery also supports tasks like node classification and link prediction. For graph classification, however, a model must also turn a variable-sized set of node representations into a single graph-level representation, which makes effective graph pooling essential. Recent work in this area has produced two complementary techniques: Edge-Node Attention-based Differentiable Pooling (ENADPool) and Multi-Distance Graph Neural Networks (MD-GNNs). In this article, we explore how these approaches improve graph classification.

The Importance of Graph Pooling

Graph pooling is a critical operation in GNNs that reduces the size of the graph while preserving important structural information. Traditional global pooling methods, such as summing or averaging all node features, treat every node equally and therefore lose fine-grained structural details. Hierarchical pooling techniques, by contrast, aim to retain structural features by progressively clustering nodes or by using attention mechanisms to assign different importance weights to nodes.
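To make the contrast concrete, here is a minimal plain-Python sketch of two graph-level readouts: global mean pooling, which gives every node the same weight 1/n, and an attention-weighted readout that softmax-normalizes per-node scores. The function names, and passing the scores in directly, are assumptions for illustration; in a real model the scores would come from a learned layer.

```python
import math


def global_mean_pool(features):
    """Global readout: every node gets the same weight 1/n."""
    n = len(features)
    dim = len(features[0])
    return [sum(row[d] for row in features) / n for d in range(dim)]


def attention_pool(features, scores):
    """Readout weighting node i by softmax(scores)[i]; scores are assumed given."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(features[0])
    return [sum(w * row[d] for w, row in zip(weights, features)) for d in range(dim)]


# Hypothetical 3-node graph with 2-dimensional node features.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(global_mean_pool(X))                 # every node counts equally
print(attention_pool(X, [2.0, 0.0, 0.0]))  # node 0 dominates the readout
```

With equal scores the attention readout reduces to the mean, so mean pooling is the special case in which no node is considered more informative than another.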

Hierarchical pooling methods have shown promising results in addressing the limitations of global pooling. However, they still face challenges such as information loss and over-smoothing. Over-smoothing occurs when repeated aggregation makes node representations converge toward similar values, so that different nodes in the graph become indistinguishable. This reduces the discriminative power of the model and degrades classification performance.
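Over-smoothing is easy to reproduce. The sketch below uses a hypothetical 4-node path graph with scalar features and applies untrained mean aggregation with self-loops again and again. Because each update is a convex combination of neighboring values, the spread between nodes can only shrink, and all nodes drift toward one shared value.

```python
def mean_aggregate(adj_list, h):
    """One untrained GNN layer: h_i <- mean of h over node i and its neighbors."""
    out = []
    for i, nbrs in enumerate(adj_list):
        group = [i] + list(nbrs)
        out.append(sum(h[j] for j in group) / len(group))
    return out


def spread(h):
    """Gap between the largest and smallest node value."""
    return max(h) - min(h)


# Hypothetical path graph 0-1-2-3 with a one-hot scalar feature on node 0.
adj_list = [[1], [0, 2], [1, 3], [2]]
h = [1.0, 0.0, 0.0, 0.0]
history = [spread(h)]
for _ in range(30):
    h = mean_aggregate(adj_list, h)
    history.append(spread(h))

print(history[0], history[1], history[-1])  # the spread shrinks toward 0
print(h)                                    # all values approach the same number
```

After 30 rounds the four values are nearly identical (all close to 0.2 for this graph and starting vector), which is the indistinguishability described above.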

To overcome these challenges, researchers have proposed innovative techniques that incorporate attention mechanisms and multi-distance information in the pooling process. Let’s delve into the details of ENADPool and MD-GNNs to understand their contributions and benefits.

Edge-Node Attention-based Differentiable Pooling (ENADPool)

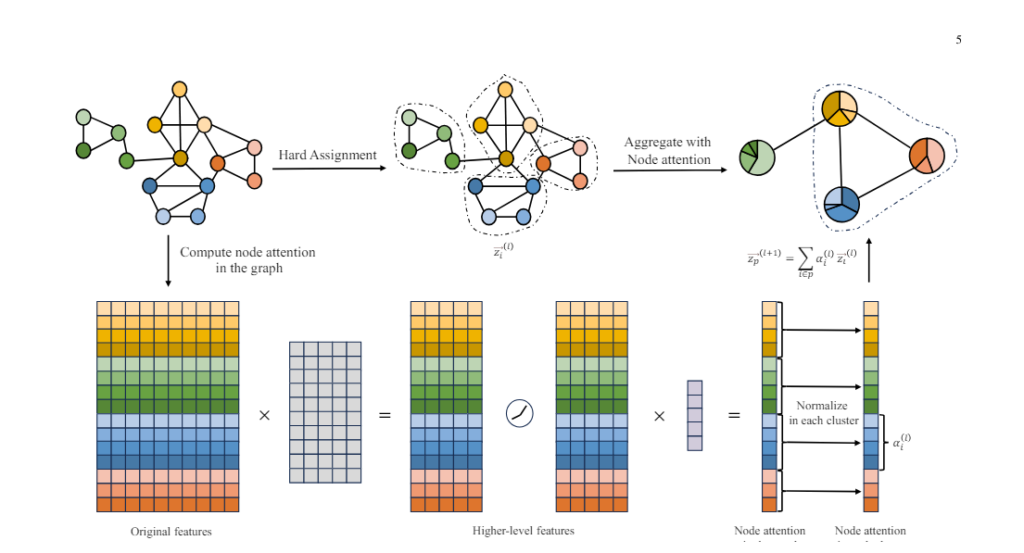

ENADPool is a hierarchical pooling method introduced by researchers from Beijing Normal University, Central University of Finance and Economics, Zhejiang Normal University, and the University of York. Unlike traditional pooling methods, ENADPool employs hard clustering and attention mechanisms to compress node features and edge strengths, addressing issues associated with uniform aggregation.

The ENADPool process involves three steps:

- Hard Node Assignment: ENADPool performs hard clustering to assign nodes to unique clusters based on their similarity. This step ensures that nodes with similar characteristics are grouped together, preserving local structural information.

- Node-based Attention: ENADPool calculates the importance of each node within its assigned cluster using attention mechanisms. This attention mechanism allows the model to focus on the most informative nodes and assign higher weights to them during the aggregation process.

- Edge-based Attention: ENADPool also applies attention to edges, weighting the connection strengths between nodes, and hence between clusters, when it compresses the adjacency matrix. By incorporating edge-based attention, the model can capture the importance of inter-node relationships, further enhancing the representation power of the pooled graph.
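The first two steps can be illustrated with a small plain-Python sketch, assuming the assignment scores and node-attention scores are already given (in ENADPool they are learned; here they are plain inputs). `hard_assign` picks one cluster per node by argmax, and `node_attention_pool` softmax-normalizes attention scores only among nodes of the same cluster before summing their features. Both function names are hypothetical, and this is an illustrative approximation rather than the authors' implementation.

```python
import math


def hard_assign(assign_scores):
    """Give each node exactly one cluster: the argmax of its row of scores."""
    return [max(range(len(row)), key=lambda k: row[k]) for row in assign_scores]


def node_attention_pool(features, assignment, att_scores, num_clusters):
    """Sum member features per cluster, weighted by a softmax taken within the cluster."""
    dim = len(features[0])
    pooled = [[0.0] * dim for _ in range(num_clusters)]
    weights = [0.0] * len(features)
    for c in range(num_clusters):
        members = [i for i, a in enumerate(assignment) if a == c]
        if not members:
            continue  # an empty cluster keeps a zero feature vector
        m = max(att_scores[i] for i in members)
        exps = {i: math.exp(att_scores[i] - m) for i in members}
        total = sum(exps.values())
        for i in members:
            weights[i] = exps[i] / total
            for d in range(dim):
                pooled[c][d] += weights[i] * features[i][d]
    return pooled, weights


# Hypothetical inputs: 4 nodes, 2 clusters, 2-dimensional features.
assign_scores = [[2.0, 0.0], [1.5, 0.2], [0.0, 3.0], [0.1, 0.9]]
X = [[1.0, 0.0], [3.0, 0.0], [0.0, 2.0], [0.0, 4.0]]
assignment = hard_assign(assign_scores)  # [0, 0, 1, 1]
pooled, w = node_attention_pool(X, assignment, [1.0, 0.0, 0.0, 0.0], 2)
print(pooled, w)
```

Because the softmax runs inside each cluster, a higher score for node 0 pulls cluster 0's pooled feature toward node 0 without affecting cluster 1.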

The combination of hard clustering, node-based attention, and edge-based attention in ENADPool leads to weighted compressed node features and adjacency matrices, effectively capturing the structural details of the graph.
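The compressed adjacency matrix can be sketched the same way. With a one-hot (hard) assignment matrix S, the common coarsening S^T A S simply adds up edge weights between every pair of clusters. In the sketch below those edge weights are first rescaled by a hypothetical edge attention that softmax-normalizes edge scores over each node's neighbors. The scoring and normalization scheme are assumptions for illustration; ENADPool's exact formulation may differ.

```python
import math


def edge_attention(adj, edge_scores):
    """Rescale each existing edge (i, j) by a softmax of edge_scores over i's neighbors."""
    n = len(adj)
    att = [[0.0] * n for _ in range(n)]
    for i in range(n):
        nbrs = [j for j in range(n) if adj[i][j] != 0]
        if not nbrs:
            continue  # isolated node: no outgoing edges to weight
        m = max(edge_scores[i][j] for j in nbrs)
        exps = {j: math.exp(edge_scores[i][j] - m) for j in nbrs}
        total = sum(exps.values())
        for j in nbrs:
            att[i][j] = adj[i][j] * exps[j] / total
    return att


def coarsen_adjacency(weighted_adj, assignment, num_clusters):
    """Equivalent to S^T W S for a one-hot S: add every edge weight to its cluster pair."""
    coarse = [[0.0] * num_clusters for _ in range(num_clusters)]
    for i, row in enumerate(weighted_adj):
        for j, w in enumerate(row):
            coarse[assignment[i]][assignment[j]] += w
    return coarse


# Hypothetical path graph 0-1-2-3, clusters {0, 1} and {2, 3}, uniform edge scores.
A = [[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]]
scores = [[0.0] * 4 for _ in range(4)]
W = edge_attention(A, scores)
print(coarsen_adjacency(W, [0, 0, 1, 1], 2))  # [[1.5, 0.5], [0.5, 1.5]]
```

The diagonal of the coarse matrix collects edges inside each cluster, and the off-diagonal entries collect the attention-weighted edges between clusters.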

Multi-Distance Graph Neural Networks (MD-GNNs)

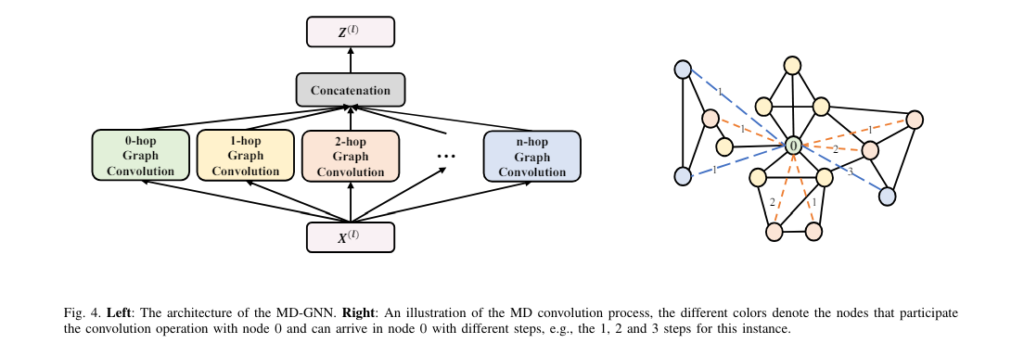

Over-smoothing is a common issue in GNNs, especially when multiple aggregation layers are used. To address this problem, researchers have introduced MD-GNNs, which allow nodes to receive information from neighbors at different distances.

MD-GNNs mitigate over-smoothing by aggregating node information at various distances, capturing comprehensive structural details. This approach enables the model to retain both local and global information, thereby enhancing the effectiveness of the pooling method.

The MD-GNN model reconstructs the graph topology by considering multiple distances between nodes. By incorporating information from different distances, MD-GNNs can capture fine-grained details while maintaining a broader perspective of the graph structure. This multi-distance approach helps keep node representations from being over-smoothed, leading to improved graph classification performance.
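A minimal sketch of multi-distance aggregation, under stated assumptions: shortest-path distances come from breadth-first search, node j feeds node i's "distance-k" channel when their shortest-path distance is exactly k, and the per-distance means are combined with fixed coefficients `alphas` (a trained model would presumably learn such weights). The function names and the combination rule are hypothetical stand-ins, not the MD-GNN definition from the paper.

```python
from collections import deque


def bfs_distances(adj_list, source):
    """Shortest-path (hop) distance from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj_list[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def multi_distance_aggregate(adj_list, h, alphas):
    """h_i' = h_i + sum_k alphas[k-1] * mean{h_j : dist(i, j) == k}, k = 1..len(alphas)."""
    out = []
    for i in range(len(adj_list)):
        dist = bfs_distances(adj_list, i)
        agg = h[i]
        for k, alpha in enumerate(alphas, start=1):
            ring = [h[j] for j, d in dist.items() if d == k]
            if ring:  # skip distances with no nodes (e.g. beyond the graph's diameter)
                agg += alpha * sum(ring) / len(ring)
        out.append(agg)
    return out


# Hypothetical path graph 0-1-2-3 with scalar features and two distance channels.
adj_list = [[1], [0, 2], [1, 3], [2]]
h = [1.0, 2.0, 3.0, 4.0]
print(multi_distance_aggregate(adj_list, h, alphas=[0.5, 0.25]))  # [2.75, 4.0, 4.75, 6.0]
```

Keeping each distance as a separate channel lets a single layer see 2-hop neighbors directly, instead of reaching them only by stacking more averaging layers, which is what drives over-smoothing.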

Advantages and Evaluation

The combination of ENADPool and MD-GNNs offers several advantages over traditional pooling methods. By using hard clustering and attention mechanisms, ENADPool assigns nodes to unique clusters based on their characteristics, capturing local structural details with improved interpretability. The incorporation of edge-based attention further enhances the representation power of the pooled graph.

MD-GNNs address the over-smoothing issue by enabling nodes to receive information from neighbors at multiple distances, striking a balance between local and global information. This approach helps the model retain important structural features without losing discriminative power.

To evaluate the performance of ENADPool and MD-GNNs, researchers compared them against other graph deep learning methods on benchmark datasets including D&D, PROTEINS, NCI1/NCI109, FRANKENSTEIN, and REDDIT-B. The experiments used 10-fold cross-validation and report the mean classification accuracy together with its standard deviation across folds.
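The bookkeeping behind "accuracy and standard deviation over 10 folds" can be sketched as follows: shuffle the graph indices, deal them into 10 folds, use each fold once as the test set, and summarize the per-fold accuracies. This sketch uses a plain (non-stratified) split and the population standard deviation; both are assumptions, since the article does not specify them, and the training step itself is omitted.

```python
import random
import statistics


def k_fold_indices(n, k=10, seed=0):
    """Shuffle indices 0..n-1 and deal them into k folds whose sizes differ by at most 1."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[f::k] for f in range(k)]


def train_test_splits(folds):
    """Yield (train, test) index lists, using each fold once as the test set."""
    for f, test in enumerate(folds):
        train = [i for g, fold in enumerate(folds) if g != f for i in fold]
        yield train, test


def summarize(fold_accuracies):
    """Mean accuracy and population standard deviation across folds."""
    return statistics.mean(fold_accuracies), statistics.pstdev(fold_accuracies)


folds = k_fold_indices(n=25, k=10)
for train, test in train_test_splits(folds):
    print(len(train), len(test))  # training on `train` and scoring `test` is omitted
```

The ten accuracies measured on the test folds would then be passed to `summarize`, which returns the mean and standard deviation that such result tables report.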

The results showed that the combination of ENADPool and MD-GNNs outperformed other state-of-the-art graph deep learning methods on these benchmarks. The architecture uses two pooling layers, with MD-GNNs producing both the node embeddings and the node assignments; training uses ReLU activations, dropout regularization, and auxiliary classifiers. The effectiveness of the approach can be attributed to its ability to address uniform feature aggregation, over-smoothing, and lack of interpretability.

Conclusion

Graph classification is a challenging task that requires effective graph pooling techniques. Traditional pooling methods often fail to capture important structural details and suffer from over-smoothing issues. However, recent advancements in GNNs have led to the development of innovative techniques like ENADPool and MD-GNNs.

ENADPool leverages hard clustering and attention mechanisms to compress node features and edge connectivity, effectively capturing local structural information. MD-GNNs, on the other hand, allow nodes to receive information from neighbors at different distances, addressing the over-smoothing problem.

The combination of ENADPool and MD-GNNs offers a powerful solution for enhancing graph classification, providing interpretable representations and improved performance. These techniques have the potential to revolutionize the field of graph analysis and enable applications in various domains, including social network analysis, bioinformatics, and recommendation systems.

Whether it’s analyzing complex social networks or understanding intricate molecular structures, the integration of ENADPool and MD-GNNs opens up new possibilities for graph classification and paves the way for more accurate and interpretable models.

Check out the Paper. All credit for this research goes to the researchers of this project.