AI Breakthrough: Rational Transfer Function (RTF) Advances Sequence Modeling Efficiency

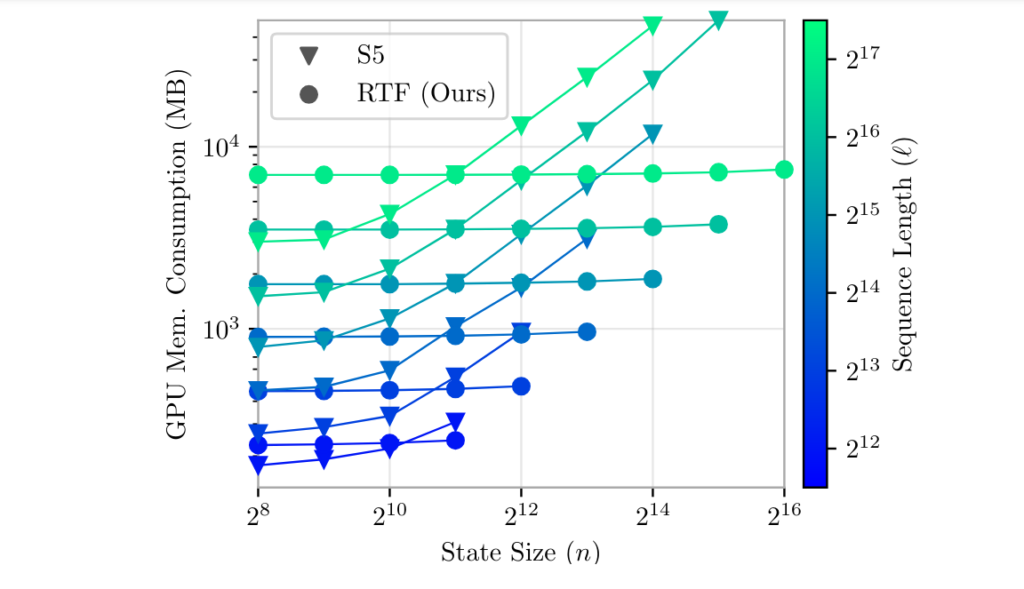

State-space models (SSMs) have long been a fundamental framework in deep learning for sequence modeling. These models are widely used in various fields such as signal processing, control systems, and natural language processing. However, existing SSMs suffer from inefficiencies in terms of memory usage and computational costs, especially as the state grows larger. This limits their scalability and performance in large-scale applications.

To address these challenges, researchers from Liquid AI, the University of Tokyo, RIKEN, Stanford University, and MIT have introduced a groundbreaking approach called the Rational Transfer Function (RTF) in their recent AI paper. This approach leverages transfer functions and Fast Fourier Transform (FFT) techniques to advance sequence modeling and overcome the limitations of traditional SSMs.

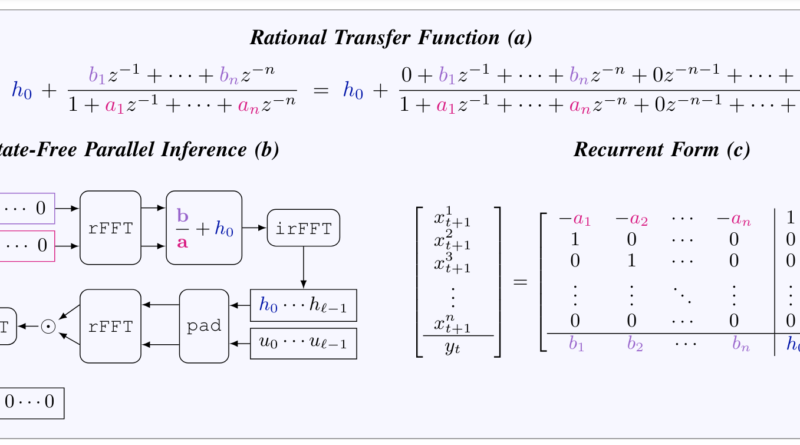

The key innovation of the RTF approach lies in its state-free design: the model is parametrized by the coefficients of a rational transfer function, so parallel inference never has to materialize a memory-intensive state-space representation. Instead, the RTF approach uses a single FFT to compute the convolutional kernel’s spectrum directly from those coefficients, so memory and compute costs do not grow significantly with state size, which improves computational speed and scalability.
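To make the FFT idea concrete, here is a minimal NumPy sketch, not the authors' implementation. It assumes a single-channel transfer function H(z) = (b_0 + b_1 z^-1 + ... + b_n z^-n) / (1 + a_1 z^-1 + ... + a_n z^-n); the function names (`rtf_kernel`, `fft_causal_conv`, `recurrent_reference`) and the example coefficients are hypothetical. It also assumes the filter is stable and its impulse response decays well within the FFT length, so the wrap-around (aliasing) of the infinite response is negligible.

```python
import numpy as np

def rtf_kernel(b, a, length):
    """Approximate the first `length` impulse-response taps of H(z) = B(z)/A(z).

    Assumption: the impulse response decays well within 2*length samples,
    so the aliasing introduced by sampling the spectrum is negligible.
    """
    n_fft = 2 * length
    num = np.fft.rfft(b, n=n_fft)  # numerator evaluated at roots of unity
    den = np.fft.rfft(a, n=n_fft)  # denominator evaluated at roots of unity
    return np.fft.irfft(num / den, n=n_fft)[:length]

def fft_causal_conv(u, h):
    """Causal convolution of input u with kernel h, zero-padded to avoid wrap-around."""
    n_fft = 2 * len(u)
    spectrum = np.fft.rfft(u, n=n_fft) * np.fft.rfft(h, n=n_fft)
    return np.fft.irfft(spectrum, n=n_fft)[:len(u)]

def recurrent_reference(u, b, a):
    """Step-by-step difference equation, used only to check the FFT path."""
    y = np.zeros(len(u))
    for t in range(len(u)):
        acc = sum(b[k] * u[t - k] for k in range(len(b)) if t - k >= 0)
        acc -= sum(a[k] * y[t - k] for k in range(1, len(a)) if t - k >= 0)
        y[t] = acc
    return y

# Hypothetical coefficients; the poles are 0.3 and 0.2, so the filter is stable.
b = np.array([1.0, 0.5, 0.0])
a = np.array([1.0, -0.5, 0.06])

rng = np.random.default_rng(0)
u = rng.standard_normal(64)

y_fft = fft_causal_conv(u, rtf_kernel(b, a, len(u)))
y_rec = recurrent_reference(u, b, a)
print("max abs difference:", np.max(np.abs(y_fft - y_rec)))
```

Note that the cost of `rtf_kernel` depends on the sequence length through the FFT size, while the number of coefficients only affects the zero-padding. No state vector is ever built along the way.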

In their study, the researchers tested the RTF model using the Long Range Arena (LRA) benchmark, which includes various tasks such as ListOps for mathematical expressions, IMDB for sentiment analysis, and Pathfinder for visuospatial tasks. Synthetic tasks like Copying and Delay were also used to evaluate the model’s memorization capabilities.

The results of the experiments demonstrated the significant improvements achieved by the RTF model across multiple benchmarks. In terms of training speed, the RTF model outperformed traditional SSMs such as S4 and S4D by 35% on the Long Range Arena benchmark. Additionally, the model improved classification accuracy by 3% in the IMDB sentiment analysis task, 2% in the ListOps task, and 4% in the Pathfinder task. In synthetic tasks like Copying and Delay, the RTF model showed better memorization capabilities, reducing error rates by 15% and 20% respectively.

These results highlight the efficiency and effectiveness of the RTF approach in handling long-range dependencies and improving the performance of sequence modeling tasks. The RTF model offers a robust solution for deep learning and signal processing applications, enabling scalable and effective sequence modeling.

The introduction of the RTF approach builds upon existing advancements in the field of sequence modeling. Previous frameworks like S4 and S4D have used structured state matrices to manage complexity (diagonal plus low-rank in S4, purely diagonal in S4D), while Transformers have revolutionized sequence modeling with self-attention mechanisms. Hyena, another notable framework, incorporates long convolutional filters for capturing long-range dependencies.
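The relationship between a diagonal state-space model and a rational transfer function can be illustrated with a small NumPy sketch. It relies on the standard identity H(z) = d + sum_i c_i b_i z^-1 / (1 - lambda_i z^-1) for a discrete-time diagonal SSM. Everything here is an assumption made for illustration: the helper names, the real-valued poles, and the example values are hypothetical and do not reflect how S4D or the RTF paper parametrize their models.

```python
import numpy as np

def diagonal_ssm_kernel(lam, b_in, c_out, d, length):
    """State-space view: h[0] = d, h[t] = sum_i c_i * lam_i**(t-1) * b_i for t >= 1.

    Materializes a (length-1, n) array, so the cost grows with the state size n.
    """
    powers = lam[None, :] ** np.arange(length - 1)[:, None]
    tail = powers @ (c_out * b_in)
    return np.concatenate(([d], tail))

def diagonal_ssm_to_rational(lam, b_in, c_out, d):
    """Convert the diagonal SSM to numerator/denominator coefficients in powers of z^-1."""
    den = np.poly(lam)  # [1, a_1, ..., a_n], i.e. prod_i (1 - lam_i z^-1)
    num = d * den
    for i in range(len(lam)):
        # prod_{j != i} (1 - lam_j z^-1), shifted by one power of z^-1
        partial = np.poly(np.delete(lam, i))
        num[1:] += c_out[i] * b_in[i] * partial
    return num, den

# Hypothetical 3-state system with stable, real poles.
lam = np.array([0.5, -0.3, 0.2])
b_in = np.array([1.0, 2.0, -1.0])
c_out = np.array([0.5, 0.25, 1.0])
d = 0.1
length = 64

h_state = diagonal_ssm_kernel(lam, b_in, c_out, d, length)

# Transfer-function view: the kernel spectrum comes from FFTs of the coefficients.
# Assumes the impulse response decays well within the FFT length.
num, den = diagonal_ssm_to_rational(lam, b_in, c_out, d)
n_fft = 2 * length
h_rational = np.fft.irfft(np.fft.rfft(num, n=n_fft) / np.fft.rfft(den, n=n_fft), n=n_fft)[:length]

print("max abs difference:", np.max(np.abs(h_state - h_rational)))
```

Both routes describe the same filter. The difference is that the state-space route builds an array whose size scales with the number of states, while the transfer-function route only pads two short coefficient vectors before the FFT.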

The RTF approach stands out among these by combining transfer functions with FFT techniques to achieve state-free sequence modeling. Because the convolutional kernel is obtained in the frequency domain rather than from a materialized state, parallel inference stays efficient even as the state size grows.

In conclusion, the AI paper introducing the Rational Transfer Function (RTF) approach brings significant advancements to sequence modeling. The state-free design, leveraging transfer functions and FFT techniques, addresses the inefficiencies of traditional SSMs and offers a robust solution for scalable and effective sequence modeling. The RTF model’s impressive performance across multiple benchmarks demonstrates its capability to handle long-range dependencies efficiently. This breakthrough has far-reaching implications for deep learning and signal processing applications, opening up new possibilities for the advancement of AI-powered technologies.

All credit for this research goes to the researchers of this project.