Revolutionizing Machine Translation with ALMA-R: A Game-Changing Approach

Machine translation has made significant strides in recent years, enabling people around the world to communicate and understand each other more easily. However, achieving near-perfect translations remains a challenge. Most systems are trained with supervised fine-tuning on large parallel datasets, so the quality of their output is capped by the quality of the reference translations they learn to imitate.

In a recent paper, researchers from Johns Hopkins and Microsoft present ALMA-R, a comparatively small LLM that matches or surpasses the highly acclaimed GPT-4 on translation benchmarks. The training method behind it, called Contrastive Preference Optimization (CPO), pushes the boundaries of translation quality and opens up new possibilities for language models.

The Limitations of Traditional Machine Translation Methods

Traditional machine translation methods have relied on large datasets and supervised fine-tuning to improve translation quality. While these techniques have been effective to some extent, they often fall short of producing near-perfect translations. The reason is that supervised fine-tuning teaches a model to reproduce its training references, so adding more data of the same quality does not by itself raise that ceiling.

One of the key limitations of traditional methods is that they align model outputs with gold-standard references, which are themselves not always flawless. The model learns to produce translations that are adequate rather than near-perfect, so output quality is constrained and can lack the fluency and accuracy required for natural and meaningful communication.

Introducing Contrastive Preference Optimization (CPO)

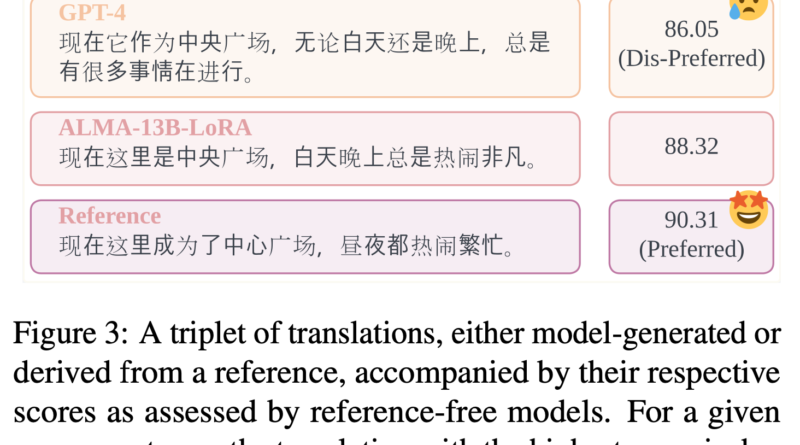

To overcome the limitations of traditional machine translation methods, researchers from Johns Hopkins and Microsoft have introduced Contrastive Preference Optimization (CPO). This game-changing approach diverges from supervised fine-tuning by training models to distinguish between “adequate” and “near-perfect” translations.

CPO employs a contrastive learning strategy that utilizes hard negative examples, challenging the model to generate superior translations while learning to reject high-quality but not flawless ones. By focusing on the quality of translations rather than the quantity of training data, CPO aims to achieve unparalleled translation accuracy.
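The idea of hard negatives can be illustrated with a minimal sketch of how one preference pair might be assembled. This is not the authors' pipeline: the `PreferencePair` type, the `build_preference_pair` helper, and the quality scores are all hypothetical, and the sketch simply assumes that several strong candidate translations of the same source have already been scored by some quality metric.

```python
from dataclasses import dataclass


@dataclass
class PreferencePair:
    """One training example: a source with a better and a worse translation."""
    source: str
    preferred: str
    rejected: str


def build_preference_pair(source, scored_candidates):
    """Pick the best and worst candidate as (preferred, rejected).

    scored_candidates is a list of (translation, quality_score) tuples.
    Assumption: every candidate is already a strong translation, so the
    lowest-scoring one acts as a "hard negative" (good, but not the best).
    """
    if len(scored_candidates) < 2:
        raise ValueError("need at least two candidates to form a pair")
    ranked = sorted(scored_candidates, key=lambda item: item[1])
    worst_text, _ = ranked[0]
    best_text, _ = ranked[-1]
    return PreferencePair(source=source, preferred=best_text, rejected=worst_text)


# Toy example with made-up scores (hypothetical values, for illustration only).
candidates = [
    ("The weather is lovely today.", 0.93),
    ("The weather is nice today.", 0.90),
    ("Today the weather is being nice.", 0.86),
]
pair = build_preference_pair("Das Wetter ist heute schön.", candidates)
print(pair.preferred)
print(pair.rejected)
```

The point of the sketch is that the rejected translation is not a bad one. It is only slightly worse than the preferred one, which is what makes it a useful negative example.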

The Mechanics of CPO: A Shift in Training Methodologies

The mechanics of CPO mark a shift in training methodologies for machine translation. Instead of only minimizing cross-entropy loss against a single reference, CPO trains the model to prefer a better translation over a worse one for the same source sentence. The objective described in the paper still keeps a likelihood term on the preferred translation, so the model is pulled toward the better output while being pushed away from the weaker one.
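For readers who want the shape of the objective, here is a sketch of the loss as we understand it from the CPO paper. The notation is ours: \(x\) is the source sentence, \(y_w\) the preferred translation, \(y_l\) the less-preferred one, \(\pi_\theta\) the model, \(\sigma\) the logistic function, and \(\beta\) a scaling hyperparameter. Note that the objective combines a preference term with a likelihood term on the preferred translation, so cross-entropy is supplemented rather than dropped entirely.

```latex
\[
\mathcal{L}_{\text{CPO}}(\theta)
= \underbrace{-\,\mathbb{E}_{(x,\,y_w,\,y_l)}
  \Big[\log \sigma\big(\beta \log \pi_\theta(y_w \mid x)
  - \beta \log \pi_\theta(y_l \mid x)\big)\Big]}_{\text{preference term}}
\;\underbrace{-\,\mathbb{E}_{(x,\,y_w)}
  \big[\log \pi_\theta(y_w \mid x)\big]}_{\text{likelihood term}}
\]
```

The preference term shrinks as the model assigns more probability to \(y_w\) than to \(y_l\), and the likelihood term keeps the model from drifting away from the preferred translations.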

Contrastive learning, a key aspect of CPO, involves presenting the model with hard negative examples. These examples challenge the model to differentiate between translations that are merely adequate and those that are near-perfect. By learning from these examples, the model gains a deeper understanding of what constitutes high-quality translations.
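To make this concrete, the following is a minimal sketch of a CPO-style loss for a single preference pair, written in plain Python rather than a deep learning framework. It assumes you already have the model's total log-probability for the preferred and the rejected translation. The function names and the default `beta=0.1` are our own illustrative choices, not the authors' code.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def cpo_style_loss(logp_preferred, logp_rejected, beta=0.1):
    """Sketch of a CPO-style loss for one (preferred, rejected) pair.

    logp_preferred / logp_rejected: the model's log-probability of each
    translation given the same source sentence (sum over tokens).
    beta: scaling factor on the log-probability gap (assumed value).
    """
    # Preference term: small when the preferred translation is more likely.
    prefer_term = -log_sigmoid(beta * (logp_preferred - logp_rejected))
    # Likelihood term: ordinary negative log-likelihood of the preferred output.
    nll_term = -logp_preferred
    return prefer_term + nll_term


# With the preferred log-probability held fixed, a wider gap to the
# rejected translation gives a lower loss.
wide_gap = cpo_style_loss(-5.0, -9.0)
narrow_gap = cpo_style_loss(-5.0, -6.0)
print(wide_gap < narrow_gap)
```

In a real training loop the two log-probabilities would come from a forward pass of the model over both translations, and the loss would be averaged over a batch.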

The Remarkable Results of ALMA-R

Implementing CPO with ALMA models has yielded remarkable results. The enhanced model, ALMA-R, has demonstrated performance that matches or surpasses that of leading models in the field, including GPT-4. What makes ALMA-R notable is that it achieves this with a much smaller model and a modest training budget: the ALMA models it builds on have 7B or 13B parameters, and the paper reports that the CPO stage used only about 22K parallel sentences.

According to the paper, ALMA-R matches or exceeds both GPT-4 and the WMT competition winners on the WMT'21, WMT'22, and WMT'23 test sets. These results showcase the potential of CPO in machine translation and challenge the assumption that supervised fine-tuning on references alone is the best way to train translation models.

The Potential of CPO in Machine Translation

The introduction of Contrastive Preference Optimization marks a significant advancement in the field of neural machine translation. By focusing on the quality of translations rather than the quantity of training data, CPO offers a new direction for improving language models.

CPO has the potential to improve machine translation by moving models closer to near-perfect output. This has implications not only for everyday communication but also for industries such as e-commerce, customer support, and content localization. With higher-quality translations, businesses can engage with customers around the world more effectively and create meaningful connections across language barriers.

Future Research and Development

The groundbreaking AI paper from Johns Hopkins and Microsoft opens up exciting possibilities for future research and development in the field of machine translation. The success of ALMA-R and CPO encourages further exploration of innovative training methodologies that prioritize translation quality.

Researchers can build upon the findings of this paper to refine and improve machine translation models. By continuing to push the boundaries of translation accuracy and quality, the field can make significant progress in bridging language gaps and facilitating global communication.

In conclusion, the paper from Johns Hopkins and Microsoft advances machine translation with ALMA-R and Contrastive Preference Optimization (CPO). The approach challenges traditional training methodologies by teaching a model to prefer better translations over merely adequate ones. ALMA-R matches or surpasses leading models like GPT-4 while remaining far smaller and cheaper to train. CPO opens up new possibilities for more efficient and accurate language models and paves the way for future advancements in the field.
