Revolutionizing Uncertainty Quantification in Deep Neural Networks Using Cycle Consistency

Deep neural networks have revolutionized the field of artificial intelligence, enabling breakthroughs in various domains such as computer vision, natural language processing, and data mining. These powerful models have the potential to make accurate predictions and solve complex problems. However, they often cannot quantify and account for uncertainties in their predictions. This limitation hinders their reliability in real-world applications and can lead to unexpected outcomes.

To address this challenge, researchers at the University of California, Los Angeles (UCLA), have proposed a new approach to uncertainty quantification in deep neural networks that is based on cycle consistency. The technique is designed to make deep learning models more reliable by helping them handle anomalous data and identify distribution shifts.

The Need for Uncertainty Quantification in Deep Neural Networks

Deep neural networks are characterized by their ability to learn complex patterns and make predictions based on a large amount of training data. However, they often struggle when encountering data that is significantly different from what they were trained on. This can lead to unreliable predictions and potentially catastrophic consequences in critical applications such as healthcare, finance, and autonomous driving.

Uncertainty quantification (UQ) plays a crucial role in addressing these challenges. By providing a measure of confidence in the model’s predictions, UQ helps identify out-of-distribution data, detect data corruption, and highlight potential issues in the model’s performance. It helps decision-makers understand the reliability of the predictions and make informed decisions based on the level of uncertainty.

Introducing Cycle Consistency for Uncertainty Quantification

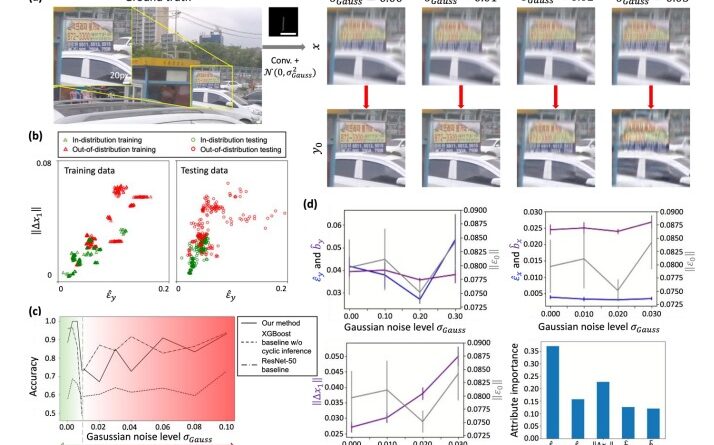

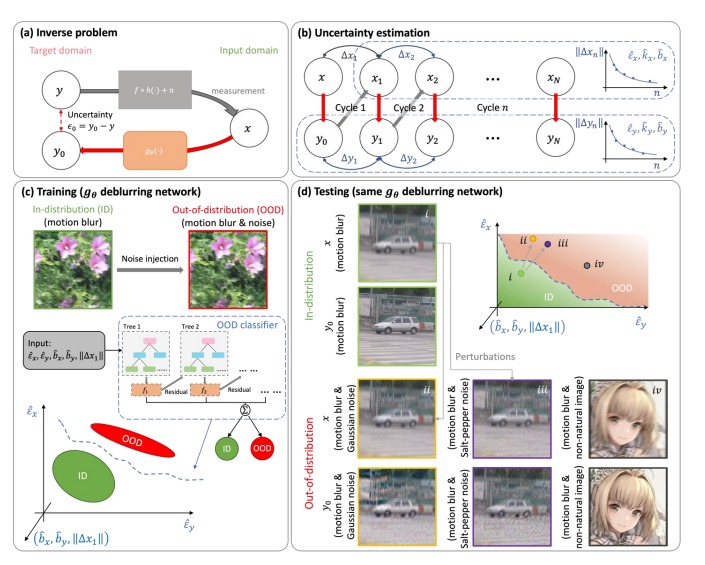

The researchers at UCLA have proposed a method that uses cycle consistency for uncertainty quantification in deep neural networks. This approach combines a physical forward model with an iteratively trained neural network to estimate the uncertainty of the network’s outputs.

The core idea behind cycle consistency is to execute forward-backward cycles using the forward model and the neural network. By iteratively cycling between input and output data, the method lets uncertainty accumulate across cycles so that it can be estimated. This approach allows for the quantification of uncertainty even when the ground truth is unknown.
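
The forward-backward cycling described above can be sketched roughly as follows. This is a minimal illustration, not the researchers' implementation: `forward_model` (average-pool downsampling) and `stand_in_network` (a crude upsampler with a deliberate error) are hypothetical placeholders for the physical forward model and the trained network, and the per-cycle difference norm is only one assumed way to measure how consistent the cycles are.

```python
import numpy as np

def forward_model(x, factor=2):
    """Hypothetical physical forward model: average-pool downsampling."""
    h, w = x.shape
    return x.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))

def stand_in_network(y, factor=2):
    """Placeholder for a trained inverse network (assumption, not a real model):
    nearest-neighbour upsampling with a small bias toward the image mean,
    so that the cycle is not perfectly consistent."""
    up = np.repeat(np.repeat(y, factor, axis=0), factor, axis=1)
    return 0.9 * up + 0.1 * up.mean()

def cycle_consistency(y, network, forward, n_cycles=5):
    """Run forward-backward cycles and record how far each cycle's
    network output moves from the previous cycle's output."""
    x_prev = network(y)
    diffs = []
    for _ in range(n_cycles):
        y_next = forward(x_prev)      # back to the measurement domain
        x_next = network(y_next)      # re-estimate from the cycled measurement
        diffs.append(float(np.linalg.norm(x_next - x_prev)))
        x_prev = x_next
    return diffs

rng = np.random.default_rng(0)
y = rng.random((8, 8))                # synthetic low-resolution measurement
diffs = cycle_consistency(y, stand_in_network, forward_model)
print(diffs)
```

Note that no ground-truth image appears anywhere in the loop: the per-cycle differences are computed from the measurement alone, which is what makes this kind of estimate usable when the ground truth is unknown.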

To establish the link between cycle consistency and output uncertainty, the researchers derived upper and lower limits for cycle consistency. These limits provide a quantitative measure of the network’s uncertainty and enable the identification of potential issues or data distribution shifts. By setting these limits, the researchers have paved the way for more reliable uncertainty quantification in deep neural networks.

Enhancing Neural Network Reliability in Inverse Imaging Problems

One of the key applications of this cycle consistency-based uncertainty quantification is in inverse imaging problems. Inverse imaging refers to tasks such as image denoising and super-resolution imaging, where high-quality images are generated from raw or noisy data. These tasks often rely on deep neural networks to produce accurate and visually appealing results.

However, deep neural networks are prone to uncertainties in inverse imaging problems. The cycle consistency-based UQ method developed by UCLA researchers addresses this issue by providing robust uncertainty estimates and detecting data distribution mismatches. This enhances the reliability of neural networks in inverse imaging tasks and improves the overall quality of the generated images.

Detecting Out-of-Distribution Images through Cycle Consistency

Another significant application of the cycle consistency-based UQ method is in the identification of out-of-distribution (OOD) images. OOD images refer to data points that significantly differ from the distribution of the training data. These images can lead to unpredictable outcomes and pose a challenge for deep neural networks.

To tackle this problem, the researchers conducted experiments using different categories of low-resolution images, including anime, microscopy, and human faces. They trained a separate super-resolution neural network for each image category and evaluated the networks using cycle consistency-based uncertainty quantification.

In these experiments, the machine learning model developed by the researchers successfully identified OOD instances when a trained network was applied to images from a category other than the one it was trained on. This capability is crucial in real-world applications where the identification of OOD images is essential for reliable decision-making and accurate predictions.
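
To make the OOD idea concrete, here is a deliberately simplified sketch. The article does not describe the researchers' detection model, so this swaps in a plain quantile threshold on a scalar cycle-consistency score. The function names `fit_threshold` and `flag_ood`, the 0.95 quantile, and all score values are made-up assumptions for illustration, not experimental results.

```python
import numpy as np

def fit_threshold(in_dist_scores, quantile=0.95):
    """Pick a cutoff from scores measured on in-distribution images
    (hypothetical calibration step)."""
    return float(np.quantile(in_dist_scores, quantile))

def flag_ood(scores, threshold):
    """Flag an image as out-of-distribution when its cycle-consistency
    score exceeds the calibrated threshold."""
    return np.asarray(scores) > threshold

# Synthetic, made-up scores: larger means less cycle-consistent.
in_dist = np.array([0.10, 0.12, 0.09, 0.11, 0.13, 0.10, 0.12, 0.11])
threshold = fit_threshold(in_dist)

new_scores = np.array([0.11, 0.45, 0.10, 0.60])
flags = flag_ood(new_scores, threshold)
print(flags.tolist())
```

In a setup like the one described, the in-distribution scores would come from images of the category a network was trained on, and the new scores from images of another category.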

The Impact and Future Directions

The cycle consistency-based uncertainty quantification method developed by the researchers at UCLA has the potential to revolutionize the field of deep learning. By providing robust uncertainty estimates and detecting distribution shifts, this method enhances the reliability and trustworthiness of deep neural networks.

The applications of this technique extend beyond inverse imaging problems. Uncertainty quantification is crucial in various domains, including healthcare, finance, and autonomous systems. By accurately quantifying uncertainties, deep neural networks can be deployed more confidently in real-world applications, leading to safer and more reliable systems.

Future research directions could focus on further improving the computational efficiency of the cycle consistency-based uncertainty quantification method. Additionally, exploring its applicability in different domains and addressing specific challenges in those areas would be valuable.

In conclusion, uncertainty quantification is critical to the reliability and trustworthiness of deep neural networks. The cycle consistency-based UQ method developed by researchers at UCLA represents a significant step forward in addressing this challenge. By leveraging cycle consistency and robust uncertainty estimation, deep neural networks can be trusted more and deployed with confidence in a wide range of applications.

Check out the Paper. All credit for this research goes to the researchers of this project.