OA-CNNs: Enhancing the Adaptivity of Sparse Convolutional Neural Networks at Minimal Computational Cost

The field of computer vision has made significant strides in recent years, with Convolutional Neural Networks (CNNs) playing a pivotal role in enabling machines to understand and interpret visual data. CNNs have proven to be highly effective in various computer vision tasks, including image classification, object detection, and semantic segmentation. However, when it comes to processing 3D point clouds, CNNs face unique challenges due to the irregular and scattered nature of the data.

To address these challenges, researchers have developed a family of networks called OA-CNNs (Omni-Adaptive Convolutional Neural Networks). OA-CNNs integrate a lightweight module that greatly enhances the adaptivity of sparse CNNs while minimizing computational cost. This article explores the concept of OA-CNNs and their impact on the performance of sparse CNNs.

Sparse Convolutional Neural Networks (CNNs)

Before delving into OA-CNNs, let’s first understand why 3D point clouds are difficult for standard CNNs and how sparse CNNs approach the problem. Unlike traditional 2D images, 3D point clouds are irregular and do not have a structured grid-like arrangement of pixels. This irregularity makes it hard to apply standard CNN architectures, which are designed for grid-like data structures.

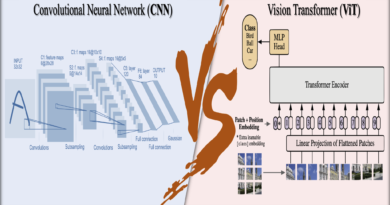

Sparse CNNs address this challenge by converting irregular point clouds into voxels during data preprocessing, which gives the data a regular local structure that convolutions can exploit. However, sparse CNNs often exhibit inferior accuracy compared to their transformer-based counterparts, particularly in 3D scene semantic segmentation tasks. The researchers behind OA-CNNs aimed to understand the underlying reasons for this performance gap and develop a solution to enhance the adaptivity of sparse CNNs.
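To make the voxelization step concrete, here is a minimal NumPy sketch. It is not taken from the OA-CNN code: the function name `voxelize`, the `voxel_size` value, and the sample points are illustrative assumptions. It only shows the general idea of snapping scattered points onto an integer grid and keeping the occupied cells.

```python
import numpy as np

def voxelize(points, voxel_size):
    """Map an (N, 3) array of xyz points to integer voxel coordinates.

    Returns the unique occupied voxel coordinates and, for each point,
    the index of the voxel it falls into. (Hypothetical helper.)
    """
    # Snap every point to the integer cell that contains it.
    coords = np.floor(points / voxel_size).astype(np.int64)
    # Keep each occupied cell once; empty cells are never materialized.
    voxels, point_to_voxel = np.unique(coords, axis=0, return_inverse=True)
    return voxels, point_to_voxel.reshape(-1)

# Made-up sample points: the first two share a voxel, the others do not.
points = np.array([
    [0.02, 0.01, 0.03],
    [0.04, 0.03, 0.01],
    [0.26, 0.01, 0.02],
    [1.01, 1.02, 1.03],
])
voxels, point_to_voxel = voxelize(points, voxel_size=0.05)
print(voxels)
print(point_to_voxel)
```

Only occupied voxels are stored, which is why the representation stays compact even when the scene is mostly empty space.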

The Key to Adaptivity: Dynamic Receptive Fields and Adaptive Relation Mapping

The researchers identified adaptivity as the key factor that differentiates sparse CNNs from point transformers. While point transformers can flexibly adapt to individual contexts, sparse CNNs typically rely on static perception, limiting their ability to capture nuanced information across diverse scenes. To bridge this gap, the researchers proposed OA-CNNs, which incorporate dynamic receptive fields and adaptive relation mapping.

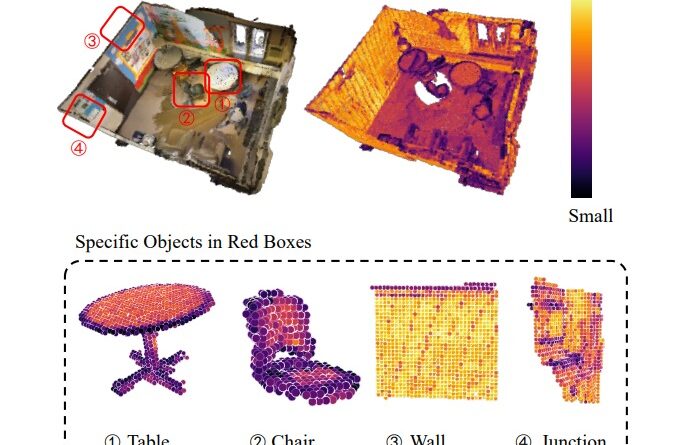

One of the key innovations of OA-CNNs is the adaptation of receptive fields via attention mechanisms. Different parts of a 3D scene have different geometric structures and appearances, so the network lets each region use a receptive field size that suits it, which helps it capture more nuanced information. This is achieved by partitioning the scene into non-overlapping pyramid grids and applying Adaptive Relation Convolution (ARConv) at multiple scales. The network then selectively aggregates the multiscale outputs based on local characteristics, enhancing adaptivity without sacrificing efficiency.
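The selective multiscale aggregation described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: plain mean pooling inside each grid stands in for ARConv, the gate is a single random linear projection rather than a trained layer, and the names `grid_ids`, `grid_mean`, `aggregate_multiscale`, and `gate_weights` are hypothetical.

```python
import numpy as np

def grid_ids(voxel_coords, grid_size):
    """Assign each voxel to a non-overlapping grid cell of the given size."""
    cells = voxel_coords // grid_size
    _, ids = np.unique(cells, axis=0, return_inverse=True)
    return ids.reshape(-1)

def grid_mean(features, ids):
    """Average features inside each grid and broadcast back to the voxels.

    Assumption: mean pooling is only a stand-in for ARConv here.
    """
    n_grids = int(ids.max()) + 1
    sums = np.zeros((n_grids, features.shape[1]))
    np.add.at(sums, ids, features)
    counts = np.bincount(ids, minlength=n_grids)[:, None]
    return (sums / counts)[ids]

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def aggregate_multiscale(voxel_coords, features, grid_sizes, gate_weights):
    """Blend per-scale outputs with per-voxel weights predicted from features."""
    per_scale = np.stack(
        [grid_mean(features, grid_ids(voxel_coords, g)) for g in grid_sizes],
        axis=1,
    )  # shape (N, S, C)
    # Each voxel scores every scale from its own feature vector.
    scale_scores = softmax(features @ gate_weights, axis=1)  # shape (N, S)
    fused = (scale_scores[:, :, None] * per_scale).sum(axis=1)
    return fused, scale_scores

rng = np.random.default_rng(0)
voxel_coords = rng.integers(0, 16, size=(50, 3))
features = rng.normal(size=(50, 8))
grid_sizes = (2, 4, 8)                                   # assumed pyramid scales
gate_weights = rng.normal(size=(8, len(grid_sizes)))     # untrained, illustrative
fused, scale_scores = aggregate_multiscale(
    voxel_coords, features, grid_sizes, gate_weights
)
```

Because the weights over scales are computed per voxel, two voxels in the same scene can lean on different grid sizes, which is the sense in which the receptive field becomes dynamic.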

Moreover, OA-CNNs leverage adaptive relationships facilitated by self-attention maps. The network dynamically generates kernel weights for non-empty voxels based on their correlations with the grid centroid, further enhancing adaptivity. The design is lightweight: its complexity is linear in the number of voxels, so it expands receptive fields while keeping the computational cost low.
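A rough sketch of the centroid-relation idea is shown below. The exact form of ARConv is not given in this article, so the details are assumptions: the grid centroid is taken as the mean feature of the grid's voxels, the correlation is a projected dot product, and the weights are normalized with a softmax inside each grid. The names `segment_softmax`, `adaptive_relation_aggregate`, and `proj` are hypothetical.

```python
import numpy as np

def segment_softmax(scores, ids, n_segments):
    """Softmax over the scores that share the same grid id."""
    seg_max = np.full(n_segments, -np.inf)
    np.maximum.at(seg_max, ids, scores)
    e = np.exp(scores - seg_max[ids])
    seg_sum = np.bincount(ids, weights=e, minlength=n_segments)
    return e / seg_sum[ids]

def adaptive_relation_aggregate(features, ids, proj):
    """Aggregate voxel features per grid with input-dependent weights.

    Assumption: weights come from a dot product between each projected
    voxel feature and its grid centroid; the real ARConv may differ.
    """
    n_grids = int(ids.max()) + 1
    counts = np.bincount(ids, minlength=n_grids)[:, None]
    sums = np.zeros((n_grids, features.shape[1]))
    np.add.at(sums, ids, features)
    centroids = sums / counts                      # one centroid per grid
    # One score per voxel: each voxel is compared only with its own centroid,
    # so the work grows linearly with the number of voxels.
    scores = np.einsum("nc,nc->n", features @ proj, centroids[ids])
    weights = segment_softmax(scores, ids, n_grids)
    out = np.zeros_like(sums)
    np.add.at(out, ids, weights[:, None] * features)
    return out, weights

rng = np.random.default_rng(0)
features = rng.normal(size=(4, 8))
ids = np.array([0, 0, 0, 1])          # three voxels in grid 0, one in grid 1
proj = rng.normal(size=(8, 8))        # untrained, illustrative projection
out, weights = adaptive_relation_aggregate(features, ids, proj)
```

Pairwise self-attention would compare every voxel with every other voxel. Comparing each voxel only with its grid centroid avoids that quadratic cost, which matches the linear complexity claimed above.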

Advantages and Applications of OA-CNNs

The researchers validated OA-CNNs through extensive experiments, reporting better semantic segmentation performance than state-of-the-art methods on popular benchmarks such as ScanNet v2, ScanNet200, nuScenes, and SemanticKITTI. The ability of OA-CNNs to enhance adaptivity without compromising efficiency makes them a promising solution for various practical applications.

One of the key applications of OA-CNNs lies in 3D scene understanding, where they can help machines interpret complex 3D environments. Their enhanced adaptivity supports more accurate segmentation of objects and scenes, which in turn benefits object recognition, scene reconstruction, and augmented reality applications. OA-CNNs can also be applied in fields such as robotics, autonomous vehicles, and virtual reality, where precise 3D scene understanding is crucial for decision-making and interaction with the environment.

Conclusion

OA-CNNs, or Omni-Adaptive Convolutional Neural Networks, have emerged as a family of networks that greatly enhance the adaptivity of sparse CNNs at minimal computational cost. By incorporating dynamic receptive fields and adaptive relation mapping, OA-CNNs bridge the performance gap between sparse CNNs and point transformers in 3D scene understanding tasks. Their ability to capture nuanced information across diverse scenes and their lightweight design make them a promising solution for various practical applications.

The research on OA-CNNs highlights the importance of adaptivity in advancing the capabilities of sparse CNNs. With the continuous evolution of computer vision and the increasing need for accurate 3D scene understanding, OA-CNNs represent a significant step forward. Building on these ideas, we can expect further advances in 3D semantic segmentation, object recognition, and related tasks.

All credit for this research goes to the researchers of this project.
