From Llama 3 to Yi-1.5-34B: Bridging the AI Gap with Superior Performance

Artificial intelligence (AI) has advanced rapidly in recent years, and one recent release is Yi-1.5-34B from 01.AI. This upgraded version of Yi adds continued pretraining on a high-quality corpus of 500 billion tokens and fine-tuning on 3 million diverse samples. In this article, we explore the model’s capabilities and potential impact, along with the training and data work behind it.

Bridging the Gap: From Llama 3 8B to 70B

With 34 billion parameters, Yi-1.5-34B sits between Llama 3 8B and Llama 3 70B in size, and it aims to bridge the gap between them. It is meant to offer much of the capability of larger models at a computational cost closer to that of smaller ones, so it can take on intricate tasks without excessive computational resources.
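To make the size trade-off concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need at different precisions. The parameter counts are the nominal figures from the model names (8, 34, and 70 billion), the precision table is an illustrative assumption, and the estimate ignores activations, the KV cache, and runtime overhead, so real requirements will be higher.

```python
# Nominal parameter counts in billions, taken from the model names.
# Real checkpoints differ slightly from these round numbers.
NOMINAL_PARAMS_B = {
    "Llama 3 8B": 8,
    "Yi-1.5-34B": 34,
    "Llama 3 70B": 70,
}

# Bytes needed to store one parameter at common precisions (assumed set).
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * 1e9 * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, params in NOMINAL_PARAMS_B.items():
        row = ", ".join(
            f"{fmt}: {weight_memory_gb(params, nbytes):.0f} GB"
            for fmt, nbytes in BYTES_PER_PARAM.items()
        )
        print(f"{name:<12} {row}")
```

Under these assumptions, a 34B model lands roughly halfway between the 8B and 70B models in weight memory, which is the sense in which it "bridges the gap".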

Unprecedented Training and Optimization

Yi-1.5-34B builds on its predecessor, Yi-34B, which was already well regarded in the AI community. The main change is its training regimen: the model was further pre-trained on 500 billion high-quality tokens on top of the 3.1 trillion tokens used for the original Yi models, for a total of about 3.6 trillion tokens. This additional training is intended to deepen the model’s grasp of language and improve the quality of its output.

Remarkable Performance and Capabilities

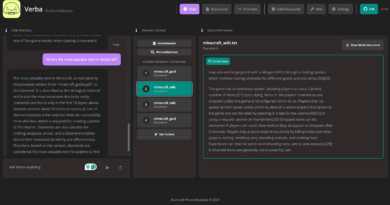

When benchmarked against other models, Yi-1.5-34B performs strongly. Its large vocabulary is said to help it with logical puzzles and with handling complex ideas. The model can also reportedly generate longer code snippets than GPT-4, which points to practical uses in software development. Users who have tried Yi-1.5-34B through demos have praised its speed, efficiency, and versatility across a range of AI-driven tasks.

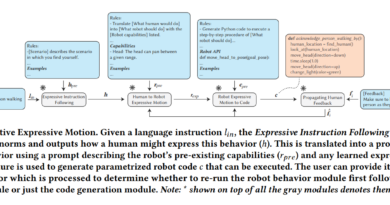

Multimodal Capabilities and Vision-Language Features

The Yi family goes beyond text-only language models by adding multimodal, vision-language variants. These combine a vision transformer encoder with the chat language model and align visual representations with the language model’s semantic space. This integration opens up applications that need both visual and textual understanding.
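The idea of aligning visual features with a language model’s embedding space can be illustrated with a toy sketch. This is not 01.AI’s implementation: the function names, the tiny dimensions, and the hand-picked weights are all assumptions for illustration, and a real system would use a trained vision transformer and a learned projection module rather than a fixed matrix.

```python
def linear(vector, weight, bias):
    """Compute W @ vector + bias, with W given as a list of rows."""
    return [
        sum(w * v for w, v in zip(row, vector)) + b
        for row, b in zip(weight, bias)
    ]


def project_patches(patch_features, weight, bias):
    """Map each vision-encoder patch feature into the text embedding space."""
    return [linear(patch, weight, bias) for patch in patch_features]


def build_multimodal_sequence(patch_features, text_embeddings, weight, bias):
    """Prepend projected image tokens to the text token embeddings."""
    return project_patches(patch_features, weight, bias) + text_embeddings


# Toy sizes: 2-dimensional vision features, 3-dimensional text embeddings.
toy_weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 x 2, hand-picked
toy_bias = [0.0, 0.0, 0.0]
toy_patches = [[2.0, 3.0], [0.5, -0.5]]            # two image patches
toy_text = [[0.1, 0.2, 0.3]]                       # one text token

sequence = build_multimodal_sequence(toy_patches, toy_text, toy_weight, toy_bias)
print(len(sequence), "tokens, each of dimension", len(sequence[0]))
```

Once the image patches are projected to the same dimension as the text embeddings, the language model can attend over both kinds of token in a single sequence.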

Handling Long Contexts with Lightweight Continual Pretraining

Traditional language models often struggle to process long contexts. The Yi models, however, have been extended to handle contexts of up to 200,000 tokens through lightweight continual pretraining. This lets long-context Yi variants work through lengthy documents and conversations in a single pass, which helps on tasks that depend on comprehensive context analysis.
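In practical terms, a larger context window changes whether a document can be sent in one request or has to be split. The sketch below uses the 200,000-token figure from the text and a whitespace split as a hypothetical stand-in for a real tokenizer (actual token counts will differ); the helper names and the output reserve are illustrative assumptions.

```python
CONTEXT_LIMIT = 200_000  # tokens, the figure quoted in the text


def count_tokens(text):
    """Hypothetical stand-in tokenizer: counts whitespace-separated words."""
    return len(text.split())


def fits_in_context(text, limit=CONTEXT_LIMIT, reserve_for_output=1_000):
    """Check whether the prompt plus a reserved output budget fits the window."""
    return count_tokens(text) + reserve_for_output <= limit


def split_into_windows(text, limit, overlap):
    """Split text into overlapping windows of at most `limit` tokens."""
    if overlap >= limit:
        raise ValueError("overlap must be smaller than limit")
    tokens = text.split()
    step = limit - overlap
    windows = []
    for start in range(0, len(tokens), step):
        windows.append(" ".join(tokens[start:start + limit]))
        if start + limit >= len(tokens):
            break  # the last window already reaches the end of the text
    return windows


if __name__ == "__main__":
    document = " ".join(f"word{i}" for i in range(50_000))
    print("fits in a 200K window:", fits_in_context(document))
    print("windows needed at 4K:", len(split_into_windows(document, 4_000, 200)))
```

With a short window the document has to be chunked and the pieces stitched back together; with a window large enough to hold it, that step can be skipped.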

Data Engineering for Optimal Performance

Much of the Yi models’ effectiveness is attributed to careful data engineering. For pretraining, 3.1 trillion tokens of English and Chinese text were selected using a cascaded deduplication and quality filtering pipeline. The aim of this process is to feed the model only high-quality data, which in turn improves performance and reliability.
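A cascaded pipeline of this kind can be sketched as a sequence of stages, each passing a smaller set of documents to the next. The stages, thresholds, and heuristics below are illustrative assumptions rather than the actual Yi pipeline, and the pairwise near-duplicate check is only suitable for small inputs; large-scale pipelines typically rely on more scalable techniques such as MinHash.

```python
import hashlib
import re


def normalize(text):
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return re.sub(r"\s+", " ", text.strip().lower())


def exact_dedup(docs):
    """Stage 1: drop documents whose normalized text was already seen."""
    seen, kept = set(), []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(doc)
    return kept


def shingles(text, n=3):
    """Return the set of n-word shingles of a document."""
    words = normalize(text).split()
    if len(words) < n:
        return {tuple(words)}
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def near_dedup(docs, threshold=0.8):
    """Stage 2: drop documents too similar to one already kept."""
    kept, kept_shingles = [], []
    for doc in docs:
        current = shingles(doc)
        if all(jaccard(current, other) < threshold for other in kept_shingles):
            kept.append(doc)
            kept_shingles.append(current)
    return kept


def passes_quality(doc, min_words=5, max_repeat_ratio=0.5):
    """Stage 3: simple heuristics for length and word repetition."""
    words = normalize(doc).split()
    if len(words) < min_words:
        return False
    most_common = max(words.count(word) for word in set(words))
    return most_common / len(words) <= max_repeat_ratio


def run_pipeline(docs):
    """Run the stages in order, cheapest first."""
    return [doc for doc in near_dedup(exact_dedup(docs)) if passes_quality(doc)]
```

Ordering the stages from cheapest to most expensive means the costlier similarity and quality checks only run on documents that survived the earlier, cheaper ones.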

Fine-Tuning for Precision and Dependability

In addition to pretraining, the Yi models are fine-tuned to improve their usefulness further. For the original Yi chat models, machine learning engineers iteratively refined and validated a small-scale instruction dataset, checking the data directly rather than relying on volume alone. Yi-1.5 scales this stage up to 3 million diverse samples, with the aim of making Yi-1.5-34B precise and dependable for researchers and practitioners alike.

The Future of AI with the Yi-1.5-34B Model

The release of Yi-1.5-34B is a notable step forward in AI. Its combination of strong performance and computational efficiency, together with the multimodal variants in the Yi family, makes it a valuable tool for researchers and practitioners. Its ability to handle complex tasks such as code generation and logical reasoning opens up possibilities for many AI-driven applications.

In conclusion, Yi-1.5-34B raises the bar for models of its size. With continued pretraining, an expanded fine-tuning set, and careful data engineering, it shows how far a mid-sized model can be pushed. As AI continues to evolve, models like Yi-1.5-34B point the way toward more advanced and capable systems.
