Google DeepMind and University of Toronto Researchers’ Breakthrough in Human-Robot Interaction: Utilizing Large Language Models for Generative Expressive Robot Behaviors

In recent years, there has been significant progress in the field of human-robot interaction (HRI). Robots are increasingly becoming a part of our daily lives, assisting us in various tasks and even providing companionship. However, one challenge that researchers and developers face is enabling robots to display human-like expressive behaviors. Traditional methods have limitations in adaptability and scalability, while data-driven approaches are often constrained by the need for specialized datasets.


To address these challenges, researchers at Google DeepMind and the University of Toronto have made a breakthrough in the field of HRI by utilizing large language models (LLMs) for generative expressive robot behaviors. Their approach, known as Generative Expressive Motion (GenEM), leverages the rich social context available from LLMs to create adaptable and composable robot motion.

The Need for Expressive Robot Behaviors

Expressive robot behaviors play a crucial role in human-robot interaction. They enable robots to communicate effectively, convey emotions, and understand and respond to human social cues. Traditionally, rule-based and template-based methods have been used to program robot behaviors. However, these methods often lack expressivity and adaptability, limiting their utility in diverse social contexts.

On the other hand, data-driven approaches, such as machine learning and generative models, have shown promise in generating expressive behaviors. However, these approaches are often data-inefficient and require specialized datasets, which are time-consuming and costly to create. This limitation becomes more pronounced as the variety of social interactions a robot might encounter grows, which calls for more adaptable and context-sensitive ways of programming robot behavior.

Leveraging Large Language Models for Generative Expressive Behaviors

The GenEM approach proposed by Google DeepMind and the University of Toronto addresses the limitations of traditional methods by harnessing the power of large language models. LLMs have demonstrated their efficacy in various natural language processing tasks, and now their potential in robotics is being explored.

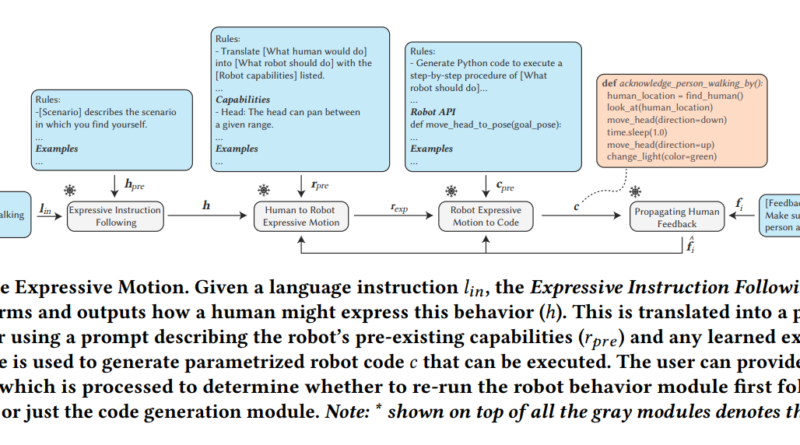

The researchers propose leveraging the rich social context available from LLMs to generate expressive behaviors for robots. The GenEM approach uses a few-shot chain-of-thought prompting methodology to translate human language instructions into parameterized control code using the robot’s available and learned skills. This enables the robot to generate adaptable and composable behaviors.
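As a rough illustration of what a few-shot chain-of-thought prompt of this kind could look like, the Python sketch below assembles one as plain text. It is not the researchers' prompt: the `FewShotExample` structure, the `build_prompt` function, and the skill names are all hypothetical, chosen only to show how worked examples (instruction, reasoning, code) can precede a new instruction.

```python
from dataclasses import dataclass


@dataclass
class FewShotExample:
    """One worked example shown to the model (hypothetical structure)."""
    instruction: str  # human language instruction
    reasoning: str    # chain-of-thought: how a person might express this
    code: str         # parameterized control code built from robot skills


def build_prompt(instruction: str, skills: list[str],
                 examples: list[FewShotExample]) -> str:
    """Assemble a few-shot chain-of-thought prompt as plain text."""
    parts = ["Available robot skills:"]
    parts += [f"- {skill}" for skill in skills]
    for ex in examples:
        parts += ["", f"Instruction: {ex.instruction}",
                  f"Reasoning: {ex.reasoning}", "Code:", ex.code]
    # The new instruction ends with an open "Reasoning:" slot so the model
    # is nudged to reason about the social context before writing code.
    parts += ["", f"Instruction: {instruction}", "Reasoning:"]
    return "\n".join(parts)


example = FewShotExample(
    instruction="Greet the person",
    reasoning="A person would nod once to acknowledge someone.",
    code="nod_head(times=1)",
)
prompt = build_prompt("Show that you are listening",
                      ["nod_head(times)", "set_light(color)"], [example])
print(prompt)
```

The resulting string would then be sent to whichever language model is in use; that call is deliberately left out because it depends on the provider.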

The Process of Generating Expressive Behaviors

The process of generating expressive behaviors using the GenEM approach involves multiple steps. It starts with user instructions, which are then translated into parameterized control code using the language model. The generated code is then executed by the robot, resulting in expressive behaviors.
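The three steps (instruction, generated control code, execution) can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the GenEM implementation: the language model is replaced by a stub (`fake_llm`) that returns fixed code, the skills `nod_head` and `set_light` are hypothetical, and the generated code is restricted to simple keyword-argument calls so that it can be checked against a skill registry instead of being run blindly.

```python
import ast


def nod_head(times=1):
    """Hypothetical skill: returns a description instead of moving a robot."""
    return f"nod x{times}"


def set_light(color="white"):
    """Hypothetical skill: returns a description instead of driving LEDs."""
    return f"light {color}"


SKILLS = {"nod_head": nod_head, "set_light": set_light}


def fake_llm(instruction):
    """Stand-in for a language model call; always returns the same code."""
    return "nod_head(times=2)\nset_light(color='green')"


def run_control_code(code, skills):
    """Run lines of the form `skill(key=value)` against a skill registry."""
    results = []
    for stmt in ast.parse(code).body:
        is_call = (isinstance(stmt, ast.Expr)
                   and isinstance(stmt.value, ast.Call)
                   and isinstance(stmt.value.func, ast.Name))
        if not is_call or stmt.value.args:
            raise ValueError("only keyword-argument skill calls are allowed")
        name = stmt.value.func.id
        if name not in skills:
            raise ValueError(f"unknown skill: {name}")
        # literal_eval accepts only literals, so arguments cannot run code.
        kwargs = {kw.arg: ast.literal_eval(kw.value)
                  for kw in stmt.value.keywords}
        results.append(skills[name](**kwargs))
    return results


def express(instruction, llm=fake_llm):
    """Instruction -> generated control code -> executed skills."""
    return run_control_code(llm(instruction), SKILLS)


print(express("Acknowledge the person"))  # ['nod x2', 'light green']
```

Validating the generated code against a fixed set of skills is one possible design choice here; it keeps the robot limited to behaviors it is known to support.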

To evaluate the approach, the researchers conducted two user studies that compared behaviors generated by GenEM with those created by a professional animator. Participants found the GenEM behaviors competent and easy to understand, which suggests that the approach can offer more expressivity and adaptability than hand-crafted rule-based or template-based behaviors.

User Feedback and Improvements

In addition to the initial evaluation, user feedback was collected to further refine the robot’s behaviors. The feedback was used to update the robot’s policy parameters and generate new expressive behaviors by composing existing ones. This iterative process allowed continuous improvement and refinement of the robot’s behavior.
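One way to picture this feedback loop is to treat a behavior as a list of parameterized steps, so that feedback changes parameters and new behaviors are built by joining existing ones. The Python sketch below assumes exactly that representation; `Step`, `adjust`, and `compose` are hypothetical names and are not taken from the research.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    """One parameterized skill call within a behavior (hypothetical)."""
    skill: str
    params: dict


def adjust(behavior, skill, **updates):
    """Return a new behavior with updated parameters for one skill."""
    return [Step(s.skill, {**s.params, **updates}) if s.skill == skill else s
            for s in behavior]


def compose(*behaviors):
    """Build a new behavior by running existing behaviors in sequence."""
    return [step for behavior in behaviors for step in behavior]


greet = [Step("nod_head", {"times": 1})]
attend = [Step("set_light", {"color": "green"})]

# Feedback such as "nod more" becomes a parameter update on an existing step.
greet_v2 = adjust(greet, "nod_head", times=3)

# A new behavior is composed from the refined greeting and an existing one.
greet_and_attend = compose(greet_v2, attend)
print(greet_and_attend)
```

In a full system the mapping from free-form feedback to a parameter update would itself come from the language model; here it is written by hand to keep the sketch self-contained.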

Effectiveness and Applications

The effectiveness of the GenEM approach was demonstrated through experiments using a mobile robot and a simulated quadruped. The results showed that the approach performed better than a version where language instructions were directly translated into code. Furthermore, the approach produced behaviors that are composable and agnostic to the robot's embodiment, providing even greater flexibility and adaptability [2].

The breakthrough in utilizing large language models for generative expressive robot behaviors has the potential to revolutionize human-robot interaction. With the ability to generate more human-like behaviors, robots can better understand and respond to human needs and emotions. This opens up new possibilities for applications in various fields, including healthcare, education, and entertainment.

Conclusion

The research conducted by Google DeepMind and the University of Toronto represents a significant advancement in the field of human-robot interaction. By leveraging large language models, the GenEM approach generates expressive, adaptable, and composable robot behaviors autonomously. This breakthrough has the potential to enhance the effectiveness of human-robot interactions, paving the way for robots that can seamlessly integrate into our daily lives and assist us more naturally and intuitively.

The potential of large language models in robotics is just beginning to be explored. As further research and development take place, we can expect even more sophisticated and human-like behaviors from robots, enabling them to become valuable companions and assistants in various domains.
