OGEN: A Novel AI Approach for Boosting Out-of-Domain Generalization in Vision-Language Models

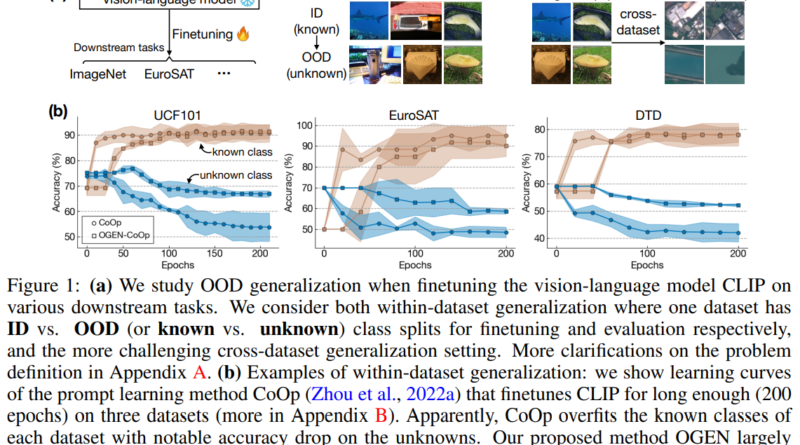

In recent years, large-scale pre-trained vision-language models have shown remarkable generalizability across diverse visual domains and real-world tasks. Models such as CLIP (Contrastive Language-Image Pre-training) embed images and text in a shared feature space, which makes them powerful tools for various applications. However, while these models perform well on in-distribution (ID) data, they often struggle with out-of-distribution (OOD) samples from novel classes, which limits their overall generalization.


To address this challenge, a recent AI paper from Nanyang Technological University (NTU) and Apple introduces a novel approach called OGEN (Out-of-Domain Generalization). This approach aims to boost the out-of-domain generalization of vision-language models by combining image feature synthesis for unknown classes and an unknown-aware finetuning algorithm with effective model regularization.

The Challenge of Out-of-Domain Generalization

Out-of-domain generalization refers to a model’s ability to perform well on samples drawn from outside the distribution of its training data. For vision-language models, this means making accurate predictions for classes or visual domains that were not seen during finetuning, rather than merely for held-out examples of familiar classes. While existing models like CLIP generalize impressively within the known classes, they face challenges when confronted with novel or unknown classes.

The paper highlights the limitations of existing methods for enhancing zero-shot OOD detection, which include softmax scaling and the incorporation of extra text generators. While these methods show promise, they are not sufficient for achieving robust out-of-domain generalization. The authors identify overfitting during finetuning as a major issue, which leads to poor generalization on unknown classes.

Introducing OGEN: A Novel Approach

OGEN addresses the challenge of out-of-domain generalization by combining feature synthesis with model regularization. The goal is to improve the model’s handling of unknown classes and, with it, generalization performance on both in-distribution and out-of-distribution samples.

Class-Conditional Feature Generator

To tackle the challenge of effective model regularization, the paper proposes a class-conditional feature generator. This generator synthesizes image features for unknown classes based on the well-aligned image-text feature spaces of CLIP. By leveraging the known classes and their similarities to unknown classes, the generator produces synthetic features that generalize well to “unknown unknowns” – complex visual class distributions in the open domain.

The proposed method incorporates a Multi-Head Cross-Attention (MHCA) mechanism to effectively capture similarities between the unknown class and each known class. This mechanism helps in generating features for unknown classes by extrapolating from similar known classes. By reframing the feature synthesis problem and introducing an “extrapolating bias,” the authors offer an innovative solution to the feature synthesis challenge.
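The idea of extrapolating from similar known classes can be illustrated with a small sketch. This is not the authors’ implementation: it is a single-head simplification of cross-attention with no learned projections, and it assumes that the query is the text embedding of the unknown class, the keys are text embeddings of known classes, and the values are image features of those known classes. The function name `synthesize_feature` and the `temperature` parameter are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def synthesize_feature(unknown_text, known_texts, known_images, temperature=0.1):
    """Attention-weighted mix of known-class image features (illustrative only).

    unknown_text: text embedding of the unknown class (query, assumed)
    known_texts:  text embeddings of known classes (keys, assumed)
    known_images: image features of known classes (values, assumed)
    """
    # Similarity between the unknown class and each known class.
    scores = [dot(unknown_text, k) / temperature for k in known_texts]
    weights = softmax(scores)
    # Weighted sum of known-class image features, one dimension at a time.
    dim = len(known_images[0])
    feature = [
        sum(w * v[d] for w, v in zip(weights, known_images)) for d in range(dim)
    ]
    return feature, weights


# Toy example: the unknown class is textually closest to the first known class.
known_texts = [[1.0, 0.0], [0.0, 1.0]]
known_images = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
feature, weights = synthesize_feature([0.9, 0.1], known_texts, known_images)
print(weights, feature)
```

In this toy setting the synthesized feature is dominated by the image feature of the most similar known class. A full multi-head version would add learned query, key, and value projections per head.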

Unknown-Aware Finetuning Algorithm

In addition to feature synthesis, the paper introduces an unknown-aware finetuning algorithm to address the issue of overfitting during finetuning. This algorithm leverages both in-distribution and synthesized out-of-distribution data for joint optimization. The aim is to establish a better-regularized decision boundary that preserves in-distribution performance while enhancing out-of-domain generalization.
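A minimal sketch of what joint optimization over real and synthesized data might look like is given below. It assumes, for illustration, that the classifier produces logits over a combined label space of known and unknown classes and that the two loss terms are simply added with a weight; the names `joint_loss` and `ood_weight` are hypothetical and the paper’s exact objective may differ.

```python
import math


def cross_entropy(logits, label):
    """Cross-entropy of one sample, computed with a stable log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def joint_loss(id_batch, ood_batch, ood_weight=1.0):
    """Combine the loss on real ID samples with the loss on synthesized OOD samples.

    Each batch is a list of (logits, label) pairs, where logits cover the
    combined known + unknown label space (an assumption of this sketch).
    """
    id_loss = sum(cross_entropy(z, y) for z, y in id_batch) / len(id_batch)
    ood_loss = sum(cross_entropy(z, y) for z, y in ood_batch) / len(ood_batch)
    return id_loss + ood_weight * ood_loss


# Toy example: 3 known classes (labels 0-2) plus 1 unknown class (label 3).
id_batch = [([2.0, 0.5, 0.1, 0.0], 0), ([0.2, 1.5, 0.3, 0.1], 1)]
ood_batch = [([0.1, 0.2, 0.1, 1.0], 3)]
print(joint_loss(id_batch, ood_batch, ood_weight=0.5))
```

Because the synthesized unknown-class features contribute to the same objective, the decision boundary is shaped by both kinds of data rather than by the known classes alone.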

To further reduce overfitting, the proposed approach incorporates an adaptive self-distillation mechanism. This mechanism utilizes teacher models from historical training epochs to guide optimization in the current epoch, ensuring consistency between the predictions induced by the teacher and student models.
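The self-distillation idea can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper’s mechanism: the teacher is taken to be a plain average of parameter snapshots from a fixed window of recent epochs (the adaptive part is omitted), and consistency is measured as mean squared error between teacher and student logits. The names `average_snapshots`, `consistency_loss`, and `window` are hypothetical.

```python
def average_snapshots(snapshots, window):
    """Build teacher parameters by averaging the last `window` epoch snapshots.

    snapshots: list of flat parameter lists, one per past epoch (oldest first).
    """
    recent = snapshots[-window:]
    n = len(recent)
    return [sum(p[i] for p in recent) / n for i in range(len(recent[0]))]


def consistency_loss(student_logits, teacher_logits):
    """Mean squared difference between student and teacher predictions."""
    diffs = [(s - t) ** 2 for s, t in zip(student_logits, teacher_logits)]
    return sum(diffs) / len(diffs)


# Toy example: three past epochs of a two-parameter model, window of two.
snapshots = [[0.0, 0.0], [2.0, 4.0], [4.0, 8.0]]
teacher_params = average_snapshots(snapshots, window=2)
print(teacher_params)

# Penalty added to the training loss when the student drifts from the teacher.
print(consistency_loss([1.0, 3.0], [0.0, 1.0]))
```

Averaging over past epochs makes the teacher change more slowly than the student, so the consistency term discourages abrupt shifts toward the known classes late in finetuning.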

Evaluating OGEN’s Performance

The paper evaluates the OGEN approach across different finetuning methods for CLIP-like models. The results consistently demonstrate improved out-of-domain generalization performance under two challenging settings: within-dataset (base-to-new class) generalization and cross-dataset generalization.

In the within-dataset generalization setting, OGEN enhances the accuracy of new classes without compromising the accuracy of base classes. This showcases its ability to strike a favorable trade-off between in-distribution and out-of-distribution performance. Comparative analysis with state-of-the-art methods further validates the consistent improvement achieved by OGEN.

Cross-dataset generalization experiments demonstrate the universality of OGEN’s approach. It consistently improves generalization performance across different target datasets, with significant gains observed on datasets with notable distribution shifts from the ImageNet dataset.

Conclusion and Future Work

In conclusion, the paper from NTU and Apple introduces OGEN, an approach for boosting out-of-domain generalization in vision-language models. By combining feature synthesis for unknown classes with an unknown-aware finetuning algorithm that regularizes the model, OGEN improves performance in both the base-to-new class and cross-dataset settings.

Future work includes extending the evaluation of OGEN to other finetuning methods and exploring its effectiveness in modeling uncertainties on unseen data. The potential of OGEN to enhance the generalization capabilities of vision-language models opens up new avenues for research and practical applications in various domains, from image captioning to question-answering systems.

With the introduction of OGEN, the field of vision-language models takes a significant step towards overcoming the challenges of out-of-domain generalization. This novel approach has the potential to enhance the safety, reliability, and performance of AI systems in real-world applications.
