The Platonic Representation Hypothesis: AI’s Unified Model of Reality

Artificial Intelligence (AI) has advanced significantly in recent years, with models becoming increasingly powerful and versatile. One intriguing trend is that different AI models appear to be converging on a unified representation of reality. The “Platonic Representation Hypothesis” gives this trend a name: it suggests that, as AI systems grow more capable, their internal representations converge toward a shared statistical model of the underlying reality that generates the observable data.

The Evolution of AI Models

In the early days of AI, systems were designed to address specific tasks, each requiring specialized solutions. For example, sentiment analysis, parsing, and dialogue generation each had their own dedicated algorithms. However, modern AI models, such as large language models (LLMs), have showcased remarkable versatility. These models can proficiently handle multiple language processing tasks using a single set of weights.

This trend extends beyond language processing, with unified systems emerging across different data modalities. AI models can now process images and text together, combining vision and language architectures within a single system.

The Platonic Representation Hypothesis

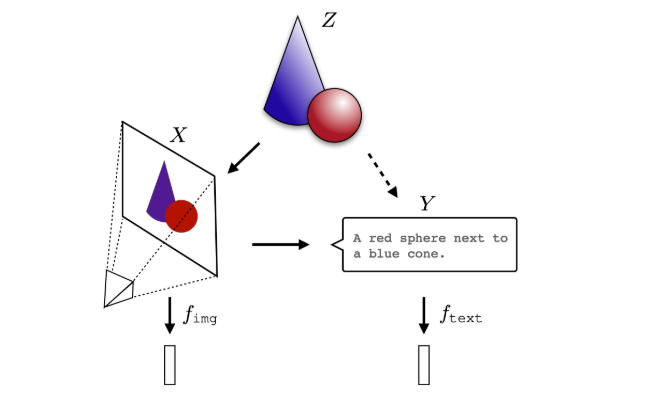

The Platonic Representation Hypothesis posits that representations in deep neural networks, commonly used in AI models, are converging towards a common representation of reality. This convergence is evident across various model architectures, training objectives, and data modalities. The central idea is that there exists an ideal reality that underlies our observations, and AI models are striving to capture a statistical representation of this reality through their learned representations.

Multiple studies have provided evidence supporting this hypothesis. In model stitching, the early layers of one model are connected to the later layers of another through a simple learned mapping. Such experiments have shown that representations learned by models trained on distinct datasets can be aligned and interchanged, which indicates a shared representation. This convergence also extends across modalities: recent language-vision models have achieved state-of-the-art performance by combining pre-trained language and vision models.
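To make the stitching idea concrete, here is a minimal sketch using NumPy. Everything in it is a toy assumption: the two "models" are random matrices rather than trained networks, the names `front_a`, `front_b`, `back_b`, and `S` are hypothetical, and the stitching layer is fit by ordinary least squares. The toy is also built so that an exact stitch exists, which real networks do not guarantee.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two hypothetical toy "models" that read the same 6-dimensional input.
# Each has a linear front end (6 -> 6 hidden units); model B also has a
# nonlinear back end that maps hidden units to 3 outputs.
W_a = rng.normal(size=(6, 6))   # front end of model A
W_b = rng.normal(size=(6, 6))   # front end of model B
V_b = rng.normal(size=(6, 3))   # back end of model B

def front_a(x):
    return x @ W_a

def front_b(x):
    return x @ W_b

def back_b(h):
    return np.tanh(h @ V_b)

# Fit the stitching layer S so that front_a(x) @ S approximates front_b(x).
x_train = rng.normal(size=(200, 6))
S, *_ = np.linalg.lstsq(front_a(x_train), front_b(x_train), rcond=None)

# Evaluate on held-out inputs: A's front end + stitch + B's back end
# should reproduce model B if the two hidden spaces are compatible.
x_test = rng.normal(size=(50, 6))
y_b = back_b(front_b(x_test))
y_stitched = back_b(front_a(x_test) @ S)
stitch_error = float(np.max(np.abs(y_stitched - y_b)))
print(f"max |stitched - original| = {stitch_error:.2e}")
```

In practice the stitching layer is trained on real activations, and the quantity of interest is how much task performance the stitched model retains, not an exact match.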

Factors Driving Convergence

Several factors contribute to the observed convergence in representations:

1. Task Generality

As AI models are trained on more diverse tasks and datasets, each task adds a constraint that a representation must satisfy, so the set of representations that work for all of them becomes smaller. This reduction in the solution space drives convergence towards a shared representation of reality.

2. Model Capacity

Larger AI models with increased capacity are better equipped to approximate the globally optimal representation. This increased capacity drives convergence across different model architectures.

3. Simplicity Bias

Deep neural networks have an inherent bias towards finding simple solutions that fit the training data. As the capacity of AI models increases, this simplicity bias favors convergence towards a shared, simple representation of reality.
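The task-generality argument above can be illustrated with a toy enumeration. The setup is entirely hypothetical: a "representation" is modeled as the subset of six underlying factors it encodes, a "task" as the set of factors it requires, and a representation counts as competent for a task when it encodes every required factor. The sketch only shows how the number of surviving candidates shrinks as tasks accumulate; it is not a model of real networks.

```python
from itertools import combinations

N_FACTORS = 6  # hypothetical number of underlying factors of the world

# Every candidate representation is the subset of factors it encodes (2**6 = 64).
candidates = [
    frozenset(subset)
    for size in range(N_FACTORS + 1)
    for subset in combinations(range(N_FACTORS), size)
]

# Hypothetical tasks, each listing the factors needed to solve it.
tasks = [{0}, {1, 2}, {3}, {2, 4}, {5}]

def competent(representation, task):
    """A representation solves a task if it encodes all required factors."""
    return task <= representation

# Count the representations that solve the first n tasks, for n = 0..5.
counts = []
for n in range(len(tasks) + 1):
    survivors = [
        rep for rep in candidates
        if all(competent(rep, task) for task in tasks[:n])
    ]
    counts.append(len(survivors))

print(counts)  # [64, 32, 8, 4, 2, 1]
```

With no tasks, all 64 candidates are acceptable; once all five tasks must be solved, only the representation that encodes every factor remains.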

Convergence in Representations

Researchers have observed that as AI models become larger and more competent across tasks, their representations become more aligned. This alignment extends across modalities: language models trained solely on text exhibit visual knowledge, and their representations align with those of vision models up to a linear transformation.
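One way to put a number on "alignment" is to ask whether two models place the same items near each other. The sketch below uses a mutual nearest-neighbor overlap score; that metric choice, the function names, and the synthetic features are all assumptions made for illustration. Note that the score is preserved by distance-preserving changes of basis, which are only a special case of the linear transformations mentioned above.

```python
import numpy as np

def knn_indices(features, k):
    """For each row, return the indices of its k nearest rows (Euclidean), excluding itself."""
    diffs = features[:, None, :] - features[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)
    np.fill_diagonal(dists, np.inf)  # a point is not its own neighbor
    return np.argsort(dists, axis=1)[:, :k]

def mutual_knn_alignment(feats_a, feats_b, k=10):
    """Average fraction of k-nearest neighbors shared by two representations."""
    nn_a = knn_indices(feats_a, k)
    nn_b = knn_indices(feats_b, k)
    overlaps = [len(set(a) & set(b)) / k for a, b in zip(nn_a, nn_b)]
    return float(np.mean(overlaps))

rng = np.random.default_rng(0)
n_items = 200

# Hypothetical "model A" features for 200 shared inputs.
feats_a = rng.normal(size=(n_items, 16))

# "Model B" encodes the same geometry in a different, 32-dimensional basis.
q, _ = np.linalg.qr(rng.normal(size=(32, 16)))  # q has orthonormal columns
feats_b = feats_a @ q.T

# "Model C" is an unrelated representation of the same inputs.
feats_c = rng.normal(size=(n_items, 32))

aligned_score = mutual_knn_alignment(feats_a, feats_b)
unrelated_score = mutual_knn_alignment(feats_a, feats_c)
print(f"A vs B: {aligned_score:.2f}, A vs C: {unrelated_score:.2f}")
```

A score near 1 means the two models agree on which items are neighbors, while unrelated representations score near the chance level of roughly k divided by the number of items.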

This convergence in representations has profound implications. AI models that accurately capture a unified representation of reality can potentially reduce hallucination and bias. By scaling models in terms of parameters and incorporating diverse datasets, researchers aim to improve the fidelity of representations.

Formalizing the Concept

To formalize the Platonic Representation Hypothesis, researchers consider an idealized world consisting of discrete events sampled from an unknown distribution. Certain contrastive learners can recover a representation whose kernel corresponds to the pointwise mutual information function over these underlying events. This recovery suggests convergence towards a statistical model of reality.
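For readers unfamiliar with pointwise mutual information (PMI), the following sketch computes it for a tiny set of discrete events. The co-occurrence counts are made up for illustration, and the code uses only the standard definition PMI(a, b) = log P(a, b) / (P(a) P(b)). It does not train a contrastive learner; it only shows the kernel that such a learner is said to recover.

```python
import numpy as np

# Hypothetical symmetric co-occurrence counts for three discrete events.
counts = np.array([
    [40.0, 8.0, 2.0],
    [8.0, 20.0, 2.0],
    [2.0, 2.0, 16.0],
])

joint = counts / counts.sum()   # P(a, b)
marginal = joint.sum(axis=1)    # P(a); equals P(b) because counts are symmetric

# PMI is positive when two events co-occur more often than chance
# would predict, and negative when they co-occur less often.
pmi = np.log(joint / np.outer(marginal, marginal))
print(np.round(pmi, 2))
```

Under the hypothesis, a learned representation would place two events close together when their PMI is high, so the similarity structure of the representation mirrors the co-occurrence statistics of the world.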

Implications and Limitations

The Platonic Representation Hypothesis holds several intriguing implications for the future of AI:

1. Improved Representations

Scaling AI models in terms of parameters and incorporating diverse datasets can lead to more accurate representations of reality. This advancement has the potential to reduce hallucination and bias in AI systems.

2. Shared Knowledge Across Modalities

By training models on data from different modalities, researchers can improve representations across domains. Sharing knowledge between vision and language models, for example, can enhance their performance and understanding.

However, the hypothesis also faces limitations. Different modalities may contain unique information that cannot be fully captured by a shared representation. While convergence has been observed in vision and language domains, other domains, such as robotics, still lack standardization in representing world states.

Conclusion

The pursuit of the Platonic Representation is an intriguing aspect of AI’s quest for a unified model of reality. The hypothesis holds that, as AI models continue to scale and incorporate diverse data, their representations converge towards a shared statistical model of the underlying reality that generates our observations. While this hypothesis faces challenges and limitations, it offers valuable insights into the pursuit of artificial general intelligence and the quest to develop AI systems that effectively reason about and interact with the world around us.

Remember, AI’s quest for a unified model of reality is an ongoing process, but the advancements made towards this pursuit are remarkable. As researchers continue to explore and refine AI models, we move closer to capturing a deeper understanding of the complex realities that shape our world.

Check out the Paper. All credit for this research goes to the researchers of this project.