Harnessing the Power of Abstract Syntax Trees (ASTs) to Revolutionize Code-Centric Language Models

The field of language models has witnessed remarkable advancements in recent years, with significant progress made in code generation and comprehension. Language models trained on vast code repositories, such as GitHub, have demonstrated exceptional performance in tasks like text-to-code conversion, code-to-code transpilation, and understanding complex programming constructs. However, these models often overlook the structural aspects of code and treat it merely as sequences of subword tokens. Recent research suggests that incorporating Abstract Syntax Trees (ASTs) into code-centric language models can greatly enhance their performance.

Researchers from UC Berkeley and Meta AI have presented a novel pretraining paradigm called AST-T5 [1]. AST-T5 leverages the power of ASTs to boost the performance of code-centric language models by enhancing code generation, transpilation, and comprehension. This innovative approach utilizes dynamic programming techniques to maintain the structural integrity of code and equips the model with the ability to reconstruct diverse code structures.

The Significance of ASTs in Code Understanding and Generation

ASTs play a vital role in code understanding and generation. These tree-like structures represent the hierarchical relationships between different syntactic constructs of code, providing a higher-level abstraction of its structure. By incorporating ASTs into language models, researchers aim to enable these models to better understand the semantics and structure of code, leading to improved performance in various code-related tasks.

🔥Explore 3500+ AI Tools and 2000+ GPTs at AI Toolhouse

Traditional language models, such as those based on the Transformer architecture, have revolutionized natural language processing (NLP) tasks. However, when it comes to code, these models face challenges due to the complex and hierarchical nature of programming languages. AST-T5 addresses these challenges by leveraging ASTs during pretraining and fine-tuning, enhancing code-centric language models’ capabilities.

Introducing AST-T5: A Breakthrough Pretraining Paradigm

AST-T5 introduces a new pretraining paradigm that harnesses the power of ASTs to boost the performance of code-centric language models [2]. This paradigm builds upon the T5 model architecture, a widely used transformer-based model in the field of natural language processing. By incorporating ASTs into T5, AST-T5 achieves remarkable improvements in code-related tasks.

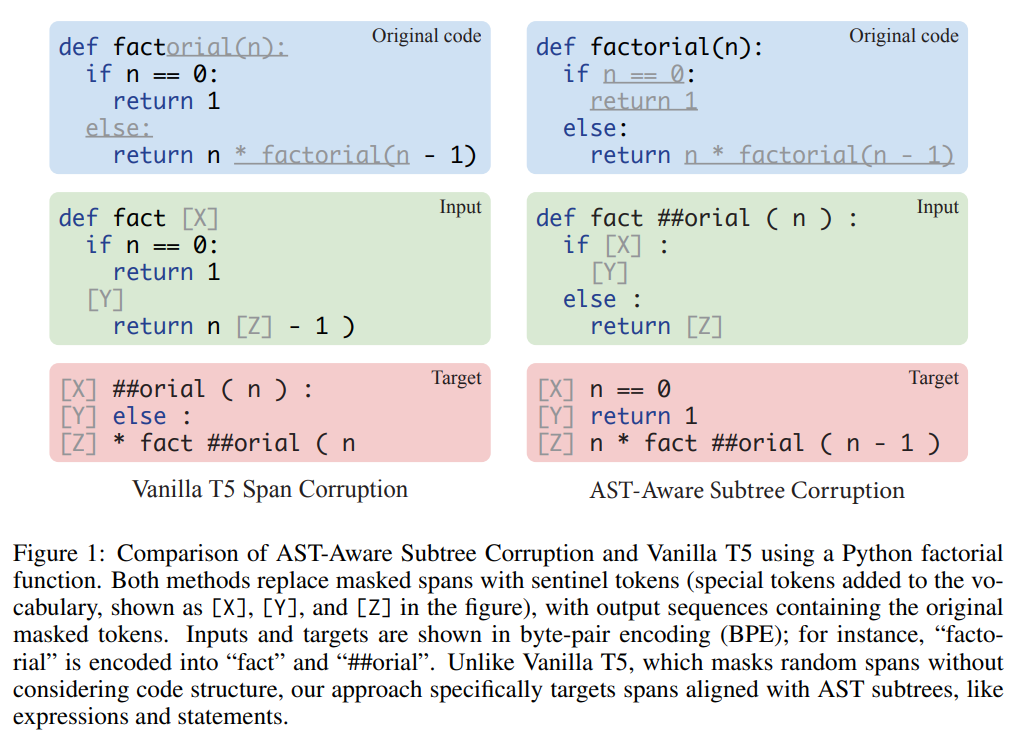

The key innovation of AST-T5 lies in its AST-Aware Segmentation algorithm. This algorithm addresses the token limits imposed by the transformer architecture while preserving the semantic coherence of code. AST-Aware Segmentation dynamically partitions the code into meaningful segments, ensuring that the model captures the relationships between different code constructs accurately.

AST-T5 also employs AST-Aware Span Corruption, a masking technique that allows the model to reconstruct code structures ranging from individual tokens to entire function bodies. This technique enhances the model’s flexibility and structure-awareness, enabling it to generate code with greater accuracy and coherence.

Evaluating the Efficacy of AST-T5

To evaluate the efficacy of AST-T5, controlled experiments were conducted, comparing its performance against T5 baselines with identical transformer architectures, pretraining data, and computational settings. The results consistently demonstrated the superiority of AST-T5 across various code-related tasks.

In code-to-code tasks, AST-T5 outperformed similar-sized language models, surpassing CodeT5 by 2 points in the exact match score for the Bugs2Fix task and by 3 points in the precise match score for Java-C# Transpilation in CodeXGLUE [1]. These results highlight the effectiveness of AST-T5’s structure awareness, achieved through leveraging the AST of code, in enhancing code generation, transpilation, and understanding.

The Future of AST-T5 and Code-Centric Language Models

AST-T5 represents a significant breakthrough in code-centric language models, demonstrating the power of incorporating ASTs in enhancing code understanding and generation. The simplicity and adaptability of AST-T5 make it a potential drop-in replacement for any encoder-decoder language model, paving the way for real-world deployments.

Future research may explore the scalability of AST-T5 by training larger models on more expansive code datasets and evaluating their performance on the entire sanitized subset, eliminating the need for few-shot prompts. Additionally, further investigations into using ASTs in fine-tuning and transfer learning scenarios may uncover additional opportunities for leveraging code structure to enhance language models’ capabilities.

In conclusion, AST-T5’s novel pretraining paradigm harnesses the power of ASTs to revolutionize code-centric language models. By integrating ASTs into the model’s architecture, AST-T5 achieves remarkable improvements in code-related tasks, surpassing existing state-of-the-art models. The incorporation of ASTs enables the models to understand the structural aspects of code, leading to more accurate code generation, transpilation, and comprehension. As the field progresses, AST-T5 and similar advancements hold the potential to reshape the way we interact with and utilize programming languages.

Check out the Paper and Github. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on LinkedIn. Do join our active AI community on Discord.

If you like our work, you will love our Newsletter 📰